Working with the COS Line Spread Function (LSF)¶

Learning Goals¶

This Notebook is designed to walk the user (you) through: Working with the COS Line Spread Function (LSF) to simulate, fit, or model COS observations.

1. Understanding the Line Spread Function

- 1.1. What is an LSF?

- 1.2. Getting the files you need

2. Taking a look at the LSF kernels

- 2.1. Reading in an LSF

- 2.2. Plotting an LSF kernel

- 3.1. Defining some functions for LSF convolution

3.1.1

get_disp_params: Getting the dispersion relationship parameters3.1.2

redefine_lsf: Redefining the LSF in terms of wavelength

0. Introduction¶

The Cosmic Origins Spectrograph (COS) is an ultraviolet spectrograph on-board the Hubble Space Telescope(HST) with capabilities in the near ultraviolet (NUV) and far ultraviolet (FUV).

This tutorial aims to prepare you to work with the COS data of your choice by walking you through convolving a template or high-resolution spectrum with the COS LSF - both for FUV and NUV data. This is an important step for many spectral applications, notably fitting spectral lines.

- For an in-depth manual to working with COS data and a discussion of caveats and user tips, see the COS Data Handbook.

- For a detailed overview of the COS instrument, see the COS Instrument Handbook.

We will import the following packages:¶

numpyto handle arrays and functionsastropy.io fitsandastropy.table Tablefor accessing FITS filesastropy.modeling functional_modelsto generate synthetic spectral line shapesastropy.convolution convolvefor a convolution of two discretized functionsastroquery.mast MastandObservationsfor finding and downloading data from the MAST archivescipy.interpolate interp1dfor interpolating two discretized functions to the same samplingmatplotlib.pyplotfor plottingurllibfor downloading files stored at a web address onlinetarfilefor unzipping some compressed STIS datapathlib Pathfor managing system paths

If you have an existing astroconda environment, it may or may not already have the necessary packages to run this Notebook. To create a Python environment capable of running all the data analyses in these COS Notebooks, please see Section 1 of our Notebook tutorial on setting up an environment.

# Import for: array manipulation

import numpy as np

# Import for: reading fits files

from astropy.table import Table

from astropy.io import fits

# Import for: line profile functions

from astropy.modeling import functional_models

# Import for: convolutions

from astropy.convolution import convolve

# Import for: downloading the data

from astroquery.mast import Observations

#Import for: interpolating a discretized function

from scipy.interpolate import interp1d

#Import for: plotting

from matplotlib import pyplot as plt

#Import for: downloading COS' LSF files within python

import urllib

#Import for: unzipping a compressed file of STIS data

import tarfile

#Import for: working with system paths

from pathlib import Path

We will also define a few directories we will need:¶

# These will be important directories for the Notebook

cwd = Path(".")

datadir = cwd / "data"

outputdir = cwd / "output"

plotsdir = cwd / "output" / "plots"

# Make the directories if they don't exist

datadir.mkdir(exist_ok=True), outputdir.mkdir(exist_ok=True), plotsdir.mkdir(exist_ok=True)

And we will need to download the data we wish to filter and analyze¶

We choose the exposures with the association obs_id: LCRS51010, because we know there is also STIS data on the source, AV75. For more information on downloading COS data, see our notebook tutorial on downloading COS data.

# Search for obs_id of files, generate data product list

pl = Observations.get_product_list(

Observations.query_criteria(obs_id="LCRS51010")

)

download = Observations.download_products(

pl[pl["productSubGroupDescription"] == "X1DSUM"], # filter and download searched files

download_dir=str(datadir),

)

# Give the program the path to your downloaded data

fuvFile = download["Local Path"][0]

1. Understanding the Line Spread Function¶

Each COS observation is taken with a specific grating and a specific central wavelength setting (cenwave). Each such configuration (grating and cenwave) and - thus each COS dataset - has a set of corresponding Line Spread Functions (LSFs).

1.1. What is an LSF?¶

A Line Spread Function (LSF) is a model of a spectrograph's response to a monochromatic light source: it explains how an infinitely thin spectral line (a delta function) input into the spectrograph would be output at the focal plane. A spectrum with infinite spectral resolution convolved with the COS LSF will reproduce the COS spectral line profiles:

$$True\ Input\ Spectrum \ast COS\ LSF = True\ Output\ Spectrum$$Of course, we don't have access to a "true", infinite resolution input spectrum, nor can we know the infinite resolution LSF. The best we can do is use a model spectrum, or a spectrum from a higher resolution spectrograph (STIS often works well) and convolve it with a kernel of our LSF model. Convolving these yields our model output spectrum:

$$Model\ Input\ Spectrum \ast COS\ LSF\ Model = Model\ Output\ Spectrum$$Note that there is a corresponding spread function in the cross-dispersion direction, known as the cross-dispersion spread function (CDSF). These functions are also modelled by the COS team and hosted on their website listed below. Working with the CDSFs is out of the scope of this Notebook.

How does COS handle the LSF?

The COS LSFs are generated using an optical model of the spectrograph in the program Code V, and are then validated using real spectral data obtained with the instrument. The LSF kernels are sampled at regular intervals over the wavelength range of each COS configuration. Each of these wavelengths thus corresponds to an LSF kernel. The kernel size (in COS "pixels") of each LSF varies for the near ultraviolet (NUV) and far ultraviolet (FUV) modes, as does the sample rate (how many Angstroms between sampled kernels). These values are shown below:

Table 1.1: Line Spread Function (LSF) kernel parameters for the COS instrument ¶

| LSF kernels sampled every... (Å) | Actual kernel size (pixels) | Approximate kernel size in wavelength space(Å) | |

|---|---|---|---|

| COS/NUV | 100 Å | 101 pixels | - |

| NUV M Gratings | 100 Å | 101 pixels | 3.7 Å |

| NUV L Gratings | 100 Å | 101 pixels | 39 Å |

| COS/FUV | 5 Å | 321 pixels | - |

| FUV M Gratings | 5 Å | 321 pixels | 3.5 Å |

| FUV L Gratings | 5 Å | 321 pixels | 26 Å |

How are the LSF files structured?

In short, the LSF files are structured as a list of LSF profile kernels. It begins with a space-separated list of central sample wavelengths and the input monochromatic lines used by Code V. These are not to be confused with COS central wavelength settings, or cenwaves. To avoid confusing the two concepts, we'll call the central wavelengths of the LSF kernels the LSF wavelengths.

1.2. Getting the files you need¶

Which LSF files will you need?

This depends on your data's parameters, specifically those listed in Table 1.2.

| Parameter | Corresponding Header Keyword | Example used in this Notebook |

|---|---|---|

| Wavelength range | DETECTOR | FUV |

| COS lifetime position | LIFE_ADJ | 3 |

| Grating | OPT_ELEM | G130M |

| Central wavelength setting | CENWAVE | 1291 |

If your data was taken in COS' NUV configuration, there's only one NUV LSF file which contains all the LSF profiles for the entire NUV. FUV data is more complicated: you will need to choose an LSF file based on the COS Lifetime position, grating, and central wavelength setting used to capture your data.

You'll also need to get the DISPTAB file associated with your data. This Dispersion Coefficient Table, or DISPTAB, file contains polynomial coefficients to convert from pixel number to wavelength. You can find the relevant DISPTAB in your data's primary fits header. You can figure out the relevant DISPTAB file by examining your data's header in DS9 or other software, but we'll briefly do this programmatically below.

Note that you should always ensure that your LSF kernels are normalized such that they integrate to 1. The files we use in this Notebook are normalized to this contraint.

fuvHeader0 = fits.getheader(fuvFile, ext=0) # Select the primary header

print(f"For the file {fuvFile}, the relevant parameters are: ")

param_dict = {} # Make a dict to store what you find here

for hdrKeyword in [

"DETECTOR",

"OPT_ELEM",

"LIFE_ADJ",

"CENWAVE",

"DISPTAB",

]: # Print out the relevant values

try: # For DISPTAB

value = fuvHeader0[hdrKeyword].split("$")[1] # Save the key/value pairs to the dictionary

param_dict[hdrKeyword] = value # DISPTAB needs the split here

except: # For other params

value = fuvHeader0[hdrKeyword] # Save the key/value pairs to the dictionary

param_dict[hdrKeyword] = value

print(f"{hdrKeyword} = {value}") # Print the key/value pairs

Now that you know which LSF files you need, we'll go gather them from the COS team's website:

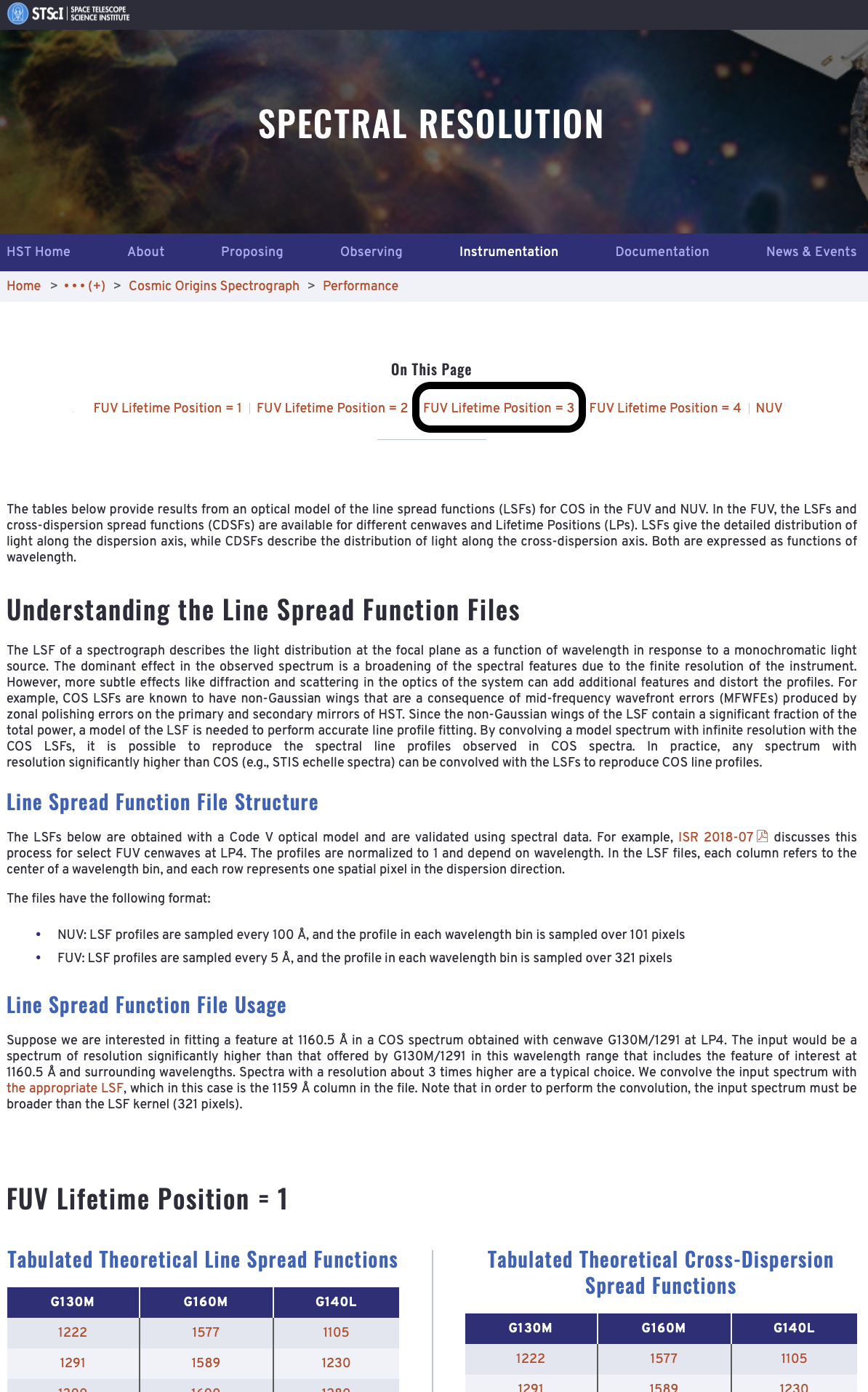

Where can you find the LSF files? The COS team maintains up-to-date LSF files on the COS Spectral Resolution page. Opening up this link leads to a page like that shown in Fig. 1.1, where the LSF files are discussed in detail. The bottom part of this page has links to all the relavent files. The links at the top of the page will take you to the relevant section. In Fig. 1.1, we have circled in black the link to the section pertaining to our data: FUV at the Lifetime Position: 3.

Fig 1.1: Screenshot of the COS Spectral Resolution Site ¶

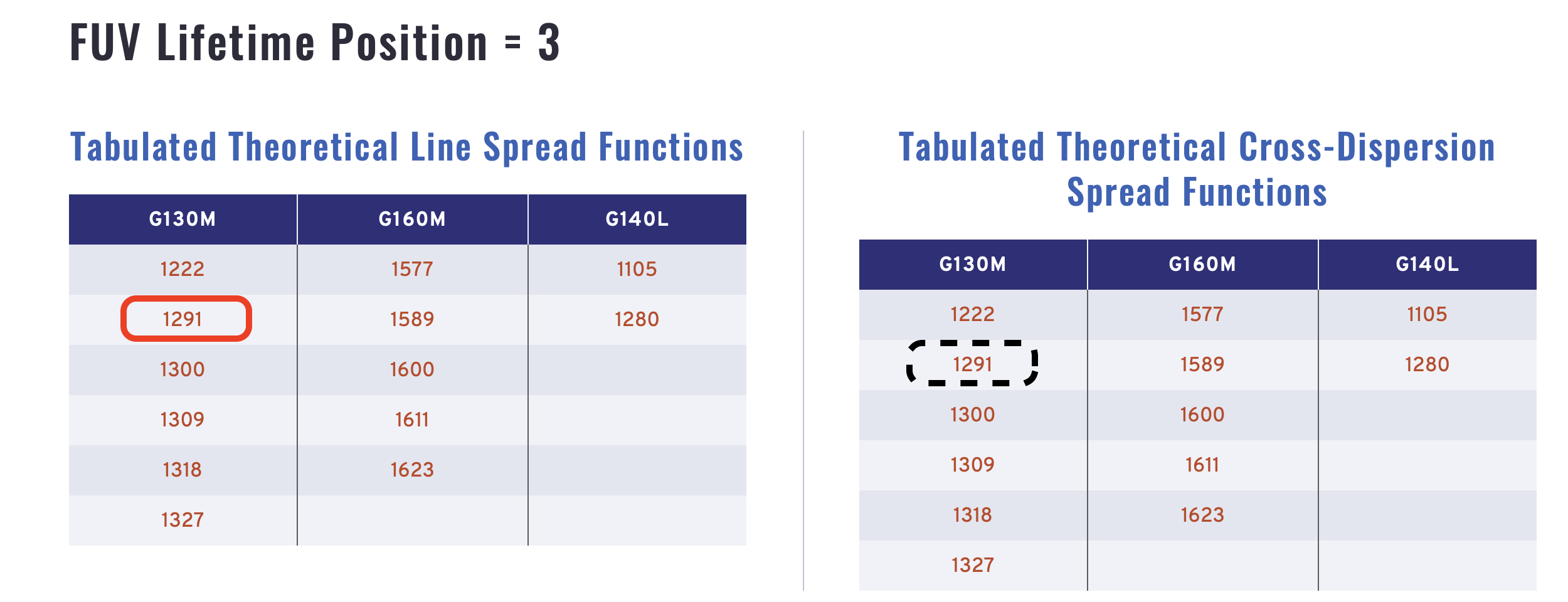

Clicking on the circled link takes us to the table of hyperlinks to all the files perataining to data taken with the FUV, Lifetime Postition 3 configutation, shown in Fig. 1.2:

Fig 1.2: Screenshot of the COS Spectral Resolution Site - Focus on LP-POS 3 ¶

Circled in solid red is the button to download the LSF file we need for our data with CENWAVE = 1291. Circled in dashed black is the corresponding CDSF.

You can click on the solid red circled button to download the LSF file and move it to this current working directory or right-click and select "Download Linked File As..." and download directly to this directory.

- This step may look somewhat different depending on your browser

- If you're unsure of the current working directory, un-comment the

printstatement in the next cell, and run the cell.

# equivalent of !pwd

# print(cwd.resolve())

These files can also be downloaded programmatically from with python, with the function we define below:

def fetch_files(det, grating, lpPos, cenwave, disptab):

"""

Given all the inputs: (detector, grating, LP-POS, cenwave, dispersion table,) this will download both

the LSF file and Disptab file you should use in the convolution and return their paths.

Returns:

LSF_file_name (str): filename of the new downloaded LSF file

disptab_path (str): path to the new downloaded disptab file

"""

COS_site_rootname = (

"https://www.stsci.edu/files/live/sites/www/files/home/hst/instrumentation/cos/"

"performance/spectral-resolution/_documents/"

) # Link to where all the files live - split into 2 lines

if det == "NUV": # Only one file for NUV

LSF_file_name = "nuv_model_lsf.dat"

elif det == "FUV": # FUV files follow a naming pattern

LSF_file_name = f"aa_LSFTable_{grating}_{cenwave}_LP{lpPos}_cn.dat"

LSF_file_webpath = COS_site_rootname + LSF_file_name # Where to find file online

urllib.request.urlretrieve(

LSF_file_webpath, str(datadir / LSF_file_name)

) # Where to save file to locally

print(f"Downloaded LSF file to {str(datadir/ LSF_file_name)}")

# And we'll need to get the DISPTAB file as well

disptab_path = str(datadir / disptab)

urllib.request.urlretrieve(

f"https://hst-crds.stsci.edu/unchecked_get/references/hst/{disptab}",

disptab_path,

)

print(f"Downloaded DISPTAB file to {disptab_path}")

return LSF_file_name, disptab_path

We'll run this function with the parameters we saved earlier to gather the proper LSF file:

# We'll pass that fetch function the parameters we determined earlier

# This "unpacked argument" phrasing works because of the order in which we added the params to the dict

LSF_file_name, disptab_path = fetch_files(*list(param_dict.values()))

def read_lsf(filename):

# This is the table of all the LSFs: called "lsf"

# The first column is a list of the wavelengths corresponding to the line profile, so we set our header accordingly

if "nuv_" in filename: # If its an NUV file, header starts 1 line later

ftype = "nuv"

else: # assume its an FUV file

ftype = "fuv"

hs = 0

lsf = Table.read(filename, format="ascii", header_start=hs)

# This is the range of each LSF in pixels (for FUV from -160 to +160, inclusive)

# middle pixel of the lsf is considered zero ; center is relative zero

pix = np.arange(len(lsf)) - len(lsf) // 2 # integer division to yield whole pixels

# the column names returned as integers.

lsf_wvlns = np.array([int(float(k)) for k in lsf.keys()])

return lsf, pix, lsf_wvlns

Now let's read the LSF file we downloaded and display the first 5 columns:

Because this is an FUV file, the columns are 321 values long, corresponding to the 321 pixel size of an LSF kernal in the FUV.

lsf, pix, lsf_wvlns = read_lsf(str(datadir / LSF_file_name))

lsf[lsf.colnames[:5]]

2.2. Plotting an LSF kernel¶

We can plot the LSF kernels themselves and take a look.

Here's a very simple plot of the first LSF kernel sampled at 1134 Å:

# Set up the figure

plt.figure(figsize=(10, 8))

# Fill the figure with a plot of the data

plt.plot(pix, lsf["1134"])

# Give figure the title and labels

plt.title("Figure 2.1\nCOS FUV LSF kernel sampled at 1134 Å", size=20)

plt.xlabel("Pixel", size=20)

plt.ylabel("Flux [$normalized,unitless$]", size=20)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "oneKernel.png"), bbox_inches="tight")

We'll now make a more complex plot showing all of the lines contained in the LSF file, distributed in wavelength space.

Note that while the centers of the lines are correctly mapped to the LSF wavelength at which they were sampled, their kernel width in pixels has only been roughly translated to a wavelength range in Å. In short, the x-axis is not strictly to-scale. This will be rectified by the remapping shown in Fig 3.1.

fig, (ax0, ax1) = plt.subplots(2, 1, figsize=(10, 8), dpi=100)

# Loop through the lsf kernels

for i, col in enumerate(lsf.colnames):

line_wvln = int(col) # central position

contents = lsf[col].data # actual shape

if line_wvln < 1291: # split into the FUVB segment

xrange = 0.00997 * pix + line_wvln # ROUGHLY convert pix to wvln

ax1.plot(xrange, contents, linewidth=0.5) # Plot the kernel

ax1.axvline(line_wvln, linewidth=0.1) # plot the LSF wvln as a faded line

elif line_wvln > 1291: # split into the FUVA segment

xrange = 0.00997 * pix + line_wvln # ROUGHLY convert pix to wvln

ax0.plot(xrange, contents, linewidth=0.5) # Plot the kernel

ax0.axvline(line_wvln, linewidth=0.1) # plot the LSF wvln as a faded line

# Format the figure

ax0.set_xlim(1290, 1435)

ax1.set_xlim(1125, 1295)

# Add labels, titles and text

fig.suptitle(f"Fig 2.2\nAll the LSF kernels in {LSF_file_name}", fontsize=20)

ax1.set_title("FUVB Detector")

ax0.set_title("FUVA Detector")

ax1.set_xlabel("Approximate Wavelength [$\AA$]", size=14)

fig.text(s="Flux [$normalized,unitless$]", x=-0.01, y=0.35, rotation="vertical", size=14)

plt.tight_layout()

# Save the figure

plt.savefig(str(plotsdir / "allKernels.png"), bbox_inches="tight")

We will also demonstrate all of the above for a NUV dataset:¶

First, downloading the NUV data and getting the relevant header parameters:

#### NUV: download the data

pl = Observations.get_product_list(

Observations.query_criteria(obs_id="LBY606010")

) # search for file, generate data product list

download = Observations.download_products(

pl[pl["productSubGroupDescription"] == "X1DSUM"], # filter and download searched files

download_dir=str(datadir),

)

nuvFile = download["Local Path"][0] # Give the program the path to your downloaded data

#### NUV: get relavent params

nuvHeader0 = fits.getheader(nuvFile, ext=0) # Select the primary header

print(f"For the file {nuvFile}, the relevant parameters are: ")

nuv_param_dict = {} # Make a dict to store what you find here

for hdrKeyword in [

"DETECTOR",

"OPT_ELEM",

"LIFE_ADJ",

"CENWAVE",

"DISPTAB",

]: # print out the relevant values

try: # For DISPTAB

value = nuvHeader0[hdrKeyword].split("$")[1] # Save the key/value pairs to the dictionary,

nuv_param_dict[hdrKeyword] = value # DISPTAB needs the split here

except: # For other params

value = nuvHeader0[hdrKeyword] # Save the key/value pairs to the dictionary

nuv_param_dict[hdrKeyword] = value

print(f"{hdrKeyword} = {value}") # Print the key/value pairs

Downloading the disptab and LSF files:

#### NUV: Fetch disptab + LSF files

# We'll pass that fetch function the parameters we determined earlier

# This phrasing works because of the order in which we added the params to the dict

nuv_LSF_file_name, nuv_disptab_path = fetch_files(*list(nuv_param_dict.values()))

Read in the NUV LSF kernels and plot them:

#### Read in the NUV LSF kernels:

lsf_nuv, pix_nuv, colnames_nuv = read_lsf(str(datadir / nuv_LSF_file_name))

# Set up the figure

plt.figure(figsize=(10, 8))

# Fill the figure with a plot of the data

plt.plot(pix_nuv, lsf_nuv["1800"])

# Give fig the title and labels

plt.title("Figure 2.3\nCOS NUV LSF kernel sampled at 1800 Å", size=20)

plt.xlabel("Pixel", size=20)

plt.ylabel("Flux [$normalized,unitless$]", size=20)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "oneKernel_nuv.png"), bbox_inches="tight")

### Get the approximate NUV dispersion to convert pixel --> wavelength

nuv_dtab = Table.read(nuv_disptab_path)

approx_disp = nuv_dtab[

np.where(

(nuv_dtab["CENWAVE"] == 1921)

& (nuv_dtab["SEGMENT"] == "NUVB")

& (nuv_dtab["APERTURE"] == "PSA")

)

]["COEFF"][0][1]

### Now plot

fig, (ax0) = plt.subplots(1, 1, figsize=(10, 5), dpi=100)

# Loop through the lsf kernels

for i, col in enumerate(lsf_nuv.colnames):

line_wvln = int(col) # central position

contents = lsf_nuv[col].data # actual data on line shape

xrange = approx_disp * pix_nuv + line_wvln # Roughly convert pix to wvln

ax0.plot(xrange, contents, linewidth=0.5) # Plot the kernel

ax0.axvline(line_wvln, linewidth=0.1) # plot the LSF wvln as a faded line

# Add labels, titles and text

fig.suptitle(f"Fig 2.4\nAll the NUV LSF kernels in {nuv_LSF_file_name}", fontsize=20)

ax0.set_xlabel("Wavelength [$\AA$]", size=14)

ax0.set_ylabel("Flux [$normalized,unitless$]", size=14)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "allKernels_nuv.png"), bbox_inches="tight")

3. Convolving an LSF¶

Now we come to the central goal of the Notebook, convolving a spectrum with the COS LSF. We'll begin by defining some functions we'll use for the convolution.

3.1. Defining some functions for LSF convolution¶

We've already defined a function to read in the LSF file, but we'll need the main function to take a spectrum and convolve it with the LSF kernel. We'll call this function:convolve_lsf. This function will, in turn, call two short functions: get_disp_params to and redefine_lsf:

3.1.1. Getting the dispersion relationship parameters¶

First, we'll define a function, get_disp_params, to interpret the DISPTAB to find the dispersion relationship.

If provided with a range of pixel values, it will apply the dispersion relationship to those values and return the equivalent wavelength values as well as the dispersion coefficients.

def get_disp_params(disptab, cenwave, segment, x=[]):

"""

Helper function to redefine_lsf(). Reads through a DISPTAB file and gives relevant\

dispersion relationship/wavelength solution over input pixels.

Parameters:

disptab (str): Path to your DISPTAB file.

cenwave (str): Cenwave for calculation of dispersion relationship.

segment (str): FUVA or FUVB?

x (list): Range in pixels over which to calculate wvln with dispersion relationship (optional).

Returns:

disp_coeff (list): Coefficients of the relevant polynomial dispersion relationship

wavelength (list; if applicable): Wavelengths corresponding to input x pixels

"""

with fits.open(disptab) as d:

wh_disp = np.where(

(d[1].data["cenwave"] == cenwave)

& (d[1].data["segment"] == segment)

& (d[1].data["aperture"] == "PSA")

)[0]

disp_coeff = d[1].data[wh_disp]["COEFF"][0] # 0 is needed as this returns nested list [[arr]]

d_tv03 = d[1].data[wh_disp]["D_TV03"] # Offset from WCA to PSA in Thermal Vac. 2003 data

d_orbit = d[1].data[wh_disp]["D"] # Current offset from WCA to PSA

delta_d = d_tv03 - d_orbit

if len(x): # If given a pixel range, build up a polynomial wvln solution pix -> λ

wavelength = np.polyval(p=disp_coeff[::-1], x=np.arange(16384))

return disp_coeff, wavelength

else: # If x is empty:

return disp_coeff

3.1.2. Redefining the LSF in terms of wavelength¶

Now, we'll define a function, redefine_lsf, to apply the dispersion relationship to the LSF kernels.

This effectively converts an LSF kernel from a function of pixel to a function of wavelength, making it compatible with a spectrum.

def redefine_lsf(lsf_file, cenwave, disptab, detector="FUV"):

"""

Helper function to convolve_lsf(). Converts the LSF kernels in the LSF file from a fn(pixel) -> fn(λ)\

which can then be used by convolve_lsf() and re-bins the kernels.

Parameters:

lsf_file (str): path to your LSF file

cenwave (str): Cenwave for calculation of dispersion relationship

disptab (str): path to your DISPTAB file

detector (str): FUV or NUV?

Returns:

new_lsf (numpy.ndarray): Remapped LSF kernels.

new_w (numpy.ndarray): New LSF kernel's LSF wavelengths.

step (float): first order coefficient of the FUVA dispersion relationship; proxy for Δλ/Δpixel.

"""

if detector == "FUV":

xfull = np.arange(16384)

# Read in the dispersion relationship here for the segments

### FUVA is simple

disp_coeff_a, wavelength_a = get_disp_params(disptab, cenwave, "FUVA", x=xfull)

### FUVB isn't taken for cenwave 1105, nor 800:

if (cenwave != 1105) & (cenwave != 800):

disp_coeff_b, wavelength_b = get_disp_params(

disptab, cenwave, "FUVB", x=xfull)

elif cenwave == 1105:

# 1105 doesn't have an FUVB so set it to something arbitrary and clearly not real:

wavelength_b = [-99.0, 0.0]

# Get the step size info from the FUVA 1st order dispersion coefficient

step = disp_coeff_a[1]

# Read in the lsf file

lsf, pix, w = read_lsf(lsf_file)

# take median spacing between original LSF kernels

deltaw = np.median(np.diff(w))

lsf_array = [np.array(lsf[key]) for key in lsf.keys()]

if (deltaw < len(pix) * step * 2): # resamples if the spacing of the original LSF wvlns is too narrow

# this is all a set up of the bins we want to use

# The wvln difference between kernels of the new LSF should be about twice their width

new_deltaw = round(len(pix) * step * 2.0)

new_nw = (int(round((max(w) - min(w)) / new_deltaw)) + 1) # nw = number of LSF wavelengths

new_w = min(w) + np.arange(new_nw) * new_deltaw # new version of lsf_wvlns

# populating the lsf with the proper bins

new_lsf = np.zeros((len(pix), new_nw)) # empty 2-D array to populate

for i, current_w in enumerate(new_w):

dist = abs(current_w - w) # Find closest original LSF wavelength to new LSF wavelength

lsf_index = np.argmin(dist)

orig_lsf_wvln_key = lsf.keys()[lsf_index] # column name corresponding to closest orig LSF wvln

new_lsf[:, i] = np.array(lsf[orig_lsf_wvln_key]) # assign new LSF wvln the kernel of the closest original lsf wvln

else:

new_lsf = lsf

new_w = w

return new_lsf, new_w, step

elif detector == "NUV":

xfull = np.arange(1024)

# Read in the dispersion relationship here for the segments

disp_coeff_a, wavelength_a = get_disp_params(disptab, cenwave, "NUVA", x=xfull)

disp_coeff_b, wavelength_b = get_disp_params(disptab, cenwave, "NUVB", x=xfull)

disp_coeff_c, wavelength_c = get_disp_params(disptab, cenwave, "NUVC", x=xfull)

# Get the step size info from the NUVB 1st order dispersion coefficient

step = disp_coeff_b[1]

# Read in the lsf file

lsf, pix, w = read_lsf(lsf_file)

# take median spacing between original LSF kernels

deltaw = np.median(np.diff(w))

lsf_array = [np.array(lsf[key]) for key in lsf.keys()]

# this section is a set up of the new bins we want to use:

new_deltaw = round(len(pix) * step * 2.0) # The wvln difference between kernels of the new LSF should be about twice their width

new_nw = (int(round((max(w) - min(w)) / new_deltaw)) + 1) # nw = number of LSF wavelengths

new_w = min(w) + np.arange(new_nw) * new_deltaw # new version of lsf_wvlns

# populating the lsf with the proper bins

new_lsf = np.zeros((len(pix), new_nw)) # empty 2-D array to populate

for i, current_w in enumerate(new_w):

dist = abs(current_w - w) # Find closest original LSF wavelength to new LSF wavelength

lsf_index = np.argmin(dist)

orig_lsf_wvln_key = lsf.keys()[lsf_index] # column name corresponding to closest orig LSF wvln

new_lsf[:, i] = np.array(lsf[orig_lsf_wvln_key]) # assign new LSF wvln the kernel of the closest original lsf wvln

return new_lsf, new_w, step

Let's make a version of the Fig. 2.2 with these newly remapped LSF kernels:

# Generate the redefined lsf for the plot

new_lsf, new_w, step = redefine_lsf(

str(datadir / LSF_file_name),

param_dict["CENWAVE"],

str(datadir / param_dict["DISPTAB"]),

)

fig, (ax0, ax1) = plt.subplots(2, 1, figsize=(10, 8), dpi=100)

# Loop through the new remapped lsf kernels

for i, col in enumerate(new_w):

line_wvln = int(col) # central position

contents = new_lsf[:, i] # actual shape

if line_wvln < 1291: # split into the FUVB segment

xrange = new_w[i] + pix * step # ROUGHLY convert pix to wvln

ax1.plot(xrange, contents, linewidth=0.5) # Plot the kernel

ax1.axvline(line_wvln, linewidth=0.1) # plot the LSF wvln as a faded line

elif line_wvln > 1291: # split into the FUVA segment

xrange = new_w[i] + pix * step # ROUGHLY convert pix to wvln

ax0.plot(xrange, contents, linewidth=0.5) # Plot the kernel

ax0.axvline(line_wvln, linewidth=0.1) # plot the LSF wvln as a faded line

# Add labels, titles and text

fig.suptitle(f"Fig 3.1\nAll the $remapped$ LSF kernels in {LSF_file_name}\n")

ax1.set_title("FUVB Detector")

ax0.set_title("FUVA Detector")

ax0.set_xlim(1290, 1435)

ax1.set_xlim(1125, 1295)

ax1.set_xlabel("Wavelength [$\AA$]", size=14)

fig.text(

s="Flux [$normalized,unitless$]", x=-0.01, y=0.35, rotation="vertical", size=14

)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "allKernels_new.png"), bbox_inches="tight")

3.1.3. Applying the convolution¶

Finally, we'll define the main function, convolve_lsf, to convolve the template spectrum with the redefined LSF:

def convolve_lsf(wavelength, spec, cenwave, lsf_file, disptab, detector="FUV"):

"""

Main function; Convolves an input spectrum - i.e. template or STIS spectrum - with the COS LSF.

Parameters:

wavelength (list or array): Wavelengths of the spectrum to convolve.

spec (list or array): Fluxes or intensities of the spectrum to convolve.

cenwave (str): Cenwave for calculation of dispersion relationship

lsf_file (str): Path to your LSF file

disptab (str): Path to your DISPTAB file

detector (str) : Assumes an FUV detector, but you may specify 'NUV'.

Returns:

wave_cos (numpy.ndarray): Wavelengths of convolved spectrum.!Different length from input wvln

final_spec (numpy.ndarray): New LSF kernel's LSF wavelengths.!Different length from input spec

"""

# First calls redefine to get right format of LSF kernels

new_lsf, new_w, step = redefine_lsf(lsf_file, cenwave, disptab, detector=detector)

# sets up new wavelength scale used in the convolution

nstep = round((max(wavelength) - min(wavelength)) / step) - 1

wave_cos = min(wavelength) + np.arange(nstep) * step

# resampling onto the input spectrum's wavelength scale

interp_func = interp1d(wavelength, spec) # builds up interpolated function from input spectrum

spec_cos = interp_func(wave_cos) # builds interpolated initial spectrum at COS' wavelength scale for convolution

final_spec = interp_func(wave_cos) # Initializes final spectrum to the interpolated input spectrum

for i, w in enumerate(new_w): # Loop through the redefined LSF kernels

# First need to find the boundaries of each kernel's "jurisdiction": where it applies

# The first and last elements need to be treated separately

if i == 0: # First kernel

diff_wave_left = 500

diff_wave_right = (new_w[i + 1] - w) / 2.0

elif i == len(new_w) - 1: # Last kernel

diff_wave_right = 500

diff_wave_left = (w - new_w[i - 1]) / 2.0

else: # All other kernels

diff_wave_left = (w - new_w[i - 1]) / 2.0

diff_wave_right = (new_w[i + 1] - w) / 2.0

# splitting up the spectrum into slices around the redefined LSF kernel wvlns

# will apply the kernel corresponding to that chunk to that region of the spectrum - its "jurisdiction"

chunk = np.where(

(wave_cos < w + diff_wave_right) & (wave_cos >= w - diff_wave_left)

)[0]

if len(chunk) == 0:

# off the edge, go to the next chunk

continue

current_lsf = new_lsf[:, i] # selects the current kernel

if len(chunk) >= len(

current_lsf

): # Makes sure that the kernel is smaller than the chunk

final_spec[chunk] = convolve(

spec_cos[chunk],

current_lsf, # Applies the actual convolution

boundary="extend",

normalize_kernel=True,

)

return wave_cos, final_spec # Remember, not the same length as input spectrum data!

3.2. Convolving simple line profiles¶

Let's demonstrate the convolution with a quick initial plot.

The cell below first creates a simple synthetic spectrum (with a wavelength range from 1147-1153Å and a Voigt profile emission line at 1150Å), then convolves it with the COS LSF. The next cell then plots the two spectra together.

##### Generate data:

# Define a model spectral line with a Voigt profile

voigt_shape = functional_models.Voigt1D(

x_0=1150, amplitude_L=1, fwhm_G=0.05, fwhm_L=0.05

)

# Make a simple spectrum with just that line at 1150 Å

# generate x data - Minimum size of Δ6Å for the kernel to apply here.

wvln_in = np.linspace(1147, 1153, int(1e5))

spec_in = voigt_shape(wvln_in) # Generate the y data

spec_in /= max(spec_in) # Normalize the y data to a max of 1

##### Run the convolution

wvln_out, spec_out = convolve_lsf(

wvln_in,

spec_in,

1291,

str(datadir / LSF_file_name),

str(datadir / param_dict["DISPTAB"]),

)

##### Make a plot from the data generated above:

plt.figure(figsize=(10, 8)) # Set up figure

# Plot the two spectra

plt.plot(wvln_in, spec_in, label="Un-Convolved Voigt line spectrum")

plt.plot(

wvln_out,spec_out,

linestyle="--",linewidth=2,

c="k",label="Convolved Voigt line spectrum",

)

# Format and give fig the title and labels

plt.title("Figure 3.2\nConvolution applied to a single synthetic line in the FUV", size=20)

plt.xlabel("Wavelength [$\AA$]", size=20)

plt.ylabel("Flux [$normalized,unitless$]", size=20)

# Add a legend

plt.legend(fontsize=12, loc="upper right")

# Save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "applyConv1.png"), bbox_inches="tight")

As shown above, the LSF pushes power from the line's peak into the wings of the line profile.

And, as we confirm below, the total flux has not changed, but has just been spread out:

integral1, integral2 = np.trapz(x=wvln_in, y=spec_in), np.trapz(x=wvln_out, y=spec_out)

print(f"The integrated fluxes are within {100* (integral1-integral2)/integral2:.2f} % of eachother")

We can now examine how the convolution differs across the spectrum. We do this by creating a "picket fence"/"frequency comb"-like spectrum of evenly-spaced, identical, normalized Voigt profiles. Each of these lines is normalized to a maximum of 1.

We'll make our synthetic spectrum slightly more realistic by converting from emission lines to absorption lines on a flat spectrum. To do this, we define a simple function, emit2abs(), which defines a flat continuum of 1, then multiplies this continuum by $e^{-emission\ spectrum}$

We then use convolve_lsf to convolve each of these synthetic spectral lines with the LSF kernel under whose "jurisdiction" they fall. For each line, we plot pre- and post- convolution spectrum in the 8 smaller panels. In the bottom, larger panel, we also plot the entire spectrum of all the lines.

def emit2abs(emitspec, continuum=-1):

"""

Takes an emission spectrum and "reverses" it, that is it:

Makes a default flat continuum of 1 and subtracts exp(line)

Inputs:

emitspec (1D arraylike): the emission spectrum

continuum (array or -1) : if -1, default of continuum of 1, \

otherwise must be same length as emitspec

"""

if type(continuum) == int:

if continuum == -1:

continuum = np.ones(len(emitspec))

abs_spec = continuum * np.exp(-1.0 * emitspec)

return abs_spec

########## Set up the DATA ##############

# Build up a synthetic wavelength range comparable to a real COS spectrum

wvln = np.linspace(1120, 1442, 16384 * 2)

# Initialize the spectrum to continuum of zero, we'll build it up with a series of Voigt profiles

flux = np.zeros(wvln.shape)

combo_flux = flux # A copy of flux that we'll add each new line's flux to, in turn

# Set up figure

fig = plt.figure(figsize=(20, 15))

gs = fig.add_gridspec(nrows=10, ncols=4) # Using gridspec to control panel sizes and locations

# Loop through the input spectrum's wavelength range and place a synthetic Voigt line every 40 Å

for i, discrete_wvln in enumerate(np.arange(int(min(wvln) + 5), max(wvln) - 5, 40)):

voigt_shape = functional_models.Voigt1D(

x_0=discrete_wvln, # Center a Voigt profile there

amplitude_L=1,

fwhm_G=0.05,

fwhm_L=0.05)

flux = voigt_shape(wvln) # Evaluate flux from that Voigt profile function

flux /= max(flux) # Normalize that line's flux

combo_flux = combo_flux + flux # Add each line's flux to total summed flux

# "Reverse" emission spectrum to absorption spectrum

combo_flux = emit2abs(combo_flux)

# Apply the convolution to the combined (many-line) synthetic spectrum to create an lsf_convolved wvln and flux

lwvln, lsf_combo_flux = convolve_lsf(

wvln,

combo_flux,

1291,

str(datadir / LSF_file_name),

str(datadir / param_dict["DISPTAB"]),

)

########## Make the plots ##############

# Loop through again to build up the plots

for i, discrete_wvln in enumerate(

np.arange(int(min(wvln) + 5), max(wvln) - 5, 40)

): # This will make 8 subplots

###### Build the small subplots for each line

ax = fig.add_subplot(

gs[4 * int(i / 4) : 4 * int(i / 4) + 4, i % 4 : (i) % 4 + 1]

) # Add a plot at the correct position on the grid

# First plot the original, unconvolved line at each position

ax.plot(

wvln, combo_flux, label="Un-convolved"

)

# Now plot the convolved line

ax.plot(

lwvln, lsf_combo_flux, c="k", linestyle="--", linewidth=2, label="LSF-convolved"

)

# Now add the peak wvln

ax.axvline(

discrete_wvln, c="C1", linestyle="dotted", alpha=0.8, label="Peak of Voigt"

)

# Some formatting

ax.set_xlim(discrete_wvln - 0.5, discrete_wvln + 0.5)

ax.ticklabel_format(axis="x", style="plain", useOffset=True, useMathText=True)

if i == 0: # Add a legend to the first subplot

ax.legend(fontsize=12, loc="upper left")

# Build up lower plot of all the lines:

low_ax = fig.add_subplot(gs[8:, :])

low_ax.plot(wvln, combo_flux, label="Un-convolved")

low_ax.plot(lwvln, lsf_combo_flux, c="k", linestyle="--", label="LSF-convolved")

low_ax.legend(fontsize=14, loc="upper right")

fig.suptitle(

'Fig 3.3\nComparison of convolutions across a "picket fence" of absorption lines in the FUV\n',

size=25,

)

fig.text(0.5, -0.01, "Wavelength [$\AA$]", ha="center", fontsize=20)

fig.text(

-0.01,0.5,

"Flux [$normalized,\ unitless$]",

va="center",rotation="vertical",

fontsize=20,

)

plt.tight_layout()

plt.savefig(str(plotsdir / "applyConv_picketFence.png"), bbox_inches="tight", dpi=200)

The figure above demonstrates the way in which the LSF changes significantly throughout the wavelength range of a spectrum.

It can be difficult to distinguish the LSFs plotted on their own; however, the results of their convolution with a synthetic line shows some are more sharply peaked, or contain more flux in the wings, etc.

We'll finish this section with a brief example of convolving a line in the NUV with the relevant LSF:

##### Generate data:

# Define a model spectral line with a Voigt profile

voigt_shape = functional_models.Voigt1D(

x_0=1803, amplitude_L=1, fwhm_G=0.05, fwhm_L=0.05

)

# Make a simple spectrum with just that line at 1150 Å

# generate x data - Minimum size of Δ6Å for the kernel to apply here.

wvln_in = np.linspace(1800, 1806, int(1e4))

spec_in = voigt_shape(wvln_in) # Generate the y data

spec_in /= max(spec_in) # Normalize the y data to a max of 1

### Run the convolution

wvln_out, spec_out = convolve_lsf(

wavelength=wvln_in,

spec=spec_in,

cenwave=1786,

lsf_file=str(datadir / nuv_LSF_file_name),

disptab=nuv_disptab_path,

detector="NUV",

)

############################################################################################

##### Make a plot from that data:

plt.figure(figsize=(10, 8)) # Set up figure

# Plot the two spectra

plt.plot(wvln_in, spec_in, label="Un-Convolved Voigt line spectrum")

plt.plot(wvln_out,spec_out,

linestyle="--",linewidth=2,

c="k",label="Convolved Voigt line spectrum",

)

# Add a legend

plt.legend(fontsize=12, loc="upper right")

# Give fig the title and labels

plt.title("Figure 3.4\nConvolution applied to a single synthetic line in the NUV", size=20)

plt.xlabel("Wavelength [$\AA$]", size=20)

plt.ylabel("Flux [$normalized,unitless$]", size=20)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "applyConv_nuv1.png"), bbox_inches="tight")

# Give the user a heads-up that the integrated fluxes should agree:

integral1, integral2 = np.trapz(x=wvln_in, y=spec_in), np.trapz(x=wvln_out, y=spec_out)

print(f"The integrated fluxes are within {100* (integral1-integral2)/integral2:.2f} % of eachother")

And we'll create an NUV equivalent of Fig. 3.3:

########## Set up the DATA ##############

# Build up a synthetic wavelength range comparable to a real COS spectrum

wvln = np.linspace(1600, 3300, int(1e6))

# Initialize the spectrum to zero, we'll build it up with a series of Voigt profiles

flux = np.zeros(wvln.shape)

combo_flux = flux # A copy of flux that we'll add each new line's flux to, in turn

# Set up figure

fig = plt.figure(figsize=(20, 15))

gs = fig.add_gridspec(nrows=10, ncols=4) # Using gridspec to let us control panel sizes and locations

# Loop through the input spectrum's wavelength range and place a synthetic Voigt line every 40 Å

for i, discrete_wvln in enumerate(np.arange(int(min(wvln) + 10), max(wvln) - 5, 240)):

voigt_shape = functional_models.Voigt1D(

x_0=discrete_wvln, # Center a Voigt profile there

amplitude_L=1,

fwhm_G=0.05,

fwhm_L=0.05,

)

flux = voigt_shape(wvln) # evaluate flux from that Voigt profile function

flux /= max(flux) # Normalize that line's flux

combo_flux = combo_flux + flux # Add each line's flux to total summed flux

# Reverse emit -> abs spectrum

combo_flux = emit2abs(combo_flux)

# Apply the convolution to the combined (many-line) synthetic spectrum to create an lsf_convolved wvln and flux

lwvln, lsf_combo_flux = convolve_lsf(

wvln,

combo_flux,

1786.0,

lsf_file=str(datadir / nuv_LSF_file_name),

disptab=nuv_disptab_path,

detector="NUV",

)

########## Make the PLOTS ##############

# Loop through again to build up the plots

for i, discrete_wvln in enumerate(

np.arange(int(min(wvln) + 10), max(wvln) - 5, 240)

): # This will make 8 subplots

###### Build the small subplots for each line

# Add a plot at the right position on the grid

ax = fig.add_subplot(gs[4 * int(i / 4) : 4 * int(i / 4) + 4, i % 4 : (i) % 4 + 1])

# First plot the original, unconvolved line at each position

ax.plot(wvln, combo_flux, label="Un-convolved")

# Now plot the convolved line

ax.plot(

lwvln, lsf_combo_flux, c="k", linestyle="--", linewidth=2, label="LSF-convolved"

)

# Now add the peak wvln

ax.axvline(

discrete_wvln, c="C1", linestyle="dotted", alpha=0.8, label="Peak of Voigt"

)

# Apply some formatting

ax.set_xlim(discrete_wvln - 1.0, discrete_wvln + 1.0)

ax.ticklabel_format(axis="x", style="plain", useOffset=True, useMathText=True)

if i == 0: # Add a legend to the first subplot

ax.legend(fontsize=12, loc="upper left")

plt.setp(

ax.get_xticklabels(), rotation=30, horizontalalignment="right"

) # rotate tick labels

# Build up lower plot of all the lines:

low_ax = fig.add_subplot(gs[8:, :])

low_ax.plot(wvln, combo_flux, label="Un-convolved")

low_ax.plot(lwvln, lsf_combo_flux, c="k", linestyle="--", label="LSF-convolved")

low_ax.legend(fontsize=14, loc="upper right")

fig.suptitle(

'Fig 3.5\nComparison of convolutions across a "picket fence" of absorption lines in the NUV\n',

size=25,

)

fig.text(0.5, -0.01, "Wavelength [$\AA$]", ha="center", fontsize=20)

fig.text(-0.01,0.5,

"Flux [$normalized,\ unitless$]",

va="center",

rotation="vertical",

fontsize=20,

)

plt.tight_layout()

plt.savefig(str(plotsdir / "applyConv_picketFence_nuv_absorb.png"), bbox_inches="tight", dpi=200)

3.3. Convolving real data from STIS¶

Let's first read in the COS FUV spectrum. For more information, see our Notebook on Reading-in and Plotting data COS in Python. Ignore any UnitsWarnings.

cos_table = Table.read(fuvFile)

COS_wvln, COS_flux = [], []

for cos_segment in [1, 0]: # The COS segment at index 0 has a longer wvln domain

COS_wvln_, COS_flux_ = list(cos_table[cos_segment]["WAVELENGTH", "FLUX"])

COS_dqw_ = np.asarray(cos_table[cos_segment]["DQ_WGT"], dtype=bool)

COS_wvln += list(COS_wvln_[COS_dqw_])

COS_flux += list(COS_flux_[COS_dqw_])

COS_wvln, COS_flux = np.asarray(COS_wvln), np.asarray(COS_flux)

Now, we download the STIS FUV spectrum, using astroquery, as demonstrated in our Notebook on Downloading data from the archive.

# Search for the file

pl = Observations.get_product_list(

Observations.query_criteria(obs_id="O4WR11010", instrument_name="STIS/FUV-MAMA")

)

download = Observations.download_products(

pl[pl["productSubGroupDescription"] == "X1D"], # filter and download searched files

download_dir=str(datadir),

)

stisfile = download["Local Path"][0] # Give the program the path to your downloaded data

We now read in the STIS spectrum.

This is a bit trickier than with the COS data, as there are many more segments of the STIS data (one per echelle order), each represented by a row of the table. We choose to combine them all here and sort by wavelength, but this may or may not be the right choice for your data. For more information on working with STIS data, see the STIS Instrument Handbook.

stis_table = Table.read(stisfile) # read the fits to an astropy Table

STIS_wvln, STIS_flux = [],[] # Empty list to populate

for i in range(len(stis_table)): # go through Echelle order rows + populate

stis_chunk_mask = stis_table['DQ'][i] == 0 # We'll filter to only data with no identified quality issues

STIS_wvln += list(stis_table['WAVELENGTH'][i][stis_chunk_mask])

STIS_flux += list(stis_table['FLUX'][i][stis_chunk_mask])

sort_order = np.argsort(STIS_wvln) # sort by wvln to work out order overlaps - blunt fix

STIS_wvln, STIS_flux = np.asarray(STIS_wvln)[sort_order], np.asarray(STIS_flux)[sort_order] # Get STIS spec as sorted array

# Run the actual convolution on the STIS data - a simple task once we have those functions defined

STIS_lwvln, STIS_lflux = convolve_lsf(

STIS_wvln,

STIS_flux,

1291,

str(datadir / LSF_file_name),

str(datadir / param_dict["DISPTAB"]),

)

We'll plot the COS spectra with the STIS spectra, (both pre- and post- convolution,) to demonstrate the effect on our data. We'll first plot the entire spectrum:

# Set up figure

plt.figure(figsize=(10, 8))

# Plot each of the spectra

plt.plot(STIS_wvln, STIS_flux, linestyle='-', c='C0',

label="STIS Un-convolved")

plt.plot(STIS_lwvln, STIS_lflux, markersize=1, linestyle='-', c='C1',

label="STIS Convolved")

plt.plot(COS_wvln, COS_flux, markersize=0.1, linestyle='-', c ='k', alpha=0.8,

label="COS")

# Set ybounds to avoid spike at shorter wvln side

plt.ylim(-1E-13, 1E-12)

#Add a legend

plt.legend(fontsize=15, loc='upper right')

# Give fig the title and labels

plt.title("Figure 3.6\nCOS FUV LSF kernel sampled at 1134 Å", size=20)

plt.xlabel("Wavelength [$\AA$]", size=20)

plt.ylabel("Flux [$erg\ \AA^{-1}\ cm^{-2}\ s^{-1}$]", size=20)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / 'COS_STIS_compare_wide.png'), bbox_inches='tight')

We can tell that the peaks have been cut shorter in the STIS convolved spectrum.

However, to see a bit more detail, let's make plots zooming in on spectral lines. We arbitrarily selected a few spectral lines in the UV from Table 1 of Leitherer et al.'s "An ultraviolet spectroscopic atlas of local starbursts and star-forming galaxies: the legacy of FOS and GHRS." The Astronomical Journal 141, no. 2 (2011): 37.

# Select a few UV lines from the table in Leitherer et al, 2011

lines = {"S II": 1250.58, "Si II": 1304.37, "C II": 1335.71, "Si IV": 1393.76}

# Set up figure

fig = plt.figure(figsize=(10, 16))

gs = fig.add_gridspec(nrows=4, ncols=1) # Using gridspec to let us control panel sizes and locations

for i, (linename, line) in enumerate(lines.items()): # For each line:

ax = fig.add_subplot(gs[i, 0]) # Add a plot at the correct position on the grid

ax.plot(STIS_wvln, STIS_flux,

linestyle="-.", c="C0", # Plot all the spectra for each line

label="STIS Un-convolved",

)

ax.plot(STIS_lwvln, STIS_lflux,

markersize=1,

linestyle="-", c="C1",

label="STIS Convolved",

)

ax.plot(COS_wvln, COS_flux,

markersize=0.1, linestyle="--", c="k",

label="COS")

# Add a vertical line at the reference frame wavelength of the line

ax.axvline(line, c="gray", linestyle="--", label=f"{linename} line at {line}Å")

# Set bounds and add legends

ax.set_xlim(line - 1, line + 1)

ax.set_ylim(-1e-13, 1e-12)

ax.legend(fontsize=15, loc="upper right")

# Give fig the title and labels

fig.suptitle(

f"Fig. 3.7\nA series of UV lines with COS\n and STIS spectra convolved with COS LSFs\n",

size=20,

)

ax.set_xlabel("Wavelength [$\AA$]", size=20)

fig.text(s="Flux [$erg\ \AA^{-1}\ cm^{-2}\ s^{-1}$]",

x=-0.018,y=0.4,

rotation="vertical",

size=20,

)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "COS_STIS_compare.png"), bbox_inches="tight")

We'll do something similar again for the NUV:¶

First downloading the NUV data to compare.

Note we're downloading a STIS spectrum from the ULLYSES high level science products archive:

# First STIS, but through the ULLYSES database - non-standard

readfile, __ = urllib.request.urlretrieve(

"https://ullyses.stsci.edu/files/bi173.tar.gz", "./ul_zip.tar.gz"

) # Where to save file to locally

tar = tarfile.open(readfile, "r:gz")

tar.extractall()

tar.close()

stis_data_dir = Path("./bi173/")

# Next two lines prevent new ULLYSES data releases,

# (and subsequent file renaming,) from breaking the code.

stis_glob = stis_data_dir.glob("hlsp_ullyses_hst_stis_bi173_e230m_dr*_cspec.fits")

stis_nuv_cspec = sorted(stis_glob)[-1]

# Now COS

nuv_cos_x1dsum = Observations.download_products(

Observations.get_product_list(Observations.query_criteria(obs_id="LBY615010")),

mrp_only=True,

)[1]["Local Path"]

Now read in all the COS and STIS data and convolve the STIS data with the COS NUV LSFs:

# Read in both COS and STIS NUV data:

# COS we need to build up from 3 stripes:

cos_nuv_table = Table.read(nuv_cos_x1dsum)

COS_nuv_wvln, COS_nuv_flux = [], []

for cos_segment in [0, 1, 2]: # The COS segment at index 0 has a longer wvln domain

COS_wvln_, COS_flux_ = list(cos_nuv_table[cos_segment]["WAVELENGTH", "FLUX"])

COS_dqw_ = np.asarray(cos_nuv_table[cos_segment]["DQ_WGT"], dtype=bool)

COS_nuv_wvln += list(COS_wvln_[COS_dqw_])

COS_nuv_flux += list(COS_flux_[COS_dqw_])

COS_nuv_wvln, COS_nuv_flux = np.asarray(COS_nuv_wvln), np.asarray(COS_nuv_flux)

# Read STIS:

STIS_nuv_wvln, STIS_nuv_flux = Table.read(stis_nuv_cspec)["WAVELENGTH", "FLUX"][0]

# Convolve STIS with COS' LSFs:

STIS_nuv_lwvln, STIS_nuv_lflux = convolve_lsf(

STIS_nuv_wvln,

STIS_nuv_flux,

1921,

str(datadir / nuv_LSF_file_name),

nuv_disptab_path,

detector="NUV",

)

Finally, generate a plot like Fig 3.7, but for this NUV data:

# Select a few NUV lines from the table in Leitherer et al, 2011

lines = {"Si II (Milky Way)": 1808.01, "Si II (LMC)": 1809.5, "Zn II": 2026.14}

# Set up figure

fig = plt.figure(figsize=(10, 16))

gs = fig.add_gridspec(nrows=3, ncols=1) # Using gridspec to let us control panel sizes and locations

for i, (linename, line) in enumerate(lines.items()): # For each line:

ax = fig.add_subplot(gs[i, 0]) # Add a plot at the correct position on the grid

ax.plot(STIS_nuv_wvln,STIS_nuv_flux,

linestyle="-.",c="C0", # Plot all the spectra for each line

label="STIS Un-convolved",

)

ax.plot(STIS_nuv_lwvln,STIS_nuv_lflux,

markersize=1,linestyle="-",c="C1",

label="STIS Convolved",

)

ax.plot(COS_nuv_wvln, COS_nuv_flux,

markersize=0.1, linestyle="--", c="k",

label="COS"

)

# Add a vertical line at the reference frame wavelength of the line

ax.axvline(line, c="gray", linestyle="--", label=f"{linename} line at {line}Å")

# Set bounds and add legends

ax.set_xlim(line - 2, line + 2)

ax.set_ylim(-5e-15, 5.5e-13)

ax.legend(fontsize=15, loc="upper right")

# Give fig the title and labels

fig.suptitle(

f"Fig. 3.8\nA series of NUV lines with COS\n and STIS spectra convolved with COS NUV LSFs\n",

size=20,

)

ax.set_xlabel("Wavelength [$\AA$]", size=20)

fig.text(

s="Flux [$erg\ \AA^{-1}\ cm^{-2}\ s^{-1}$]",

x=-0.018,y=0.38,

rotation="vertical",

size=20,

)

# format and save the figure

plt.tight_layout()

plt.savefig(str(plotsdir / "COS_STIS_compare_nuv.png"), bbox_inches="tight")

In review:¶

We've learned...

- What a Line Spread Function (LSF) is and why we might need to use it

- How to determine the correct LSF for your COS data and find it on the COS Spectral Resolution Website

- How to convolve LSFs with a template spectrum

About this Notebook¶

Author: Nat Kerman nkerman@stsci.edu

Contributors: Rachel Plesha, Julia Roman-Duval

Updated On: 2021-09-14

This tutorial was generated to be in compliance with the STScI style guides and would like to cite the Jupyter guide in particular.

Citations¶

If you use astropy, matplotlib, astroquery, or numpy for published research, please cite the

authors. Follow these links for more information about citations: