NIRISS AMI Pipeline Notebook#

Authors: R. Cooper

Last Updated: December 10, 2025

Pipeline Version: 1.20.2 (Build 12.1)

Purpose:

This notebook provides a framework for processing Near-Infrared

Imager and Slitless Spectrograph (NIRISS) Aperture Masking Interferometry (AMI) data through all

three James Webb Space Telescope (JWST) pipeline stages. Data is assumed

to be located in one observation folder according to paths set up below.

It should not be necessary to edit any cells other than in the

Configuration section unless modifying the standard

pipeline processing options.

Data: This notebook uses an example dataset from Program ID 1093 (PI: Thatte), the AMI commissioning program. For illustrative purposes, we will use a single target and reference star pair. Each exposure was taken in the F480M filter with the non-redundant mask (NRM), which enables AMI, in the pupil wheel. The observations used are observation 12 for the target and observation 15 for the reference star.

Example input data to use will be downloaded automatically unless disabled (i.e., to use local files instead).

JWST pipeline version and CRDS context:

This notebook was written for the above-specified pipeline version and associated

build context for this version of the JWST Calibration Pipeline. Information about

this and other contexts can be found in the JWST Calibration Reference Data System

(CRDS server). If you use different pipeline versions,

please refer to the table here

to determine what context to use. To learn more about the differences for the pipeline,

read the relevant

documentation.

Please note that pipeline software development is a continuous process, so results

in some cases may be slightly different if a subsequent version is used. For optimal

results, users are strongly encouraged to reprocess their data using the most recent

pipeline version and

associated CRDS context,

taking advantage of bug fixes and algorithm improvements.

Any known issues for this build are noted in the notebook.

Updates:

This notebook is regularly updated as improvements are made to the

pipeline. Find the most up-to-date version of this notebook at:

https://github.com/spacetelescope/jwst-pipeline-notebooks/

Recent Changes:

March 31, 2025: original notebook released

July 16, 2025: Updated to jwst 1.19.1 (no significant changes)

December 10, 2025: Updated to jwst 1.20.2 (no significant changes)

Table of Contents#

Configuration

Package Imports

Demo Mode Setup (ignore if not using demo data)

Directory Setup

Detector1 Pipeline

Image2 Pipeline

AMI3 Pipeline

Visualize the data

1. Configuration#

Install dependencies and parameters#

To make sure that the pipeline version is compatible with the steps

discussed below and the required dependencies and packages are installed,

you can create a fresh conda environment and install the provided

requirements.txt file:

conda create -n niriss_ami_pipeline python=3.11

conda activate niriss_ami_pipeline

pip install -r requirements.txt
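Once the environment is active, it can be useful to confirm that the installed pipeline version matches the one this notebook was written for. The following is a minimal sketch using only the standard library; the helper names `version_tuple` and `describe_install` are hypothetical and not part of the pipeline.

```python
from importlib.metadata import PackageNotFoundError, version

EXPECTED_JWST = "1.20.2"  # pipeline version this notebook was written for


def version_tuple(text):
    """Turn a version string such as '1.20.2' into (1, 20, 2).

    Only the leading purely numeric components are kept, so a trailing
    component such as 'dev3' is ignored.
    """
    parts = []
    for piece in text.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def describe_install(package="jwst", expected=EXPECTED_JWST):
    """Return a short message comparing the installed and expected versions."""
    try:
        installed = version(package)
    except PackageNotFoundError:
        return f"{package} is not installed in this environment"
    if version_tuple(installed) == version_tuple(expected):
        return f"{package} {installed} matches the notebook version"
    return f"{package} {installed} differs from the notebook version {expected}"


print(describe_install())
```

A mismatch is not necessarily a problem, but it is a reminder to check which CRDS context applies to the installed version.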

Set the basic parameters to use with this notebook. These affect what data is used, where the data is located (if already on disk), and which pipeline modules are run on the data. The parameters are:

demo_mode

directories with data

pipeline modules

# Basic import necessary for configuration

import os

demo_mode must be set appropriately below.

Set demo_mode = True to run in demonstration mode. In this

mode this notebook will download example data from the Barbara A.

Mikulski Archive for Space Telescopes (MAST)

and process it through the

pipeline. This will all happen in a local directory unless modified

in Section 3

below.

Set demo_mode = False if you want to process your own data

that has already been downloaded and provide the location of the data.

# Set parameters for demo_mode, data directories, and processing steps.

# -----------------------------Demo Mode---------------------------------
demo_mode = True

if demo_mode:
    print('Running in demonstration mode using online example data!')

# --------------------------User Mode Directories------------------------
# If demo_mode = False, look for user data in these paths
if not demo_mode:
    # Set directory paths for processing specific data; these will need
    # to be changed to your local directory setup (below are given as
    # examples)
    basedir = os.path.join(os.getcwd(), '')

    # Point to location of science observation data.
    # Assumes both science and PSF reference data are in the same directory
    # with uncalibrated data in sci_dir/uncal/ and results in stage1,
    # stage2, stage3 directories
    sci_dir = os.path.join(basedir, 'JWSTData/PID_1093/')

# Set which filter to process (empty will process all)
use_filter = ''  # E.g., F480M

# --------------------------Set Processing Steps--------------------------
# Individual pipeline stages can be turned on/off here. Note that a later
# stage won't be able to run unless data products have already been
# produced from the prior stage.

# Science processing
dodet1 = True  # calwebb_detector1
doimage2 = True  # calwebb_image2
doami3 = True  # calwebb_ami3
doviz = True  # Visualize calwebb_ami3 output

Running in demonstration mode using online example data!

Set CRDS context and server#

Before importing CRDS and JWST modules, we need

to configure our environment. This includes defining a CRDS cache

directory in which to keep the reference files that will be used by the

calibration pipeline.

If the root directory for the local CRDS cache has not already been set, it will be set to a crds directory in the home directory.

# ------------------------Set CRDS context and paths----------------------

# Each version of the calibration pipeline is associated with a specific CRDS

# context file. The pipeline will select the appropriate context file behind

# the scenes while running. However, if you wish to override the default context

# file and run the pipeline with a different context, you can set that using

# the CRDS_CONTEXT environment variable. Here we show how this is done,

# although we leave the line commented out in order to use the default context.

# If you wish to specify a different context, uncomment the line below.

#os.environ['CRDS_CONTEXT'] = 'jwst_1322.pmap' # CRDS context for 1.16.0

# Check whether the local CRDS cache directory has been set.
# If not, set it to a 'crds' directory in the user's home directory
if (os.getenv('CRDS_PATH') is None):
    os.environ['CRDS_PATH'] = os.path.join(os.path.expanduser('~'), 'crds')

# Check whether the CRDS server URL has been set. If not, set it.
if (os.getenv('CRDS_SERVER_URL') is None):
    os.environ['CRDS_SERVER_URL'] = 'https://jwst-crds.stsci.edu'

# Echo CRDS path in use
print(f"CRDS local filepath: {os.environ['CRDS_PATH']}")
print(f"CRDS file server: {os.environ['CRDS_SERVER_URL']}")

CRDS local filepath: /home/runner/crds

CRDS file server: https://jwst-crds.stsci.edu
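The "set only if unset" logic used for the CRDS variables can also be written with `setdefault`, which leaves any existing value alone. The sketch below is an illustration rather than part of the notebook: the function name `apply_crds_defaults` and the example cache path are hypothetical, and it is demonstrated on a plain dictionary so that the real environment is not modified.

```python
import os


def apply_crds_defaults(env):
    """Fill in CRDS settings only where the user has not already set them."""
    env.setdefault("CRDS_PATH", os.path.join(os.path.expanduser("~"), "crds"))
    env.setdefault("CRDS_SERVER_URL", "https://jwst-crds.stsci.edu")
    return env


# Demonstrate on a plain dict; passing os.environ instead would change the
# settings of the running process.
example = apply_crds_defaults({"CRDS_PATH": "/data/my_crds_cache"})
print(example["CRDS_PATH"])        # the user-supplied value is kept
print(example["CRDS_SERVER_URL"])  # the missing value is filled in
```

As in the cell above, these values need to be in place before the CRDS and JWST modules are imported.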

2. Package Imports#

# Use the entire available screen width for this notebook

from IPython.display import display, HTML

display(HTML("<style>.container { width:95% !important; }</style>"))

# Basic system utilities for interacting with files

# ----------------------General Imports------------------------------------

import glob

import time

import json

from pathlib import Path

from collections import defaultdict

# Numpy for doing calculations

import numpy as np

# -----------------------Astroquery Imports--------------------------------
# For querying MAST and downloading demo files
from astroquery.mast import Observations

# For visualizing data
import matplotlib.pyplot as plt
from astropy.visualization import (MinMaxInterval, SqrtStretch,
                                   ImageNormalize)

# For file manipulation

from astropy.io import fits

import asdf

# for JWST calibration pipeline

import jwst

import crds

from jwst.pipeline import Detector1Pipeline

from jwst.pipeline import Image2Pipeline

from jwst.pipeline import Ami3Pipeline

# JWST pipeline utilities

from jwst import datamodels

from jwst.associations import asn_from_list # Tools for creating association files

from jwst.associations.lib.rules_level3_base import DMS_Level3_Base # Definition of a Lvl3 association file

# Echo pipeline version and CRDS context in use

print(f"JWST Calibration Pipeline Version: {jwst.__version__}")

print(f"Using CRDS Context: {crds.get_context_name('jwst')}")

JWST Calibration Pipeline Version: 1.20.2

CRDS - INFO - Calibration SW Found: jwst 1.20.2 (/usr/share/miniconda/lib/python3.13/site-packages/jwst-1.20.2.dist-info)

Using CRDS Context: jwst_1464.pmap

Define convenience functions#

# Define a convenience function to select only files of a given filter from an input set
def select_filter_files(files, use_filter):
    files_culled = []
    if (use_filter != ''):
        for file in files:
            model = datamodels.open(file)
            filt = model.meta.instrument.filter
            if (filt == use_filter):
                files_culled.append(file)
            model.close()
    else:
        files_culled = files
    return files_culled

# Define a convenience function to separate a list of input files into science and PSF reference exposures
def split_scipsf_files(files):
    psffiles = []
    scifiles = []
    for file in files:
        model = datamodels.open(file)
        if model.meta.exposure.psf_reference is True:
            psffiles.append(file)
        else:
            scifiles.append(file)
        model.close()
    return scifiles, psffiles
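The partitioning logic of split_scipsf_files can be tried out without opening any FITS files by passing in the test for "is this a PSF reference?" as a function. This is only a sketch: `split_by_flag`, `is_psf`, and the `fake_flags` dictionary are hypothetical stand-ins for the metadata lookup done through datamodels.

```python
def split_by_flag(files, is_psf):
    """Partition files into (science, psf) lists using a caller-supplied test.

    is_psf is any callable that returns True for a PSF reference exposure.
    """
    scifiles, psffiles = [], []
    for file in files:
        (psffiles if is_psf(file) else scifiles).append(file)
    return scifiles, psffiles


# Stand-in metadata for the two demo exposures (observation 15 is the
# reference star); in the notebook this flag is read from
# model.meta.exposure.psf_reference.
fake_flags = {
    "jw01093012001_03102_00001_nis_uncal.fits": False,
    "jw01093015001_03102_00001_nis_uncal.fits": True,
}
sci, psf = split_by_flag(sorted(fake_flags), fake_flags.get)
print(sci)
print(psf)
```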

# Start a timer to keep track of runtime

time0 = time.perf_counter()

3. Demo Mode Setup (ignore if not using demo data)#

If running in demonstration mode, set up the program information to

retrieve the uncalibrated data automatically from MAST using

astroquery.

MAST has a dedicated service for JWST data retrieval, so the archive can

be searched by instrument keywords rather than just filenames or proposal IDs.

The list of searchable keywords for filtered JWST MAST queries

is here.

For illustrative purposes, we will use a single target and reference star pair. Each exposure was taken in the F480M filter with the non-redundant mask (NRM), which enables AMI, in the pupil wheel.

We will start with uncalibrated data products. The files are named

jw010930nn001_03102_00001_nis_uncal.fits, where nn refers to the

observation number: in this case, observation 12 for the target and

observation 15 for the reference star.

More information about the JWST file naming conventions can be found at: https://jwst-pipeline.readthedocs.io/en/latest/jwst/data_products/file_naming.html
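To make the naming convention concrete, the sketch below splits an exposure-level file name into its fields with a regular expression. It assumes the pattern jw<ppppp><ooo><vvv>_<gg><s><aa>_<eeeee>_<detector>_<prodType>.fits described in the file naming documentation and does not handle segmented files; the function name `parse_jwst_name` and the field labels are our own choices.

```python
import os
import re

# Program, observation, visit, then visit group / parallel sequence ID /
# activity number, then exposure number, detector, and product type.
NAME_RE = re.compile(
    r"jw(?P<program>\d{5})(?P<observation>\d{3})(?P<visit>\d{3})"
    r"_(?P<visit_group>\d{2})(?P<seq_id>\d)(?P<activity>[0-9a-z]{2})"
    r"_(?P<exposure>\d{5})_(?P<detector>[a-z0-9]+)_(?P<product>\w+)\.fits"
)


def parse_jwst_name(path):
    """Return the fields of an exposure-level JWST file name as a dict."""
    match = NAME_RE.fullmatch(os.path.basename(path))
    if match is None:
        raise ValueError(f"unrecognized file name: {path}")
    return match.groupdict()


fields = parse_jwst_name("jw01093012001_03102_00001_nis_uncal.fits")
for key, value in fields.items():
    print(f"{key}: {value}")
```

For the target exposure this separates program 01093, observation 012, and visit 001, which are the same pieces that are set by hand in the demo configuration.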

# Set up the program information and paths for demo program
if demo_mode:
    print('Running in demonstration mode and will download example data from MAST!')

    # --------------Program and observation information--------------
    program = '01093'
    sci_observtn = ['012', '015']  # Obs 12 is the target, Obs 15 is the reference star
    visit = '001'
    visitgroup = '03'
    seq_id = "1"
    act_id = '02'
    expnum = '00001'

    # --------------Program and observation directories--------------
    data_dir = os.path.join('.', 'nis_ami_demo_data')
    sci_dir = os.path.join(data_dir, 'PID_1093')
    uncal_dir = os.path.join(sci_dir, 'uncal')  # Uncalibrated pipeline inputs should be here

    if not os.path.exists(uncal_dir):
        os.makedirs(uncal_dir)

    # Create directory if it does not exist
    if not os.path.isdir(data_dir):
        os.mkdir(data_dir)

Running in demonstration mode and will download example data from MAST!

Identify list of science (SCI) uncalibrated files associated with visits.

# Obtain a list of observation IDs for the specified demo program
if demo_mode:
    # Science data
    sci_obs_id_table = Observations.query_criteria(instrument_name=["NIRISS/AMI"],
                                                   proposal_id=[program],
                                                   filters=['F480M;NRM'],  # Data for Specific Filter
                                                   obs_id=['jw' + program + '*'])

# Turn the list of visits into a list of uncalibrated data files
if demo_mode:
    # Define types of files to select
    file_dict = {'uncal': {'product_type': 'SCIENCE',
                           'productSubGroupDescription': 'UNCAL',
                           'calib_level': [1]}}

    # Science files
    sci_files = []

    # Loop over visits identifying uncalibrated files that are associated
    # with them
    for exposure in (sci_obs_id_table):
        products = Observations.get_product_list(exposure)
        for filetype, query_dict in file_dict.items():
            filtered_products = Observations.filter_products(products, productType=query_dict['product_type'],
                                                             productSubGroupDescription=query_dict['productSubGroupDescription'],
                                                             calib_level=query_dict['calib_level'])
            sci_files.extend(filtered_products['dataURI'])

    # Select only the exposures we want to use based on filename
    # Construct the filenames and select files based on them
    filestrings = ['jw' + program + sciobs + visit + '_' + visitgroup + seq_id + act_id + '_' + expnum for sciobs in sci_observtn]
    sci_files_to_download = [scifile for scifile in sci_files if any(filestr in scifile for filestr in filestrings)]
    sci_files_to_download = sorted(set(sci_files_to_download))
    print(f"Science files selected for downloading: {len(sci_files_to_download)}")

Science files selected for downloading: 2
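The filename-based selection can be checked offline. The sketch below rebuilds the match strings from the demo program values and applies them to a short list of illustrative URIs: the first two follow the names of the demo files, while the third is a made-up exposure number included only to show that it is rejected.

```python
program = "01093"
sci_observtn = ["012", "015"]  # target and reference star observations
visit, visitgroup, seq_id, act_id, expnum = "001", "03", "1", "02", "00001"

# One match string per observation, e.g. jw01093012001_03102_00001
filestrings = [
    f"jw{program}{obs}{visit}_{visitgroup}{seq_id}{act_id}_{expnum}"
    for obs in sci_observtn
]

# Illustrative URIs only; the last one is invented for this example
candidate_uris = [
    "mast:JWST/product/jw01093012001_03102_00001_nis_uncal.fits",
    "mast:JWST/product/jw01093015001_03102_00001_nis_uncal.fits",
    "mast:JWST/product/jw01093012001_03102_00002_nis_uncal.fits",
]

# Keep a URI if any match string appears in it; the set removes duplicates
selected = sorted({uri for uri in candidate_uris
                   if any(s in uri for s in filestrings)})
print(filestrings)
print(len(selected))
```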

Download all the uncal files and place them into the appropriate directories.

if demo_mode:
    for filename in sci_files_to_download:
        sci_manifest = Observations.download_file(filename,
                                                  local_path=os.path.join(uncal_dir, Path(filename).name))

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01093012001_03102_00001_nis_uncal.fits to ./nis_ami_demo_data/PID_1093/uncal/jw01093012001_03102_00001_nis_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01093015001_03102_00001_nis_uncal.fits to ./nis_ami_demo_data/PID_1093/uncal/jw01093015001_03102_00001_nis_uncal.fits ...

[Done]

4. Directory Setup#

Set up detailed paths to input/output stages here.

# Define output subdirectories to keep science data products organized

# -----------------------------Science Directories------------------------------

uncal_dir = os.path.join(sci_dir, 'uncal') # Uncalibrated pipeline inputs should be here

det1_dir = os.path.join(sci_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_dir = os.path.join(sci_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

ami3_dir = os.path.join(sci_dir, 'stage3') # calwebb_ami3 pipeline outputs will go here

# We need to check that the desired output directories exist, and if not create them
# Ensure filepaths for input data exist
if not os.path.exists(uncal_dir):
    os.makedirs(uncal_dir)

if not os.path.exists(det1_dir):
    os.makedirs(det1_dir)

if not os.path.exists(image2_dir):
    os.makedirs(image2_dir)

if not os.path.exists(ami3_dir):
    os.makedirs(ami3_dir)
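The same directory layout can be created more compactly with the `exist_ok=True` option of `os.makedirs`, which removes the need for a separate existence check. The sketch below is an alternative, not what the notebook runs; the helper name `make_stage_dirs` is hypothetical, and it is exercised in a temporary directory so that nothing is written to the working area.

```python
import os
import tempfile


def make_stage_dirs(sci_dir, names=("uncal", "stage1", "stage2", "stage3")):
    """Create the stage subdirectories under sci_dir and return their paths."""
    paths = {}
    for name in names:
        path = os.path.join(sci_dir, name)
        os.makedirs(path, exist_ok=True)  # no error if it already exists
        paths[name] = path
    return paths


# Exercise the helper in a throwaway directory; calling it twice is safe.
with tempfile.TemporaryDirectory() as tmp:
    first = make_stage_dirs(tmp)
    second = make_stage_dirs(tmp)
    created = sorted(os.listdir(tmp))
print(created)
```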

Print the exposure parameters of all potential input files:

uncal_files = sorted(glob.glob(os.path.join(uncal_dir, '*_uncal.fits')))

# Restrict to selected filter if applicable
uncal_files = select_filter_files(uncal_files, use_filter)

for file in uncal_files:
    model = datamodels.open(file)
    # print file name
    print(model.meta.filename)
    # Print out exposure info
    print(f"Instrument: {model.meta.instrument.name}")
    print(f"Filter: {model.meta.instrument.filter}")
    print(f"Pupil: {model.meta.instrument.pupil}")
    print(f"Number of integrations: {model.meta.exposure.nints}")
    print(f"Number of groups: {model.meta.exposure.ngroups}")
    print(f"Readout pattern: {model.meta.exposure.readpatt}")
    print(f"Dither position number: {model.meta.dither.position_number}")
    print("\n")
    model.close()

jw01093012001_03102_00001_nis_uncal.fits

Instrument: NIRISS

Filter: F480M

Pupil: NRM

Number of integrations: 69

Number of groups: 5

Readout pattern: NISRAPID

Dither position number: 1

jw01093015001_03102_00001_nis_uncal.fits

Instrument: NIRISS

Filter: F480M

Pupil: NRM

Number of integrations: 61

Number of groups: 12

Readout pattern: NISRAPID

Dither position number: 1

For the demo data, files should be for the NIRISS instrument

using the F480M filter in the Filter Wheel

and the NRM in the Pupil Wheel.

Likewise, both demo exposures use the NISRAPID readout pattern. The target has 5 groups per integration and 69 integrations per exposure; the reference star has 12 groups per integration and 61 integrations per exposure. Both were taken at the same dither position (primary dither pattern position 1).

For more information about how JWST exposures are defined by up-the-ramp sampling, see the Understanding Exposure Times JDox article.

# Print out the time benchmark

time1 = time.perf_counter()

print(f"Runtime so far: {time1 - time0:0.0f} seconds")

Runtime so far: 48 seconds

5. Detector1 Pipeline#

Run the datasets through the

Detector1

stage of the pipeline to apply detector level calibrations and create a

countrate data product where slopes are fitted to the integration ramps.

These *_rateints.fits products are 3D (nintegrations x nrows x ncols)

and contain the fitted ramp slopes for each integration.

2D countrate data products (*_rate.fits) are also

created (nrows x ncols) which have been averaged over all

integrations.
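Since later stages look for these products by name, it can help to see how the output names relate to the inputs. The sketch below assumes the products keep the root of the input name with the suffix swapped, as described above; the helper name `expected_det1_products` is hypothetical, and the 'uncal' and 'stage1' directory names mirror those used in this notebook.

```python
import os


def expected_det1_products(uncal_file, det1_dir):
    """Map an *_uncal.fits input to its expected rate and rateints names."""
    base = os.path.basename(uncal_file)
    if not base.endswith("_uncal.fits"):
        raise ValueError(f"not an uncal file: {base}")
    root = base[: -len("_uncal.fits")]
    return {
        suffix: os.path.join(det1_dir, f"{root}_{suffix}.fits")
        for suffix in ("rate", "rateints")
    }


products = expected_det1_products(
    os.path.join("uncal", "jw01093012001_03102_00001_nis_uncal.fits"),
    "stage1",
)
print(products["rateints"])
print(products["rate"])
```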

By default, all steps in the Detector1 stage of the pipeline are run for

NIRISS except: the ipc correction step and the gain_scale step. Note

that while the persistence step

is set to run by default, this step does not automatically correct the

science data for persistence. The persistence step creates a

*_trapsfilled.fits file which is a model that records the number

of traps filled at each pixel at the end of an exposure. This file would be

used as an input to the persistence step, via the input_trapsfilled

argument, to correct a science exposure for persistence. Since persistence

is not well calibrated for NIRISS, we do not perform a persistence

correction and thus turn off this step to speed up calibration and to not

create files that will not be used in the subsequent analysis. This step

can be turned off when running the pipeline in Python by doing:

rate_result = Detector1Pipeline.call(uncal,steps={'persistence': {'skip': True}})

or as indicated in the cell below using a dictionary.

The charge_migration step

is particularly important for NIRISS images to mitigate apparent flux loss

in resampled images due to the spilling of charge from a central pixel into

its neighboring pixels (see Goudfrooij et al. 2023

for details). Charge migration occurs when the accumulated charge in a

central pixel exceeds a certain signal limit, which is ~25,000 ADU. This

step is turned on by default for NIRISS imaging mode when using CRDS

contexts of jwst_1159.pmap or later. Different signal limits for each filter are provided by the

pars-chargemigrationstep parameter files.

Users can specify a different signal limit by running this step with the

signal_threshold flag and entering another signal limit in units of ADU.

The effect is stronger when there is high contrast between a bright pixel and neighboring faint pixel,

as is the case for the strongly peaked AMI PSF.
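The role of the signal limit can be illustrated with a toy example. The sketch below is not the pipeline's implementation, which works on whole ramp cubes and is more involved; it only shows, for made-up ramps of a bright central pixel and a faint neighbor, where along the ramp an accumulated signal first exceeds a threshold of 25,000 ADU. The function name `first_group_over_threshold` is hypothetical.

```python
def first_group_over_threshold(ramp, signal_threshold=25000.0):
    """Return the index of the first group whose signal exceeds the threshold.

    ramp is a sequence of accumulated signal values (ADU), one per group.
    Returns None if the threshold is never exceeded.
    """
    for index, value in enumerate(ramp):
        if value > signal_threshold:
            return index
    return None


# Made-up ramps: a bright central pixel and a faint neighboring pixel
bright = [6000.0, 12000.0, 18000.0, 24000.0, 30000.0]
faint = [300.0, 600.0, 900.0, 1200.0, 1500.0]

print(first_group_over_threshold(bright))  # only the last group is over the limit
print(first_group_over_threshold(faint))   # never over the limit
```

In this toy case only the bright pixel crosses the limit, which is the high-contrast situation described above.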

For AMI mode, preliminary investigation shows that dark subtraction does not improve calibration, and may in fact have a detrimental effect, so we turn it off here.

# Set up a dictionary to define how the Detector1 pipeline should be configured

# Boilerplate dictionary setup

det1dict = defaultdict(dict)

# Step names are copied here for reference

det1_steps = ['group_scale', 'dq_init', 'saturation', 'ipc', 'superbias', 'refpix',

'linearity', 'persistence', 'dark_current', 'charge_migration',

'jump', 'ramp_fit', 'gain_scale']

# Overrides for whether or not certain steps should be skipped

# skipping the ipc, persistence, and dark steps

det1dict['ipc']['skip'] = True

det1dict['persistence']['skip'] = True

det1dict['dark_current']['skip'] = True

# Overrides for various reference files

# Files should be in the base local directory or provide full path

#det1dict['dq_init']['override_mask'] = 'myfile.fits' # Bad pixel mask

#det1dict['saturation']['override_saturation'] = 'myfile.fits' # Saturation

#det1dict['linearity']['override_linearity'] = 'myfile.fits' # Linearity

#det1dict['dark_current']['override_dark'] = 'myfile.fits' # Dark current subtraction

#det1dict['jump']['override_gain'] = 'myfile.fits' # Gain used by jump step

#det1dict['ramp_fit']['override_gain'] = 'myfile.fits' # Gain used by ramp fitting step

#det1dict['jump']['override_readnoise'] = 'myfile.fits' # Read noise used by jump step

#det1dict['ramp_fit']['override_readnoise'] = 'myfile.fits' # Read noise used by ramp fitting step

# Turn on multi-core processing (off by default). Choose what fraction of cores to use (quarter, half, or all)

det1dict['jump']['maximum_cores'] = 'half'

# Alter parameters of certain steps (example)

#det1dict['charge_migration']['signal_threshold'] = X
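To see what the configuration dictionary actually contains, the following sketch repeats the same assignments on a separate variable (here called `steps`, our own name) and converts the result to a plain dictionary. It uses only the standard library and does not run the pipeline.

```python
from collections import defaultdict

# defaultdict(dict) creates the inner dictionary for a step the first time
# that step name is used, so no per-step setup is needed.
steps = defaultdict(dict)
steps["ipc"]["skip"] = True
steps["persistence"]["skip"] = True
steps["dark_current"]["skip"] = True
steps["jump"]["maximum_cores"] = "half"

# Convert to a plain dict to inspect the nested structure
plain = dict(steps)
print(plain)

# List the steps that are configured to be skipped
skipped = sorted(name for name, opts in plain.items() if opts.get("skip"))
print(skipped)
```

One thing to keep in mind with defaultdict is that merely reading a step name that has not been set (for example `steps["refpix"]`) adds an empty entry for it.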

The clean_flicker_noise step removes 1/f noise from calibrated ramp images, after the jump step and prior to performing the ramp_fitting step. By default, this step is skipped in the calwebb_detector1 pipeline for all instruments and modes. Although available, this step has not been extensively tested for the NIRISS AMI subarray and is thus not recommended at the present time.

Run Detector1 stage of pipeline

# Run Detector1 stage of pipeline, specifying:

# output directory to save *_rateints.fits files

# save_results flag set to True so the *rateints.fits files are saved

# save_calibrated_ramp set to True so *ramp.fits files are saved

if dodet1:
    for uncal in uncal_files:
        rate_result = Detector1Pipeline.call(uncal,
                                             output_dir=det1_dir,
                                             steps=det1dict,
                                             save_results=True,
                                             save_calibrated_ramp=True)
else:
    print('Skipping Detector1 processing')

2025-12-18 19:26:05,099 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_datalvl_0002.rmap 694 bytes (1 / 212 files) (0 / 762.3 K bytes)

2025-12-18 19:26:05,172 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_calver_0063.rmap 5.7 K bytes (2 / 212 files) (694 / 762.3 K bytes)

2025-12-18 19:26:05,289 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_0058.imap 385 bytes (3 / 212 files) (6.4 K / 762.3 K bytes)

2025-12-18 19:26:05,325 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_wavelengthrange_0024.rmap 1.4 K bytes (4 / 212 files) (6.8 K / 762.3 K bytes)

2025-12-18 19:26:05,375 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_wavecorr_0005.rmap 884 bytes (5 / 212 files) (8.2 K / 762.3 K bytes)

2025-12-18 19:26:05,419 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_superbias_0087.rmap 38.3 K bytes (6 / 212 files) (9.0 K / 762.3 K bytes)

2025-12-18 19:26:05,462 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_sirskernel_0002.rmap 704 bytes (7 / 212 files) (47.3 K / 762.3 K bytes)

2025-12-18 19:26:05,509 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_sflat_0027.rmap 20.6 K bytes (8 / 212 files) (48.0 K / 762.3 K bytes)

2025-12-18 19:26:05,590 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_saturation_0018.rmap 2.0 K bytes (9 / 212 files) (68.7 K / 762.3 K bytes)

2025-12-18 19:26:05,633 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_refpix_0015.rmap 1.6 K bytes (10 / 212 files) (70.7 K / 762.3 K bytes)

2025-12-18 19:26:05,688 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_readnoise_0025.rmap 2.6 K bytes (11 / 212 files) (72.2 K / 762.3 K bytes)

2025-12-18 19:26:05,739 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_psf_0002.rmap 687 bytes (12 / 212 files) (74.8 K / 762.3 K bytes)

2025-12-18 19:26:05,778 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pictureframe_0001.rmap 675 bytes (13 / 212 files) (75.5 K / 762.3 K bytes)

2025-12-18 19:26:05,844 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_photom_0013.rmap 958 bytes (14 / 212 files) (76.2 K / 762.3 K bytes)

2025-12-18 19:26:05,894 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pathloss_0011.rmap 1.2 K bytes (15 / 212 files) (77.1 K / 762.3 K bytes)

2025-12-18 19:26:05,932 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-whitelightstep_0001.rmap 777 bytes (16 / 212 files) (78.3 K / 762.3 K bytes)

2025-12-18 19:26:05,974 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-spec2pipeline_0013.rmap 2.1 K bytes (17 / 212 files) (79.1 K / 762.3 K bytes)

2025-12-18 19:26:06,007 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-resamplespecstep_0002.rmap 709 bytes (18 / 212 files) (81.2 K / 762.3 K bytes)

2025-12-18 19:26:06,128 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-refpixstep_0003.rmap 910 bytes (19 / 212 files) (81.9 K / 762.3 K bytes)

2025-12-18 19:26:06,164 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-outlierdetectionstep_0005.rmap 1.1 K bytes (20 / 212 files) (82.8 K / 762.3 K bytes)

2025-12-18 19:26:06,200 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-jumpstep_0005.rmap 810 bytes (21 / 212 files) (84.0 K / 762.3 K bytes)

2025-12-18 19:26:06,238 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-image2pipeline_0008.rmap 1.0 K bytes (22 / 212 files) (84.8 K / 762.3 K bytes)

2025-12-18 19:26:06,274 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-detector1pipeline_0003.rmap 1.1 K bytes (23 / 212 files) (85.8 K / 762.3 K bytes)

2025-12-18 19:26:06,414 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-darkpipeline_0003.rmap 872 bytes (24 / 212 files) (86.8 K / 762.3 K bytes)

2025-12-18 19:26:06,468 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-darkcurrentstep_0003.rmap 1.8 K bytes (25 / 212 files) (87.7 K / 762.3 K bytes)

2025-12-18 19:26:06,513 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ote_0030.rmap 1.3 K bytes (26 / 212 files) (89.5 K / 762.3 K bytes)

2025-12-18 19:26:06,550 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_msaoper_0017.rmap 1.6 K bytes (27 / 212 files) (90.8 K / 762.3 K bytes)

2025-12-18 19:26:06,588 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_msa_0027.rmap 1.3 K bytes (28 / 212 files) (92.4 K / 762.3 K bytes)

2025-12-18 19:26:06,624 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_mask_0044.rmap 4.3 K bytes (29 / 212 files) (93.6 K / 762.3 K bytes)

2025-12-18 19:26:06,663 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_linearity_0017.rmap 1.6 K bytes (30 / 212 files) (97.9 K / 762.3 K bytes)

2025-12-18 19:26:06,698 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ipc_0006.rmap 876 bytes (31 / 212 files) (99.5 K / 762.3 K bytes)

2025-12-18 19:26:06,740 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifuslicer_0017.rmap 1.5 K bytes (32 / 212 files) (100.4 K / 762.3 K bytes)

2025-12-18 19:26:06,782 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifupost_0019.rmap 1.5 K bytes (33 / 212 files) (101.9 K / 762.3 K bytes)

2025-12-18 19:26:06,822 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifufore_0017.rmap 1.5 K bytes (34 / 212 files) (103.4 K / 762.3 K bytes)

2025-12-18 19:26:06,943 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_gain_0023.rmap 1.8 K bytes (35 / 212 files) (104.9 K / 762.3 K bytes)

2025-12-18 19:26:06,983 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fpa_0028.rmap 1.3 K bytes (36 / 212 files) (106.7 K / 762.3 K bytes)

2025-12-18 19:26:07,039 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fore_0026.rmap 5.0 K bytes (37 / 212 files) (107.9 K / 762.3 K bytes)

2025-12-18 19:26:07,073 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_flat_0015.rmap 3.8 K bytes (38 / 212 files) (112.9 K / 762.3 K bytes)

2025-12-18 19:26:07,110 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fflat_0028.rmap 7.2 K bytes (39 / 212 files) (116.7 K / 762.3 K bytes)

2025-12-18 19:26:07,149 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_extract1d_0018.rmap 2.3 K bytes (40 / 212 files) (123.9 K / 762.3 K bytes)

2025-12-18 19:26:07,186 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_disperser_0028.rmap 5.7 K bytes (41 / 212 files) (126.2 K / 762.3 K bytes)

2025-12-18 19:26:07,227 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_dflat_0007.rmap 1.1 K bytes (42 / 212 files) (131.9 K / 762.3 K bytes)

2025-12-18 19:26:07,265 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_dark_0083.rmap 36.4 K bytes (43 / 212 files) (133.0 K / 762.3 K bytes)

2025-12-18 19:26:07,312 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_cubepar_0015.rmap 966 bytes (44 / 212 files) (169.4 K / 762.3 K bytes)

2025-12-18 19:26:07,451 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_collimator_0026.rmap 1.3 K bytes (45 / 212 files) (170.4 K / 762.3 K bytes)

2025-12-18 19:26:07,492 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_camera_0026.rmap 1.3 K bytes (46 / 212 files) (171.7 K / 762.3 K bytes)

2025-12-18 19:26:07,530 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_barshadow_0007.rmap 1.8 K bytes (47 / 212 files) (173.0 K / 762.3 K bytes)

2025-12-18 19:26:07,564 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_area_0018.rmap 6.3 K bytes (48 / 212 files) (174.8 K / 762.3 K bytes)

2025-12-18 19:26:07,598 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_apcorr_0009.rmap 5.6 K bytes (49 / 212 files) (181.1 K / 762.3 K bytes)

2025-12-18 19:26:07,636 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_0420.imap 5.8 K bytes (50 / 212 files) (186.7 K / 762.3 K bytes)

2025-12-18 19:26:07,670 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_wavelengthrange_0008.rmap 897 bytes (51 / 212 files) (192.5 K / 762.3 K bytes)

2025-12-18 19:26:07,707 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_trappars_0004.rmap 753 bytes (52 / 212 files) (193.4 K / 762.3 K bytes)

2025-12-18 19:26:07,742 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_trapdensity_0005.rmap 705 bytes (53 / 212 files) (194.1 K / 762.3 K bytes)

2025-12-18 19:26:07,781 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_throughput_0005.rmap 1.3 K bytes (54 / 212 files) (194.8 K / 762.3 K bytes)

2025-12-18 19:26:07,819 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_superbias_0033.rmap 8.0 K bytes (55 / 212 files) (196.1 K / 762.3 K bytes)

2025-12-18 19:26:07,853 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_specwcs_0016.rmap 3.1 K bytes (56 / 212 files) (204.1 K / 762.3 K bytes)

2025-12-18 19:26:07,894 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_specprofile_0008.rmap 2.4 K bytes (57 / 212 files) (207.2 K / 762.3 K bytes)

2025-12-18 19:26:07,959 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_speckernel_0006.rmap 1.0 K bytes (58 / 212 files) (209.6 K / 762.3 K bytes)

2025-12-18 19:26:07,995 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_sirskernel_0002.rmap 700 bytes (59 / 212 files) (210.6 K / 762.3 K bytes)

2025-12-18 19:26:08,028 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_saturation_0015.rmap 829 bytes (60 / 212 files) (211.3 K / 762.3 K bytes)

2025-12-18 19:26:08,067 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_readnoise_0011.rmap 987 bytes (61 / 212 files) (212.1 K / 762.3 K bytes)

2025-12-18 19:26:08,102 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_photom_0037.rmap 1.3 K bytes (62 / 212 files) (213.1 K / 762.3 K bytes)

2025-12-18 19:26:08,247 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_persat_0007.rmap 674 bytes (63 / 212 files) (214.4 K / 762.3 K bytes)

2025-12-18 19:26:08,283 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pathloss_0003.rmap 758 bytes (64 / 212 files) (215.1 K / 762.3 K bytes)

2025-12-18 19:26:08,320 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pastasoss_0005.rmap 818 bytes (65 / 212 files) (215.8 K / 762.3 K bytes)

2025-12-18 19:26:08,353 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-undersamplecorrectionstep_0001.rmap 904 bytes (66 / 212 files) (216.6 K / 762.3 K bytes)

2025-12-18 19:26:08,389 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-tweakregstep_0012.rmap 3.1 K bytes (67 / 212 files) (217.5 K / 762.3 K bytes)

2025-12-18 19:26:08,424 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-spec2pipeline_0009.rmap 1.2 K bytes (68 / 212 files) (220.7 K / 762.3 K bytes)

2025-12-18 19:26:08,458 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-sourcecatalogstep_0002.rmap 2.3 K bytes (69 / 212 files) (221.9 K / 762.3 K bytes)

2025-12-18 19:26:08,495 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-resamplestep_0002.rmap 687 bytes (70 / 212 files) (224.2 K / 762.3 K bytes)

2025-12-18 19:26:08,546 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-outlierdetectionstep_0004.rmap 2.7 K bytes (71 / 212 files) (224.9 K / 762.3 K bytes)

2025-12-18 19:26:08,590 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-jumpstep_0007.rmap 6.4 K bytes (72 / 212 files) (227.6 K / 762.3 K bytes)

2025-12-18 19:26:08,630 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-image2pipeline_0005.rmap 1.0 K bytes (73 / 212 files) (233.9 K / 762.3 K bytes)

2025-12-18 19:26:08,663 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-detector1pipeline_0002.rmap 1.0 K bytes (74 / 212 files) (235.0 K / 762.3 K bytes)

2025-12-18 19:26:08,697 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-darkpipeline_0002.rmap 868 bytes (75 / 212 files) (236.0 K / 762.3 K bytes)

2025-12-18 19:26:08,731 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-darkcurrentstep_0001.rmap 591 bytes (76 / 212 files) (236.9 K / 762.3 K bytes)

2025-12-18 19:26:08,770 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-chargemigrationstep_0005.rmap 5.7 K bytes (77 / 212 files) (237.5 K / 762.3 K bytes)

2025-12-18 19:26:08,807 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-backgroundstep_0003.rmap 822 bytes (78 / 212 files) (243.1 K / 762.3 K bytes)

2025-12-18 19:26:08,844 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_nrm_0005.rmap 663 bytes (79 / 212 files) (243.9 K / 762.3 K bytes)

2025-12-18 19:26:08,889 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_mask_0024.rmap 1.5 K bytes (80 / 212 files) (244.6 K / 762.3 K bytes)

2025-12-18 19:26:08,924 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_linearity_0022.rmap 961 bytes (81 / 212 files) (246.1 K / 762.3 K bytes)

2025-12-18 19:26:08,961 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_ipc_0007.rmap 651 bytes (82 / 212 files) (247.0 K / 762.3 K bytes)

2025-12-18 19:26:09,002 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_gain_0011.rmap 797 bytes (83 / 212 files) (247.7 K / 762.3 K bytes)

2025-12-18 19:26:09,039 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_flat_0023.rmap 5.9 K bytes (84 / 212 files) (248.5 K / 762.3 K bytes)

2025-12-18 19:26:09,075 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_filteroffset_0010.rmap 853 bytes (85 / 212 files) (254.3 K / 762.3 K bytes)

2025-12-18 19:26:09,112 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_extract1d_0007.rmap 905 bytes (86 / 212 files) (255.2 K / 762.3 K bytes)

2025-12-18 19:26:09,158 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_drizpars_0004.rmap 519 bytes (87 / 212 files) (256.1 K / 762.3 K bytes)

2025-12-18 19:26:09,192 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_distortion_0025.rmap 3.4 K bytes (88 / 212 files) (256.6 K / 762.3 K bytes)

2025-12-18 19:26:09,227 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_dark_0037.rmap 8.1 K bytes (89 / 212 files) (260.1 K / 762.3 K bytes)

2025-12-18 19:26:09,263 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_bkg_0005.rmap 3.1 K bytes (90 / 212 files) (268.2 K / 762.3 K bytes)

2025-12-18 19:26:09,304 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_area_0014.rmap 2.7 K bytes (91 / 212 files) (271.2 K / 762.3 K bytes)

2025-12-18 19:26:09,343 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_apcorr_0010.rmap 4.3 K bytes (92 / 212 files) (273.9 K / 762.3 K bytes)

2025-12-18 19:26:09,377 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_abvegaoffset_0004.rmap 1.4 K bytes (93 / 212 files) (278.2 K / 762.3 K bytes)

2025-12-18 19:26:09,414 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_0292.imap 5.8 K bytes (94 / 212 files) (279.6 K / 762.3 K bytes)

2025-12-18 19:26:09,456 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_wavelengthrange_0011.rmap 996 bytes (95 / 212 files) (285.3 K / 762.3 K bytes)

2025-12-18 19:26:09,491 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_tsophot_0003.rmap 896 bytes (96 / 212 files) (286.3 K / 762.3 K bytes)

2025-12-18 19:26:09,528 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_trappars_0003.rmap 1.6 K bytes (97 / 212 files) (287.2 K / 762.3 K bytes)

2025-12-18 19:26:09,566 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_trapdensity_0003.rmap 1.6 K bytes (98 / 212 files) (288.8 K / 762.3 K bytes)

2025-12-18 19:26:09,603 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_superbias_0020.rmap 19.6 K bytes (99 / 212 files) (290.5 K / 762.3 K bytes)

2025-12-18 19:26:09,647 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_specwcs_0024.rmap 8.0 K bytes (100 / 212 files) (310.0 K / 762.3 K bytes)

2025-12-18 19:26:09,685 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_sirskernel_0003.rmap 671 bytes (101 / 212 files) (318.0 K / 762.3 K bytes)

2025-12-18 19:26:09,756 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_saturation_0011.rmap 2.8 K bytes (102 / 212 files) (318.7 K / 762.3 K bytes)

2025-12-18 19:26:09,794 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_regions_0002.rmap 725 bytes (103 / 212 files) (321.5 K / 762.3 K bytes)

2025-12-18 19:26:09,830 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_readnoise_0027.rmap 26.6 K bytes (104 / 212 files) (322.2 K / 762.3 K bytes)

2025-12-18 19:26:09,870 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_psfmask_0008.rmap 28.4 K bytes (105 / 212 files) (348.8 K / 762.3 K bytes)

2025-12-18 19:26:09,911 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_photom_0028.rmap 3.4 K bytes (106 / 212 files) (377.2 K / 762.3 K bytes)

2025-12-18 19:26:09,946 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_persat_0005.rmap 1.6 K bytes (107 / 212 files) (380.5 K / 762.3 K bytes)

2025-12-18 19:26:09,985 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-whitelightstep_0004.rmap 2.0 K bytes (108 / 212 files) (382.1 K / 762.3 K bytes)

2025-12-18 19:26:10,024 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-tweakregstep_0003.rmap 4.5 K bytes (109 / 212 files) (384.1 K / 762.3 K bytes)

2025-12-18 19:26:10,062 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-tsophotometrystep_0003.rmap 1.1 K bytes (110 / 212 files) (388.6 K / 762.3 K bytes)

2025-12-18 19:26:10,096 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-spec2pipeline_0009.rmap 984 bytes (111 / 212 files) (389.6 K / 762.3 K bytes)

2025-12-18 19:26:10,133 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-sourcecatalogstep_0002.rmap 4.6 K bytes (112 / 212 files) (390.6 K / 762.3 K bytes)

2025-12-18 19:26:10,169 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-resamplestep_0002.rmap 687 bytes (113 / 212 files) (395.3 K / 762.3 K bytes)

2025-12-18 19:26:10,207 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-outlierdetectionstep_0003.rmap 940 bytes (114 / 212 files) (396.0 K / 762.3 K bytes)

2025-12-18 19:26:10,242 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-jumpstep_0005.rmap 806 bytes (115 / 212 files) (396.9 K / 762.3 K bytes)

2025-12-18 19:26:10,279 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-image2pipeline_0004.rmap 1.1 K bytes (116 / 212 files) (397.7 K / 762.3 K bytes)

2025-12-18 19:26:10,314 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-detector1pipeline_0006.rmap 1.7 K bytes (117 / 212 files) (398.8 K / 762.3 K bytes)

2025-12-18 19:26:10,350 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-darkpipeline_0002.rmap 868 bytes (118 / 212 files) (400.6 K / 762.3 K bytes)

2025-12-18 19:26:10,384 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-darkcurrentstep_0001.rmap 618 bytes (119 / 212 files) (401.4 K / 762.3 K bytes)

2025-12-18 19:26:10,418 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-backgroundstep_0003.rmap 822 bytes (120 / 212 files) (402.0 K / 762.3 K bytes)

2025-12-18 19:26:10,455 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_mask_0013.rmap 4.8 K bytes (121 / 212 files) (402.9 K / 762.3 K bytes)

2025-12-18 19:26:10,495 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_linearity_0011.rmap 2.4 K bytes (122 / 212 files) (407.6 K / 762.3 K bytes)

2025-12-18 19:26:10,531 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_ipc_0003.rmap 2.0 K bytes (123 / 212 files) (410.0 K / 762.3 K bytes)

2025-12-18 19:26:10,566 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_gain_0016.rmap 2.1 K bytes (124 / 212 files) (412.0 K / 762.3 K bytes)

2025-12-18 19:26:10,599 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_flat_0028.rmap 51.7 K bytes (125 / 212 files) (414.1 K / 762.3 K bytes)

2025-12-18 19:26:10,650 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_filteroffset_0004.rmap 1.4 K bytes (126 / 212 files) (465.8 K / 762.3 K bytes)

2025-12-18 19:26:10,683 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_extract1d_0005.rmap 1.2 K bytes (127 / 212 files) (467.2 K / 762.3 K bytes)

2025-12-18 19:26:10,719 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_drizpars_0001.rmap 519 bytes (128 / 212 files) (468.4 K / 762.3 K bytes)

2025-12-18 19:26:10,754 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_distortion_0034.rmap 53.4 K bytes (129 / 212 files) (468.9 K / 762.3 K bytes)

2025-12-18 19:26:10,811 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_dark_0049.rmap 29.6 K bytes (130 / 212 files) (522.3 K / 762.3 K bytes)

2025-12-18 19:26:10,855 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_bkg_0002.rmap 7.0 K bytes (131 / 212 files) (551.9 K / 762.3 K bytes)

2025-12-18 19:26:10,889 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_area_0012.rmap 33.5 K bytes (132 / 212 files) (558.9 K / 762.3 K bytes)

2025-12-18 19:26:10,928 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_apcorr_0008.rmap 4.3 K bytes (133 / 212 files) (592.4 K / 762.3 K bytes)

2025-12-18 19:26:10,962 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_abvegaoffset_0003.rmap 1.3 K bytes (134 / 212 files) (596.6 K / 762.3 K bytes)

2025-12-18 19:26:10,999 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_0333.imap 5.7 K bytes (135 / 212 files) (597.9 K / 762.3 K bytes)

2025-12-18 19:26:11,037 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_wavelengthrange_0029.rmap 1.0 K bytes (136 / 212 files) (603.6 K / 762.3 K bytes)

2025-12-18 19:26:11,076 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_tsophot_0004.rmap 882 bytes (137 / 212 files) (604.6 K / 762.3 K bytes)

2025-12-18 19:26:11,112 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_straymask_0009.rmap 987 bytes (138 / 212 files) (605.5 K / 762.3 K bytes)

2025-12-18 19:26:11,146 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_specwcs_0047.rmap 5.9 K bytes (139 / 212 files) (606.5 K / 762.3 K bytes)

2025-12-18 19:26:11,180 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_saturation_0015.rmap 1.2 K bytes (140 / 212 files) (612.4 K / 762.3 K bytes)

2025-12-18 19:26:11,214 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_rscd_0008.rmap 1.0 K bytes (141 / 212 files) (613.6 K / 762.3 K bytes)

2025-12-18 19:26:11,251 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_resol_0006.rmap 790 bytes (142 / 212 files) (614.6 K / 762.3 K bytes)

2025-12-18 19:26:11,295 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_reset_0026.rmap 3.9 K bytes (143 / 212 files) (615.4 K / 762.3 K bytes)

2025-12-18 19:26:11,330 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_regions_0036.rmap 4.4 K bytes (144 / 212 files) (619.3 K / 762.3 K bytes)

2025-12-18 19:26:11,365 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_readnoise_0023.rmap 1.6 K bytes (145 / 212 files) (623.6 K / 762.3 K bytes)

2025-12-18 19:26:11,401 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_psfmask_0009.rmap 2.1 K bytes (146 / 212 files) (625.3 K / 762.3 K bytes)

2025-12-18 19:26:11,439 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_psf_0008.rmap 2.6 K bytes (147 / 212 files) (627.4 K / 762.3 K bytes)

2025-12-18 19:26:11,474 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_photom_0062.rmap 3.7 K bytes (148 / 212 files) (630.0 K / 762.3 K bytes)

2025-12-18 19:26:11,509 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pathloss_0005.rmap 866 bytes (149 / 212 files) (633.8 K / 762.3 K bytes)

2025-12-18 19:26:11,545 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-whitelightstep_0003.rmap 912 bytes (150 / 212 files) (634.6 K / 762.3 K bytes)

2025-12-18 19:26:11,580 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-tweakregstep_0003.rmap 1.8 K bytes (151 / 212 files) (635.5 K / 762.3 K bytes)

2025-12-18 19:26:11,629 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-tsophotometrystep_0003.rmap 2.7 K bytes (152 / 212 files) (637.4 K / 762.3 K bytes)

2025-12-18 19:26:11,668 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-spec3pipeline_0011.rmap 886 bytes (153 / 212 files) (640.0 K / 762.3 K bytes)

2025-12-18 19:26:11,712 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-spec2pipeline_0013.rmap 1.4 K bytes (154 / 212 files) (640.9 K / 762.3 K bytes)

2025-12-18 19:26:11,749 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-sourcecatalogstep_0003.rmap 1.9 K bytes (155 / 212 files) (642.3 K / 762.3 K bytes)

2025-12-18 19:26:11,789 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-resamplestep_0002.rmap 677 bytes (156 / 212 files) (644.2 K / 762.3 K bytes)

2025-12-18 19:26:11,853 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-resamplespecstep_0002.rmap 706 bytes (157 / 212 files) (644.9 K / 762.3 K bytes)

2025-12-18 19:26:11,890 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-outlierdetectionstep_0020.rmap 3.4 K bytes (158 / 212 files) (645.6 K / 762.3 K bytes)

2025-12-18 19:26:11,924 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-jumpstep_0011.rmap 1.6 K bytes (159 / 212 files) (649.0 K / 762.3 K bytes)

2025-12-18 19:26:11,966 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-image2pipeline_0010.rmap 1.1 K bytes (160 / 212 files) (650.6 K / 762.3 K bytes)

2025-12-18 19:26:12,008 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-extract1dstep_0003.rmap 807 bytes (161 / 212 files) (651.7 K / 762.3 K bytes)

2025-12-18 19:26:12,048 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-emicorrstep_0003.rmap 796 bytes (162 / 212 files) (652.5 K / 762.3 K bytes)

2025-12-18 19:26:12,086 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-detector1pipeline_0010.rmap 1.6 K bytes (163 / 212 files) (653.3 K / 762.3 K bytes)

2025-12-18 19:26:12,128 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-darkpipeline_0002.rmap 860 bytes (164 / 212 files) (654.9 K / 762.3 K bytes)

2025-12-18 19:26:12,165 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-darkcurrentstep_0002.rmap 683 bytes (165 / 212 files) (655.7 K / 762.3 K bytes)

2025-12-18 19:26:12,203 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-backgroundstep_0003.rmap 814 bytes (166 / 212 files) (656.4 K / 762.3 K bytes)

2025-12-18 19:26:12,240 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mrsxartcorr_0002.rmap 2.2 K bytes (167 / 212 files) (657.2 K / 762.3 K bytes)

2025-12-18 19:26:12,281 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mrsptcorr_0005.rmap 2.0 K bytes (168 / 212 files) (659.4 K / 762.3 K bytes)

2025-12-18 19:26:12,316 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mask_0032.rmap 7.3 K bytes (169 / 212 files) (661.3 K / 762.3 K bytes)

2025-12-18 19:26:12,355 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_linearity_0018.rmap 2.8 K bytes (170 / 212 files) (668.6 K / 762.3 K bytes)

2025-12-18 19:26:12,390 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_ipc_0008.rmap 700 bytes (171 / 212 files) (671.4 K / 762.3 K bytes)

2025-12-18 19:26:12,432 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_gain_0013.rmap 3.9 K bytes (172 / 212 files) (672.1 K / 762.3 K bytes)

2025-12-18 19:26:12,480 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_fringefreq_0003.rmap 1.4 K bytes (173 / 212 files) (676.1 K / 762.3 K bytes)

2025-12-18 19:26:12,522 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_fringe_0019.rmap 3.9 K bytes (174 / 212 files) (677.5 K / 762.3 K bytes)

2025-12-18 19:26:12,571 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_flat_0071.rmap 15.7 K bytes (175 / 212 files) (681.4 K / 762.3 K bytes)

2025-12-18 19:26:12,612 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_filteroffset_0027.rmap 2.1 K bytes (176 / 212 files) (697.1 K / 762.3 K bytes)

2025-12-18 19:26:12,650 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_extract1d_0021.rmap 1.4 K bytes (177 / 212 files) (699.2 K / 762.3 K bytes)

2025-12-18 19:26:12,700 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_emicorr_0004.rmap 663 bytes (178 / 212 files) (700.6 K / 762.3 K bytes)

2025-12-18 19:26:12,738 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_drizpars_0002.rmap 511 bytes (179 / 212 files) (701.3 K / 762.3 K bytes)

2025-12-18 19:26:12,778 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_distortion_0042.rmap 4.8 K bytes (180 / 212 files) (701.8 K / 762.3 K bytes)

2025-12-18 19:26:12,812 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_dark_0037.rmap 4.4 K bytes (181 / 212 files) (706.6 K / 762.3 K bytes)

2025-12-18 19:26:12,850 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_cubepar_0017.rmap 800 bytes (182 / 212 files) (711.0 K / 762.3 K bytes)

2025-12-18 19:26:12,886 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_bkg_0003.rmap 712 bytes (183 / 212 files) (711.8 K / 762.3 K bytes)

2025-12-18 19:26:12,922 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_area_0015.rmap 866 bytes (184 / 212 files) (712.5 K / 762.3 K bytes)

2025-12-18 19:26:12,962 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_apcorr_0022.rmap 5.0 K bytes (185 / 212 files) (713.3 K / 762.3 K bytes)

2025-12-18 19:26:13,005 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_abvegaoffset_0003.rmap 1.3 K bytes (186 / 212 files) (718.3 K / 762.3 K bytes)

2025-12-18 19:26:13,046 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_0468.imap 5.9 K bytes (187 / 212 files) (719.6 K / 762.3 K bytes)

2025-12-18 19:26:13,083 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_trappars_0004.rmap 903 bytes (188 / 212 files) (725.5 K / 762.3 K bytes)

2025-12-18 19:26:13,118 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_trapdensity_0006.rmap 930 bytes (189 / 212 files) (726.4 K / 762.3 K bytes)

2025-12-18 19:26:13,153 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_superbias_0017.rmap 3.8 K bytes (190 / 212 files) (727.3 K / 762.3 K bytes)

2025-12-18 19:26:13,197 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_saturation_0009.rmap 779 bytes (191 / 212 files) (731.1 K / 762.3 K bytes)

2025-12-18 19:26:13,231 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_readnoise_0014.rmap 1.3 K bytes (192 / 212 files) (731.9 K / 762.3 K bytes)

2025-12-18 19:26:13,273 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_photom_0014.rmap 1.1 K bytes (193 / 212 files) (733.1 K / 762.3 K bytes)

2025-12-18 19:26:13,314 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_persat_0006.rmap 884 bytes (194 / 212 files) (734.2 K / 762.3 K bytes)

2025-12-18 19:26:13,350 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-tweakregstep_0002.rmap 850 bytes (195 / 212 files) (735.1 K / 762.3 K bytes)

2025-12-18 19:26:13,400 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-sourcecatalogstep_0001.rmap 636 bytes (196 / 212 files) (736.0 K / 762.3 K bytes)

2025-12-18 19:26:13,440 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-outlierdetectionstep_0001.rmap 654 bytes (197 / 212 files) (736.6 K / 762.3 K bytes)

2025-12-18 19:26:13,483 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-image2pipeline_0005.rmap 974 bytes (198 / 212 files) (737.3 K / 762.3 K bytes)

2025-12-18 19:26:13,519 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-detector1pipeline_0002.rmap 1.0 K bytes (199 / 212 files) (738.2 K / 762.3 K bytes)

2025-12-18 19:26:13,555 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-darkpipeline_0002.rmap 856 bytes (200 / 212 files) (739.3 K / 762.3 K bytes)

2025-12-18 19:26:13,594 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_mask_0023.rmap 1.1 K bytes (201 / 212 files) (740.1 K / 762.3 K bytes)

2025-12-18 19:26:13,633 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_linearity_0015.rmap 925 bytes (202 / 212 files) (741.2 K / 762.3 K bytes)

2025-12-18 19:26:13,671 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_ipc_0003.rmap 614 bytes (203 / 212 files) (742.1 K / 762.3 K bytes)

2025-12-18 19:26:13,709 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_gain_0010.rmap 890 bytes (204 / 212 files) (742.7 K / 762.3 K bytes)

2025-12-18 19:26:13,746 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_flat_0009.rmap 1.1 K bytes (205 / 212 files) (743.6 K / 762.3 K bytes)

2025-12-18 19:26:13,784 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_distortion_0011.rmap 1.2 K bytes (206 / 212 files) (744.7 K / 762.3 K bytes)

2025-12-18 19:26:13,824 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_dark_0017.rmap 4.3 K bytes (207 / 212 files) (746.0 K / 762.3 K bytes)

2025-12-18 19:26:13,863 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_area_0010.rmap 1.2 K bytes (208 / 212 files) (750.3 K / 762.3 K bytes)

2025-12-18 19:26:13,900 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_apcorr_0004.rmap 4.0 K bytes (209 / 212 files) (751.4 K / 762.3 K bytes)

2025-12-18 19:26:13,936 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_abvegaoffset_0002.rmap 1.3 K bytes (210 / 212 files) (755.4 K / 762.3 K bytes)

2025-12-18 19:26:13,972 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_0124.imap 5.1 K bytes (211 / 212 files) (756.6 K / 762.3 K bytes)

2025-12-18 19:26:14,014 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_1464.pmap 580 bytes (212 / 212 files) (761.7 K / 762.3 K bytes)
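All 212 mapping files listed above are fetched into `/home/runner/crds`, presumably because the CRDS cache on this runner held none of them yet. The sketch below shows one way to point CRDS at a persistent cache directory before the pipeline is imported, so that later runs can reuse files already downloaded. The helper name `configure_crds` and the example directory in the usage comment are hypothetical. `CRDS_PATH` and `CRDS_SERVER_URL` are the standard CRDS environment variables, and the URL is the public JWST CRDS server.

```python
import os
from pathlib import Path


def configure_crds(cache_dir, server_url="https://jwst-crds.stsci.edu"):
    """Set CRDS environment variables unless they are already defined.

    `configure_crds` is a hypothetical helper, not part of the jwst or crds
    packages. Existing values are left untouched so that a user's own
    configuration takes precedence.
    """
    cache = Path(cache_dir).expanduser()
    cache.mkdir(parents=True, exist_ok=True)  # create the cache directory if missing
    os.environ.setdefault("CRDS_PATH", str(cache))
    os.environ.setdefault("CRDS_SERVER_URL", server_url)
    return os.environ["CRDS_PATH"], os.environ["CRDS_SERVER_URL"]


# Example usage (the directory name is an assumption); call this before
# importing the jwst package:
# configure_crds("~/crds_cache")
```

Using `setdefault` rather than plain assignment is a deliberate choice here: it avoids silently overriding a cache location that was already configured in the shell.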

2025-12-18 19:26:14,215 - CRDS - ERROR - Error determining best reference for 'pars-darkcurrentstep' = No match found.

2025-12-18 19:26:14,219 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0038.asdf 2.1 K bytes (1 / 1 files) (0 / 2.1 K bytes)

2025-12-18 19:26:14,261 - stpipe.step - INFO - PARS-CHARGEMIGRATIONSTEP parameters found: /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0038.asdf

2025-12-18 19:26:14,272 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-jumpstep_0072.asdf 1.6 K bytes (1 / 1 files) (0 / 1.6 K bytes)

2025-12-18 19:26:14,311 - stpipe.step - INFO - PARS-JUMPSTEP parameters found: /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-jumpstep_0072.asdf

2025-12-18 19:26:14,325 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-detector1pipeline_0001.asdf 1.1 K bytes (1 / 1 files) (0 / 1.1 K bytes)

2025-12-18 19:26:14,367 - stpipe.pipeline - INFO - PARS-DETECTOR1PIPELINE parameters found: /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-detector1pipeline_0001.asdf
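The entries above contain a single ERROR record among several hundred INFO records, and the run continues past it. When reviewing a long log like this one, it may help to filter by level. The following is a minimal sketch that assumes the `timestamp - logger - LEVEL - message` layout seen in this output. The names `LOG_LINE`, `parse_log` and `problems` are illustrative only and are not part of the pipeline.

```python
import re

# Matches lines such as:
# 2025-12-18 19:26:14,215 - CRDS - ERROR - Error determining best reference ...
LOG_LINE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})"
    r" - (?P<logger>\S+) - (?P<level>[A-Z]+) - (?P<message>.*)$"
)


def parse_log(text):
    """Return one dict per line that matches the log layout; skip other lines."""
    records = []
    for line in text.splitlines():
        match = LOG_LINE.match(line.strip())
        if match:
            records.append(match.groupdict())
    return records


def problems(records, levels=("WARNING", "ERROR", "CRITICAL")):
    """Keep only the records whose level is in `levels`."""
    return [r for r in records if r["level"] in levels]


# Three lines copied from the log above, used as sample input.
sample = """
2025-12-18 19:26:14,014 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_1464.pmap 580 bytes (212 / 212 files) (761.7 K / 762.3 K bytes)

2025-12-18 19:26:14,215 - CRDS - ERROR - Error determining best reference for 'pars-darkcurrentstep' = No match found.

2025-12-18 19:26:14,261 - stpipe.step - INFO - PARS-CHARGEMIGRATIONSTEP parameters found: /home/runner/crds/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0038.asdf
"""

records = parse_log(sample)
for record in problems(records):
    print(record["timestamp"], record["logger"], record["message"])
```

Lines that do not match the layout, such as the parameter listing printed later in the run, are ignored rather than raising an error.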

2025-12-18 19:26:14,382 - stpipe.step - INFO - Detector1Pipeline instance created.

2025-12-18 19:26:14,383 - stpipe.step - INFO - GroupScaleStep instance created.

2025-12-18 19:26:14,384 - stpipe.step - INFO - DQInitStep instance created.

2025-12-18 19:26:14,385 - stpipe.step - INFO - EmiCorrStep instance created.

2025-12-18 19:26:14,386 - stpipe.step - INFO - SaturationStep instance created.

2025-12-18 19:26:14,387 - stpipe.step - INFO - IPCStep instance created.

2025-12-18 19:26:14,387 - stpipe.step - INFO - SuperBiasStep instance created.

2025-12-18 19:26:14,388 - stpipe.step - INFO - RefPixStep instance created.

2025-12-18 19:26:14,389 - stpipe.step - INFO - RscdStep instance created.

2025-12-18 19:26:14,391 - stpipe.step - INFO - FirstFrameStep instance created.

2025-12-18 19:26:14,392 - stpipe.step - INFO - LastFrameStep instance created.

2025-12-18 19:26:14,392 - stpipe.step - INFO - LinearityStep instance created.

2025-12-18 19:26:14,393 - stpipe.step - INFO - DarkCurrentStep instance created.

2025-12-18 19:26:14,394 - stpipe.step - INFO - ResetStep instance created.

2025-12-18 19:26:14,395 - stpipe.step - INFO - PersistenceStep instance created.

2025-12-18 19:26:14,396 - stpipe.step - INFO - ChargeMigrationStep instance created.

2025-12-18 19:26:14,398 - stpipe.step - INFO - JumpStep instance created.

2025-12-18 19:26:14,399 - stpipe.step - INFO - CleanFlickerNoiseStep instance created.

2025-12-18 19:26:14,400 - stpipe.step - INFO - RampFitStep instance created.

2025-12-18 19:26:14,401 - stpipe.step - INFO - GainScaleStep instance created.

2025-12-18 19:26:14,545 - stpipe.step - INFO - Step Detector1Pipeline running with args ('./nis_ami_demo_data/PID_1093/uncal/jw01093012001_03102_00001_nis_uncal.fits',).
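For orientation, a call of roughly the following shape could produce a run like the one logged here. This is a sketch under stated assumptions, not the notebook's actual cell. The helper names `build_detector1_overrides` and `run_detector1` are hypothetical, and the `skip` values simply mirror what the parameter listing for this run reports (`emicorr`, `ipc`, `dark_current` and `persistence` skipped). The log does not show whether those values come from the notebook's configuration, from CRDS parameter references, or from step defaults. `Detector1Pipeline.call` with the `steps`, `save_results`, `save_calibrated_ramp` and `output_dir` arguments is the standard `jwst` interface.

```python
from collections import defaultdict


def build_detector1_overrides():
    """Per-step overrides mirroring the skip flags reported in the log.

    Hypothetical helper: whether the notebook sets these explicitly is an
    assumption.
    """
    steps = defaultdict(dict)
    for name in ("emicorr", "ipc", "dark_current", "persistence"):
        steps[name]["skip"] = True
    return steps


def run_detector1(uncal_file, output_dir):
    """Run stage 1 on one uncal file and write the results to `output_dir`."""
    # Imported lazily so the overrides can be built without jwst installed.
    from jwst.pipeline import Detector1Pipeline

    return Detector1Pipeline.call(
        uncal_file,
        steps=dict(build_detector1_overrides()),
        save_results=True,
        save_calibrated_ramp=True,
        output_dir=output_dir,
    )


# Example usage with the paths shown in the log:
# run_detector1(
#     "./nis_ami_demo_data/PID_1093/uncal/jw01093012001_03102_00001_nis_uncal.fits",
#     "./nis_ami_demo_data/PID_1093/stage1",
# )
```

Keeping the overrides in a separate function makes it possible to inspect or adjust them before starting a run.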

2025-12-18 19:26:14,566 - stpipe.step - INFO - Step Detector1Pipeline parameters are:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: ./nis_ami_demo_data/PID_1093/stage1

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: True

skip: False

suffix: None

search_output_file: True

input_dir: ''

save_calibrated_ramp: True

steps:

group_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dq_init:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

emicorr:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

algorithm: joint

nints_to_phase: None

nbins: None

scale_reference: True

onthefly_corr_freq: None

use_n_cycles: 3

fit_ints_separately: False

user_supplied_reffile: None

save_intermediate_results: False

saturation:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

n_pix_grow_sat: 1

use_readpatt: True

ipc:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

superbias:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

refpix:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

odd_even_columns: True

use_side_ref_pixels: True

side_smoothing_length: 11

side_gain: 1.0

odd_even_rows: True

ovr_corr_mitigation_ftr: 3.0

preserve_irs2_refpix: False

irs2_mean_subtraction: False

refpix_algorithm: median

sigreject: 4.0

gaussmooth: 1.0

halfwidth: 30

rscd:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

firstframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

bright_use_group1: True

lastframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

linearity:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_current:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

dark_output: None

average_dark_current: None

reset:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

persistence:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

input_trapsfilled: ''

flag_pers_cutoff: 40.0

save_persistence: False

save_trapsfilled: True

modify_input: False

charge_migration:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

signal_threshold: 14465.0

jump:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

rejection_threshold: 4.0

three_group_rejection_threshold: 6.0

four_group_rejection_threshold: 5.0

maximum_cores: half

flag_4_neighbors: False

max_jump_to_flag_neighbors: 200.0

min_jump_to_flag_neighbors: 10.0

after_jump_flag_dn1: 1000

after_jump_flag_time1: 90

after_jump_flag_dn2: 0

after_jump_flag_time2: 0

expand_large_events: True

min_sat_area: 5

min_jump_area: 15.0

expand_factor: 1.75

use_ellipses: False

sat_required_snowball: True

min_sat_radius_extend: 5.0

sat_expand: 0

edge_size: 20

mask_snowball_core_next_int: True

snowball_time_masked_next_int: 4000

find_showers: False

max_shower_amplitude: 4.0

extend_snr_threshold: 1.2

extend_min_area: 90

extend_inner_radius: 1.0

extend_outer_radius: 2.6

extend_ellipse_expand_ratio: 1.1

time_masked_after_shower: 15.0

min_diffs_single_pass: 10

max_extended_radius: 100

minimum_groups: 3

minimum_sigclip_groups: 100

only_use_ints: True

clean_flicker_noise:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

autoparam: False

fit_method: median

fit_by_channel: False

background_method: median

background_box_size: None

mask_science_regions: False

apply_flat_field: False

n_sigma: 2.0

fit_histogram: False

single_mask: True

user_mask: None

save_mask: False

save_background: False

save_noise: False

ramp_fit:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

algorithm: OLS_C

int_name: ''

save_opt: False

opt_name: ''

suppress_one_group: True

firstgroup: None

lastgroup: None

maximum_cores: '1'

gain_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''
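The listing above follows the nested step/parameter layout that the pipeline also accepts for overrides. As a minimal sketch, the snippet below builds such a dictionary. The helper name `build_det1_overrides` is hypothetical, the step list covers only the steps visible in this part of the listing, and the values restate what the listing reports for this run. The dictionary could then be handed to the `steps` argument of `Detector1Pipeline.call`. That call is left as a comment, with placeholder names `uncal_file` and `det1_dir`, so the snippet runs without the `jwst` package installed.

```python
from copy import deepcopy

# Step names as they appear in the listing above (partial; earlier steps
# such as group_scale or dq_init are not included in this sketch).
DET1_STEPS = [
    "superbias", "refpix", "rscd", "firstframe", "lastframe", "linearity",
    "dark_current", "reset", "persistence", "charge_migration", "jump",
    "clean_flicker_noise", "ramp_fit", "gain_scale",
]


def build_det1_overrides(overrides):
    """Return a {step: {param: value}} dict with an entry for every listed step.

    Hypothetical helper: steps without overrides get an empty dict, which
    leaves the pipeline defaults (or CRDS parameter references) in effect.
    """
    unknown = set(overrides) - set(DET1_STEPS)
    if unknown:
        raise KeyError(f"Unknown step name(s): {sorted(unknown)}")
    det1dict = {step: {} for step in DET1_STEPS}
    for step, params in overrides.items():
        det1dict[step].update(deepcopy(params))
    return det1dict


# Values copied from the parameter listing for this run.
det1dict = build_det1_overrides({
    "dark_current": {"skip": True},
    "persistence": {"skip": True},
    "charge_migration": {"skip": False, "signal_threshold": 14465.0},
})

# With jwst installed, the dictionary would typically be used like this
# (uncal_file and det1_dir are placeholders, not names from this notebook):
# from jwst.pipeline import Detector1Pipeline
# Detector1Pipeline.call(uncal_file, steps=det1dict,
#                        save_results=True, output_dir=det1_dir)
```

Keeping an entry for every step, even an empty one, makes it easy to see at a glance which steps were deliberately changed and which were left at their defaults.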

2025-12-18 19:26:14,591 - stpipe.pipeline - INFO - Prefetching reference files for dataset: 'jw01093012001_03102_00001_nis_uncal.fits' reftypes = ['gain', 'linearity', 'mask', 'readnoise', 'refpix', 'reset', 'rscd', 'saturation', 'sirskernel', 'superbias']

2025-12-18 19:26:14,593 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_gain_0006.fits 16.8 M bytes (1 / 7 files) (0 / 289.7 M bytes)

2025-12-18 19:26:14,777 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_linearity_0017.fits 205.5 M bytes (2 / 7 files) (16.8 M / 289.7 M bytes)

2025-12-18 19:26:19,984 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_mask_0016.fits 16.8 M bytes (3 / 7 files) (222.3 M / 289.7 M bytes)

2025-12-18 19:26:20,286 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_readnoise_0005.fits 16.8 M bytes (4 / 7 files) (239.1 M / 289.7 M bytes)

2025-12-18 19:26:20,647 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_saturation_0015.fits 33.6 M bytes (5 / 7 files) (255.9 M / 289.7 M bytes)

2025-12-18 19:26:21,366 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_sirskernel_0001.asdf 67.4 K bytes (6 / 7 files) (289.5 M / 289.7 M bytes)

2025-12-18 19:26:21,421 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/niriss/jwst_niriss_superbias_0182.fits 97.9 K bytes (7 / 7 files) (289.6 M / 289.7 M bytes)

2025-12-18 19:26:21,482 - stpipe.pipeline - INFO - Prefetch for GAIN reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_gain_0006.fits'.

2025-12-18 19:26:21,483 - stpipe.pipeline - INFO - Prefetch for LINEARITY reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_linearity_0017.fits'.

2025-12-18 19:26:21,483 - stpipe.pipeline - INFO - Prefetch for MASK reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_mask_0016.fits'.

2025-12-18 19:26:21,484 - stpipe.pipeline - INFO - Prefetch for READNOISE reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_readnoise_0005.fits'.

2025-12-18 19:26:21,485 - stpipe.pipeline - INFO - Prefetch for REFPIX reference file is 'N/A'.

2025-12-18 19:26:21,485 - stpipe.pipeline - INFO - Prefetch for RESET reference file is 'N/A'.

2025-12-18 19:26:21,486 - stpipe.pipeline - INFO - Prefetch for RSCD reference file is 'N/A'.

2025-12-18 19:26:21,486 - stpipe.pipeline - INFO - Prefetch for SATURATION reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_saturation_0015.fits'.

2025-12-18 19:26:21,487 - stpipe.pipeline - INFO - Prefetch for SIRSKERNEL reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_sirskernel_0001.asdf'.

2025-12-18 19:26:21,488 - stpipe.pipeline - INFO - Prefetch for SUPERBIAS reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_superbias_0182.fits'.
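The prefetch messages above pair each reference type with either a file path or `'N/A'`. As a rough sketch, assuming the log has been captured as text, the pairs can be collected into a dictionary to see which reference types do not apply to this dataset. The function name `parse_prefetch` and the regular expression are illustrative and not part of the pipeline; the sample lines are copied from the log above.

```python
import re

# Matches lines such as:
#   ... Prefetch for GAIN reference file is '/path/to/file.fits'.
PREFETCH_RE = re.compile(
    r"Prefetch for (?P<reftype>\w+) reference file is '(?P<path>[^']*)'"
)


def parse_prefetch(log_text):
    """Map each lowercase reference type to its path, or None when 'N/A'."""
    refs = {}
    for match in PREFETCH_RE.finditer(log_text):
        path = match.group("path")
        refs[match.group("reftype").lower()] = None if path == "N/A" else path
    return refs


sample_log = """\
2025-12-18 19:26:21,482 - stpipe.pipeline - INFO - Prefetch for GAIN reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_gain_0006.fits'.
2025-12-18 19:26:21,485 - stpipe.pipeline - INFO - Prefetch for REFPIX reference file is 'N/A'.
2025-12-18 19:26:21,488 - stpipe.pipeline - INFO - Prefetch for SUPERBIAS reference file is '/home/runner/crds/references/jwst/niriss/jwst_niriss_superbias_0182.fits'.
"""

refs = parse_prefetch(sample_log)
# Reference types reported as not applicable in the sample lines.
not_applicable = sorted(name for name, path in refs.items() if path is None)
print(not_applicable)
```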

2025-12-18 19:26:21,488 - jwst.pipeline.calwebb_detector1 - INFO - Starting calwebb_detector1 ...

2025-12-18 19:26:21,733 - stpipe.step - INFO - Step group_scale running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:21,751 - jwst.group_scale.group_scale_step - INFO - NFRAMES and FRMDIVSR are equal; correction not needed

2025-12-18 19:26:21,751 - jwst.group_scale.group_scale_step - INFO - Step will be skipped

2025-12-18 19:26:21,753 - stpipe.step - INFO - Step group_scale done

2025-12-18 19:26:21,900 - stpipe.step - INFO - Step dq_init running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:21,911 - jwst.dq_init.dq_init_step - INFO - Using MASK reference file /home/runner/crds/references/jwst/niriss/jwst_niriss_mask_0016.fits

2025-12-18 19:26:21,992 - stdatamodels.dynamicdq - WARNING - Keyword NON_LINEAR does not correspond to an existing DQ mnemonic, so will be ignored

2025-12-18 19:26:22,025 - jwst.dq_init.dq_initialization - INFO - Extracting mask subarray to match science data

2025-12-18 19:26:22,035 - CRDS - INFO - Calibration SW Found: jwst 1.20.2 (/usr/share/miniconda/lib/python3.13/site-packages/jwst-1.20.2.dist-info)

2025-12-18 19:26:23,272 - stpipe.step - INFO - Step dq_init done

2025-12-18 19:26:23,427 - stpipe.step - INFO - Step saturation running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:23,448 - jwst.saturation.saturation_step - INFO - Using SATURATION reference file /home/runner/crds/references/jwst/niriss/jwst_niriss_saturation_0015.fits

2025-12-18 19:26:23,449 - jwst.saturation.saturation_step - INFO - Using SUPERBIAS reference file /home/runner/crds/references/jwst/niriss/jwst_niriss_superbias_0182.fits

2025-12-18 19:26:23,481 - stdatamodels.dynamicdq - WARNING - Keyword LOWILLUM does not correspond to an existing DQ mnemonic, so will be ignored

2025-12-18 19:26:23,482 - stdatamodels.dynamicdq - WARNING - Keyword LOWRESP does not correspond to an existing DQ mnemonic, so will be ignored

2025-12-18 19:26:23,488 - stdatamodels.dynamicdq - WARNING - Keyword UNCERTAIN does not correspond to an existing DQ mnemonic, so will be ignored

2025-12-18 19:26:23,511 - jwst.saturation.saturation - INFO - Extracting reference file subarray to match science data

2025-12-18 19:26:23,518 - jwst.saturation.saturation - INFO - Using read_pattern with nframes 1

2025-12-18 19:26:23,586 - stcal.saturation.saturation - INFO - Detected 0 saturated pixels

2025-12-18 19:26:23,588 - stcal.saturation.saturation - INFO - Detected 0 A/D floor pixels

2025-12-18 19:26:23,592 - stpipe.step - INFO - Step saturation done

2025-12-18 19:26:23,750 - stpipe.step - INFO - Step ipc running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:23,751 - stpipe.step - INFO - Step skipped.

2025-12-18 19:26:23,907 - stpipe.step - INFO - Step superbias running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:23,934 - jwst.superbias.superbias_step - INFO - Using SUPERBIAS reference file /home/runner/crds/references/jwst/niriss/jwst_niriss_superbias_0182.fits

2025-12-18 19:26:23,957 - stpipe.step - INFO - Step superbias done

2025-12-18 19:26:24,118 - stpipe.step - INFO - Step refpix running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:24,138 - jwst.refpix.reference_pixels - INFO - NIR subarray data

2025-12-18 19:26:24,141 - jwst.refpix.reference_pixels - INFO - Single readout amplifier used

2025-12-18 19:26:24,142 - jwst.refpix.reference_pixels - INFO - No valid reference pixels. This step will have no effect.

2025-12-18 19:26:25,073 - stpipe.step - INFO - Step refpix done

2025-12-18 19:26:25,230 - stpipe.step - INFO - Step linearity running with args (<RampModel(69, 5, 80, 80) from jw01093012001_03102_00001_nis_uncal.fits>,).

2025-12-18 19:26:25,253 - jwst.linearity.linearity_step - INFO - Using Linearity reference file /home/runner/crds/references/jwst/niriss/jwst_niriss_linearity_0017.fits

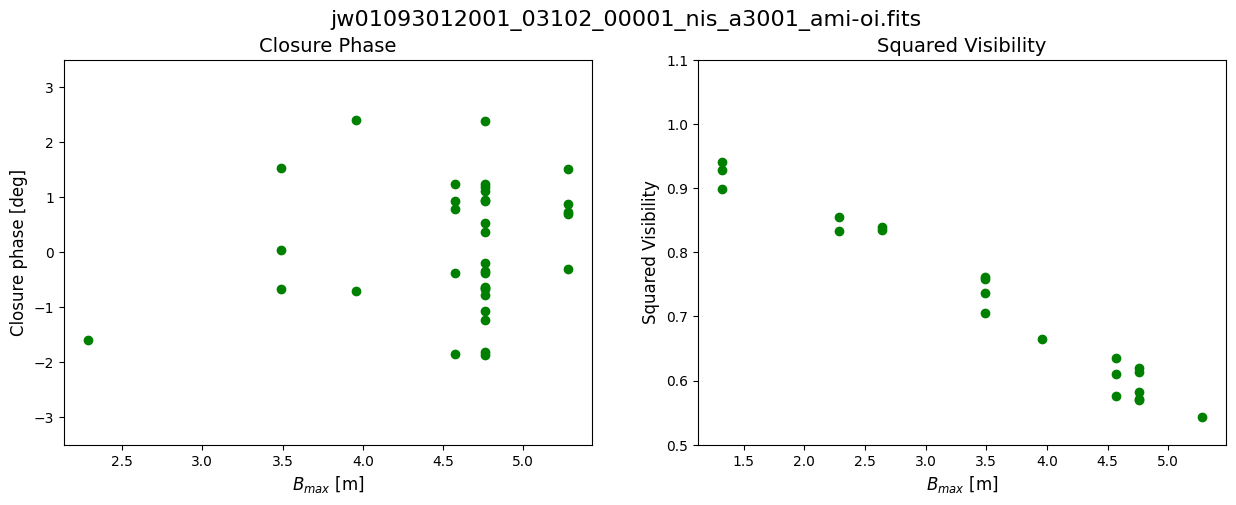

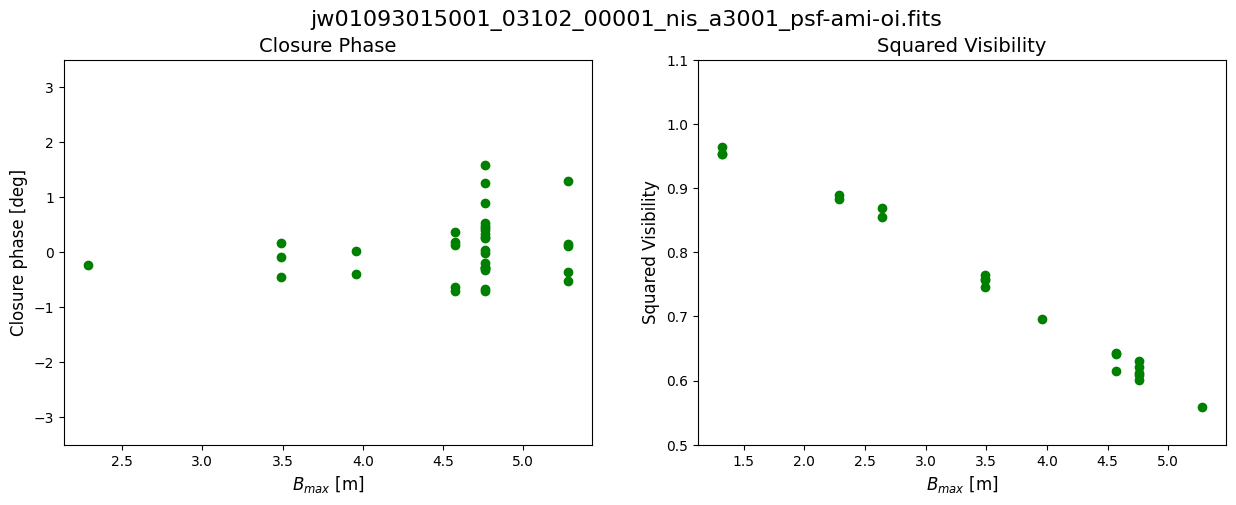

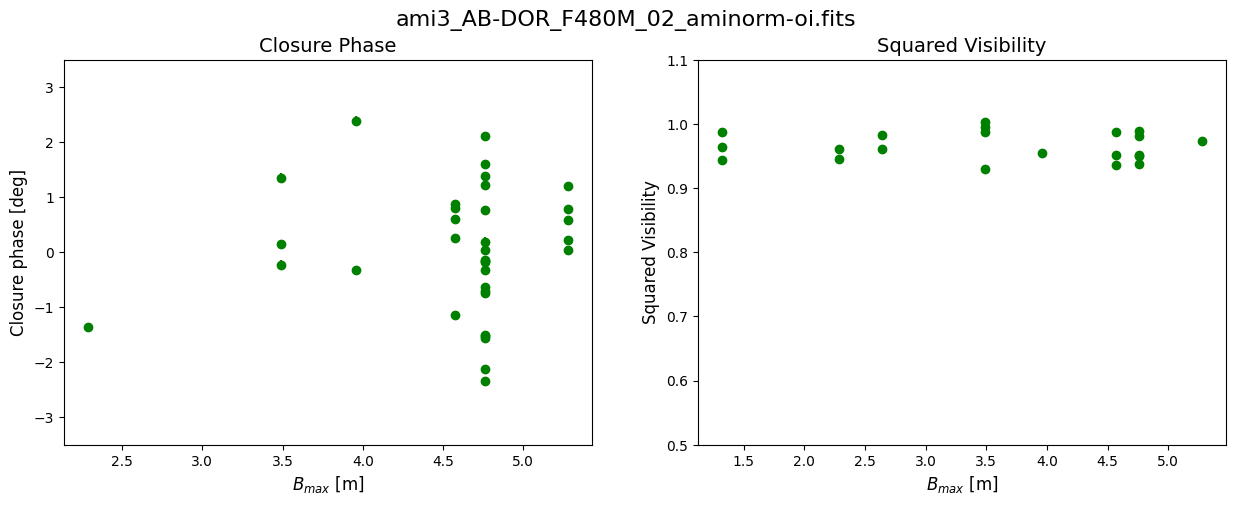

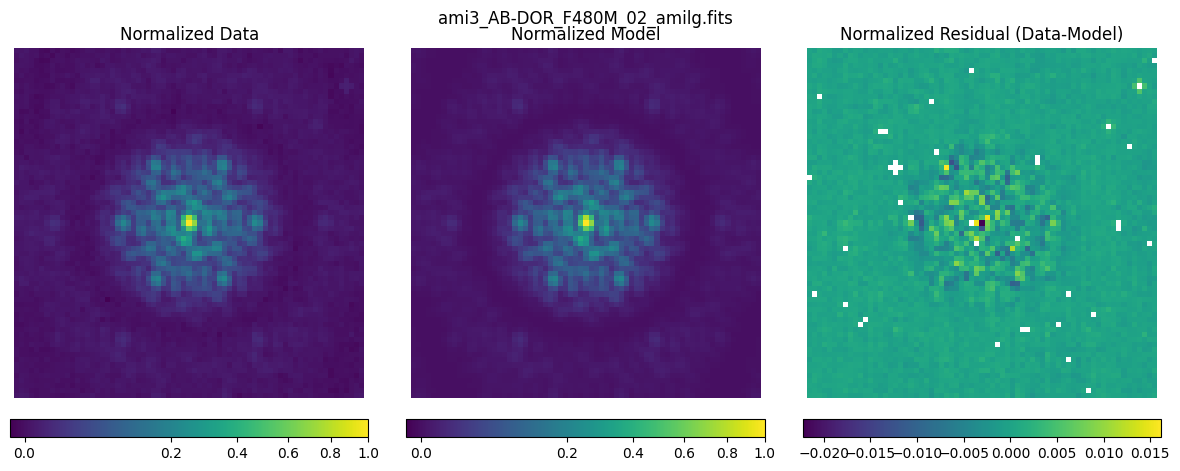

2025-12-18 19:26:25,309 - stdatamodels.dynamicdq - WARNING - Keyword LOWILLUM does not correspond to an existing DQ mnemonic, so will be ignored