MIRI Coronagraphy Pipeline Notebook#

Authors: B. Nickson; MIRI branch

Last Updated: November 10, 2025

Pipeline Version: 1.20.2 (Build 12.1)

Purpose:

This notebook provides a framework for processing generic Mid-Infrared Instrument (MIRI) coronagraphic data through all three James Webb Space Telescope (JWST) pipeline stages. Data are assumed to be located in separate observation folders according to the paths set up below. Editing cells other than those in the Configuration section should not be necessary unless the standard pipeline processing options are modified.

Data:

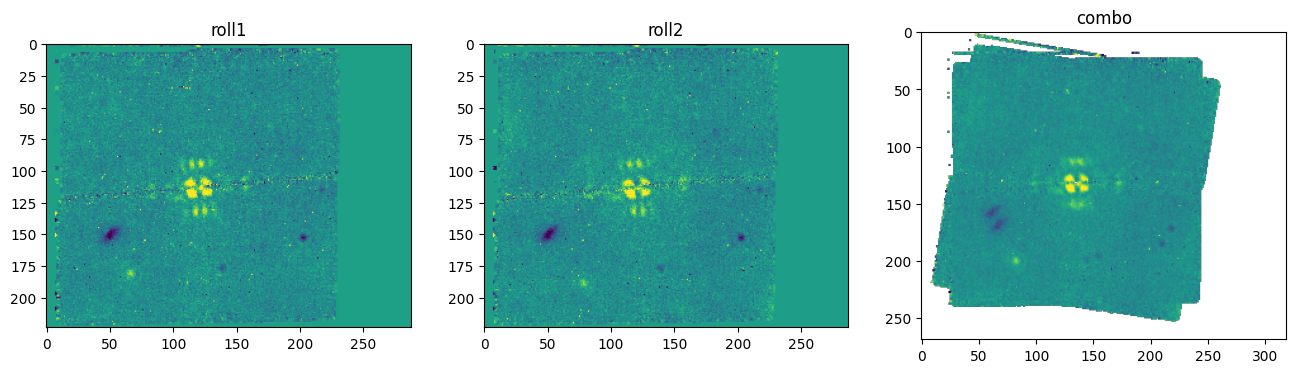

This example is set up to use F1550C coronagraphic observations of the super-Jupiter exoplanet HIP 65426 b, obtained by Program ID 1386 (PI: S. Hinkley). It incorporates observations of the exoplanet host star HIP 65426 at two separate roll angles (1 exposure each); a PSF reference observation of the nearby star HIP 65219, taken with a 9-pt small grid dither pattern (9 exposures total); a background observation associated with the target star, taken with a 2-pt dither (two exposures); and a background observation associated with the PSF reference target, taken with a 2-pt dither (two exposures).

The relevant observation numbers are:

Science observations: 8, 9

Science backgrounds: 30

Reference observations: 7

Reference backgrounds: 31

Example input data to use will be downloaded automatically unless disabled (i.e., to use local files instead).

JWST pipeline version and CRDS context:

This notebook was written for the above-specified pipeline version and associated build context for this version of the JWST Calibration Pipeline. Information about this and other contexts can be found in the JWST Calibration Reference Data System (CRDS server). If you use different pipeline versions, please refer to the table here to determine what context to use. To learn more about the differences for the pipeline, read the relevant documentation.

Please note that pipeline software development is a continuous process, so results in some cases may be slightly different if a subsequent version is used. For optimal results, users are strongly encouraged to reprocess their data using the most recent pipeline version and associated CRDS context, taking advantage of bug fixes and algorithm improvements. Any known issues for this build are noted in the notebook.

Updates:

This notebook is regularly updated as improvements are made to the pipeline. Find the most up to date version of this notebook at:

spacetelescope/jwst-pipeline-notebooks

Recent Changes:

Jan 28, 2025: Migrate from the Coronagraphy_ExambleNB notebook, update to Build 11.2 (jwst 1.17.1).

May 5, 2025: Updated to jwst 1.18.0 (no significant changes)

July 16, 2025: Updated to jwst 1.19.1 (no significant changes)

November 10, 2025: Updated to jwst 1.20.2 (no significant changes)

Table of Contents#

Configuration

Package Imports

Demo Mode Setup

Directory Setup

Detector1 Pipeline

Image2 Pipeline

Coron3 Pipeline

Plot the spectra

1.-Configuration#

Set basic parameters to use with this notebook. These affect which data are used, where the data are located (if already on disk), and which pipeline modules are run on the data. The parameters are as follows:

demo_mode

directories with data

mask

filter

pipeline modules

# Basic import necessary for configuration

import os

demo_mode must be set appropriately below.

Set demo_mode = True to run in demonstration mode. In this mode, this notebook will download example data from the Barbara A. Mikulski Archive for Space Telescopes (MAST) and process it through the pipeline. This will all happen in a local directory unless modified in Section 3 below.

Set demo_mode = False if you want to process your own data that has already been downloaded and provide the location of the data.

# Set parameters for demo_mode, mask, filter, data mode directories, and

# processing steps.

# -------------------------------Demo Mode---------------------------------

demo_mode = True

if demo_mode:

    print('Running in demonstration mode using online example data!')

# -------------------------Data Mode Directories---------------------------

# If demo_mode = False, look for user data in these paths

if not demo_mode:

    # Set directory paths for processing specific data; these will need

    # to be changed to your local directory setup (below are given as

    # examples)

    basedir = os.path.join(os.path.expanduser('~'), 'FlightData1386/')

    # Point to where science observation data are

    # Assumes uncalibrated data in sci_r1_dir/uncal/ and sci_r2_dir/uncal/,

    # and results in stage1, stage2, stage3 directories

    sci_r1_dir = os.path.join(basedir, 'sci_r1/')

    sci_r2_dir = os.path.join(basedir, 'sci_r2/')

    # Point to where reference target observation data are

    # Assumes uncalibrated data in ref_targ_dir/uncal/ and results in stage1,

    # stage2, stage3 directories

    ref_targ_dir = os.path.join(basedir, 'ref_targ/')

    # Point to where background observation data are

    # Assumes uncalibrated data in bg_sci_dir/uncal/ and bg_ref_targ_dir/uncal/,

    # and results in stage1, stage2 directories

    bg_sci_dir = os.path.join(basedir, 'bg_sci/')

    bg_ref_targ_dir = os.path.join(basedir, 'bg_ref_targ/')

# --------------------------Set Processing Steps--------------------------

# Whether or not to process only data from a given coronagraphic mask/

# filter (useful if overriding reference files)

# Note that BOTH parameters must be set in order to work

use_mask = '4QPM_1550' # '4QPM_1065', '4QPM_1140', '4QPM_1550', or 'LYOT_2300'

use_filter = 'F1550C' # 'F1065C', 'F1140C', 'F1550C', or 'F2300C'

# Individual pipeline stages can be turned on/off here. Note that a later

# stage won't be able to run unless data products have already been

# produced from the prior stage.

# Science processing

dodet1 = True # calwebb_detector1

doimage2 = True # calwebb_image2

docoron3 = True # calwebb_coron3

# Background processing

dodet1bg = True # calwebb_detector1

doimage2bg = True # calwebb_image2

Running in demonstration mode using online example data!
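Because a later stage can only run once the prior stage has written its products, it can help to check for those products before enabling a stage. The following is a minimal sketch of such a check, not part of the notebook or the pipeline: the helper name `stage_inputs_present`, the placeholder file name, and the `*_rateints.fits` pattern are assumptions chosen for illustration.

```python
import glob
import os
import tempfile


def stage_inputs_present(stage_dir, pattern):
    """Return True if at least one file matching `pattern` exists in `stage_dir`.

    Hypothetical helper for illustration; it is not part of the jwst package.
    """
    return len(glob.glob(os.path.join(stage_dir, pattern))) > 0


# Demonstrate with a throwaway directory standing in for a stage1 folder
with tempfile.TemporaryDirectory() as tmp:
    stage1_dir = os.path.join(tmp, 'stage1')
    os.makedirs(stage1_dir)
    before = stage_inputs_present(stage1_dir, '*_rateints.fits')

    # Create an empty placeholder file named like a Stage 1 product
    open(os.path.join(stage1_dir, 'jw_example_rateints.fits'), 'w').close()
    after = stage_inputs_present(stage1_dir, '*_rateints.fits')

print(before, after)
```

A check like this could guard a toggle such as doimage2, so that the stage is skipped with a clear message rather than failing on missing inputs.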

Set CRDS context and server#

Before importing CRDS and JWST modules, we need to configure our environment. This includes defining a CRDS cache directory in which to keep the reference files that will be used by the calibration pipeline.

If the local CRDS cache directory has not already been set, it will be set to a crds directory in the user's home directory.

# ------------------------Set CRDS context and paths----------------------

# Each version of the calibration pipeline is associated with a specific CRDS

# context file. The pipeline will select the appropriate context file behind

# the scenes while running. However, if you wish to override the default context

# file and run the pipeline with a different context, you can set that using

# the CRDS_CONTEXT environment variable. Here we show how this is done,

# although we leave the line commented out in order to use the default context.

# If you wish to specify a different context, uncomment the line below.

#%env CRDS_CONTEXT jwst_1322.pmap

# Check whether the local CRDS cache directory has been set.

# If not, set it to a 'crds' directory in the user's home directory

if os.getenv('CRDS_PATH') is None:

    os.environ['CRDS_PATH'] = os.path.join(os.path.expanduser('~'), 'crds')

# Check whether the CRDS server URL has been set. If not, set it.

if os.getenv('CRDS_SERVER_URL') is None:

    os.environ['CRDS_SERVER_URL'] = 'https://jwst-crds.stsci.edu'

# Echo CRDS path in use

print('CRDS local filepath:', os.environ['CRDS_PATH'])

print('CRDS file server:', os.environ['CRDS_SERVER_URL'])

CRDS local filepath: /home/runner/crds

CRDS file server: https://jwst-crds.stsci.edu
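The "set only if not already set" pattern used for the CRDS variables can also be written with `setdefault`, which assigns a value only when the key is absent. The sketch below applies it to a plain dictionary standing in for `os.environ`, so it does not touch the real environment; the function name `apply_crds_defaults` and the example path `/data/my_crds_cache` are hypothetical.

```python
import os


def apply_crds_defaults(env):
    """Fill in CRDS settings on a mapping only where they are missing."""
    env.setdefault('CRDS_PATH', os.path.join(os.path.expanduser('~'), 'crds'))
    env.setdefault('CRDS_SERVER_URL', 'https://jwst-crds.stsci.edu')
    return env


# A value that is already set is left untouched
preset = apply_crds_defaults({'CRDS_PATH': '/data/my_crds_cache'})

# An empty mapping receives both defaults
fresh = apply_crds_defaults({})

print(preset['CRDS_PATH'])
print(fresh['CRDS_SERVER_URL'])
```

Passing `os.environ` itself instead of a dictionary would apply the same defaults to the running process.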

2.-Package Imports#

# Use the entire available screen width for this notebook

from IPython.display import display, HTML

display(HTML("<style>.container { width:95% !important; }</style>"))

# Basic system utilities for interacting with files

# ----------------------General Imports------------------------------------

import glob

#import copy

import time

from pathlib import Path

import re

# Numpy for doing calculations

import numpy as np

# -----------------------Astropy Imports-----------------------------------

# Astropy utilities for opening FITS and ASCII files, and downloading demo files

from astropy.io import fits

from astropy.wcs import WCS

from astropy.coordinates import SkyCoord

#from astropy import time

from astroquery.mast import Observations

# -----------------------Plotting Imports----------------------------------

# Matplotlib for making plots

import matplotlib.pyplot as plt

# --------------JWST Calibration Pipeline Imports---------------------------

# Import the base JWST and calibration reference files packages

import jwst

import crds

# JWST pipelines (each encompassing many steps)

from jwst.pipeline import Detector1Pipeline

from jwst.pipeline import Image2Pipeline

from jwst.pipeline import Coron3Pipeline

# JWST pipeline utilities

from jwst import datamodels # JWST datamodels

from jwst.associations import asn_from_list as afl # Tools for creating association files

from jwst.associations.lib.rules_level2_base import DMSLevel2bBase # Definition of a Lvl2 association file

from jwst.associations.lib.rules_level3_base import DMS_Level3_Base # Definition of a Lvl3 association file

from jwst.stpipe import Step # Import the wrapper class for pipeline steps

# Echo pipeline version and CRDS context in use

print("JWST Calibration Pipeline Version = {}".format(jwst.__version__))

print("Using CRDS Context = {}".format(crds.get_context_name('jwst')))

JWST Calibration Pipeline Version = 1.20.2

CRDS - INFO - Calibration SW Found: jwst 1.20.2 (/usr/share/miniconda/lib/python3.13/site-packages/jwst-1.20.2.dist-info)

Using CRDS Context = jwst_1464.pmap

Define convenience functions#

Define a convenience function to select only files of a given coronagraph mask/filter from an input set

# Define a convenience function to select only files of a given coronagraph mask/filter from an input set

def select_mask_filter_files(files, use_mask, use_filter):

    """

    Filter FITS files based on mask and filter criteria from their headers.

    Parameters:

    -----------

    files : array-like

        List of FITS file paths to process

    use_mask : str

        Mask value to match in FITS header 'CORONMSK' key

    use_filter : str

        Filter value to match in FITS header 'FILTER' key

    Returns:

    --------

    numpy.ndarray

        Filtered array of absolute file paths matching the criteria

    """

    # Make paths absolute and convert to a numpy array, without modifying

    # the caller's list in place

    files = np.asarray([os.path.abspath(f) for f in files])

    # If either mask or filter is empty, return all files

    if not use_mask or not use_filter:

        return files

    try:

        # Initialize boolean array for keeping track of matches

        keep = np.zeros(len(files), dtype=bool)

        # Process each file

        for i in range(len(files)):

            try:

                with fits.open(files[i]) as hdu:

                    hdu.verify()

                    hdr = hdu[0].header

                    # Check if required header keywords exist

                    if ('CORONMSK' in hdr and 'FILTER' in hdr):

                        if hdr['CORONMSK'] == use_mask and hdr['FILTER'] == use_filter:

                            keep[i] = True

            except (OSError, ValueError) as e:

                print(f" Warning: could not process file {files[i]}: {str(e)}")

        # Return filtered files

        return files[keep]

    except Exception as e:

        print(f"Error processing files: {str(e)}")

        return files # Return original array in case of failure

# Start a timer to keep track of runtime

time0 = time.perf_counter()
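The selection rule in select_mask_filter_files can be illustrated without any FITS I/O. The sketch below is a simplified stand-in, not the notebook's function: `select_by_header` is a hypothetical name, and the dictionary of header values is made up for illustration and replaces the primary headers that the real function reads from disk.

```python
def select_by_header(headers, use_mask, use_filter):
    """Return the file names whose header matches both mask and filter.

    `headers` maps a file name to a dict of header keywords; it stands in
    for the FITS primary headers read by the real function.
    """
    # Mirror the notebook's rule: if either value is empty, keep everything
    if not use_mask or not use_filter:
        return list(headers)

    return [
        name for name, hdr in headers.items()
        if hdr.get('CORONMSK') == use_mask and hdr.get('FILTER') == use_filter
    ]


# Made-up header values for illustration only
example_headers = {
    'exp_a.fits': {'CORONMSK': '4QPM_1550', 'FILTER': 'F1550C'},
    'exp_b.fits': {'CORONMSK': '4QPM_1140', 'FILTER': 'F1140C'},
    'exp_c.fits': {'FILTER': 'F1550C'},  # missing CORONMSK keyword
}

selected = select_by_header(example_headers, '4QPM_1550', 'F1550C')
everything = select_by_header(example_headers, '', 'F1550C')
print(selected)
```

Files lacking either keyword are dropped rather than raising an error, which matches the keyword check in the function above.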

3.-Demo Mode Setup (ignore if not using demo data)#

If running in demonstration mode, set up the program information to retrieve the uncalibrated data automatically from MAST using astroquery. MAST allows for flexibility of searching by the proposal ID and the observation ID instead of just filenames.

For illustrative purposes, we focus on data taken through the MIRI F1550C filter and start with uncalibrated raw data products (uncal.fits). The files use the following naming schema: jw01386<obs>001_04101_0000<dith>_mirimage_uncal.fits, where obs refers to the observation number and dith refers to the dither step number.

More information about the JWST file naming conventions can be found at: https://jwst-pipeline.readthedocs.io/en/latest/jwst/data_products/file_naming.html
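As a rough illustration of the naming schema, the sketch below splits an exposure file name into its fields with a regular expression. The function name `parse_exposure_name` and the group labels (for example `group_block` for the five-character block in the middle) are labels chosen here, not official terminology; the linked documentation remains the authoritative description.

```python
import os
import re

# Field labels are illustrative; see the file naming documentation for the
# official meaning of each block.
FILENAME_RE = re.compile(
    r'jw(?P<program>\d{5})(?P<observation>\d{3})(?P<visit>\d{3})'
    r'_(?P<group_block>[0-9a-z]{5})'
    r'_(?P<exposure>\d{5})'
    r'_(?P<detector>[a-z0-9]+)'
    r'_(?P<suffix>[a-z0-9]+)\.fits'
)


def parse_exposure_name(path):
    """Return a dict of name fields, or None if the name does not match."""
    match = FILENAME_RE.fullmatch(os.path.basename(path))
    return match.groupdict() if match else None


info = parse_exposure_name(
    './miri_coro_demo_data/Obs008/uncal/jw01386008001_04101_00001_mirimage_uncal.fits'
)
not_an_exposure = parse_exposure_name('notes.txt')
print(info)
```

Here `info['observation']` is `'008'` and `info['exposure']` is `'00001'`, corresponding to the obs and dith placeholders in the schema above.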

# Set up the program information and paths for demo program

if demo_mode:

    print('Running in demonstration mode and will download example data from MAST!')

    program = "01386"

    sci_r1_observtn = "008"

    sci_r2_observtn = "009"

    ref_targ_observtn = "007"

    bg_sci_observtn = "030"

    bg_ref_targ_observtn = "031"

    # ----------Define the base and observation directories----------

    basedir = os.path.join('.', 'miri_coro_demo_data')

    download_dir = basedir

    sci_r1_dir = os.path.join(basedir, 'Obs' + sci_r1_observtn)

    sci_r2_dir = os.path.join(basedir, 'Obs' + sci_r2_observtn)

    ref_targ_dir = os.path.join(basedir, 'Obs' + ref_targ_observtn)

    bg_sci_dir = os.path.join(basedir, 'Obs' + bg_sci_observtn)

    bg_ref_targ_dir = os.path.join(basedir, 'Obs' + bg_ref_targ_observtn)

    uncal_sci_r1_dir = os.path.join(sci_r1_dir, 'uncal')

    uncal_sci_r2_dir = os.path.join(sci_r2_dir, 'uncal')

    uncal_ref_targ_dir = os.path.join(ref_targ_dir, 'uncal')

    uncal_bg_sci_dir = os.path.join(bg_sci_dir, 'uncal')

    uncal_bg_ref_targ_dir = os.path.join(bg_ref_targ_dir, 'uncal')

    # Ensure filepaths for input data exist

    input_dirs = [uncal_sci_r1_dir, uncal_sci_r2_dir, uncal_ref_targ_dir, uncal_bg_sci_dir, uncal_bg_ref_targ_dir]

    # Use a name that does not shadow the built-in dir(); exist_ok avoids

    # an error if the directory is already present

    for input_dir in input_dirs:

        os.makedirs(input_dir, exist_ok=True)

Running in demonstration mode and will download example data from MAST!

Identify list of uncalibrated files associated with visits.

# Obtain a list of observation IDs for the specified demo program

if demo_mode:

    obs_id_table = Observations.query_criteria(instrument_name=["MIRI/CORON"],

                                               provenance_name=["CALJWST"],

                                               proposal_id=[program])

# Turn the list of visits into a list of uncalibrated data files

if demo_mode:

    # Define types of files to select

    file_dict = {'uncal': {'product_type': 'SCIENCE', 'productSubGroupDescription': 'UNCAL', 'calib_level': [1]}}

    # Loop over visits identifying uncalibrated files that are associated with them

    files_to_download = []

    for exposure in obs_id_table:

        products = Observations.get_product_list(exposure)

        for filetype, query_dict in file_dict.items():

            filtered_products = Observations.filter_products(products, productType=query_dict['product_type'],

                                                             productSubGroupDescription=query_dict['productSubGroupDescription'],

                                                             calib_level=query_dict['calib_level'])

            files_to_download.extend(filtered_products['dataURI'])

    # Cull to a unique list of files for each observation type

    # Science roll 1

    sci_r1_files_to_download = np.unique([i for i in files_to_download if str(program + sci_r1_observtn) in i])

    # Science roll 2

    sci_r2_files_to_download = np.unique([i for i in files_to_download if str(program + sci_r2_observtn) in i])

    # PSF Reference target data

    ref_targ_files_to_download = np.unique([i for i in files_to_download if str(program + ref_targ_observtn) in i])

    # Background files (science assoc.)

    bg_sci_files_to_download = np.unique([i for i in files_to_download if str(program + bg_sci_observtn) in i])

    # Background files (reference target assoc.)

    bg_ref_targ_files_to_download = np.unique([i for i in files_to_download if str(program + bg_ref_targ_observtn) in i])

    # Count both science rolls (roll 1 + roll 2)

    print("Science files selected for downloading: ", len(sci_r1_files_to_download) + len(sci_r2_files_to_download))

    print("PSF Reference target files selected for downloading: ", len(ref_targ_files_to_download))

    print("Background selected for downloading: ", len(bg_sci_files_to_download) + len(bg_ref_targ_files_to_download))

Science files selected for downloading: 6

PSF Reference target files selected for downloading: 11

Background selected for downloading: 4

For the demo example, there should be 6 Science files, 11 PSF Reference files and 4 Background files selected for downloading.
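A quick way to confirm counts like these is to tally the data URIs by observation number. The sketch below is an illustrative helper, not part of the notebook: `count_by_observation` is a hypothetical name, and the three example URIs are a small subset in the same form as the MAST URIs used by the demo download.

```python
from collections import Counter


def count_by_observation(uris, program):
    """Count data URIs per 3-digit observation number for one program."""
    counts = Counter()
    marker = 'jw' + program
    for uri in uris:
        pos = uri.find(marker)
        if pos >= 0:
            # The observation number follows directly after 'jw' + program ID
            start = pos + len(marker)
            counts[uri[start:start + 3]] += 1
    return counts


# A small subset of URIs, for illustration only
example_uris = [
    'mast:JWST/product/jw01386008001_02101_00001_mirimage_uncal.fits',
    'mast:JWST/product/jw01386008001_04101_00001_mirimage_uncal.fits',
    'mast:JWST/product/jw01386030001_02101_00001_mirimage_uncal.fits',
]

counts = count_by_observation(example_uris, '01386')
print(dict(counts))
```

Applied to the full list of selected URIs, the per-observation totals can be compared against the expected numbers quoted above.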

Download all the uncal files and place them into the appropriate directories.

if demo_mode:

    for filename in sci_r1_files_to_download:

        sci_r1_manifest = Observations.download_file(filename, local_path=os.path.join(uncal_sci_r1_dir, Path(filename).name))

    for filename in sci_r2_files_to_download:

        sci_r2_manifest = Observations.download_file(filename, local_path=os.path.join(uncal_sci_r2_dir, Path(filename).name))

    for filename in ref_targ_files_to_download:

        ref_targ_manifest = Observations.download_file(filename, local_path=os.path.join(uncal_ref_targ_dir, Path(filename).name))

    for filename in bg_sci_files_to_download:

        bg_manifest = Observations.download_file(filename, local_path=os.path.join(uncal_bg_sci_dir, Path(filename).name))

    for filename in bg_ref_targ_files_to_download:

        bg_ref_targ_manifest = Observations.download_file(filename, local_path=os.path.join(uncal_bg_ref_targ_dir, Path(filename).name))

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386008001_02101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs008/uncal/jw01386008001_02101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386008001_02101_00002_mirimage_uncal.fits to ./miri_coro_demo_data/Obs008/uncal/jw01386008001_02101_00002_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386008001_04101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs008/uncal/jw01386008001_04101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386009001_02101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs009/uncal/jw01386009001_02101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386009001_02101_00002_mirimage_uncal.fits to ./miri_coro_demo_data/Obs009/uncal/jw01386009001_02101_00002_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386009001_04101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs009/uncal/jw01386009001_04101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_02101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_02101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_02101_00002_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_02101_00002_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00002_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00002_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00003_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00003_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00004_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00004_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00005_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00005_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00006_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00006_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00007_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00007_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00008_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00008_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386007001_04101_00009_mirimage_uncal.fits to ./miri_coro_demo_data/Obs007/uncal/jw01386007001_04101_00009_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386030001_02101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs030/uncal/jw01386030001_02101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386030001_03101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs030/uncal/jw01386030001_03101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386031001_02101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs031/uncal/jw01386031001_02101_00001_mirimage_uncal.fits ...

[Done]

Downloading URL https://mast.stsci.edu/api/v0.1/Download/file?uri=mast:JWST/product/jw01386031001_03101_00001_mirimage_uncal.fits to ./miri_coro_demo_data/Obs031/uncal/jw01386031001_03101_00001_mirimage_uncal.fits ...

[Done]

4.-Directory Setup#

Set up detailed paths to input/output stages here. We will set up individual stage1/ and stage2/ subdirectories for each observation, but a single stage3/ directory for the combined calwebb_coron3 output products.

# Define output subdirectories to keep science data products organized

# Sci Roll 1

uncal_sci_r1_dir = os.path.join(sci_r1_dir, 'uncal') # uncal inputs go here

det1_sci_r1_dir = os.path.join(sci_r1_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_sci_r1_dir = os.path.join(sci_r1_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

# Sci Roll 2

uncal_sci_r2_dir = os.path.join(sci_r2_dir, 'uncal') # uncal inputs go here

det1_sci_r2_dir = os.path.join(sci_r2_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_sci_r2_dir = os.path.join(sci_r2_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

# Define output subdirectories to keep PSF reference target data products organized

uncal_ref_targ_dir = os.path.join(ref_targ_dir, 'uncal') # uncal inputs go here

det1_ref_targ_dir = os.path.join(ref_targ_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_ref_targ_dir = os.path.join(ref_targ_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

# Define output subdirectories to keep background data products organized

# Sci Bkg

uncal_bg_sci_dir = os.path.join(bg_sci_dir, 'uncal') # uncal inputs go here

det1_bg_sci_dir = os.path.join(bg_sci_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_bg_sci_dir = os.path.join(bg_sci_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

# Ref target Bkg

uncal_bg_ref_targ_dir = os.path.join(bg_ref_targ_dir, 'uncal') # uncal inputs go here

det1_bg_ref_targ_dir = os.path.join(bg_ref_targ_dir, 'stage1') # calwebb_detector1 pipeline outputs will go here

image2_bg_ref_targ_dir = os.path.join(bg_ref_targ_dir, 'stage2') # calwebb_image2 pipeline outputs will go here

coron3_dir = os.path.join(basedir, 'stage3')

# We need to check that the desired output directories exist, and if not create them

det1_dirs = [det1_sci_r1_dir, det1_sci_r2_dir, det1_ref_targ_dir, det1_bg_sci_dir, det1_bg_ref_targ_dir]

image2_dirs = [image2_sci_r1_dir, image2_sci_r2_dir, image2_ref_targ_dir, image2_bg_sci_dir, image2_bg_ref_targ_dir]

# Use a loop variable that does not shadow the built-in dir();

# exist_ok=True makes the call safe if a directory already exists

for output_dir in det1_dirs + image2_dirs + [coron3_dir]:

    os.makedirs(output_dir, exist_ok=True)

# Print out the time benchmark

time1 = time.perf_counter()

print(f"Runtime so far: {time1 - time0:0.4f} seconds")

Runtime so far: 458.7402 seconds
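The per-observation layout (uncal/, stage1/, stage2/ under each observation folder) can also be expressed with pathlib. This is a minimal sketch under the assumption that the same three subdirectory names are wanted for every observation; `make_stage_dirs` is a hypothetical helper, and a temporary directory is used so nothing is written to the working area.

```python
import tempfile
from pathlib import Path


def make_stage_dirs(obs_dir, stages=('uncal', 'stage1', 'stage2')):
    """Create one subdirectory per stage under `obs_dir` and return them."""
    paths = {}
    for stage in stages:
        path = Path(obs_dir) / stage
        # parents=True builds missing parents; exist_ok=True makes reruns safe
        path.mkdir(parents=True, exist_ok=True)
        paths[stage] = path
    return paths


with tempfile.TemporaryDirectory() as tmp:
    dirs = make_stage_dirs(Path(tmp) / 'Obs008')
    # Calling it a second time must not raise
    dirs_again = make_stage_dirs(Path(tmp) / 'Obs008')
    created = sorted(p.name for p in (Path(tmp) / 'Obs008').iterdir())

print(created)
```

Returning the paths in a dictionary keeps the stage names and their locations together, which avoids maintaining one variable per observation and stage.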

5.-Detector1 Pipeline#

In this section, we process our uncalibrated data through the calwebb_detector1 pipeline to create Stage 1 data products. For coronagraphic exposures, these data products include a *_rate.fits file (a 2D countrate product, based on averaging over all integrations in the exposure) and, specifically, a *_rateints.fits file: a 3D countrate product that contains the individual results of each integration, with the 2D countrate images for each integration stacked along the third axis of the data cube (ncols x nrows x nints). These data products have units of DN/s.

See https://jwst-docs.stsci.edu/jwst-science-calibration-pipeline/stages-of-jwst-data-processing/calwebb_detector1
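To make the relationship between the two products concrete, the sketch below averages a tiny per-integration cube into a single 2D image. It is only an illustration of "averaging over all integrations": a plain unweighted mean is assumed here, the pipeline's own combination may differ, and both the integration-first indexing and the numbers are made up for the example.

```python
def mean_over_integrations(cube):
    """Average a cube indexed as cube[integration][row][col] into a 2D image."""
    nints = len(cube)
    nrows = len(cube[0])
    ncols = len(cube[0][0])
    return [
        [sum(cube[k][r][c] for k in range(nints)) / nints for c in range(ncols)]
        for r in range(nrows)
    ]


# Two made-up integrations of a 2 x 3 image, in DN/s
rateints_like = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
    [[3.0, 4.0, 5.0], [6.0, 7.0, 8.0]],
]

rate_like = mean_over_integrations(rateints_like)
print(rate_like)
```

The result has one value per pixel, while the input keeps one image per integration, which is the distinction between the rate and rateints products.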

By default, all steps in the calwebb_detector1 pipeline are run for MIRI except the ipc and charge_migration steps. Several steps are performed for MIRI data that are not performed for other instruments: emicorr, firstframe, lastframe, reset, and rscd.

The dictionary below can be used to override step parameters, e.g., to turn on detection of cosmic ray showers.

# Set up a dictionary to define how the Detector1 pipeline should be configured

# Boilerplate dictionary setup

det1dict = {}

det1dict['group_scale'], det1dict['dq_init'], det1dict['emicorr'], det1dict['saturation'] = {}, {}, {}, {}

det1dict['firstframe'], det1dict['lastframe'], det1dict['reset'], det1dict['linearity'], det1dict['rscd'] = {}, {}, {}, {}, {}

det1dict['dark_current'], det1dict['refpix'], det1dict['jump'], det1dict['ramp_fit'], det1dict['gain_scale'] = {}, {}, {}, {}, {}

det1dict['clean_flicker_noise'] = {}

# Overrides for whether or not certain steps should be skipped (example)

# skipping refpix step

#det1dict['refpix']['skip'] = True

# Overrides for various reference files

# Files should be in the base local directory or provide full path

#det1dict['dq_init']['override_mask'] = 'myfile.fits' # Bad pixel mask

#det1dict['saturation']['override_saturation'] = 'myfile.fits' # Saturation

#det1dict['reset']['override_reset'] = 'myfile.fits' # Reset

#det1dict['linearity']['override_linearity'] = 'myfile.fits' # Linearity

#det1dict['rscd']['override_rscd'] = 'myfile.fits' # RSCD

#det1dict['dark_current']['override_dark'] = 'myfile.fits' # Dark current subtraction

#det1dict['jump']['override_gain'] = 'myfile.fits' # Gain used by jump step

#det1dict['ramp_fit']['override_gain'] = 'myfile.fits' # Gain used by ramp fitting step

#det1dict['jump']['override_readnoise'] = 'myfile.fits' # Read noise used by jump step

#det1dict['ramp_fit']['override_readnoise'] = 'myfile.fits' # Read noise used by ramp fitting step

# Turn on multi-core processing (off by default). Choose what fraction of cores to use (quarter, half, or all)

det1dict['jump']['maximum_cores'] = 'half'

# Save the frame-averaged dark data created during the dark current subtraction step

#det1dict['dark_current']['dark_output'] = 'dark.fits' # Frame-averaged dark

# Turn on detection of cosmic ray showers (off by default)

#det1dict['jump']['find_showers'] = True

For more information, see Tips and Tricks for working with the JWST Pipeline
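The boilerplate that creates one empty parameter dictionary per step can be written more compactly with a dict comprehension. The sketch below uses the same step names as the configuration cell but a separate variable, `det1dict_sketch`, so it does not alter the notebook's det1dict; the `overrides` summary is an illustrative addition.

```python
step_names = [
    'group_scale', 'dq_init', 'emicorr', 'saturation', 'firstframe',
    'lastframe', 'reset', 'linearity', 'rscd', 'dark_current', 'refpix',
    'jump', 'ramp_fit', 'gain_scale', 'clean_flicker_noise',
]

# One independent, empty parameter dictionary per step
det1dict_sketch = {name: {} for name in step_names}

# Example overrides taken from the configuration cell
det1dict_sketch['jump']['maximum_cores'] = 'half'
det1dict_sketch['jump']['find_showers'] = True

# Collect only the steps that actually carry overrides
overrides = {name: pars for name, pars in det1dict_sketch.items() if pars}
print(overrides)
```

The comprehension creates a distinct dictionary for every step, so setting a parameter on one step cannot leak into another.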

# Define a new step called XplyStep that multiplies everything by 1.0

# I.e., it does nothing, but could be changed to do something more interesting.

class XplyStep(Step):

    spec = '''

    '''

    class_alias = 'xply'

    def process(self, input_data):

        with datamodels.open(input_data) as model:

            result = model.copy()

        sci = result.data

        sci = sci * 1.0

        result.data = sci

        self.log.info('Multiplied everything by one in custom step!')

        return result

# And here we'll insert it into our pipeline dictionary to be run at the end right after the gain_scale step

det1dict['gain_scale']['post_hooks'] = [XplyStep]

Calibrating Science Files#

Look for input science files and run calwebb_detector1 pipeline using the call method. For the demo example there should be 2 input science files, one for the observation at roll 1 (Obs 8) and one for the observation at roll 2 (Obs 9).

uncal_sci_r1_dir

'./miri_coro_demo_data/Obs008/uncal'

# Look for input files of the form *uncal.fits from the science observation

sstring1 = os.path.join(uncal_sci_r1_dir, 'jw*mirimage*uncal.fits')

sstring2 = os.path.join(uncal_sci_r2_dir, 'jw*mirimage*uncal.fits')

uncal_sci_r1_files = sorted(glob.glob(sstring1))

uncal_sci_r2_files = sorted(glob.glob(sstring2))

# Check that these are the correct mask/filter to use

uncal_sci_r1_files = select_mask_filter_files(uncal_sci_r1_files, use_mask, use_filter)

uncal_sci_r2_files = select_mask_filter_files(uncal_sci_r2_files, use_mask, use_filter)

print('Found ' + str((len(uncal_sci_r1_files) + len(uncal_sci_r2_files))) + ' science input files')

Found 2 science input files

# Run the pipeline on these input files by a simple loop over files using

# our custom parameter dictionary

if dodet1:

    for file in uncal_sci_r1_files:

        Detector1Pipeline.call(file, steps=det1dict, save_results=True, output_dir=det1_sci_r1_dir)

    for file in uncal_sci_r2_files:

        Detector1Pipeline.call(file, steps=det1dict, save_results=True, output_dir=det1_sci_r2_dir)

else:

    print('Skipping Detector1 processing for SCI data')

2025-12-18 19:33:57,811 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_datalvl_0002.rmap 694 bytes (1 / 212 files) (0 / 762.3 K bytes)

2025-12-18 19:33:57,921 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_calver_0063.rmap 5.7 K bytes (2 / 212 files) (694 / 762.3 K bytes)

2025-12-18 19:33:58,032 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_system_0058.imap 385 bytes (3 / 212 files) (6.4 K / 762.3 K bytes)

2025-12-18 19:33:58,126 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_wavelengthrange_0024.rmap 1.4 K bytes (4 / 212 files) (6.8 K / 762.3 K bytes)

2025-12-18 19:33:58,251 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_wavecorr_0005.rmap 884 bytes (5 / 212 files) (8.2 K / 762.3 K bytes)

2025-12-18 19:33:58,341 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_superbias_0087.rmap 38.3 K bytes (6 / 212 files) (9.0 K / 762.3 K bytes)

2025-12-18 19:33:58,458 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_sirskernel_0002.rmap 704 bytes (7 / 212 files) (47.3 K / 762.3 K bytes)

2025-12-18 19:33:58,593 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_sflat_0027.rmap 20.6 K bytes (8 / 212 files) (48.0 K / 762.3 K bytes)

2025-12-18 19:33:58,703 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_saturation_0018.rmap 2.0 K bytes (9 / 212 files) (68.7 K / 762.3 K bytes)

2025-12-18 19:33:58,810 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_refpix_0015.rmap 1.6 K bytes (10 / 212 files) (70.7 K / 762.3 K bytes)

2025-12-18 19:33:58,928 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_readnoise_0025.rmap 2.6 K bytes (11 / 212 files) (72.2 K / 762.3 K bytes)

2025-12-18 19:33:59,017 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_psf_0002.rmap 687 bytes (12 / 212 files) (74.8 K / 762.3 K bytes)

2025-12-18 19:33:59,531 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pictureframe_0001.rmap 675 bytes (13 / 212 files) (75.5 K / 762.3 K bytes)

2025-12-18 19:33:59,623 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_photom_0013.rmap 958 bytes (14 / 212 files) (76.2 K / 762.3 K bytes)

2025-12-18 19:33:59,722 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pathloss_0011.rmap 1.2 K bytes (15 / 212 files) (77.1 K / 762.3 K bytes)

2025-12-18 19:33:59,818 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-whitelightstep_0001.rmap 777 bytes (16 / 212 files) (78.3 K / 762.3 K bytes)

2025-12-18 19:33:59,916 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-spec2pipeline_0013.rmap 2.1 K bytes (17 / 212 files) (79.1 K / 762.3 K bytes)

2025-12-18 19:34:00,004 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-resamplespecstep_0002.rmap 709 bytes (18 / 212 files) (81.2 K / 762.3 K bytes)

2025-12-18 19:34:00,096 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-refpixstep_0003.rmap 910 bytes (19 / 212 files) (81.9 K / 762.3 K bytes)

2025-12-18 19:34:00,189 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-outlierdetectionstep_0005.rmap 1.1 K bytes (20 / 212 files) (82.8 K / 762.3 K bytes)

2025-12-18 19:34:00,279 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-jumpstep_0005.rmap 810 bytes (21 / 212 files) (84.0 K / 762.3 K bytes)

2025-12-18 19:34:00,371 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-image2pipeline_0008.rmap 1.0 K bytes (22 / 212 files) (84.8 K / 762.3 K bytes)

2025-12-18 19:34:00,472 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-detector1pipeline_0003.rmap 1.1 K bytes (23 / 212 files) (85.8 K / 762.3 K bytes)

2025-12-18 19:34:00,562 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-darkpipeline_0003.rmap 872 bytes (24 / 212 files) (86.8 K / 762.3 K bytes)

2025-12-18 19:34:00,661 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_pars-darkcurrentstep_0003.rmap 1.8 K bytes (25 / 212 files) (87.7 K / 762.3 K bytes)

2025-12-18 19:34:00,754 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ote_0030.rmap 1.3 K bytes (26 / 212 files) (89.5 K / 762.3 K bytes)

2025-12-18 19:34:00,846 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_msaoper_0017.rmap 1.6 K bytes (27 / 212 files) (90.8 K / 762.3 K bytes)

2025-12-18 19:34:00,941 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_msa_0027.rmap 1.3 K bytes (28 / 212 files) (92.4 K / 762.3 K bytes)

2025-12-18 19:34:01,037 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_mask_0044.rmap 4.3 K bytes (29 / 212 files) (93.6 K / 762.3 K bytes)

2025-12-18 19:34:01,132 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_linearity_0017.rmap 1.6 K bytes (30 / 212 files) (97.9 K / 762.3 K bytes)

2025-12-18 19:34:01,218 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ipc_0006.rmap 876 bytes (31 / 212 files) (99.5 K / 762.3 K bytes)

2025-12-18 19:34:01,311 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifuslicer_0017.rmap 1.5 K bytes (32 / 212 files) (100.4 K / 762.3 K bytes)

2025-12-18 19:34:01,404 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifupost_0019.rmap 1.5 K bytes (33 / 212 files) (101.9 K / 762.3 K bytes)

2025-12-18 19:34:01,492 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_ifufore_0017.rmap 1.5 K bytes (34 / 212 files) (103.4 K / 762.3 K bytes)

2025-12-18 19:34:01,580 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_gain_0023.rmap 1.8 K bytes (35 / 212 files) (104.9 K / 762.3 K bytes)

2025-12-18 19:34:01,710 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fpa_0028.rmap 1.3 K bytes (36 / 212 files) (106.7 K / 762.3 K bytes)

2025-12-18 19:34:01,820 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fore_0026.rmap 5.0 K bytes (37 / 212 files) (107.9 K / 762.3 K bytes)

2025-12-18 19:34:01,911 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_flat_0015.rmap 3.8 K bytes (38 / 212 files) (112.9 K / 762.3 K bytes)

2025-12-18 19:34:02,003 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_fflat_0028.rmap 7.2 K bytes (39 / 212 files) (116.7 K / 762.3 K bytes)

2025-12-18 19:34:02,098 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_extract1d_0018.rmap 2.3 K bytes (40 / 212 files) (123.9 K / 762.3 K bytes)

2025-12-18 19:34:02,187 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_disperser_0028.rmap 5.7 K bytes (41 / 212 files) (126.2 K / 762.3 K bytes)

2025-12-18 19:34:02,280 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_dflat_0007.rmap 1.1 K bytes (42 / 212 files) (131.9 K / 762.3 K bytes)

2025-12-18 19:34:02,381 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_dark_0083.rmap 36.4 K bytes (43 / 212 files) (133.0 K / 762.3 K bytes)

2025-12-18 19:34:02,495 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_cubepar_0015.rmap 966 bytes (44 / 212 files) (169.4 K / 762.3 K bytes)

2025-12-18 19:34:02,585 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_collimator_0026.rmap 1.3 K bytes (45 / 212 files) (170.4 K / 762.3 K bytes)

2025-12-18 19:34:02,676 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_camera_0026.rmap 1.3 K bytes (46 / 212 files) (171.7 K / 762.3 K bytes)

2025-12-18 19:34:02,773 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_barshadow_0007.rmap 1.8 K bytes (47 / 212 files) (173.0 K / 762.3 K bytes)

2025-12-18 19:34:02,882 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_area_0018.rmap 6.3 K bytes (48 / 212 files) (174.8 K / 762.3 K bytes)

2025-12-18 19:34:02,992 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_apcorr_0009.rmap 5.6 K bytes (49 / 212 files) (181.1 K / 762.3 K bytes)

2025-12-18 19:34:03,086 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nirspec_0420.imap 5.8 K bytes (50 / 212 files) (186.7 K / 762.3 K bytes)

2025-12-18 19:34:03,185 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_wavelengthrange_0008.rmap 897 bytes (51 / 212 files) (192.5 K / 762.3 K bytes)

2025-12-18 19:34:03,275 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_trappars_0004.rmap 753 bytes (52 / 212 files) (193.4 K / 762.3 K bytes)

2025-12-18 19:34:03,369 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_trapdensity_0005.rmap 705 bytes (53 / 212 files) (194.1 K / 762.3 K bytes)

2025-12-18 19:34:03,464 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_throughput_0005.rmap 1.3 K bytes (54 / 212 files) (194.8 K / 762.3 K bytes)

2025-12-18 19:34:03,557 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_superbias_0033.rmap 8.0 K bytes (55 / 212 files) (196.1 K / 762.3 K bytes)

2025-12-18 19:34:03,651 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_specwcs_0016.rmap 3.1 K bytes (56 / 212 files) (204.1 K / 762.3 K bytes)

2025-12-18 19:34:03,755 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_specprofile_0008.rmap 2.4 K bytes (57 / 212 files) (207.2 K / 762.3 K bytes)

2025-12-18 19:34:03,847 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_speckernel_0006.rmap 1.0 K bytes (58 / 212 files) (209.6 K / 762.3 K bytes)

2025-12-18 19:34:03,938 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_sirskernel_0002.rmap 700 bytes (59 / 212 files) (210.6 K / 762.3 K bytes)

2025-12-18 19:34:04,027 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_saturation_0015.rmap 829 bytes (60 / 212 files) (211.3 K / 762.3 K bytes)

2025-12-18 19:34:04,131 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_readnoise_0011.rmap 987 bytes (61 / 212 files) (212.1 K / 762.3 K bytes)

2025-12-18 19:34:04,237 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_photom_0037.rmap 1.3 K bytes (62 / 212 files) (213.1 K / 762.3 K bytes)

2025-12-18 19:34:04,331 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_persat_0007.rmap 674 bytes (63 / 212 files) (214.4 K / 762.3 K bytes)

2025-12-18 19:34:04,421 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pathloss_0003.rmap 758 bytes (64 / 212 files) (215.1 K / 762.3 K bytes)

2025-12-18 19:34:04,513 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pastasoss_0005.rmap 818 bytes (65 / 212 files) (215.8 K / 762.3 K bytes)

2025-12-18 19:34:04,605 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-undersamplecorrectionstep_0001.rmap 904 bytes (66 / 212 files) (216.6 K / 762.3 K bytes)

2025-12-18 19:34:04,714 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-tweakregstep_0012.rmap 3.1 K bytes (67 / 212 files) (217.5 K / 762.3 K bytes)

2025-12-18 19:34:04,867 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-spec2pipeline_0009.rmap 1.2 K bytes (68 / 212 files) (220.7 K / 762.3 K bytes)

2025-12-18 19:34:04,964 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-sourcecatalogstep_0002.rmap 2.3 K bytes (69 / 212 files) (221.9 K / 762.3 K bytes)

2025-12-18 19:34:06,060 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-resamplestep_0002.rmap 687 bytes (70 / 212 files) (224.2 K / 762.3 K bytes)

2025-12-18 19:34:06,155 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-outlierdetectionstep_0004.rmap 2.7 K bytes (71 / 212 files) (224.9 K / 762.3 K bytes)

2025-12-18 19:34:06,245 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-jumpstep_0007.rmap 6.4 K bytes (72 / 212 files) (227.6 K / 762.3 K bytes)

2025-12-18 19:34:06,332 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-image2pipeline_0005.rmap 1.0 K bytes (73 / 212 files) (233.9 K / 762.3 K bytes)

2025-12-18 19:34:06,419 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-detector1pipeline_0002.rmap 1.0 K bytes (74 / 212 files) (235.0 K / 762.3 K bytes)

2025-12-18 19:34:06,511 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-darkpipeline_0002.rmap 868 bytes (75 / 212 files) (236.0 K / 762.3 K bytes)

2025-12-18 19:34:06,600 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-darkcurrentstep_0001.rmap 591 bytes (76 / 212 files) (236.9 K / 762.3 K bytes)

2025-12-18 19:34:06,693 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-chargemigrationstep_0005.rmap 5.7 K bytes (77 / 212 files) (237.5 K / 762.3 K bytes)

2025-12-18 19:34:06,781 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_pars-backgroundstep_0003.rmap 822 bytes (78 / 212 files) (243.1 K / 762.3 K bytes)

2025-12-18 19:34:06,875 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_nrm_0005.rmap 663 bytes (79 / 212 files) (243.9 K / 762.3 K bytes)

2025-12-18 19:34:06,962 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_mask_0024.rmap 1.5 K bytes (80 / 212 files) (244.6 K / 762.3 K bytes)

2025-12-18 19:34:07,057 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_linearity_0022.rmap 961 bytes (81 / 212 files) (246.1 K / 762.3 K bytes)

2025-12-18 19:34:07,159 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_ipc_0007.rmap 651 bytes (82 / 212 files) (247.0 K / 762.3 K bytes)

2025-12-18 19:34:07,249 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_gain_0011.rmap 797 bytes (83 / 212 files) (247.7 K / 762.3 K bytes)

2025-12-18 19:34:07,337 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_flat_0023.rmap 5.9 K bytes (84 / 212 files) (248.5 K / 762.3 K bytes)

2025-12-18 19:34:07,428 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_filteroffset_0010.rmap 853 bytes (85 / 212 files) (254.3 K / 762.3 K bytes)

2025-12-18 19:34:07,517 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_extract1d_0007.rmap 905 bytes (86 / 212 files) (255.2 K / 762.3 K bytes)

2025-12-18 19:34:07,611 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_drizpars_0004.rmap 519 bytes (87 / 212 files) (256.1 K / 762.3 K bytes)

2025-12-18 19:34:07,716 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_distortion_0025.rmap 3.4 K bytes (88 / 212 files) (256.6 K / 762.3 K bytes)

2025-12-18 19:34:07,805 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_dark_0037.rmap 8.1 K bytes (89 / 212 files) (260.1 K / 762.3 K bytes)

2025-12-18 19:34:07,892 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_bkg_0005.rmap 3.1 K bytes (90 / 212 files) (268.2 K / 762.3 K bytes)

2025-12-18 19:34:07,990 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_area_0014.rmap 2.7 K bytes (91 / 212 files) (271.2 K / 762.3 K bytes)

2025-12-18 19:34:08,079 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_apcorr_0010.rmap 4.3 K bytes (92 / 212 files) (273.9 K / 762.3 K bytes)

2025-12-18 19:34:08,166 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_abvegaoffset_0004.rmap 1.4 K bytes (93 / 212 files) (278.2 K / 762.3 K bytes)

2025-12-18 19:34:08,256 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_niriss_0292.imap 5.8 K bytes (94 / 212 files) (279.6 K / 762.3 K bytes)

2025-12-18 19:34:08,347 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_wavelengthrange_0011.rmap 996 bytes (95 / 212 files) (285.3 K / 762.3 K bytes)

2025-12-18 19:34:08,432 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_tsophot_0003.rmap 896 bytes (96 / 212 files) (286.3 K / 762.3 K bytes)

2025-12-18 19:34:08,521 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_trappars_0003.rmap 1.6 K bytes (97 / 212 files) (287.2 K / 762.3 K bytes)

2025-12-18 19:34:08,609 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_trapdensity_0003.rmap 1.6 K bytes (98 / 212 files) (288.8 K / 762.3 K bytes)

2025-12-18 19:34:08,724 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_superbias_0020.rmap 19.6 K bytes (99 / 212 files) (290.5 K / 762.3 K bytes)

2025-12-18 19:34:08,839 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_specwcs_0024.rmap 8.0 K bytes (100 / 212 files) (310.0 K / 762.3 K bytes)

2025-12-18 19:34:08,932 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_sirskernel_0003.rmap 671 bytes (101 / 212 files) (318.0 K / 762.3 K bytes)

2025-12-18 19:34:09,018 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_saturation_0011.rmap 2.8 K bytes (102 / 212 files) (318.7 K / 762.3 K bytes)

2025-12-18 19:34:09,103 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_regions_0002.rmap 725 bytes (103 / 212 files) (321.5 K / 762.3 K bytes)

2025-12-18 19:34:09,191 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_readnoise_0027.rmap 26.6 K bytes (104 / 212 files) (322.2 K / 762.3 K bytes)

2025-12-18 19:34:09,324 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_psfmask_0008.rmap 28.4 K bytes (105 / 212 files) (348.8 K / 762.3 K bytes)

2025-12-18 19:34:09,435 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_photom_0028.rmap 3.4 K bytes (106 / 212 files) (377.2 K / 762.3 K bytes)

2025-12-18 19:34:09,520 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_persat_0005.rmap 1.6 K bytes (107 / 212 files) (380.5 K / 762.3 K bytes)

2025-12-18 19:34:09,606 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-whitelightstep_0004.rmap 2.0 K bytes (108 / 212 files) (382.1 K / 762.3 K bytes)

2025-12-18 19:34:09,690 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-tweakregstep_0003.rmap 4.5 K bytes (109 / 212 files) (384.1 K / 762.3 K bytes)

2025-12-18 19:34:09,785 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-tsophotometrystep_0003.rmap 1.1 K bytes (110 / 212 files) (388.6 K / 762.3 K bytes)

2025-12-18 19:34:09,878 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-spec2pipeline_0009.rmap 984 bytes (111 / 212 files) (389.6 K / 762.3 K bytes)

2025-12-18 19:34:09,963 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-sourcecatalogstep_0002.rmap 4.6 K bytes (112 / 212 files) (390.6 K / 762.3 K bytes)

2025-12-18 19:34:10,058 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-resamplestep_0002.rmap 687 bytes (113 / 212 files) (395.3 K / 762.3 K bytes)

2025-12-18 19:34:10,156 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-outlierdetectionstep_0003.rmap 940 bytes (114 / 212 files) (396.0 K / 762.3 K bytes)

2025-12-18 19:34:10,242 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-jumpstep_0005.rmap 806 bytes (115 / 212 files) (396.9 K / 762.3 K bytes)

2025-12-18 19:34:10,336 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-image2pipeline_0004.rmap 1.1 K bytes (116 / 212 files) (397.7 K / 762.3 K bytes)

2025-12-18 19:34:10,422 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-detector1pipeline_0006.rmap 1.7 K bytes (117 / 212 files) (398.8 K / 762.3 K bytes)

2025-12-18 19:34:10,531 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-darkpipeline_0002.rmap 868 bytes (118 / 212 files) (400.6 K / 762.3 K bytes)

2025-12-18 19:34:10,623 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-darkcurrentstep_0001.rmap 618 bytes (119 / 212 files) (401.4 K / 762.3 K bytes)

2025-12-18 19:34:10,725 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_pars-backgroundstep_0003.rmap 822 bytes (120 / 212 files) (402.0 K / 762.3 K bytes)

2025-12-18 19:34:10,813 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_mask_0013.rmap 4.8 K bytes (121 / 212 files) (402.9 K / 762.3 K bytes)

2025-12-18 19:34:10,908 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_linearity_0011.rmap 2.4 K bytes (122 / 212 files) (407.6 K / 762.3 K bytes)

2025-12-18 19:34:10,995 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_ipc_0003.rmap 2.0 K bytes (123 / 212 files) (410.0 K / 762.3 K bytes)

2025-12-18 19:34:11,086 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_gain_0016.rmap 2.1 K bytes (124 / 212 files) (412.0 K / 762.3 K bytes)

2025-12-18 19:34:11,172 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_flat_0028.rmap 51.7 K bytes (125 / 212 files) (414.1 K / 762.3 K bytes)

2025-12-18 19:34:11,298 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_filteroffset_0004.rmap 1.4 K bytes (126 / 212 files) (465.8 K / 762.3 K bytes)

2025-12-18 19:34:11,385 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_extract1d_0005.rmap 1.2 K bytes (127 / 212 files) (467.2 K / 762.3 K bytes)

2025-12-18 19:34:11,470 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_drizpars_0001.rmap 519 bytes (128 / 212 files) (468.4 K / 762.3 K bytes)

2025-12-18 19:34:11,563 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_distortion_0034.rmap 53.4 K bytes (129 / 212 files) (468.9 K / 762.3 K bytes)

2025-12-18 19:34:11,695 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_dark_0049.rmap 29.6 K bytes (130 / 212 files) (522.3 K / 762.3 K bytes)

2025-12-18 19:34:11,805 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_bkg_0002.rmap 7.0 K bytes (131 / 212 files) (551.9 K / 762.3 K bytes)

2025-12-18 19:34:11,899 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_area_0012.rmap 33.5 K bytes (132 / 212 files) (558.9 K / 762.3 K bytes)

2025-12-18 19:34:12,008 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_apcorr_0008.rmap 4.3 K bytes (133 / 212 files) (592.4 K / 762.3 K bytes)

2025-12-18 19:34:12,105 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_abvegaoffset_0003.rmap 1.3 K bytes (134 / 212 files) (596.6 K / 762.3 K bytes)

2025-12-18 19:34:12,194 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_nircam_0333.imap 5.7 K bytes (135 / 212 files) (597.9 K / 762.3 K bytes)

2025-12-18 19:34:12,284 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_wavelengthrange_0029.rmap 1.0 K bytes (136 / 212 files) (603.6 K / 762.3 K bytes)

2025-12-18 19:34:12,376 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_tsophot_0004.rmap 882 bytes (137 / 212 files) (604.6 K / 762.3 K bytes)

2025-12-18 19:34:12,463 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_straymask_0009.rmap 987 bytes (138 / 212 files) (605.5 K / 762.3 K bytes)

2025-12-18 19:34:12,555 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_specwcs_0047.rmap 5.9 K bytes (139 / 212 files) (606.5 K / 762.3 K bytes)

2025-12-18 19:34:12,642 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_saturation_0015.rmap 1.2 K bytes (140 / 212 files) (612.4 K / 762.3 K bytes)

2025-12-18 19:34:12,749 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_rscd_0008.rmap 1.0 K bytes (141 / 212 files) (613.6 K / 762.3 K bytes)

2025-12-18 19:34:12,837 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_resol_0006.rmap 790 bytes (142 / 212 files) (614.6 K / 762.3 K bytes)

2025-12-18 19:34:12,929 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_reset_0026.rmap 3.9 K bytes (143 / 212 files) (615.4 K / 762.3 K bytes)

2025-12-18 19:34:13,022 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_regions_0036.rmap 4.4 K bytes (144 / 212 files) (619.3 K / 762.3 K bytes)

2025-12-18 19:34:13,111 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_readnoise_0023.rmap 1.6 K bytes (145 / 212 files) (623.6 K / 762.3 K bytes)

2025-12-18 19:34:13,201 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_psfmask_0009.rmap 2.1 K bytes (146 / 212 files) (625.3 K / 762.3 K bytes)

2025-12-18 19:34:13,289 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_psf_0008.rmap 2.6 K bytes (147 / 212 files) (627.4 K / 762.3 K bytes)

2025-12-18 19:34:13,380 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_photom_0062.rmap 3.7 K bytes (148 / 212 files) (630.0 K / 762.3 K bytes)

2025-12-18 19:34:13,467 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pathloss_0005.rmap 866 bytes (149 / 212 files) (633.8 K / 762.3 K bytes)

2025-12-18 19:34:13,554 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-whitelightstep_0003.rmap 912 bytes (150 / 212 files) (634.6 K / 762.3 K bytes)

2025-12-18 19:34:13,641 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-tweakregstep_0003.rmap 1.8 K bytes (151 / 212 files) (635.5 K / 762.3 K bytes)

2025-12-18 19:34:13,734 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-tsophotometrystep_0003.rmap 2.7 K bytes (152 / 212 files) (637.4 K / 762.3 K bytes)

2025-12-18 19:34:13,827 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-spec3pipeline_0011.rmap 886 bytes (153 / 212 files) (640.0 K / 762.3 K bytes)

2025-12-18 19:34:13,930 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-spec2pipeline_0013.rmap 1.4 K bytes (154 / 212 files) (640.9 K / 762.3 K bytes)

2025-12-18 19:34:14,026 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-sourcecatalogstep_0003.rmap 1.9 K bytes (155 / 212 files) (642.3 K / 762.3 K bytes)

2025-12-18 19:34:14,115 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-resamplestep_0002.rmap 677 bytes (156 / 212 files) (644.2 K / 762.3 K bytes)

2025-12-18 19:34:14,206 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-resamplespecstep_0002.rmap 706 bytes (157 / 212 files) (644.9 K / 762.3 K bytes)

2025-12-18 19:34:14,301 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-outlierdetectionstep_0020.rmap 3.4 K bytes (158 / 212 files) (645.6 K / 762.3 K bytes)

2025-12-18 19:34:14,388 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-jumpstep_0011.rmap 1.6 K bytes (159 / 212 files) (649.0 K / 762.3 K bytes)

2025-12-18 19:34:14,477 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-image2pipeline_0010.rmap 1.1 K bytes (160 / 212 files) (650.6 K / 762.3 K bytes)

2025-12-18 19:34:14,566 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-extract1dstep_0003.rmap 807 bytes (161 / 212 files) (651.7 K / 762.3 K bytes)

2025-12-18 19:34:14,654 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-emicorrstep_0003.rmap 796 bytes (162 / 212 files) (652.5 K / 762.3 K bytes)

2025-12-18 19:34:14,749 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-detector1pipeline_0010.rmap 1.6 K bytes (163 / 212 files) (653.3 K / 762.3 K bytes)

2025-12-18 19:34:14,841 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-darkpipeline_0002.rmap 860 bytes (164 / 212 files) (654.9 K / 762.3 K bytes)

2025-12-18 19:34:14,927 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-darkcurrentstep_0002.rmap 683 bytes (165 / 212 files) (655.7 K / 762.3 K bytes)

2025-12-18 19:34:15,017 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_pars-backgroundstep_0003.rmap 814 bytes (166 / 212 files) (656.4 K / 762.3 K bytes)

2025-12-18 19:34:15,104 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mrsxartcorr_0002.rmap 2.2 K bytes (167 / 212 files) (657.2 K / 762.3 K bytes)

2025-12-18 19:34:15,198 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mrsptcorr_0005.rmap 2.0 K bytes (168 / 212 files) (659.4 K / 762.3 K bytes)

2025-12-18 19:34:15,287 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_mask_0032.rmap 7.3 K bytes (169 / 212 files) (661.3 K / 762.3 K bytes)

2025-12-18 19:34:15,375 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_linearity_0018.rmap 2.8 K bytes (170 / 212 files) (668.6 K / 762.3 K bytes)

2025-12-18 19:34:15,465 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_ipc_0008.rmap 700 bytes (171 / 212 files) (671.4 K / 762.3 K bytes)

2025-12-18 19:34:15,553 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_gain_0013.rmap 3.9 K bytes (172 / 212 files) (672.1 K / 762.3 K bytes)

2025-12-18 19:34:15,638 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_fringefreq_0003.rmap 1.4 K bytes (173 / 212 files) (676.1 K / 762.3 K bytes)

2025-12-18 19:34:15,724 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_fringe_0019.rmap 3.9 K bytes (174 / 212 files) (677.5 K / 762.3 K bytes)

2025-12-18 19:34:15,818 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_flat_0071.rmap 15.7 K bytes (175 / 212 files) (681.4 K / 762.3 K bytes)

2025-12-18 19:34:15,925 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_filteroffset_0027.rmap 2.1 K bytes (176 / 212 files) (697.1 K / 762.3 K bytes)

2025-12-18 19:34:16,012 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_extract1d_0021.rmap 1.4 K bytes (177 / 212 files) (699.2 K / 762.3 K bytes)

2025-12-18 19:34:16,102 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_emicorr_0004.rmap 663 bytes (178 / 212 files) (700.6 K / 762.3 K bytes)

2025-12-18 19:34:16,189 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_drizpars_0002.rmap 511 bytes (179 / 212 files) (701.3 K / 762.3 K bytes)

2025-12-18 19:34:16,275 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_distortion_0042.rmap 4.8 K bytes (180 / 212 files) (701.8 K / 762.3 K bytes)

2025-12-18 19:34:16,368 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_dark_0037.rmap 4.4 K bytes (181 / 212 files) (706.6 K / 762.3 K bytes)

2025-12-18 19:34:16,452 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_cubepar_0017.rmap 800 bytes (182 / 212 files) (711.0 K / 762.3 K bytes)

2025-12-18 19:34:16,535 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_bkg_0003.rmap 712 bytes (183 / 212 files) (711.8 K / 762.3 K bytes)

2025-12-18 19:34:16,619 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_area_0015.rmap 866 bytes (184 / 212 files) (712.5 K / 762.3 K bytes)

2025-12-18 19:34:16,703 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_apcorr_0022.rmap 5.0 K bytes (185 / 212 files) (713.3 K / 762.3 K bytes)

2025-12-18 19:34:16,787 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_abvegaoffset_0003.rmap 1.3 K bytes (186 / 212 files) (718.3 K / 762.3 K bytes)

2025-12-18 19:34:16,875 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_miri_0468.imap 5.9 K bytes (187 / 212 files) (719.6 K / 762.3 K bytes)

2025-12-18 19:34:16,963 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_trappars_0004.rmap 903 bytes (188 / 212 files) (725.5 K / 762.3 K bytes)

2025-12-18 19:34:17,051 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_trapdensity_0006.rmap 930 bytes (189 / 212 files) (726.4 K / 762.3 K bytes)

2025-12-18 19:34:17,138 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_superbias_0017.rmap 3.8 K bytes (190 / 212 files) (727.3 K / 762.3 K bytes)

2025-12-18 19:34:17,223 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_saturation_0009.rmap 779 bytes (191 / 212 files) (731.1 K / 762.3 K bytes)

2025-12-18 19:34:17,311 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_readnoise_0014.rmap 1.3 K bytes (192 / 212 files) (731.9 K / 762.3 K bytes)

2025-12-18 19:34:17,407 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_photom_0014.rmap 1.1 K bytes (193 / 212 files) (733.1 K / 762.3 K bytes)

2025-12-18 19:34:17,489 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_persat_0006.rmap 884 bytes (194 / 212 files) (734.2 K / 762.3 K bytes)

2025-12-18 19:34:17,579 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-tweakregstep_0002.rmap 850 bytes (195 / 212 files) (735.1 K / 762.3 K bytes)

2025-12-18 19:34:17,667 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-sourcecatalogstep_0001.rmap 636 bytes (196 / 212 files) (736.0 K / 762.3 K bytes)

2025-12-18 19:34:17,756 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-outlierdetectionstep_0001.rmap 654 bytes (197 / 212 files) (736.6 K / 762.3 K bytes)

2025-12-18 19:34:17,846 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-image2pipeline_0005.rmap 974 bytes (198 / 212 files) (737.3 K / 762.3 K bytes)

2025-12-18 19:34:17,934 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-detector1pipeline_0002.rmap 1.0 K bytes (199 / 212 files) (738.2 K / 762.3 K bytes)

2025-12-18 19:34:18,031 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_pars-darkpipeline_0002.rmap 856 bytes (200 / 212 files) (739.3 K / 762.3 K bytes)

2025-12-18 19:34:18,119 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_mask_0023.rmap 1.1 K bytes (201 / 212 files) (740.1 K / 762.3 K bytes)

2025-12-18 19:34:18,210 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_linearity_0015.rmap 925 bytes (202 / 212 files) (741.2 K / 762.3 K bytes)

2025-12-18 19:34:18,295 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_ipc_0003.rmap 614 bytes (203 / 212 files) (742.1 K / 762.3 K bytes)

2025-12-18 19:34:18,390 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_gain_0010.rmap 890 bytes (204 / 212 files) (742.7 K / 762.3 K bytes)

2025-12-18 19:34:18,478 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_flat_0009.rmap 1.1 K bytes (205 / 212 files) (743.6 K / 762.3 K bytes)

2025-12-18 19:34:18,571 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_distortion_0011.rmap 1.2 K bytes (206 / 212 files) (744.7 K / 762.3 K bytes)

2025-12-18 19:34:18,665 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_dark_0017.rmap 4.3 K bytes (207 / 212 files) (746.0 K / 762.3 K bytes)

2025-12-18 19:34:18,769 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_area_0010.rmap 1.2 K bytes (208 / 212 files) (750.3 K / 762.3 K bytes)

2025-12-18 19:34:18,862 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_apcorr_0004.rmap 4.0 K bytes (209 / 212 files) (751.4 K / 762.3 K bytes)

2025-12-18 19:34:18,946 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_abvegaoffset_0002.rmap 1.3 K bytes (210 / 212 files) (755.4 K / 762.3 K bytes)

2025-12-18 19:34:19,037 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_fgs_0124.imap 5.1 K bytes (211 / 212 files) (756.6 K / 762.3 K bytes)

2025-12-18 19:34:19,127 - CRDS - INFO - Fetching /home/runner/crds/mappings/jwst/jwst_1464.pmap 580 bytes (212 / 212 files) (761.7 K / 762.3 K bytes)

2025-12-18 19:34:19,763 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/miri/jwst_miri_pars-emicorrstep_0003.asdf 1.0 K bytes (1 / 1 files) (0 / 1.0 K bytes)

2025-12-18 19:34:19,854 - stpipe.step - INFO - PARS-EMICORRSTEP parameters found: /home/runner/crds/references/jwst/miri/jwst_miri_pars-emicorrstep_0003.asdf

2025-12-18 19:34:19,872 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/miri/jwst_miri_pars-darkcurrentstep_0001.asdf 936 bytes (1 / 1 files) (0 / 936 bytes)

2025-12-18 19:34:19,961 - stpipe.step - INFO - PARS-DARKCURRENTSTEP parameters found: /home/runner/crds/references/jwst/miri/jwst_miri_pars-darkcurrentstep_0001.asdf

2025-12-18 19:34:19,971 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/miri/jwst_miri_pars-jumpstep_0004.asdf 1.9 K bytes (1 / 1 files) (0 / 1.9 K bytes)

2025-12-18 19:34:20,061 - stpipe.step - INFO - PARS-JUMPSTEP parameters found: /home/runner/crds/references/jwst/miri/jwst_miri_pars-jumpstep_0004.asdf

2025-12-18 19:34:20,073 - CRDS - INFO - Fetching /home/runner/crds/references/jwst/miri/jwst_miri_pars-detector1pipeline_0008.asdf 1.7 K bytes (1 / 1 files) (0 / 1.7 K bytes)

2025-12-18 19:34:20,161 - stpipe.pipeline - INFO - PARS-DETECTOR1PIPELINE parameters found: /home/runner/crds/references/jwst/miri/jwst_miri_pars-detector1pipeline_0008.asdf

2025-12-18 19:34:20,179 - stpipe.step - INFO - Detector1Pipeline instance created.

2025-12-18 19:34:20,180 - stpipe.step - INFO - GroupScaleStep instance created.

2025-12-18 19:34:20,181 - stpipe.step - INFO - DQInitStep instance created.

2025-12-18 19:34:20,182 - stpipe.step - INFO - EmiCorrStep instance created.

2025-12-18 19:34:20,182 - stpipe.step - INFO - SaturationStep instance created.

2025-12-18 19:34:20,183 - stpipe.step - INFO - IPCStep instance created.

2025-12-18 19:34:20,184 - stpipe.step - INFO - SuperBiasStep instance created.

2025-12-18 19:34:20,185 - stpipe.step - INFO - RefPixStep instance created.

2025-12-18 19:34:20,186 - stpipe.step - INFO - RscdStep instance created.

2025-12-18 19:34:20,186 - stpipe.step - INFO - FirstFrameStep instance created.

2025-12-18 19:34:20,187 - stpipe.step - INFO - LastFrameStep instance created.

2025-12-18 19:34:20,188 - stpipe.step - INFO - LinearityStep instance created.

2025-12-18 19:34:20,189 - stpipe.step - INFO - DarkCurrentStep instance created.

2025-12-18 19:34:20,189 - stpipe.step - INFO - ResetStep instance created.

2025-12-18 19:34:20,190 - stpipe.step - INFO - PersistenceStep instance created.

2025-12-18 19:34:20,191 - stpipe.step - INFO - ChargeMigrationStep instance created.

2025-12-18 19:34:20,192 - stpipe.step - INFO - JumpStep instance created.

2025-12-18 19:34:20,193 - stpipe.step - INFO - CleanFlickerNoiseStep instance created.

2025-12-18 19:34:20,194 - stpipe.step - INFO - RampFitStep instance created.

2025-12-18 19:34:20,195 - stpipe.step - INFO - GainScaleStep instance created.

2025-12-18 19:34:20,196 - stpipe.step - INFO - XplyStep instance created.

2025-12-18 19:34:20,330 - stpipe.step - INFO - Step Detector1Pipeline running with args (np.str_('/home/runner/work/jwst-pipeline-notebooks/jwst-pipeline-notebooks/notebooks/MIRI/Coronagraphy/miri_coro_demo_data/Obs008/uncal/jw01386008001_04101_00001_mirimage_uncal.fits'),).

2025-12-18 19:34:20,351 - stpipe.step - INFO - Step Detector1Pipeline parameters are:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: ./miri_coro_demo_data/Obs008/stage1

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: True

skip: False

suffix: None

search_output_file: True

input_dir: ''

save_calibrated_ramp: False

steps:

group_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dq_init:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

emicorr:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

algorithm: joint

nints_to_phase: None

nbins: None

scale_reference: True

onthefly_corr_freq: None

use_n_cycles: 3

fit_ints_separately: False

user_supplied_reffile: None

save_intermediate_results: False

saturation:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

n_pix_grow_sat: 1

use_readpatt: True

ipc:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

superbias:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

refpix:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

odd_even_columns: True

use_side_ref_pixels: True

side_smoothing_length: 11

side_gain: 1.0

odd_even_rows: True

ovr_corr_mitigation_ftr: 3.0

preserve_irs2_refpix: False

irs2_mean_subtraction: False

refpix_algorithm: median

sigreject: 4.0

gaussmooth: 1.0

halfwidth: 30

rscd:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

firstframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

bright_use_group1: True

lastframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

linearity:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_current:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_output: None

average_dark_current: 1.0

reset:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

persistence:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

input_trapsfilled: ''

flag_pers_cutoff: 40.0

save_persistence: False

save_trapsfilled: True

modify_input: False

charge_migration:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

signal_threshold: 25000.0

jump:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

rejection_threshold: 4.0

three_group_rejection_threshold: 6.0

four_group_rejection_threshold: 5.0

maximum_cores: half

flag_4_neighbors: True

max_jump_to_flag_neighbors: 1000

min_jump_to_flag_neighbors: 30

after_jump_flag_dn1: 500

after_jump_flag_time1: 15

after_jump_flag_dn2: 1000

after_jump_flag_time2: 3000

expand_large_events: False

min_sat_area: 1

min_jump_area: 0

expand_factor: 0

use_ellipses: False

sat_required_snowball: False

min_sat_radius_extend: 0.0

sat_expand: 0

edge_size: 0

mask_snowball_core_next_int: True

snowball_time_masked_next_int: 4000

find_showers: False

max_shower_amplitude: 4.0

extend_snr_threshold: 3.0

extend_min_area: 50

extend_inner_radius: 1

extend_outer_radius: 2.6

extend_ellipse_expand_ratio: 1.1

time_masked_after_shower: 30

min_diffs_single_pass: 10

max_extended_radius: 200

minimum_groups: 3

minimum_sigclip_groups: 100

only_use_ints: True

clean_flicker_noise:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''