BOTS Time Series Observations#

Use case: Bright Object Time Series; extracting exoplanet spectra.

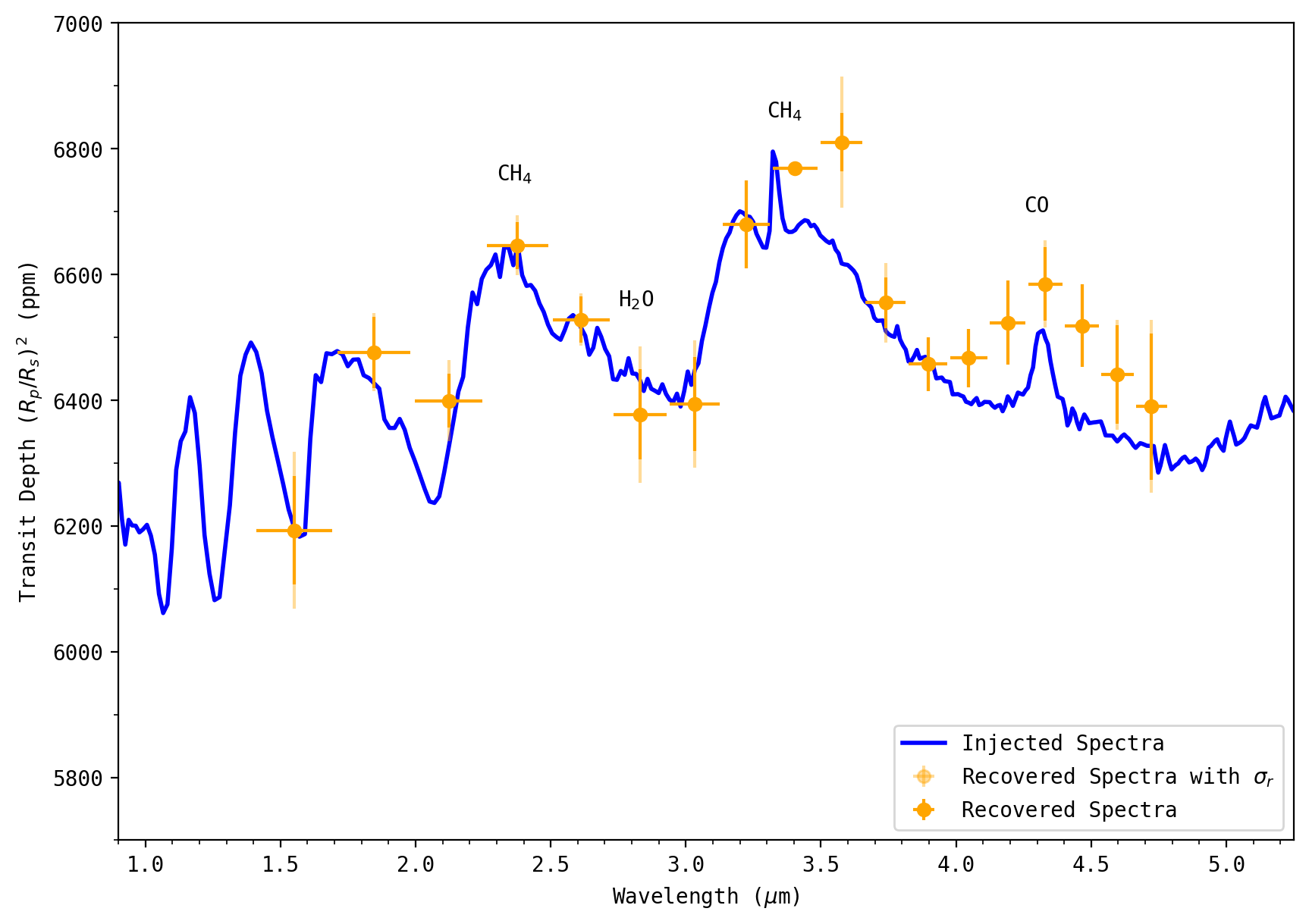

Data: Simulated JWST NIRSpec data from a ground-based test campaign; GJ436b spectrum from Goyal et al. (2018).

Tools: scikit-learn, lmfit, scipy, matplotlib, astropy, pandas.

Cross-instrument: .

Documentation: This notebook is part of STScI’s larger post-pipeline Data Analysis Tools Ecosystem.

Author: David K. Sing (dsing@jhu.edu)

Last updated: 2 July 2020

Introduction#

This notebook uses time series JWST NIRSpec data taken during a ground-based campaign to illustrate extracting exoplanet spectra from time-series observations.

The data are derived from ISIM-CV3, the cryovacuum campaign of the JWST Integrated Science Instrument Module (ISIM), which took place at Goddard Space Flight Center during the winter of 2015-2016 (Kimble et al. 2016). The data can be found at https://www.cosmos.esa.int/web/jwst-nirspec/test-data, and a detailed and insightful report on the data by G. Giardino, S. Birkmann, P. Ferruit, B. Dorner, and B. Rauscher can be found here: ftp://ftp.cosmos.esa.int/jwstlib/ReleasedCV3dataTimeSeries/CV3_TimeSeries_PRM.tgz

This NIRSpec time series dataset has had a transit light curve injected at the pixel level, which closely mimics a bright object time series (BOTS) observation of a transiting exoplanet. In this case, a GJ436b spectrum from the Goyal et al. (2018) exoplanet grid was selected (clear atmosphere at solar metallicity). With an actual NIRSpec dataset, the noise properties of the detector, jitter, and the effects on extracting exoplanet spectra from time-series observations can be more accurately simulated.

Broadly the aim of this notebook is to work with these time series observations to:

Extract 1D spectra from the 2D spectral images.

Define a time series model to fit to the wavelength dependent transit light curve.

Fit each time series wavelength bin of the 1D spectra, measuring the desired quantity \(R_{pl}(\lambda)/R_{star}\).

Produce a measured transmission spectrum that can then be compared to models.

The example outputs the fit light curves for each spectral bin, along with fitting statistics.
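As a rough guide to the size of the signal being measured, the transit depth is approximately the square of the radius ratio when limb darkening is ignored. The short sketch below uses the radius ratio adopted later in the setup cell (rp = 0.0804); the variable names are illustrative only and are not used elsewhere in the notebook.

```python
# Approximate transit depth from the planet-to-star radius ratio.
# Assumption of this sketch: limb darkening is ignored, so depth = (R_pl/R_star)^2.
rp_over_rstar = 0.0804             # R_pl / R_star, value used in the setup cell
depth = rp_over_rstar ** 2         # fractional drop in flux at mid-transit
depth_ppm = depth * 1e6            # same quantity in parts per million

print(f"Approximate transit depth: {depth:.6f} ({depth_ppm:.0f} ppm)")
```

The wavelength dependence of this depth, at the level of tens to hundreds of ppm, is what the transmission spectrum measures.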

Load packages#

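This notebook uses several third-party packages (numpy, matplotlib, astropy, scipy, lmfit, pandas, and sklearn, which is installed as scikit-learn), all of which can be installed in a standard fashion through pip. The glob, pickle, os, and shutil modules are part of the Python standard library.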

Several routines to calculate limb-darkening and a transit model were extracted from ExoTiC-ISM (Laginja & Wakeford 2020; https://github.com/hrwakeford/ExoTiC-ISM), and slightly adapted. The full set of stellar models used for the limb-darkening calculation can also be downloaded from ExoTiC-ISM, as this notebook only downloads and loads the single stellar model used to generate the limb darkening.

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.ticker as ticker

from matplotlib.backends.backend_pdf import PdfPages

from astropy.utils.data import download_file

from astropy.table import Table

from astropy.io import fits, ascii

from astropy.modeling.models import custom_model

from astropy.modeling.fitting import LevMarLSQFitter

from scipy.interpolate import interp1d, splev, splrep

from scipy.io import readsav

from scipy import stats

import glob

import lmfit

import pickle

from os import path, mkdir

from sklearn.linear_model import LinearRegression

import pandas as pd

import os

import shutil

Setup Parameters#

Parameters of the fit include directories where the data and limb darkening stellar models are held, along with properties of the planet and star. The stellar and planet values that have been entered here (modeled after GJ436) are the same as those used to model the injected transit. Note that the 4500K stellar model used to inject the transit is hotter than GJ436A.

# SETUP ----------------------------------------------

# Setup directories

save_directory = './notebookrun2/' # Local directory to save files to

data_directory = './' # Local data to work with fits files if desired

# Setup Detector Properties & Rednoise measurement timescale

gain = 1.0 # 2D spectra have already been converted to counts, so the detector gain is 1.0

binmeasure = 256 # Binning technique to measure rednoise, choose bin size to evaluate sigma_r

number_of_images = 8192 # Number of images in the dataset

# Setup Planet Properties

grating = 'NIRSpecPrism'

ld_model = '3D' # 3D/1D stellar model choice (transit was injected with the 3D model)

# Setup Stellar Properties for Limb-Darkening Calculation

Teff = 4500 # Effective Temperature (K)

logg = 4.5 # Surface Gravity

M_H = 0.0 # Stellar Metallicity log_10[M/H]

Rstar = 0.455 # Stellar radius (in units of solar radii, Rsun)

# Setup Transit parameters (can get from NASA exoplanet archive)

t0 = 2454865.084034 # time of inferior conjunction (BJD_TDB)

per = 2.64389803 # orbital period (days)

rp = 0.0804 # Planet radius (in units of stellar radii)

a_Rs = 14.54 # Semi-major axis (input a/Rstar so units of stellar radii)

inc = 86.858 * (2*np.pi/360) # Orbital inclination (in degrees->radians)

ecc = 0.0 # Eccentricity

omega = 0.0 * (2*np.pi/360) # Longitude of periastron (in degrees->radians)

rho_star = (3*np.pi)/(6.67259E-8*(per*86400)**2)*(a_Rs)**3 # Stellar Density (g/cm^3) from a/Rs

# a_Rs=(rho_star*6.67259E-8*per_sec*per_sec/(3*np.pi))**(1/3) # a/Rs from Stellar Density (g/cm^3)

# Create local directories

if not path.exists(save_directory):

    mkdir(save_directory) # Create a new directory to save outputs to if needed

if not path.exists(save_directory+'3DGrid'):

    mkdir(save_directory+'3DGrid') # Create a new directory to hold the 3D stellar model grid

limb_dark_directory = save_directory # Point to limb darkening directory containing 3DGrid/ directory
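The stellar density line in the setup follows from Kepler's third law under the assumption that the planet mass is negligible compared with the stellar mass. The sketch below repeats that calculation and inverts it again as a consistency check. The name `per_sec` (the period in seconds) is defined here for illustration; the commented-out inverse relation in the setup cell assumes such a variable exists.

```python
import numpy as np

G_CGS = 6.67259e-8          # gravitational constant in cgs units, as in the setup cell
per = 2.64389803            # orbital period (days)
a_Rs = 14.54                # semi-major axis in units of the stellar radius
per_sec = per * 86400.0     # orbital period in seconds (illustrative helper name)

# Stellar density (g/cm^3) from a/Rs, assuming M_planet << M_star
rho_star = (3 * np.pi) / (G_CGS * per_sec**2) * a_Rs**3

# Inverse relation: recover a/Rs from the stellar density
a_Rs_back = (rho_star * G_CGS * per_sec**2 / (3 * np.pi)) ** (1 / 3)

print(f"rho_star = {rho_star:.3f} g/cm^3, recovered a/Rs = {a_Rs_back:.4f}")
```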

Download and Load NIRSpec data#

The fits images are loaded, and information including the image date and science spectra are saved.

A default flux offset value BZERO is also taken from the header and subtracted from every science frame.

Reading in the 2^13 fits files is slow. To speed things up, a pickle file was created the first time the fits images were loaded. If found, this 1GB pickle file is loaded instead of reading the fits files.
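The caching pattern described here can be sketched in a few lines. This is a generic illustration under stated assumptions, not the notebook's loader: `load_or_build` and `slow_build` are hypothetical names, and a small dictionary in a temporary directory stands in for the real data.

```python
import os
import pickle
import tempfile

import numpy as np


def load_or_build(cache_path, build_func):
    """Return (data, loaded_from_cache). Build and cache the data if no cache exists."""
    if os.path.exists(cache_path):
        with open(cache_path, 'rb') as fh:      # binary mode is required for pickle
            return pickle.load(fh), True
    data = build_func()
    with open(cache_path, 'wb') as fh:          # 'wb' overwrites rather than appends
        pickle.dump(data, fh)
    return data, False


def slow_build():
    """Hypothetical stand-in for reading thousands of FITS files."""
    return {'bjd': np.arange(4.0), 'all_spec': np.zeros((2, 3, 4))}


cache_file = os.path.join(tempfile.mkdtemp(), 'demo_cache.pickle')
first, from_cache_1 = load_or_build(cache_file, slow_build)    # builds and saves
second, from_cache_2 = load_or_build(cache_file, slow_build)   # loads from disk
print(from_cache_1, from_cache_2)
```

Only unpickle files from a trusted source, since loading a pickle can execute arbitrary code.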

Alternatively, the fits files can be downloaded here: https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/Archive.Trace_SLIT_A_1600_SRAD-PRM-PS-6007102143_37803_JLAB88_injected.tar.gz. The images are in a tar.gz archive, which needs to be un-archived, and the data_directory variable in the SETUP cell above needs to be set to the resulting directory.

The cell below downloads the 1GB JWST data pickle file, and several other files needed.

# Download 1GB NIRSpec Data

fn_jw = save_directory+'jwst_data.pickle'

if not path.exists(fn_jw):

    fn = download_file('https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/jwst_data.pickle')

    dest = shutil.move(fn, save_directory+'jwst_data.pickle')

    print('JWST Data Download Complete')

# Download further files needed, move to local directory for easier repeated access

fn_sens = download_file('https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/NIRSpec.prism.sensitivity.sav')

dest = shutil.move(fn_sens, save_directory+'NIRSpec.prism.sensitivity.sav') # Move files to save_directory

fn_ld = download_file('https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/3DGrid/mmu_t45g45m00v05.flx')

destld = shutil.move(fn_ld, save_directory+'3DGrid/mmu_t45g45m00v05.flx')

JWST Data Download Complete

The cell below loads the pickle file data. Alternatively, the data can be read from the original fits files.

if path.exists(fn_jw):

    dbfile = open(fn_jw, 'rb') # Binary mode is required when reading a pickle file

    jwst_data = pickle.load(dbfile)

    print('Loading JWST data from Pickle File')

    bjd = jwst_data['bjd']

    wsdata_all = jwst_data['wsdata_all']

    shx = jwst_data['shx']

    shy = jwst_data['shy']

    common_mode = jwst_data['common_mode']

    all_spec = jwst_data['all_spec']

    exposure_length = jwst_data['exposure_length']

    dbfile.close()

    print('Done')

else:

    # Load all fits images

    # Arrays created for BJD time, and the white light curve total_counts

    file_list = glob.glob(data_directory+"*.fits") # Named file_list to avoid shadowing the built-in list

    index_of_images = np.arange(number_of_images)

    bjd = np.zeros((number_of_images))

    exposure_length = np.zeros((number_of_images))

    all_spec = np.zeros((32, 512, number_of_images))

    for i in index_of_images:

        img = file_list[i]

        print(img)

        hdul = fits.open(img)

        # hdul.info()

        bjd_image = hdul[0].header['BJD_TDB']

        BZERO = hdul[0].header['BZERO'] # flux value offset

        bjd[i] = bjd_image

        expleng = hdul[0].header['INTTIME'] # Total integration time for one MULTIACCUM (seconds)

        exposure_length[i] = expleng/86400. # Total integration time for one MULTIACCUM (days)

        print(bjd[i])

        data = hdul[0].data

        # total counts in image

        # total_counts[i]=gain*np.sum(data[11:18,170:200]-BZERO) # total counts in 12 pix wide aperture around pixel 60 image

        all_spec[:, :, i] = gain * (data-BZERO) # Load all spectra into an array, subtracting the flux value offset

        hdul.close()

    # Sort data

    srt = np.argsort(bjd) # index to sort

    bjd = bjd[srt]

    # total_counts=total_counts[srt]

    exposure_length = exposure_length[srt]

    all_spec[:, :, :] = all_spec[:, :, srt]

    # Get Wavelength of Data

    file_wave = download_file('https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/JWST_NIRSpec_wavelength_microns.txt')

    f = open(file_wave, 'r')

    wsdata_all = np.genfromtxt(f)

    print('wsdata size :', wsdata_all.shape)

    print('Data wavelength Loaded :', wsdata_all)

    print('wsdata new size :', wsdata_all.shape)

    # Read in Detrending parameters

    # Mean of parameter must be 0.0 to be properly normalized

    # Ideally standard deviation of parameter = 1.0

    file_xy = download_file('https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/transit_spectroscopy_notebook/JWST_NIRSpec_Xposs_Yposs_CM_detrending.txt')

    f = open(file_xy, 'r')

    data = np.genfromtxt(f, delimiter=',')

    shx = data[:, 0]

    shy = data[:, 1]

    common_mode = data[:, 2]

    # Store Data in a pickle file

    jwst_data = {'bjd': bjd, 'wsdata_all': wsdata_all, 'shx': shx, 'shy': shy, 'common_mode': common_mode, 'all_spec': all_spec, 'exposure_length': exposure_length}

    dbfile = open(fn_jw, 'wb') # Binary write mode; saved to fn_jw, where the check above looks for the file

    pickle.dump(jwst_data, dbfile)

    dbfile.close()

Loading JWST data from Pickle File

Done

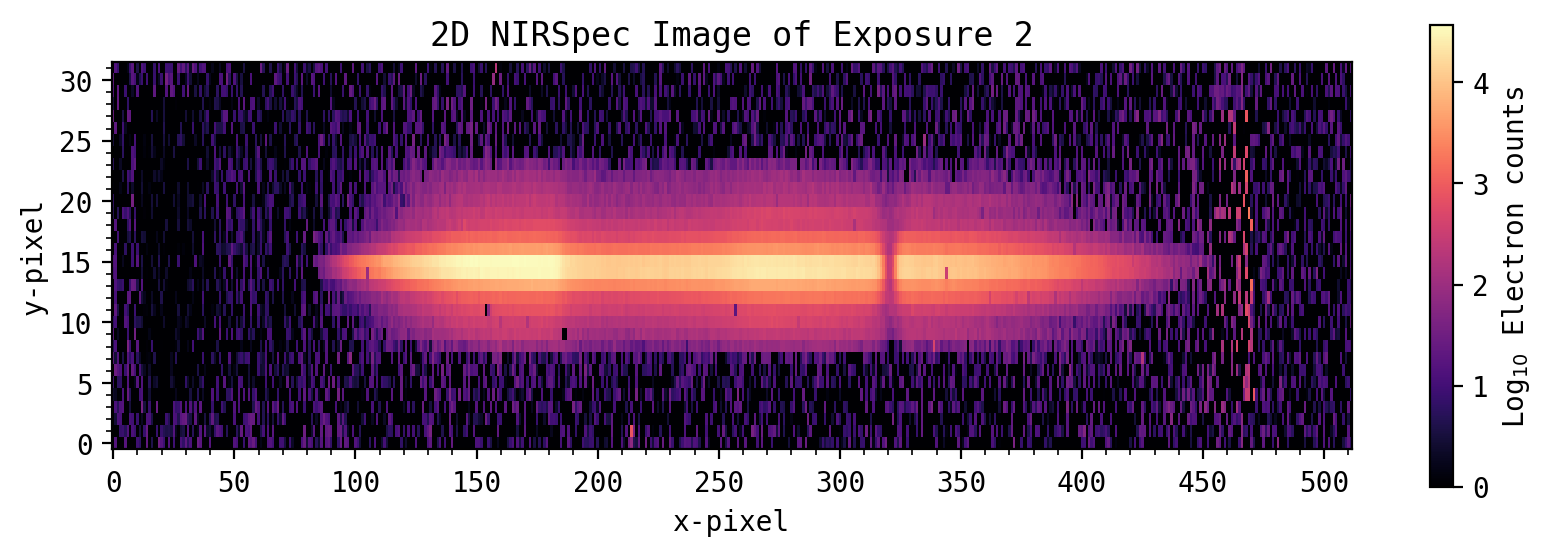

Visualizing the 2D spectral data#

expnum = 2 # Choose Exposure number to view

plt.rcParams['figure.figsize'] = [10.0, 3.0] # Figure dimensions

plt.rcParams['figure.dpi'] = 200 # Resolution

plt.rcParams['savefig.dpi'] = 200

plt.rcParams['image.aspect'] = 5 # Aspect ratio (the detector subarray is quite long)

plt.cmap = plt.cm.magma

plt.cmap.set_bad('k', 1.)

plt.rcParams['image.cmap'] = 'magma' # Colormap

plt.rcParams['image.interpolation'] = 'none'

plt.rcParams['image.origin'] = 'lower'

plt.rcParams['font.family'] = "monospace"

plt.rcParams['font.monospace'] = 'DejaVu Sans Mono'

img = all_spec[:, :, expnum].copy() # Copy, so the clipping below does not modify all_spec itself

zeros = np.where(img <= 0) # Plot on a log scale, so set zero or negative values to a small number

img[zeros] = 1E-10

fig, axs = plt.subplots()

f = axs.imshow(np.log10(img), vmin=0) # Plot image

plt.xlabel('x-pixel')

plt.ylabel('y-pixel')

axs.yaxis.set_major_locator(ticker.MultipleLocator(5))

axs.yaxis.set_minor_locator(ticker.MultipleLocator(1))

axs.xaxis.set_major_locator(ticker.MultipleLocator(50))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(10))

plt.title('2D NIRSpec Image of Exposure ' + str(expnum))

fig.colorbar(f, label='Log$_{10}$ Electron counts', ax=axs)

plt.show()

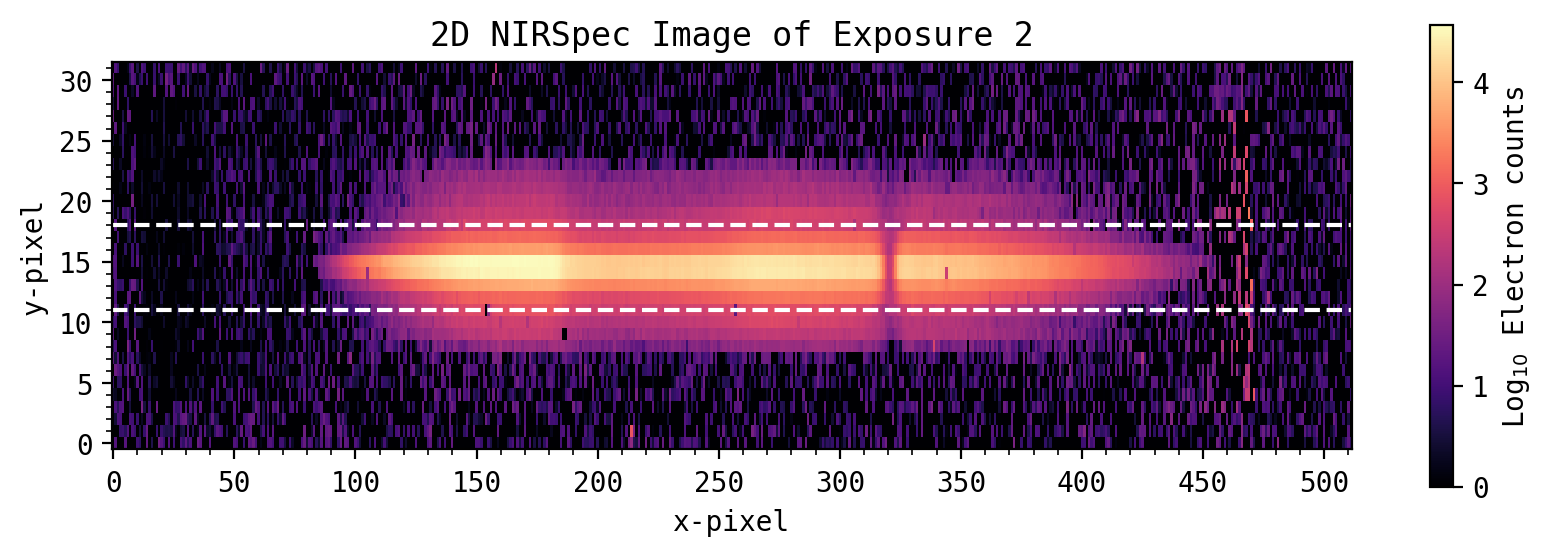
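A general NumPy point is relevant when clipping values for a log-scale display: basic slicing returns a view, so assigning into the slice also changes the parent array unless a copy is taken first. The sketch below demonstrates this on a small hypothetical cube (the array and names are made up for illustration).

```python
import numpy as np

# Hypothetical (y, x, exposure) cube containing some non-positive values
cube = np.arange(24, dtype=float).reshape(2, 3, 4) - 5.0
original = cube.copy()

# Clipping a copy leaves the parent array untouched
img_copy = cube[:, :, 0].copy()
img_copy[img_copy <= 0] = 1e-10
unchanged_after_copy = np.array_equal(cube, original)

# Clipping a view writes through to the parent array
img_view = cube[:, :, 1]
img_view[img_view <= 0] = 1e-10
changed_after_view = not np.array_equal(cube, original)

print(unchanged_after_copy, changed_after_view)
```

For display purposes only, taking a copy keeps the science array intact for the later extraction steps.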

Extract 1D spectra from 2D array of images#

Ideally, extracting 1D spectra from the 2D images would use optimal aperture extraction along a fit trace with routines equivalent to IRAF/apall. This functionality is not yet available in astropy.

Several processing steps have already been applied. The 2D spectra here have been flat field corrected, and 1/f noise has been removed from each pixel by subtracting the median count rate from the un-illuminated pixels along each column (see Giardino et al. for more information about 1/f noise). Each 2D image has also been aligned in the X and Y directions, such that each pixel corresponds to the same wavelength. As the CV3 test had no requirements for flux stability, the ~1% flux variations from the LED have also been removed.

For spectral extraction, the example here uses a simple summed box. The 8192 2D spectra have been pre-loaded into a numpy array. The spectrum peaks at pixel Y=16. For each column, an aperture sum is taken over Y-axis pixels 11 to 18, which contains most of the spectrum counts. Note that a Python slice excludes its upper index, so the slice 11:18 used in the code sums rows 11 through 17. A wider aperture would add more counts, but would also introduce more noise.
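The box extraction can be illustrated on a small synthetic cube. This is a minimal sketch with made-up data (a cube of ones), intended only to show the array shapes and the end-exclusive behaviour of the slice.

```python
import numpy as np

ny, nx, nt = 32, 8, 3                     # rows, columns, exposures (toy sizes)
cube = np.ones((ny, nx, nt))              # synthetic stand-in for the 2D spectra

y_lower, y_upper = 11, 18                 # aperture limits, as in the notebook

# Sum over the spatial (Y) axis; the slice covers rows y_lower .. y_upper-1
spec_1d = np.sum(cube[y_lower:y_upper, :, :], axis=0)

# Including row y_upper itself requires y_upper + 1 as the slice end
spec_1d_inclusive = np.sum(cube[y_lower:y_upper + 1, :, :], axis=0)

print(spec_1d.shape, spec_1d[0, 0], spec_1d_inclusive[0, 0])
```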

Further cleaning steps are not done here:

Ideally, the pixels flagged as bad for various reasons should be cleaned.

Cosmic rays should be identified and removed.
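One common way to flag cosmic-ray hits in a time series of images is to compare each pixel with its own median over time. The sketch below is an assumption-laden illustration and not part of this notebook's processing: it uses synthetic data, a median absolute deviation (MAD) noise estimate, and an arbitrary 8-sigma threshold chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
cube = rng.normal(100.0, 1.0, size=(4, 5, 200))   # synthetic (y, x, time) cube
cube[2, 3, 50] += 500.0                           # inject one fake cosmic-ray hit

# Robust per-pixel location and scale along the time axis
med = np.median(cube, axis=2, keepdims=True)
mad = np.median(np.abs(cube - med), axis=2, keepdims=True)
sigma = 1.4826 * mad                              # MAD to Gaussian sigma conversion

# Flag samples deviating by more than 8 sigma and replace them with the median
flagged = np.abs(cube - med) > 8.0 * sigma
cleaned = np.where(flagged, med, cube)

print("Number of flagged samples:", int(flagged.sum()))
```

With real transit data the threshold should be large compared with the transit depth in units of the per-pixel noise, so that in-transit points are not flagged.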

all_spec.shape

y_lower = 11 # Lower extraction aperture

y_upper = 18 # Upper extraction aperture

all_spec_1D = np.sum(all_spec[y_lower:y_upper, :, :], axis=0) # Sum along Y-axis over rows y_lower to y_upper-1 (the slice excludes y_upper)

# Plot

plt.rcParams['figure.figsize'] = [10.0, 3.0] # Figure dimensions

plt.rcParams['figure.dpi'] = 200 # Resolution

plt.rcParams['savefig.dpi'] = 200

plt.rcParams['image.aspect'] = 5 # Aspect ratio (the detector subarray is quite long)

plt.cmap = plt.cm.magma

plt.cmap.set_bad('k', 1.)

plt.rcParams['image.cmap'] = 'magma' # Colormap

plt.rcParams['image.interpolation'] = 'none'

plt.rcParams['image.origin'] = 'lower'

plt.rcParams['font.family'] = "monospace"

plt.rcParams['font.monospace'] = 'DejaVu Sans Mono'

img = all_spec[:, :, expnum].copy() # Copy, so the clipping below does not modify all_spec itself

zeros = np.where(img <= 0) # Plot on a log scale, so set zero or negative values to a small number

img[zeros] = 1E-10

fig, axs = plt.subplots()

f = axs.imshow(np.log10(img), vmin=0) # Plot image

plt.xlabel('x-pixel')

plt.ylabel('y-pixel')

axs.yaxis.set_major_locator(ticker.MultipleLocator(5))

axs.yaxis.set_minor_locator(ticker.MultipleLocator(1))

axs.xaxis.set_major_locator(ticker.MultipleLocator(50))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(10))

plt.axhline(y_lower, color='w', ls='dashed')

plt.axhline(y_upper, color='w', ls='dashed')

plt.title('2D NIRSpec Image of Exposure '+str(expnum))

fig.colorbar(f, label='Log$_{10}$ Electron counts', ax=axs)

plt.show()

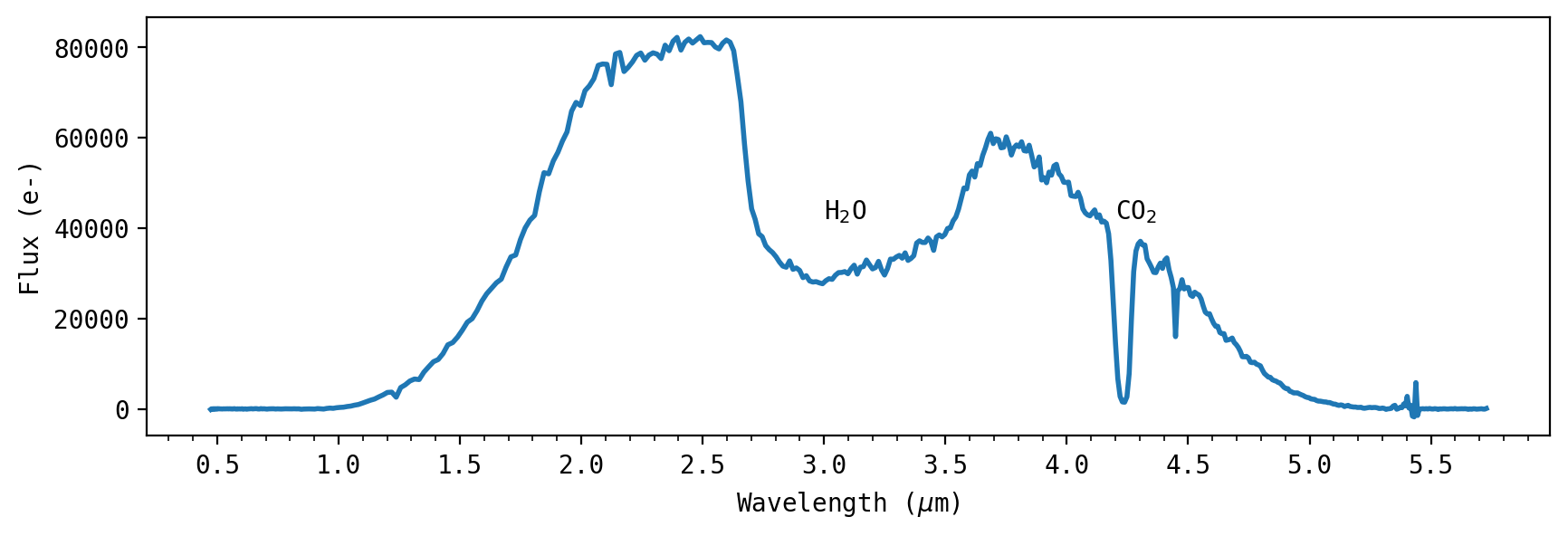

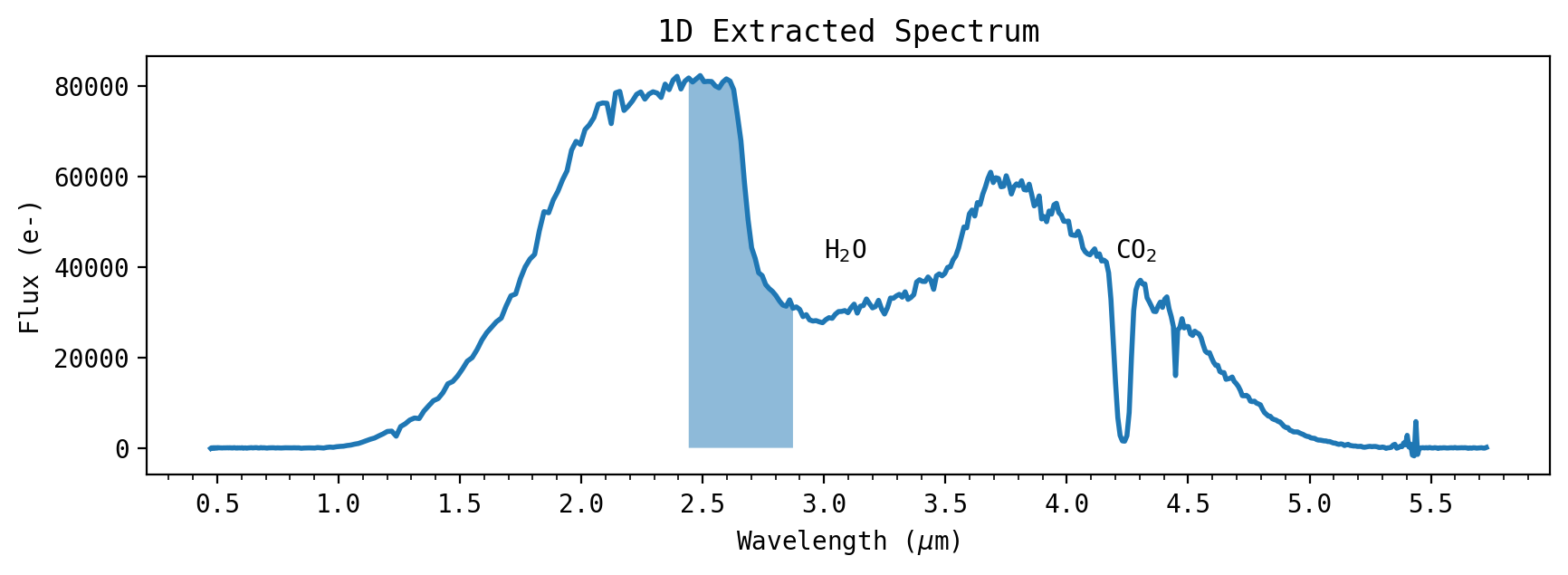

Visualizing the 1D spectral data#

fig, axs = plt.subplots()

f = plt.plot(wsdata_all, all_spec_1D[:, 0], linewidth=2, zorder=0) # Plot the 1D extracted spectrum of the first exposure

plt.xlabel(r'Wavelength ($\mu$m)')

plt.ylabel('Flux (e-)')

axs.xaxis.set_major_locator(ticker.MultipleLocator(0.5))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(0.1))

plt.annotate('H$_2$O', xy=(3.0, 42000))

plt.annotate('CO$_2$', xy=(4.2, 42000))

plt.show()

The CV3 test observed a lamp with a similar PSF as JWST will have, and has significant counts from about 1.5 to 4.5 \(\mu\)m.

The cryogenic test chamber had CO\(_2\) and H\(_2\)O ice buildup on the window, which can be seen as spectral absorption features in the 2D spectra.

Calculate Orbital Phase and a separate fine grid model used for plotting purposes#

# Calculate Orbital Phase

phase = (bjd-t0) / (per) # orbital phase (in units of the period) relative to the T0 ephemeris

n_orbits = np.fix(phase[number_of_images-1]) # Whole number of orbits since T0, stored before phase is overwritten

phase = phase - n_orbits # Have current phase occur at value 0.0

t_fine = np.linspace(np.min(bjd), np.max(bjd), 1000) # times at which to calculate light curve

phase_fine = (t_fine-t0)/(per) # orbital phase (in units of the period) relative to the T0 ephemeris

phase_fine = phase_fine - n_orbits # Have current phase occur at value 0.0

b0 = a_Rs * np.sqrt((np.sin(phase * 2 * np.pi)) ** 2 + (np.cos(inc) * np.cos(phase * 2 * np.pi)) ** 2)

intransit = (b0-rp < 1.0E0).nonzero() # Select indices between first and fourth contact

outtransit = (b0-rp > 1.0E0).nonzero() # Select indices out of transit

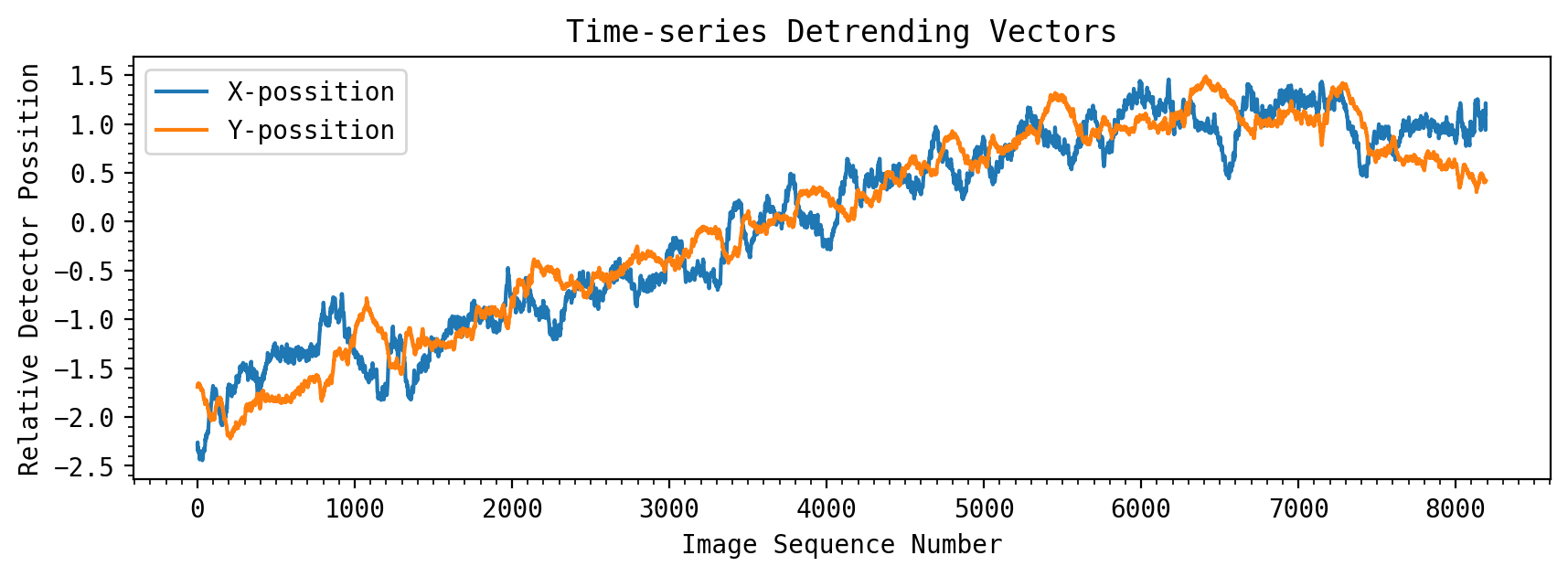
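The in-transit selection can be cross-checked against the standard analytic expression for the total (first to fourth contact) transit duration of a circular orbit. The sketch below uses the system parameters from the setup cell; the phase grid and variable names are assumptions made for this illustration.

```python
import numpy as np

per = 2.64389803                 # orbital period (days)
rp = 0.0804                      # planet radius in stellar radii
a_Rs = 14.54                     # semi-major axis in stellar radii
inc = np.deg2rad(86.858)         # orbital inclination (radians)

b = a_Rs * np.cos(inc)           # impact parameter at mid-transit (circular orbit)

# Projected planet-star separation on a fine phase grid around mid-transit
phase = np.linspace(-0.05, 0.05, 200001)
b0 = a_Rs * np.sqrt(np.sin(2 * np.pi * phase) ** 2
                    + (np.cos(inc) * np.cos(2 * np.pi * phase)) ** 2)
in_transit = b0 - rp < 1.0       # same criterion as used for 'intransit'

# Duration from counting grid points, and from the analytic formula
t14_numeric = in_transit.sum() * (phase[1] - phase[0]) * per
t14_analytic = per / np.pi * np.arcsin(
    np.sqrt((1 + rp) ** 2 - b ** 2) / (a_Rs * np.sin(inc)))

print(f"b = {b:.3f}, T14 numeric = {t14_numeric:.5f} d, analytic = {t14_analytic:.5f} d")
```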

Dealing with Systematic Drift On the Detector#

The CV3 test assessed the stability of the instrument by introducing a large spatial jitter and drift. This resulted in a significant X,Y movement of the spectra on the 2D detector. While this bulk shift has been removed which aligns the spectra, intra- and inter- pixel sensitivities introduce flux variations which need to be removed. The jitter from the CV3 test was more than 30 mas, which is ~4X larger than the JWST stability requirement. Thus, in orbit these detector effects are expected to be significantly smaller, but they will still be present and will need to be modeled and removed from time series observations.

The detector X, Y positions here were measured from cross-correlation of the 2D images (collapsing the spectra along one dimension first), and are saved in arrays \(shx\) and \(shy\). These detrending vectors would ideally be measured using the trace position values from the spectral extraction of each integration, as that could also accurately measure how the spectra spatially changed on the detector from integration to integration.

The detector shifts have original amplitudes near 0.2 pixels, though the vectors have had initial normalization. For detrending purposes, these arrays should have a mean of 0 and standard deviation of 1.0.
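The normalization to zero mean and unit standard deviation can be sketched as follows. The helper name `standardize` and the synthetic position vector are assumptions for this example. The sketch also checks that dividing by the mean before subtracting 1.0, as done in the notebook's code, gives the same result when the mean of the vector is positive.

```python
import numpy as np


def standardize(v):
    """Return v shifted to zero mean and scaled to unit standard deviation."""
    v = np.asarray(v, dtype=float)
    return (v - v.mean()) / v.std()


rng = np.random.default_rng(1)
shift = 16.0 + 0.2 * rng.standard_normal(1000)   # hypothetical pixel positions

z = standardize(shift)

# Ratio form: identical to z for a positive mean, since the overall scale cancels
tmp = shift / np.mean(shift) - 1.0
z_ratio = tmp / np.std(tmp)

print(f"mean = {z.mean():.2e}, std = {z.std():.3f}")
```

The ratio form flips sign for a negative mean and becomes unstable when the mean is close to zero, so the subtraction form is the safer choice for vectors that are already centered.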

A residual color-dependent trend with the LED lamp can also be seen in the CV3 data, which can be partly removed by scaling the original common-mode lamp trend, which was measured using the CV3 white light curve.

shx_tmp = shx / np.mean(shx) - 1.0E0 # Set Mean around 0.0

shx_detrend = shx_tmp/np.std(shx_tmp) # Set standard deviation to 1.0

shy_tmp = shy / np.mean(shy) - 1.0E0 # Set Mean around 0.0

shy_detrend = shy_tmp/np.std(shy_tmp) # Set standard deviation to 1.0

cm = common_mode / np.mean(common_mode) - 1.0E0

cm_detrend = cm/np.std(cm)

fig, axs = plt.subplots()

plt.plot(shx_detrend, label='X-position')

plt.plot(shy_detrend, label='Y-position')

plt.xlabel('Image Sequence Number')

plt.ylabel('Relative Detector Position')

plt.title('Time-series Detrending Vectors')

axs.xaxis.set_major_locator(ticker.MultipleLocator(1000))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(100))

axs.yaxis.set_major_locator(ticker.MultipleLocator(0.5))

axs.yaxis.set_minor_locator(ticker.MultipleLocator(0.1))

plt.legend()

plt.show()

Create arrays of the vectors used for detrending.#

From Sing et al. 2019: Systematic errors are often removed by a parameterized deterministic model, where the non-transit photometric trends are found to correlate with a number \(n\) of external parameters (or optical state vectors, \(X\)). These parameters describe changes in the instrument or other external factors as a function of time during the observations, and are fit with a coefficient for each optical state parameter, \(p_n\), to model and remove (or detrend) the photometric light curves.

When including systematic trends, the total parameterized model of the flux measurements over time, \(f(t)\), can be modeled as a combination of the theoretical transit model, \(T(t,\theta)\) (which depends upon the transit parameters \(\theta\)), the total baseline flux detected from the star, \(F_0\), and the systematics error model \(S(x)\) giving,

\(f(t) = T(t,\theta)\times F_0 \times S(x)\).

We will use a linear model for the instrument systematic effects.

\(S(x)= p_1 x + p_2 y + p_3 x^2 + p_4 y^2 + p_5 x y + p_6 cm + p_7 \phi \)

\(cm\) is the common_mode trend, and \(\phi\) is a linear time trend which helps remove changing H\(_2\)O ice within the H\(_2\)O spectral feature.
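The expression for \(S(x)\) is linear in the coefficients \(p_n\), so it can be evaluated as a matrix product between a matrix of basis terms and the coefficient vector. The sketch below follows the expression exactly as written above; the function names and the small input arrays are hypothetical.

```python
import numpy as np


def systematics_terms(x, y, cm, phi):
    """Stack the seven basis terms of S(x) as columns: x, y, x^2, y^2, x*y, cm, phi."""
    return np.column_stack([x, y, x**2, y**2, x * y, cm, phi])


def systematics_model(p, x, y, cm, phi):
    """Evaluate S = p1*x + p2*y + p3*x^2 + p4*y^2 + p5*x*y + p6*cm + p7*phi."""
    return systematics_terms(x, y, cm, phi) @ np.asarray(p, dtype=float)


# Tiny made-up example with three time samples
x = np.array([-1.0, 0.0, 1.0])
y = np.array([0.5, -0.5, 0.0])
cm = np.zeros(3)
phi = np.array([-0.01, 0.0, 0.01])
p = [1e-4, 2e-4, 0.0, 0.0, 0.0, 0.0, 1e-2]

terms = systematics_terms(x, y, cm, phi)
S = systematics_model(p, x, y, cm, phi)
print(terms.shape, S)
```

The detrending arrays built in the notebook additionally carry a column of ones, which lets a regression absorb the constant baseline level.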

shx = shx_detrend

shy = shy_detrend

common_mode = cm_detrend

XX = np.array([shx, shy, shx**2, shy**2, shx*shy, common_mode, np.ones(number_of_images)]) # Detrending array without linear time trend

XX = np.transpose(XX)

XXX = np.array([shx, shy, shx**2, shy**2, shx*shy, common_mode, phase, np.ones(number_of_images)]) # Detrending array with linear time trend

XXX = np.transpose(XXX)

Linear Regression can be used to quickly determine the parameters \(p_n\) using the out-of-transit data.

Here, we take a wavelength bin of the data (pixels 170 to 200) to make a time series. The out-of-transit points are selected and a linear regression of \(S(x)\) is done to determine the optical state parameters \(p_n\).
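The idea of fitting the systematics on out-of-transit data only, and then projecting the fit across the whole time series, can be sketched on synthetic data. This illustration swaps in `np.linalg.lstsq` as a plain least-squares solver rather than the scikit-learn regressor used in the notebook, and all data, coefficients, and names are made up.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 2000
x = rng.standard_normal(n)                    # synthetic standardized X position
yv = rng.standard_normal(n)                   # synthetic standardized Y position
true_p = np.array([2e-4, -1e-4])              # assumed systematics coefficients

# Normalized flux: baseline of 1, linear systematics, white noise, box-shaped transit
flux = 1.0 + true_p[0] * x + true_p[1] * yv + 5e-5 * rng.standard_normal(n)
in_transit = np.zeros(n, dtype=bool)
in_transit[900:1100] = True
flux[in_transit] -= 0.0065
oot = ~in_transit                             # out-of-transit mask

# Least-squares fit of [x, y, 1] to the out-of-transit points only
A = np.column_stack([x, yv, np.ones(n)])
coef, *_ = np.linalg.lstsq(A[oot], flux[oot], rcond=None)

trend = A @ coef                              # project the fit over the full series
corrected = flux / trend                      # detrended light curve

print("Recovered coefficients:", coef)
```

Excluding the in-transit points keeps the transit signal itself from biasing the systematics coefficients.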

pix1 = 170 # wavelength bin lower range

pix2 = 200 # wavelength bin upper range

y = np.sum(all_spec_1D[pix1:pix2, :], axis=0) # flux over a selected wavelength bin

msize = plt.rcParams['lines.markersize'] ** 2. # default marker size

plt.rcParams['figure.figsize'] = [10.0, 3.0] # Figure dimensions

fig, axs = plt.subplots()

f = plt.plot(wsdata_all, all_spec_1D[:, 0], linewidth=2, zorder=0) # Plot Region of wavelength bin

plt.fill_between(wsdata_all[pix1:pix2], 0, all_spec_1D[pix1:pix2, 0], alpha=0.5)

plt.xlabel(r'Wavelength ($\mu$m)')

plt.ylabel('Flux (e-)')

plt.title('1D Extracted Spectrum')

axs.xaxis.set_major_locator(ticker.MultipleLocator(0.5))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(0.1))

plt.annotate('H$_2$O', xy=(3.0, 42000))

plt.annotate('CO$_2$', xy=(4.2, 42000))

plt.show()

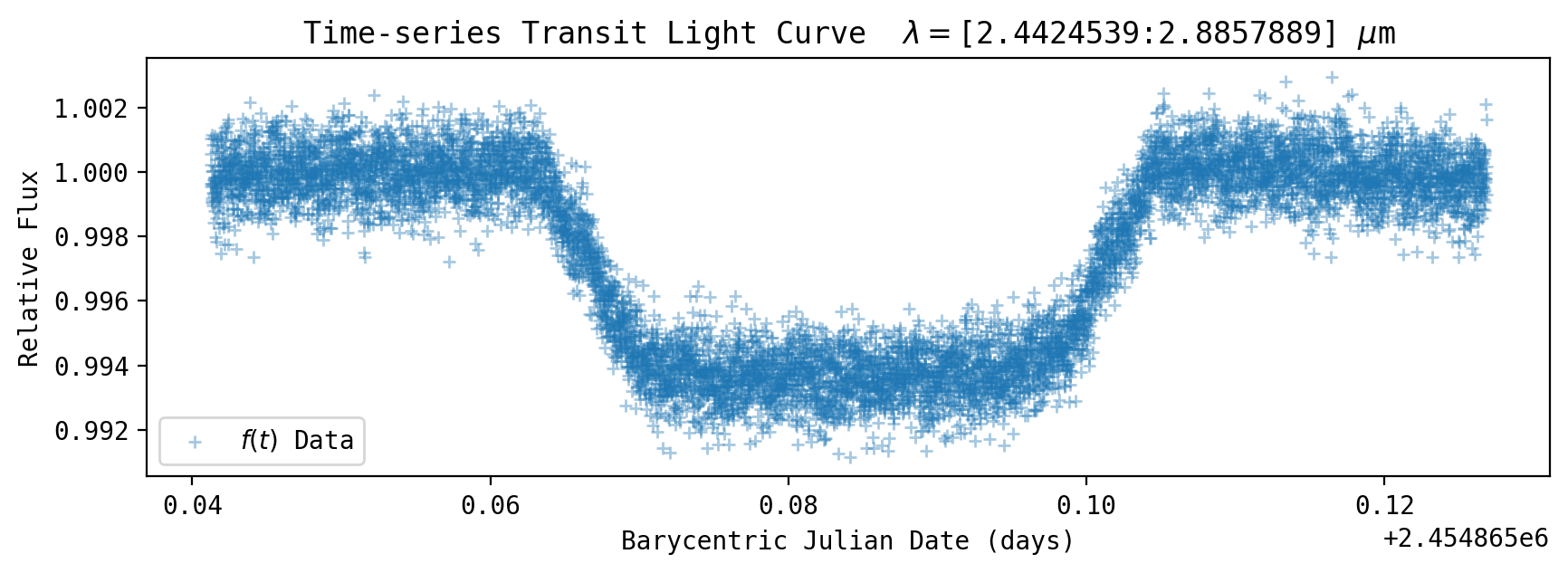

fig, axs = plt.subplots()

plt.scatter(bjd, y/np.mean(y[outtransit]), label='$f(t)$ Data', zorder=1, s=msize*0.75, linewidth=1, alpha=0.4, marker='+', color='blue') # color (not edgecolors) applies to the unfilled '+' marker

plt.xlabel('Barycentric Julian Date (days)')

plt.ylabel('Relative Flux')

plt.title(r'Time-series Transit Light Curve $\lambda=$['+str(wsdata_all[pix1])+':'+str(wsdata_all[pix2]) + r'] $\mu$m')

plt.legend()

plt.show()

regressor = LinearRegression()

regressor.fit(XX[outtransit], y[outtransit]/np.mean(y[outtransit]))

print('Linear Regression Coefficients:')

print(regressor.coef_)

Linear Regression Coefficients:

[ 2.35589645e-04 2.33200386e-04 -1.43527088e-04 -4.65560797e-05

1.94065907e-04 -4.51855007e-04 0.00000000e+00]

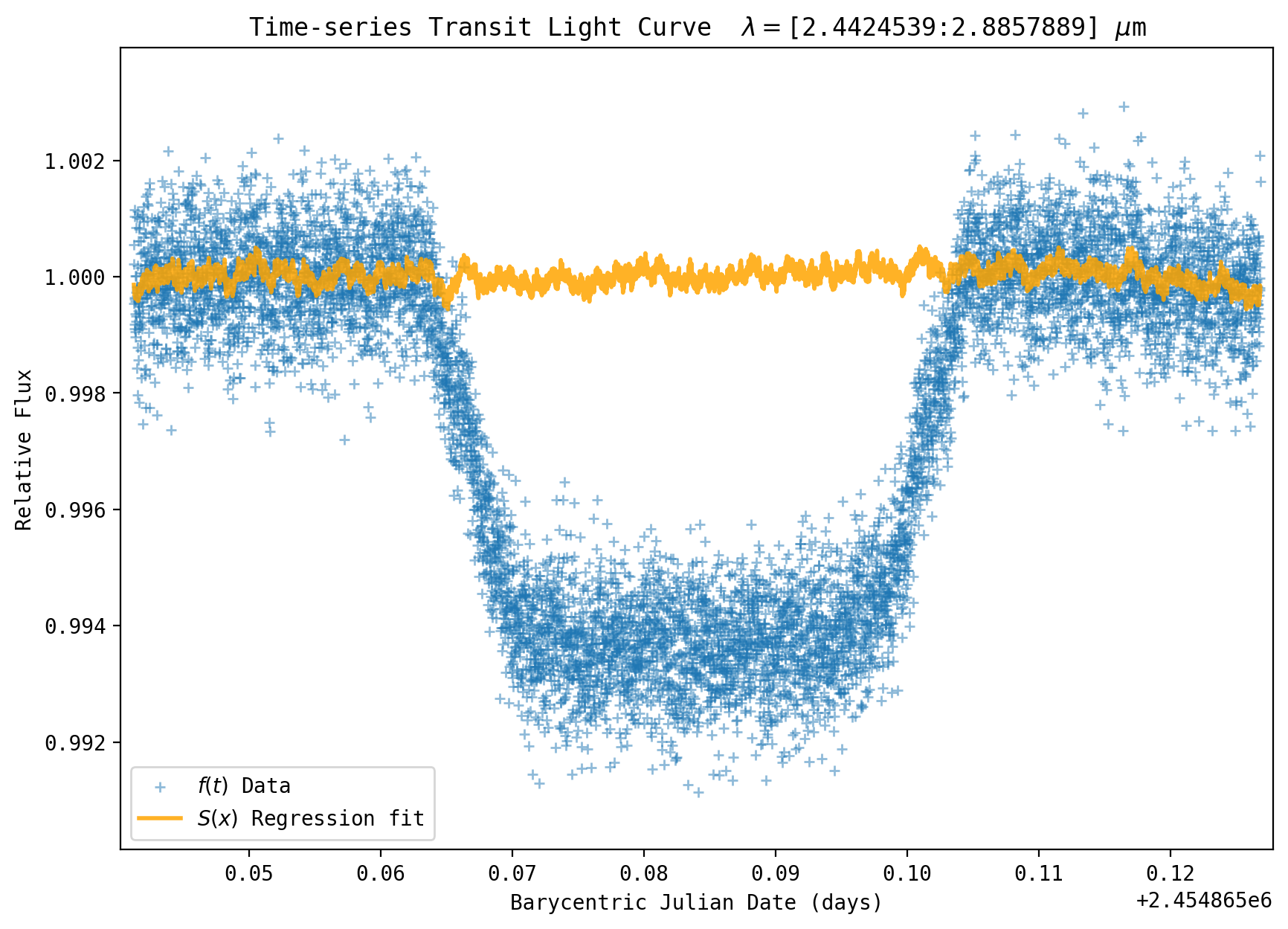

The coefficients are on the order of ~10\(^{-4}\), so the trends have amplitudes on the order of hundreds of ppm.
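Because the detrending vectors are normalized to unit standard deviation and the flux is normalized to 1, each coefficient of a linear term is directly the flux change for a 1-sigma excursion of that vector. The sketch below converts the coefficients printed above to ppm; the labels are added here for readability, and the final coefficient (the constant column, printed as 0) is omitted.

```python
import numpy as np

# Regression coefficients copied from the output above (constant column omitted)
coef = np.array([2.35589645e-04, 2.33200386e-04, -1.43527088e-04,
                 -4.65560797e-05, 1.94065907e-04, -4.51855007e-04])
names = ['x', 'y', 'x^2', 'y^2', 'x*y', 'cm']   # labels added for this sketch

amp_ppm = np.abs(coef) * 1e6                    # fractional flux to parts per million

for name, amp in zip(names, amp_ppm):
    print(f"{name:>4s}: {amp:6.0f} ppm")
```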

Visualize the fit

yfit = regressor.predict(XX) # Project the fit over the whole time series

plt.rcParams['figure.figsize'] = [10.0, 7.0] # Figure dimensions

msize = plt.rcParams['lines.markersize'] ** 2. # default marker size

fig, axs = plt.subplots() # New figure, so the tick locators below apply to this plot

plt.scatter(bjd, y/np.mean(y[outtransit]), label='$f(t)$ Data', zorder=1, s=msize*0.75, linewidth=1, alpha=0.5, marker='+', color='blue') # color (not edgecolors) applies to the unfilled '+' marker

f = plt.plot(bjd, yfit, label='$S(x)$ Regression fit ', linewidth=2, color='orange', zorder=2, alpha=0.85)

plt.xlabel('Barycentric Julian Date (days)')

plt.ylabel('Relative Flux')

plt.title(r'Time-series Transit Light Curve $\lambda=$['+str(wsdata_all[pix1])+':'+str(wsdata_all[pix2]) + r'] $\mu$m')

axs.xaxis.set_major_locator(ticker.MultipleLocator(0.01))

axs.xaxis.set_minor_locator(ticker.MultipleLocator(0.005))

axs.yaxis.set_major_locator(ticker.MultipleLocator(0.002))

axs.yaxis.set_minor_locator(ticker.MultipleLocator(0.001))

yplot = y / np.mean(y[outtransit])

plt.ylim(yplot.min() * 0.999, yplot.max()*1.001)

plt.xlim(bjd.min()-0.001, bjd.max()+0.001)

plt.legend(loc='lower left')

plt.show()

Transit and Limb-Darkening Model Functions#

Define the functions used by the fitting routines. These take the transit and systematic parameters and create the full transit light curve model

\(model = T(t,\theta)\times F_0 \times S(x)\),

compare it to the data

\(y = f(t)\),

and return the residuals

\((y-model)/(\sigma_y)\).
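The structure of such a residual function can be sketched with a toy model. The sketch is deliberately simplified and is not the notebook's implementation: a box-shaped dip stands in for the Mandel and Agol transit model, the systematics term is assumed to take the form of unity plus linear terms, and all names and values are hypothetical.

```python
import numpy as np


def toy_transit(t, t_mid, half_width, depth):
    """Box-shaped stand-in for T(t, theta); NOT the Mandel & Agol (2002) model."""
    return np.where(np.abs(t - t_mid) < half_width, 1.0 - depth, 1.0)


def residuals(theta, f0, p, t, X, y, yerr):
    """Return (y - model) / sigma_y with model = T(t, theta) * F0 * S(x)."""
    S = 1.0 + X @ p                         # assumed form: unity plus linear terms
    model = toy_transit(t, *theta) * f0 * S
    return (y - model) / yerr


# Noise-free synthetic data generated from known parameters
t = np.linspace(-0.1, 0.1, 201)
X = np.column_stack([np.sin(40 * t), np.cos(25 * t)])
X = (X - X.mean(axis=0)) / X.std(axis=0)    # standardized detrending vectors
theta_true = (0.0, 0.02, 0.0065)            # mid-time, half-width, depth
p_true = np.array([2e-4, -1e-4])
f0_true = 1.0e5
yerr = np.full(t.size, 30.0)
y = toy_transit(t, *theta_true) * f0_true * (1.0 + X @ p_true)

r_true = residuals(theta_true, f0_true, p_true, t, X, y, yerr)
r_wrong = residuals((0.0, 0.02, 0.0), f0_true, p_true, t, X, y, yerr)
print(np.abs(r_true).max(), np.abs(r_wrong).max())
```

A least-squares minimizer adjusts the parameters to reduce the sum of squares of the returned array, which is zero here for the true parameters and large in transit when the depth is set to zero.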

To calculate the transit model, here we use Mandel and Agol (2002) as coded in python by H. Wakeford (ExoTiC-ISM).

To calculate the stellar limb-darkening, we use the procedure from Sing et al. (2010) which uses stellar models and fits for non-linear limb darkening coefficients, with a module as coded in python by H. Wakeford (ExoTiC-ISM).

A new orbit is first calculated based on the system parameters \(a/R_{star}\), the cosine of the inclination \(cos(i)\), and the orbital phase \(\phi\). The inputs to the transit model are then the projected distance between the planet and star centers \(b\) at each phase, the limb-darkening parameters (\(c_1,c_2,c_3,c_4\)), and the planet-to-star radius ratio \(R_p/R_{star}\).
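The four-parameter non-linear limb-darkening law used here gives the stellar intensity, relative to disk center, as a function of \(\mu\), the cosine of the angle between the line of sight and the surface normal. The sketch below evaluates that law in plain numpy. The function name and the coefficient values are hypothetical and chosen only for illustration; they are not the fitted values for this star.

```python
import numpy as np


def nonlinear_ld_intensity(mu, c1, c2, c3, c4):
    """I(mu)/I(1) = 1 - sum_k c_k * (1 - mu**(k/2)) for k = 1..4."""
    mu = np.asarray(mu, dtype=float)
    return 1.0 - (c1 * (1.0 - mu ** 0.5) + c2 * (1.0 - mu)
                  + c3 * (1.0 - mu ** 1.5) + c4 * (1.0 - mu ** 2))


coeffs = (0.5, -0.2, 0.3, -0.1)            # made-up coefficients for illustration
mu = np.linspace(0.0, 1.0, 5)              # limb (mu=0) to disk center (mu=1)
intensity = nonlinear_ld_intensity(mu, *coeffs)

print(intensity)
```

At disk center the intensity is 1 by construction, and at the limb it equals one minus the sum of the coefficients.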

@custom_model

def nonlinear_limb_darkening(x, c0=0.0, c1=0.0, c2=0.0, c3=0.0):

"""

Define non-linear limb darkening model with four parameters c0, c1, c2, c3.

"""

model = (1. - (c0 * (1. - x ** (1. / 2)) + c1 * (1. - x ** (2. / 2)) + c2 * (1. - x ** (3. / 2)) + c3 *

(1. - x ** (4. / 2))))

return model

@custom_model

def quadratic_limb_darkening(x, aLD=0.0, bLD=0.0):

"""

Define quadratic limb darkening model with parameters aLD and bLD.

"""

model = 1. - aLD * (1. - x) - bLD * (1. - x) ** (4. / 2.)

return model

def limb_dark_fit(grating, wsdata, M_H, Teff, logg, dirsen, ld_model='1D'):

"""

Calculates stellar limb-darkening coefficients for a given wavelength bin.

Currently supports:

HST STIS G750L, G750M, G430L gratings

HST WFC3 UVIS/G280, IR/G102, IR/G141 grisms

What is used for 1D models - Kurucz (?)

Procedure from Sing et al. (2010, A&A, 510, A21).

Uses 3D limb darkening from Magic et al. (2015, A&A, 573, 90).

Uses photon FLUX Sum over (lambda*dlambda).

:param grating: string; grating to use ('G430L','G750L','G750M', 'G280', 'G102', 'G141')

:param wsdata: array; data wavelength solution

:param M_H: float; stellar metallicity

:param Teff: float; stellar effective temperature (K)

:param logg: float; stellar gravity

:param dirsen: string; path to main limb darkening directory

:param ld_model: string; '1D' or '3D', makes choice between limb darkening models; default is 1D

:return: uLD: float; linear limb darkening coefficient

aLD, bLD: float; quadratic limb darkening coefficients

cp1, cp2, cp3, cp4: float; three-parameter limb darkening coefficients

c1, c2, c3, c4: float; non-linear limb-darkening coefficients

"""

print('You are using the', str(ld_model), 'limb darkening models.')

if ld_model == '1D':

direc = os.path.join(dirsen, 'Kurucz')

print('Current Directories Entered:')

print(' ' + dirsen)

print(' ' + direc)

# Select metallicity

M_H_Grid = np.array([-0.1, -0.2, -0.3, -0.5, -1.0, -1.5, -2.0, -2.5, -3.0, -3.5, -4.0, -4.5, -5.0, 0.0, 0.1, 0.2, 0.3, 0.5, 1.0])

M_H_Grid_load = np.array([0, 1, 2, 3, 5, 7, 8, 9, 10, 11, 12, 13, 14, 17, 20, 21, 22, 23, 24])

optM = (abs(M_H - M_H_Grid)).argmin()

MH_ind = M_H_Grid_load[optM]

# Determine which model is to be used, by using the input metallicity M_H to figure out the file name we need

direc = 'Kurucz'

file_list = 'kuruczlist.sav'

sav1 = readsav(os.path.join(dirsen, file_list))

model = bytes.decode(sav1['li'][MH_ind]) # Convert object of type "byte" to "string"

# Select Teff and subsequently logg

Teff_Grid = np.array([3500, 3750, 4000, 4250, 4500, 4750, 5000, 5250, 5500, 5750, 6000, 6250, 6500])

optT = (abs(Teff - Teff_Grid)).argmin()

logg_Grid = np.array([4.0, 4.5, 5.0])

optG = (abs(logg - logg_Grid)).argmin()

if logg_Grid[optG] == 4.0:

Teff_Grid_load = np.array([8, 19, 30, 41, 52, 63, 74, 85, 96, 107, 118, 129, 138])

elif logg_Grid[optG] == 4.5:

Teff_Grid_load = np.array([9, 20, 31, 42, 53, 64, 75, 86, 97, 108, 119, 129, 139])

elif logg_Grid[optG] == 5.0:

Teff_Grid_load = np.array([10, 21, 32, 43, 54, 65, 76, 87, 98, 109, 120, 130, 140])

# Where in the model file is the section for the Teff we want? Index T_ind tells us that.

T_ind = Teff_Grid_load[optT]

header_rows = 3 # How many rows in each section we ignore for the data reading

data_rows = 1221 # How many rows of data we read

line_skip_data = (T_ind + 1) * header_rows + T_ind * data_rows # Calculate how many lines in the model file we need to skip in order to get to the part we need (for the Teff we want).

line_skip_header = T_ind * (data_rows + header_rows)

# Read the header, in case we want to have the actual Teff, logg and M_H info.

# headerinfo is a pandas object.

headerinfo = pd.read_csv(os.path.join(dirsen, direc, model), delim_whitespace=True, header=None,

skiprows=line_skip_header, nrows=1)

Teff_model = headerinfo[1].values[0]

logg_model = headerinfo[3].values[0]

MH_model = headerinfo[6].values[0]

MH_model = float(MH_model[1:-1])

print('\nClosest values to your inputs:')

print('Teff: ', Teff_model)

print('M_H: ', MH_model)

print('log(g): ', logg_model)

# Read the data; data is a pandas object.

data = pd.read_csv(os.path.join(dirsen, direc, model), delim_whitespace=True, header=None,

skiprows=line_skip_data, nrows=data_rows)

# Unpack the data

ws = data[0].values * 10 # Import wavelength data

f0 = data[1].values / (ws * ws)

f1 = data[2].values * f0 / 100000.

f2 = data[3].values * f0 / 100000.

f3 = data[4].values * f0 / 100000.

f4 = data[5].values * f0 / 100000.

f5 = data[6].values * f0 / 100000.

f6 = data[7].values * f0 / 100000.

f7 = data[8].values * f0 / 100000.

f8 = data[9].values * f0 / 100000.

f9 = data[10].values * f0 / 100000.

f10 = data[11].values * f0 / 100000.

f11 = data[12].values * f0 / 100000.

f12 = data[13].values * f0 / 100000.

f13 = data[14].values * f0 / 100000.

f14 = data[15].values * f0 / 100000.

f15 = data[16].values * f0 / 100000.

f16 = data[17].values * f0 / 100000.

# Make single big array of them

fcalc = np.array([f0, f1, f2, f3, f4, f5, f6, f7, f8, f9, f10, f11, f12, f13, f14, f15, f16])

phot1 = np.zeros(fcalc.shape[0])

# Define mu

mu = np.array([1.000, .900, .800, .700, .600, .500, .400, .300, .250, .200, .150, .125, .100, .075, .050, .025, .010])

# Passed on to main body of function are: ws, fcalc, phot1, mu

elif ld_model == '3D':

direc = os.path.join(dirsen, '3DGrid')

print('Current Directories Entered:')

print(' ' + dirsen)

print(' ' + direc)

# Select metallicity

M_H_Grid = np.array([-3.0, -2.0, -1.0, 0.0]) # Available metallicity values in 3D models

M_H_Grid_load = ['30', '20', '10', '00'] # The according identifiers to individual available M_H values

optM = (abs(M_H - M_H_Grid)).argmin() # Find the index of the available M_H value closest to the input M_H.

# Select Teff

Teff_Grid = np.array([4000, 4500, 5000, 5500, 5777, 6000, 6500, 7000]) # Available Teff values in 3D models

optT = (abs(Teff - Teff_Grid)).argmin() # Find the index of the available Teff value closest to the input Teff.

# Select logg, depending on Teff. If several logg possibilities are given for one Teff, pick the one that is

# closest to user input (logg).

if Teff_Grid[optT] == 4000:

logg_Grid = np.array([1.5, 2.0, 2.5])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 4500:

logg_Grid = np.array([2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 5000:

logg_Grid = np.array([2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 5500:

logg_Grid = np.array([3.0, 3.5, 4.0, 4.5, 5.0])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 5777:

logg_Grid = np.array([4.4])

optG = 0

elif Teff_Grid[optT] == 6000:

logg_Grid = np.array([3.5, 4.0, 4.5])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 6500:

logg_Grid = np.array([4.0, 4.5])

optG = (abs(logg - logg_Grid)).argmin()

elif Teff_Grid[optT] == 7000:

logg_Grid = np.array([4.5])

optG = 0

# Select Teff and Log g. Mtxt, Ttxt and Gtxt are then put together as string to load correct files.

Mtxt = M_H_Grid_load[optM]

Ttxt = "{:2.0f}".format(Teff_Grid[optT] / 100)

if Teff_Grid[optT] == 5777:

Ttxt = "{:4.0f}".format(Teff_Grid[optT])

Gtxt = "{:2.0f}".format(logg_Grid[optG] * 10)

#

file = 'mmu_t' + Ttxt + 'g' + Gtxt + 'm' + Mtxt + 'v05.flx'

print('Filename:', file)

# Read data from IDL .sav file

sav = readsav(os.path.join(direc, file)) # readsav reads an IDL .sav file

ws = sav['mmd'].lam[0] # read in wavelength

flux = sav['mmd'].flx # read in flux

Teff_model = Teff_Grid[optT]

logg_model = logg_Grid[optG]

MH_model = str(M_H_Grid[optM])

print('\nClosest values to your inputs:')

print('Teff : ', Teff_model)

print('M_H : ', MH_model)

print('log(g): ', logg_model)

f0 = flux[0]

f1 = flux[1]

f2 = flux[2]

f3 = flux[3]

f4 = flux[4]

f5 = flux[5]

f6 = flux[6]

f7 = flux[7]

f8 = flux[8]

f9 = flux[9]

f10 = flux[10]

# Make single big array of them

fcalc = np.array([f0, f1, f2, f3, f4, f5, f6, f7, f8, f9, f10])

phot1 = np.zeros(fcalc.shape[0])

# Mu from grid

# 0.00000 0.0100000 0.0500000 0.100000 0.200000 0.300000 0.500000 0.700000 0.800000 0.900000 1.00000

mu = sav['mmd'].mu

# Passed on to main body of function are: ws, fcalc, phot1, mu

# Load response function and interpolate onto kurucz model grid

# FOR STIS

if grating == 'G430L':

sav = readsav(os.path.join(dirsen, 'G430L.STIS.sensitivity.sav')) # wssens,sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 3

if grating == 'G750M':

sav = readsav(os.path.join(dirsen, 'G750M.STIS.sensitivity.sav')) # wssens, sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 0.554

if grating == 'G750L':

sav = readsav(os.path.join(dirsen, 'G750L.STIS.sensitivity.sav')) # wssens, sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 4.882

# FOR WFC3

if grating == 'G141': # http://www.stsci.edu/hst/acs/analysis/reference_files/synphot_tables.html

sav = readsav(os.path.join(dirsen, 'G141.WFC3.sensitivity.sav')) # wssens, sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 1

if grating == 'G102': # http://www.stsci.edu/hst/acs/analysis/reference_files/synphot_tables.html

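sav = readsav(os.path.join(dirsen, 'G141.WFC3.sensitivity.sav')) # wssens, sensitivity. NOTE: this loads the G141 file for G102, which looks like a copy-paste slip; confirm the intended G102 sensitivity file before relying on it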

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 1

if grating == 'G280': # http://www.stsci.edu/hst/acs/analysis/reference_files/synphot_tables.html

sav = readsav(os.path.join(dirsen, 'G280.WFC3.sensitivity.sav')) # wssens, sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 1

# FOR JWST

if grating == 'NIRSpecPrism': # http://www.stsci.edu/hst/acs/analysis/reference_files/synphot_tables.html

sav = readsav(os.path.join(dirsen, 'NIRSpec.prism.sensitivity.sav')) # wssens, sensitivity

wssens = sav['wssens']

sensitivity = sav['sensitivity']

wdel = 12

widek = np.arange(len(wsdata))

wsHST = wssens

wsHST = np.concatenate((np.array([wsHST[0] - wdel - wdel, wsHST[0] - wdel]),

wsHST,

np.array([wsHST[len(wsHST) - 1] + wdel,

wsHST[len(wsHST) - 1] + wdel + wdel])))

respoutHST = sensitivity / np.max(sensitivity)

respoutHST = np.concatenate((np.zeros(2), respoutHST, np.zeros(2)))

inter_resp = interp1d(wsHST, respoutHST, bounds_error=False, fill_value=0)

respout = inter_resp(ws) # interpolate sensitivity curve onto model wavelength grid

wsdata = np.concatenate((np.array([wsdata[0] - wdel - wdel, wsdata[0] - wdel]), wsdata,

np.array([wsdata[len(wsdata) - 1] + wdel, wsdata[len(wsdata) - 1] + wdel + wdel])))

respwavebin = wsdata / wsdata * 0.0

widek = widek + 2 # need to add two indices to compensate for padding with 2 zeros

respwavebin[widek] = 1.0

data_resp = interp1d(wsdata, respwavebin, bounds_error=False, fill_value=0)

reswavebinout = data_resp(ws) # interpolate data onto model wavelength grid

# Integrate over the spectra to make synthetic photometric points.

for i in range(fcalc.shape[0]): # Loop over spectra at diff angles

fcal = fcalc[i, :]

Tot = int_tabulated(ws, ws * respout * reswavebinout)

phot1[i] = (int_tabulated(ws, ws * respout * reswavebinout * fcal, sort=True)) / Tot

if ld_model == '1D':

yall = phot1 / phot1[0]

elif ld_model == '3D':

yall = phot1 / phot1[10]

# Co = np.zeros((6, 4)) # NOT-REUSED

# A = [0.0, 0.0, 0.0, 0.0] # c1, c2, c3, c4 # NOT-REUSED

x = mu[1:] # mu = cos(theta) angles (first grid point excluded)

y = yall[1:] # normalized intensity at each mu

# weights = x / x # NOT-REUSED

# Start fitting the different models

fitter = LevMarLSQFitter()

# Fit a four parameter non-linear limb darkening model and get fitted variables, c1, c2, c3, c4.

corot_4_param = nonlinear_limb_darkening()

corot_4_param = fitter(corot_4_param, x, y)

c1, c2, c3, c4 = corot_4_param.parameters

# Fit a three parameter non-linear limb darkening model and get fitted variables, cp2, cp3, cp4 (cp1 = 0).

corot_3_param = nonlinear_limb_darkening()

corot_3_param.c0.fixed = True # 3 param is just 4 param with c0 = 0.0

corot_3_param = fitter(corot_3_param, x, y)

cp1, cp2, cp3, cp4 = corot_3_param.parameters

# Fit a quadratic limb darkening model and get fitted parameters aLD and bLD.

quadratic = quadratic_limb_darkening()

quadratic = fitter(quadratic, x, y)

aLD, bLD = quadratic.parameters

# Fit a linear limb darkening model and get fitted variable uLD.

linear = nonlinear_limb_darkening()

linear.c0.fixed = True

linear.c2.fixed = True

linear.c3.fixed = True

linear = fitter(linear, x, y)

uLD = linear.c1.value

print('\nLimb darkening parameters:')

print("4param \t{:0.8f}\t{:0.8f}\t{:0.8f}\t{:0.8f}".format(c1, c2, c3, c4))

print("3param \t{:0.8f}\t{:0.8f}\t{:0.8f}".format(cp2, cp3, cp4))

print("Quad \t{:0.8f}\t{:0.8f}".format(aLD, bLD))

print("Linear \t{:0.8f}".format(uLD))

return uLD, c1, c2, c3, c4, cp1, cp2, cp3, cp4, aLD, bLD

def int_tabulated(X, F, sort=False):

Xsegments = len(X) - 1

# Sort vectors into ascending order (note the flag's sense: sort=True means the input is already sorted and the sort is skipped).

if not sort:

ii = np.argsort(X)

X = X[ii]

F = F[ii]

while (Xsegments % 4) != 0:

Xsegments = Xsegments + 1

Xmin = np.min(X)

Xmax = np.max(X)

# Uniform step size.

h = (Xmax + 0.0 - Xmin) / Xsegments

# Compute the interpolates at Xgrid.

# x values of interpolates >> Xgrid = h * FINDGEN(Xsegments + 1L) + Xmin

z = splev(h * np.arange(Xsegments + 1) + Xmin, splrep(X, F))

# Compute the integral using the 5-point Newton-Cotes formula.

ii = (np.arange((len(z) - 1) / 4, dtype=int) + 1) * 4

return np.sum(2.0 * h * (7.0 * (z[ii - 4] + z[ii]) + 32.0 * (z[ii - 3] + z[ii - 1]) + 12.0 * z[ii - 2]) / 45.0)
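The return line of int_tabulated applies the composite 5-point Newton-Cotes (Boole) rule to uniformly resampled values. As a minimal sketch, the rule can be checked in isolation on functions with known integrals. The helper `boole_integrate` is a hypothetical name written for this illustration and is not part of the notebook; the spline resampling step is left out.

```python
import numpy as np


def boole_integrate(z, h):
    """Composite 5-point Newton-Cotes (Boole) rule for samples z with uniform spacing h."""
    n_segments = len(z) - 1
    if n_segments % 4 != 0:
        raise ValueError("the number of segments must be a multiple of 4")
    # Index of the last sample of each 4-segment panel: 4, 8, 12, ...
    ii = (np.arange(n_segments // 4) + 1) * 4
    return np.sum(2.0 * h * (7.0 * (z[ii - 4] + z[ii])
                             + 32.0 * (z[ii - 3] + z[ii - 1])
                             + 12.0 * z[ii - 2]) / 45.0)


# The integral of sin(x) over [0, pi] is exactly 2
x_grid = np.linspace(0.0, np.pi, 41)  # 40 segments
integral_sin = boole_integrate(np.sin(x_grid), x_grid[1] - x_grid[0])

# The rule is exact for polynomials up to degree 5: the integral of x**4 over [0, 1] is 1/5
x_poly = np.linspace(0.0, 1.0, 5)  # a single 4-segment panel
integral_poly = boole_integrate(x_poly ** 4, 0.25)

print(integral_sin, integral_poly)
```

This is why int_tabulated pads Xsegments up to a multiple of 4 before resampling: each panel of the rule consumes exactly four segments.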

Now define the transit model function#

def occultnl(rl, c1, c2, c3, c4, b0):

"""

MANDEL & AGOL (2002) transit model.

:param rl: float, planet-to-star radius ratio (Rp/R*)

:param c1: float, limb darkening parameter 1

:param c2: float, limb darkening parameter 2

:param c3: float, limb darkening parameter 3

:param c4: float, limb darkening parameter 4

:param b0: impact parameter in stellar radii

:return: mulimb0: limb-darkened transit model, mulimbf: lightcurves for each component that you put in the model

"""

mulimb0 = occultuniform(b0, rl)

bt0 = b0

fac = np.max(np.abs(mulimb0 - 1))

if fac == 0:

fac = 1e-6 # DKS edit

omega = 4 * ((1 - c1 - c2 - c3 - c4) / 4 + c1 / 5 + c2 / 6 + c3 / 7 + c4 / 8)

nb = len(b0)

indx = np.where(mulimb0 != 1.0)[0]

if len(indx) == 0:

indx = -1

mulimb = mulimb0[indx]

mulimbf = np.zeros((5, nb))

mulimbf[0, :] = mulimbf[0, :] + 1.

mulimbf[1, :] = mulimbf[1, :] + 0.8

mulimbf[2, :] = mulimbf[2, :] + 2 / 3

mulimbf[3, :] = mulimbf[3, :] + 4 / 7

mulimbf[4, :] = mulimbf[4, :] + 0.5

nr = np.int64(2)

dmumax = 1.0

while (dmumax > fac * 1.e-3) and (nr <= 131072):

# print(nr)

mulimbp = mulimb

nr = nr * 2

dt = 0.5 * np.pi / nr

t = dt * np.arange(nr + 1)

th = t + 0.5 * dt

r = np.sin(t)

sig = np.sqrt(np.cos(th[nr - 1]))

mulimbhalf = sig ** 3 * mulimb0[indx] / (1 - r[nr - 1])

mulimb1 = sig ** 4 * mulimb0[indx] / (1 - r[nr - 1])

mulimb3half = sig ** 5 * mulimb0[indx] / (1 - r[nr - 1])

mulimb2 = sig ** 6 * mulimb0[indx] / (1 - r[nr - 1])

for i in range(1, nr):

mu = occultuniform(b0[indx] / r[i], rl / r[i])

sig1 = np.sqrt(np.cos(th[i - 1]))

sig2 = np.sqrt(np.cos(th[i]))

mulimbhalf = mulimbhalf + r[i] ** 2 * mu * (sig1 ** 3 / (r[i] - r[i - 1]) - sig2 ** 3 / (r[i + 1] - r[i]))

mulimb1 = mulimb1 + r[i] ** 2 * mu * (sig1 ** 4 / (r[i] - r[i - 1]) - sig2 ** 4 / (r[i + 1] - r[i]))

mulimb3half = mulimb3half + r[i] ** 2 * mu * (sig1 ** 5 / (r[i] - r[i - 1]) - sig2 ** 5 / (r[i + 1] - r[i]))

mulimb2 = mulimb2 + r[i] ** 2 * mu * (sig1 ** 6 / (r[i] - r[i - 1]) - sig2 ** 6 / (r[i + 1] - r[i]))

mulimb = ((1 - c1 - c2 - c3 - c4) * mulimb0[

indx] + c1 * mulimbhalf * dt + c2 * mulimb1 * dt + c3 * mulimb3half * dt + c4 * mulimb2 * dt) / omega

ix1 = np.where(mulimb + mulimbp != 0.)[0]

if len(ix1) == 0:

ix1 = -1

# print(ix1)

# Python cannot index a scalar, so np.atleast_1d is needed below for the case where mulimb is a single value

dmumax = np.max(np.abs(np.atleast_1d(mulimb)[ix1] - np.atleast_1d(mulimbp)[ix1]) / (

np.atleast_1d(mulimb)[ix1] + np.atleast_1d(mulimbp)[ix1]))

mulimbf[0, indx] = np.atleast_1d(mulimb0)[indx]

mulimbf[1, indx] = mulimbhalf * dt

mulimbf[2, indx] = mulimb1 * dt

mulimbf[3, indx] = mulimb3half * dt

mulimbf[4, indx] = mulimb2 * dt

np.atleast_1d(mulimb0)[indx] = mulimb

b0 = bt0

return mulimb0, mulimbf

def occultuniform(b0, w):

"""

Compute the lightcurve for occultation of a uniform source without microlensing (Mandel & Agol 2002).

:param b0: array; impact parameter in units of stellar radii

:param w: float; radius of the occulting body in units of the stellar radius

:return: muo1: array; fraction of flux at each b0 for a uniform source

"""

if np.abs(w - 0.5) < 1.0e-3:

w = 0.5

nb = len(np.atleast_1d(b0))

muo1 = np.zeros(nb)

for i in range(nb):

# substitute z=b0(i) to shorten expressions

z = np.atleast_1d(b0)[i]

# z = z.value # stripping it of astropy units

if z >= 1+w:

muo1[i] = 1.0

continue

if w >= 1 and z <= w-1:

muo1[i] = 0.0

continue

if z >= np.abs(1-w) and z <= 1+w:

kap1 = np.arccos(np.min(np.append((1 - w ** 2 + z ** 2) / 2 / z, 1.)))

kap0 = np.arccos(np.min(np.append((w ** 2 + z ** 2 - 1) / 2 / w / z, 1.)))

lambdae = w ** 2 * kap0 + kap1

lambdae = (lambdae - 0.5 * np.sqrt(np.max(np.append(4. * z ** 2 - (1 + z ** 2 - w ** 2) ** 2, 0.)))) / np.pi

muo1[i] = 1 - lambdae

if z <= 1-w:

muo1[i] = 1 - w ** 2

continue

return muo1
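A quick way to build intuition for occultuniform is to check its limiting cases for a single separation: no occultation outside first contact, a depth of w**2 when the planet is fully on the disk, and an intermediate value during ingress. The scalar function below is a sketch that restates the same geometry; `uniform_flux` is a hypothetical name used only for this illustration.

```python
import numpy as np


def uniform_flux(z, w):
    """Relative flux of a uniform disk occulted by a body of radius w at separation z."""
    if z >= 1 + w:                 # planet entirely off the stellar disk
        return 1.0
    if w >= 1 and z <= w - 1:      # star completely covered
        return 0.0
    if z <= 1 - w:                 # planet entirely on the stellar disk
        return 1.0 - w ** 2
    # Partial overlap (ingress or egress): area of the lens-shaped intersection
    kap1 = np.arccos(min((1 - w ** 2 + z ** 2) / (2 * z), 1.0))
    kap0 = np.arccos(min((w ** 2 + z ** 2 - 1) / (2 * w * z), 1.0))
    area = (w ** 2 * kap0 + kap1
            - 0.5 * np.sqrt(max(4.0 * z ** 2 - (1 + z ** 2 - w ** 2) ** 2, 0.0)))
    return 1.0 - area / np.pi


w_demo = 0.1                                  # illustrative radius ratio
flux_out = uniform_flux(1.2, w_demo)          # out of transit
flux_full = uniform_flux(0.0, w_demo)         # mid-transit, fully on the disk
flux_ingress = uniform_flux(1.0, w_demo)      # planet centre on the stellar limb

print(flux_out, flux_full, flux_ingress)
```

With the planet centred on the limb, a little less than half of its disk overlaps the star, so the flux deficit is slightly below half of w**2.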

Now define the function to generate the transit light curve and compare it to the data#

# Functions to call and calculate models

def residual(p, phase, x, y, err, c1, c2, c3, c4):

# calculate new orbit

b0 = p['a_Rs'].value * np.sqrt((np.sin(phase * 2 * np.pi)) ** 2 + (p['cosinc'].value * np.cos(phase * 2 * np.pi)) ** 2)

# Select indices between first and fourth contact

intransit = (b0-p['rprs'].value < 1.0E0).nonzero()

# Make light curve model, set all values initially to 1.0

light_curve = b0/b0

mulimb0, mulimbf = occultnl(p['rprs'].value, c1, c2, c3, c4, b0[intransit]) # Mandel & Agol

light_curve[intransit] = mulimb0

model = (light_curve) * p['f0'].value * (p['Fslope'].value * phase + p['xsh'].value * shx + p['x2sh'].value * shx**2. + p['ysh'].value * shy + p['y2sh'].value * shy**2. + p['xysh'].value * shy * shx + p['comm'].value * common_mode + 1.0) # transit model is baseline flux X transit model X systematics model

chi2now = np.sum((y-model)**2/err**2)

res = np.std((y-model)/p['f0'].value)

print("rprs: ", p['rprs'].value, "current chi^2=", chi2now, ' scatter ', res, end="\r")

return (y-model)/err

# return np.sum((y-model)**2/err**2)
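The first line of residual() converts orbital phase into the sky-projected star-planet separation for a circular orbit, and the in-transit selection follows from it. The sketch below isolates that step. All numerical values are illustrative placeholders rather than the notebook's system parameters, `projected_separation` is a hypothetical helper name, and the inclination is given in degrees here for readability.

```python
import numpy as np


def projected_separation(phase, a_rs, inc_deg):
    """Sky-projected star-planet separation (stellar radii) for a circular orbit."""
    cosinc = np.cos(np.radians(inc_deg))
    return a_rs * np.sqrt(np.sin(2 * np.pi * phase) ** 2
                          + (cosinc * np.cos(2 * np.pi * phase)) ** 2)


a_rs_demo = 14.0    # illustrative a/R_star
inc_demo = 86.8     # illustrative inclination in degrees
rprs_demo = 0.08    # illustrative radius ratio

phase_demo = np.linspace(-0.05, 0.05, 201)
b_demo = projected_separation(phase_demo, a_rs_demo, inc_demo)

# Points between first and fourth contact, using the same test as residual()
in_transit = b_demo - rprs_demo < 1.0

b_mid = projected_separation(0.0, a_rs_demo, inc_demo)     # impact parameter at mid-transit
b_quad = projected_separation(0.25, a_rs_demo, inc_demo)   # quadrature: full orbital separation

print(b_mid, b_quad, in_transit.sum())
```

At phase 0 the separation reduces to a_Rs * cos(i), the usual impact parameter, and at phase 0.25 it equals a_Rs.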

A function is also defined to return just the transit model \(T(t,\theta)\)

def model_fine(p): # Make Transit model with a fine grid for plotting purposes

b0 = p['a_Rs'].value * np.sqrt((np.sin(phase_fine * 2 * np.pi)) ** 2 + (p['cosinc'].value * np.cos(phase_fine * 2 * np.pi)) ** 2)

mulimb0, mulimbf = occultnl(p['rprs'].value, c1, c2, c3, c4, b0) # Mandel & Agol

model_fine = mulimb0

return model_fine

Now add a transit model to the example light curve. Here, we first compute the limb darkening coefficients, then use them in the transit light curve.

wave1 = wsdata_all[pix1]

wave2 = wsdata_all[pix2]

bin_wave_index = ((wsdata_all > wave1) & (wsdata_all <= wave2)).nonzero()

wsdata = wsdata_all[bin_wave_index]*1E4 # Select wavelength bin values (um=> angstroms)

_uLD, c1, c2, c3, c4, _cp1, _cp2, _cp3, _cp4, aLD, bLD = limb_dark_fit(grating, wsdata, M_H, Teff, logg, limb_dark_directory, ld_model)

You are using the 3D limb darkening models.

Current Directories Entered:

./notebookrun2/

./notebookrun2/3DGrid

Filename: mmu_t45g45m00v05.flx

Closest values to your inputs:

Teff : 4500

M_H : 0.0

log(g): 4.5

Limb darkening parameters:

4param 0.87682038 -0.73755073 0.57722987 -0.19560354

3param 2.19231523 -3.11195028 1.36536758

Quad 0.02781793 0.37167807

Linear 0.34303528

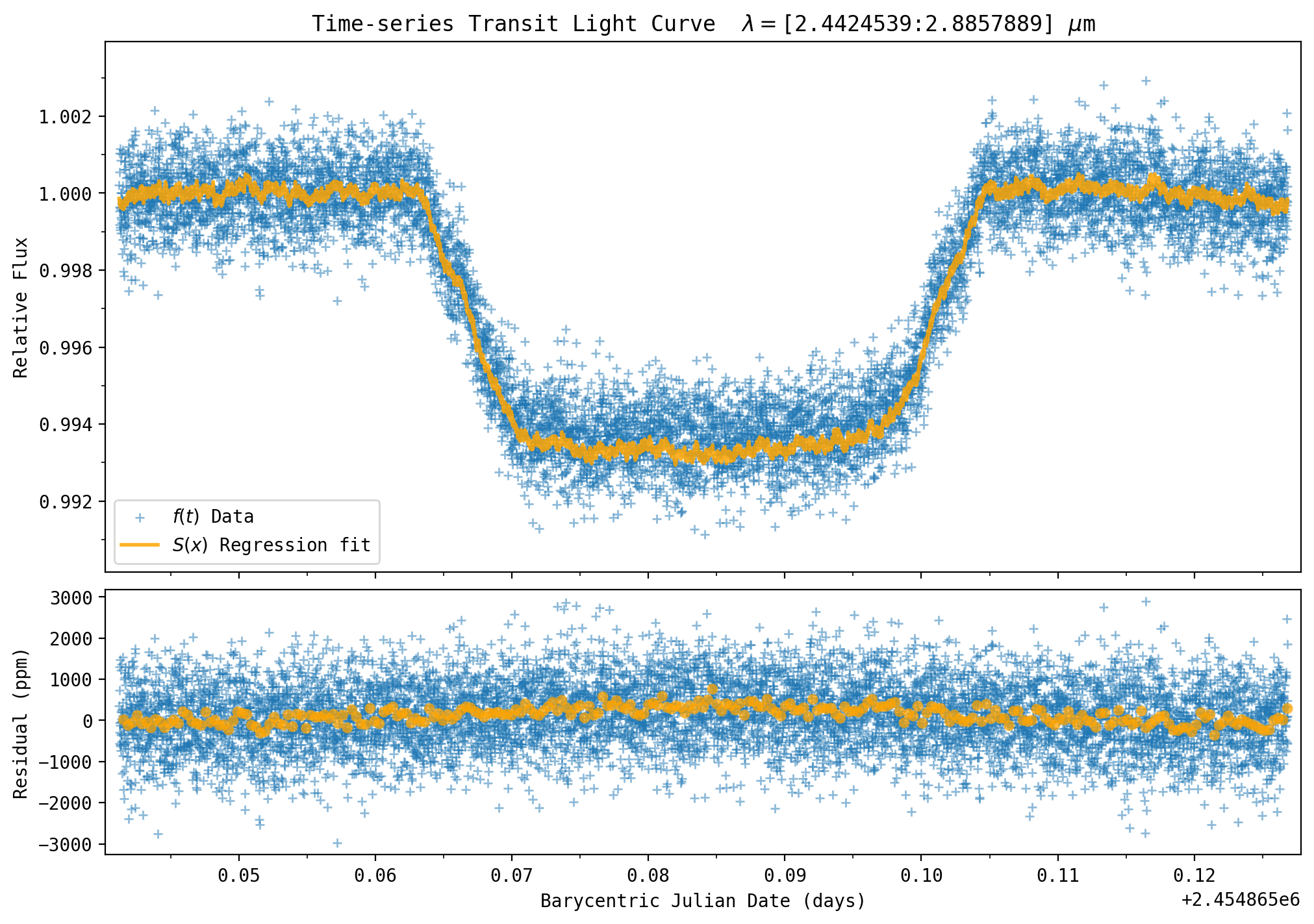
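To see what the fitted "4param" coefficients imply, the sketch below evaluates the four-parameter non-linear law with the values printed above. It assumes the standard form I(mu)/I(1) = 1 - sum_k c_k (1 - mu^(k/2)), which is consistent with the omega normalisation used in occultnl; `nonlinear_intensity` is a hypothetical helper name, not the notebook's astropy model.

```python
import numpy as np

# "4param" coefficients printed in the output above
c_ld = np.array([0.87682038, -0.73755073, 0.57722987, -0.19560354])


def nonlinear_intensity(mu, c):
    """I(mu)/I(1) = 1 - sum_k c_k * (1 - mu**(k/2)), k = 1..4 (assumed form)."""
    mu = np.atleast_1d(np.asarray(mu, dtype=float))
    k = np.arange(1, 5)
    return 1.0 - np.sum(c * (1.0 - mu[:, None] ** (k / 2.0)), axis=1)


i_centre = nonlinear_intensity(1.0, c_ld)[0]  # disk centre
i_limb = nonlinear_intensity(0.0, c_ld)[0]    # extreme limb

# Disk-averaged intensity, written as in occultnl
c1, c2, c3, c4 = c_ld
omega = 4 * ((1 - c1 - c2 - c3 - c4) / 4 + c1 / 5 + c2 / 6 + c3 / 7 + c4 / 8)

# The same quantity from a midpoint-rule integral of I(mu) * 2 * mu over mu in [0, 1]
n_mu = 20000
mu_mid = (np.arange(n_mu) + 0.5) / n_mu
omega_numeric = np.sum(nonlinear_intensity(mu_mid, c_ld) * 2.0 * mu_mid) / n_mu

print(i_centre, i_limb, omega, omega_numeric)
```

The agreement between the analytic and numerical disk averages is a useful check that the coefficients and the transit model share the same limb darkening convention.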

Now run the transit model.

The transit parameters such as inclination and \(a/R_{star}\) were set up at the beginning of the notebook.

# Run the Transit Model

rl = 0.0825 # Planet-to-star Radius Ratio

b0 = a_Rs * np.sqrt((np.sin(phase * 2 * np.pi)) ** 2 + (np.cos(inc) * np.cos(phase * 2 * np.pi)) ** 2)

intransit = (b0-rl < 1.0E0).nonzero() # Select indices between first and fourth contact

mulimb0, mulimbf = occultnl(rl, c1, c2, c3, c4, b0) # Mandel & Agol non-linear limb darkened transit model

model = mulimb0*yfit

# plot

plt.rcParams['figure.figsize'] = [10.0, 7.0] # Figure dimensions

msize = plt.rcParams['lines.markersize'] ** 2. # default marker size

fig = plt.figure(constrained_layout=True)

gs = fig.add_gridspec(3, 1, hspace=0.00, wspace=0.00)

ax1 = fig.add_subplot(gs[0:2, :])

ax1.scatter(bjd, y/np.mean(y[outtransit]), label='$f(t)$ Data', zorder=1, s=msize*0.75, linewidth=1, alpha=0.5, marker='+', color='blue')

ax1.plot(bjd, model, label='$S(x)$ Regression fit ', linewidth=2, color='orange', zorder=2, alpha=0.85)

ax1.xaxis.set_ticklabels([])

plt.ylabel('Relative Flux')

plt.title(r'Time-series Transit Light Curve $\lambda=$['+str(wsdata_all[pix1])+':'+str(wsdata_all[pix2]) + r'] $\mu$m')

ax1.xaxis.set_major_locator(ticker.MultipleLocator(0.01))

ax1.xaxis.set_minor_locator(ticker.MultipleLocator(0.005))

ax1.yaxis.set_major_locator(ticker.MultipleLocator(0.002))

ax1.yaxis.set_minor_locator(ticker.MultipleLocator(0.001))

yplot = y/np.mean(y[outtransit])

plt.ylim(yplot.min()*0.999, yplot.max()*1.001)

plt.xlim(bjd.min()-0.001, bjd.max()+0.001)

plt.legend()

fig.add_subplot(ax1)

# Residual

ax2 = fig.add_subplot(gs[2, :])

ax2.scatter(bjd, 1E6*(y/np.mean(y[outtransit])-model), label='$f(t)$ Data', zorder=1, s=msize*0.75, linewidth=1, alpha=0.5, marker='+', color='blue')

wsb, wsb_bin_edges, binnumber = stats.binned_statistic(bjd, 1E6*(y/np.mean(y[outtransit])-model), bins=256)

plt.scatter(wsb_bin_edges[1:], wsb, linewidth=2, alpha=0.75, facecolors='orange', edgecolors='none', marker='o', zorder=25)

plt.xlabel('Barycentric Julian Date (days)')

plt.ylabel('Residual (ppm)')

ax2.xaxis.set_major_locator(ticker.MultipleLocator(0.01))

ax2.xaxis.set_minor_locator(ticker.MultipleLocator(0.005))

yplot = y / np.mean(y[outtransit])

plt.xlim(bjd.min()-0.001, bjd.max()+0.001)

fig.add_subplot(ax2)

plt.show()

# print chi^2 value

err = np.sqrt(y) / np.mean(y[outtransit])

print('Chi^2 = '+str(np.sum((y/np.mean(y[outtransit])-model)**2/err**2)))

print('Residual Standard Deviation : '+str(1E6*np.std((y/np.mean(y[outtransit])-model)))+' ppm')

print('256 Bin Standard Deviation :'+str(np.std(wsb))+' ppm')

Chi^2 = 9265.443675786024

Residual Standard Deviation : 789.8093551317617 ppm

256 Bin Standard Deviation :196.83308658203643 ppm

Note that the model transit is slightly too deep compared to the data. The planet radius needs to be smaller, with \(rl\) closer to 0.08. As an exercise, you can re-run the above cell with the planet radius changed to \(rl\)=0.0805 and compare the \(\chi^2\) value to that of the previous default (\(\chi^2\)=9265.4 at \(rl\) = 0.0825).
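The comparison suggested in this exercise can be sketched on synthetic data. The example below is a deliberately simplified stand-in: it uses a box-shaped transit without limb darkening or ingress and egress, a deterministic alternating "noise" pattern, and illustrative values for the number of points and the uncertainty. None of these come from the notebook's data.

```python
import numpy as np

n_points = 200
sigma = 0.0008                      # illustrative per-point uncertainty (relative flux)
in_transit = np.zeros(n_points, dtype=bool)
in_transit[80:120] = True           # 40 in-transit points


def box_model(rl):
    """Box-shaped transit of depth rl**2 (no limb darkening, no ingress/egress)."""
    model = np.ones(n_points)
    model[in_transit] -= rl ** 2
    return model


def chi_square(y, model, err):
    """Sum of squared, error-weighted residuals."""
    return np.sum((y - model) ** 2 / err ** 2)


# Synthetic data: true radius ratio 0.0805 plus alternating +/- 1 sigma "noise"
noise = sigma * (-1.0) ** np.arange(n_points)
y_demo = box_model(0.0805) + noise

chi2_true = chi_square(y_demo, box_model(0.0805), sigma)
chi2_deep = chi_square(y_demo, box_model(0.0825), sigma)

print(chi2_true, chi2_deep)
```

A model that is too deep adds a constant offset to every in-transit residual, so its \(\chi^2\) is larger than that of the model with the correct radius ratio.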

FIT Transit Light Curves#

Now we can fit each light curve, optimizing the fit parameters with a least-squares fit. Here a Levenberg-Marquardt fit is used to find the \(\chi^2\) minimum and estimate uncertainties using the lmfit package (https://lmfit.github.io/lmfit-py/fitting.html).

In practice, we would first fit the white light curve, obtained by summing over all wavelengths of the 1D spectra. That fit determines the wavelength-independent system parameters, such as inclination and transit time, which are then held fixed when fitting the spectroscopic channels. However, the CV3 data required the overall variations of the lamp to be removed, which prevents a white light curve analysis with this dataset. Here, we proceed directly to fitting the spectroscopic light curve bins.

The steps are as follows:

1) A wavelength bin is selected.

2) Limb-darkening coefficients are calculated from a stellar model for each bin.

3) An initial linear regression is performed on the out-of-transit data to initialize the systematics fit parameters. This greatly speeds up the fit, as those parameters start near their global minimum.

4) The fit is started, and some statistics are output during the minimization.

5) Once the best fit is found, a number of statistics are displayed.

6) Finally, several plots are generated and stored as PDFs, and the next bin is started.

These steps are performed for each spectral bin.

In this example, the planet radius is set to vary in the fit along with the baseline flux and instrument systematic parameters.
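Step 3 above, the initial linear regression on out-of-transit data, can be sketched as follows. This illustration swaps in numpy.linalg.lstsq for the scikit-learn regressor used in the notebook, and every array and coefficient in it is synthetic and hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points = 300
shx_demo = rng.normal(0.0, 0.01, n_points)   # synthetic detector x-shifts
shy_demo = rng.normal(0.0, 0.01, n_points)   # synthetic detector y-shifts

out_of_transit = np.ones(n_points, dtype=bool)
out_of_transit[120:180] = False              # mask the (hypothetical) transit

# Noise-free synthetic flux with known systematics coefficients
true_coeffs = np.array([0.002, -0.001])
flux_demo = 1.0 + true_coeffs[0] * shx_demo + true_coeffs[1] * shy_demo

# Design matrix: constant term plus the two detrending vectors
design = np.column_stack([np.ones(n_points), shx_demo, shy_demo])
fit_coeffs, *_ = np.linalg.lstsq(design[out_of_transit],
                                 flux_demo[out_of_transit], rcond=None)

baseline, xsh_start, ysh_start = fit_coeffs
print(baseline, xsh_start, ysh_start)
```

Coefficients obtained this way serve only as starting values. The non-linear fit then refines them together with the planet radius, using the full light curve.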

Setup Wavelengths to fit over#

The spectra must be binned in wavelength to collect enough counts to reach the ~100 ppm precision needed. The spectrum has significant counts from about pixel 100 to 400; we start at pixel \(k0\) and bin the spectrum by \(wk\) pixels.

Several arrays are also defined.

k0 = 113 # 98 #100

kend = 392 # 422

wk = 15

number_of_bins = int((kend-k0)/wk)

wsd = np.zeros((number_of_bins))

werr = np.zeros((number_of_bins))

rprs = np.zeros((number_of_bins))

rerr = np.zeros((number_of_bins))

sig_r = np.zeros((number_of_bins))

sig_w = np.zeros((number_of_bins))

beta = np.zeros((number_of_bins))

depth = np.zeros((number_of_bins))

depth_err = np.zeros((number_of_bins))
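As a small worked example of the binning arithmetic, the sketch below lists where each bin starts and stops for the values of k0, kend and wk chosen above. The names `bin_starts`, `bin_stops` and `unused_pixels` are hypothetical and exist only for this illustration.

```python
k0, kend, wk = 113, 392, 15             # values from the cell above

number_of_bins = int((kend - k0) / wk)  # 18 complete bins
bin_starts = [k0 + i * wk for i in range(number_of_bins)]
bin_stops = [start + wk for start in bin_starts]

unused_pixels = kend - bin_stops[-1]    # pixels left over after the last full bin
print(number_of_bins, bin_starts[0], bin_stops[-1], unused_pixels)
```

Because int() truncates, the few pixels between the end of the last complete bin and kend are not fitted.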

Loop Over Wavelength Bins Fitting Each Lightcurve#

Note this step takes considerable time to complete (~20 min, few minutes/bin)#

Each wavelength bin is fit with the transit+systematics model. Various outputs, including plots, are saved. You can skip to the next cells to load pre-computed results.
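Among the statistics computed in the loop below is a red-noise estimate obtained by binning the residuals in time. The sketch below isolates that arithmetic for scalar inputs, assuming binned scatter follows sigma_binned^2 = sigma_white^2 / N + sigma_red^2. The function name and the input numbers are hypothetical, and a negative variance is clamped to zero, which has the same effect as the NaN check in the loop.

```python
import math


def red_noise_beta(sig0, sig_binned, n_per_bin):
    """Split residual scatter into white and red parts and return (white, red, beta).

    sig0       : standard deviation of the unbinned residuals
    sig_binned : standard deviation of residuals averaged in bins of n_per_bin points
    """
    # First estimate of the red noise; a negative variance means none is detected
    sig_red = math.sqrt(max(sig_binned ** 2 - sig0 ** 2 / n_per_bin, 0.0))
    sig_white = math.sqrt(sig0 ** 2 - sig_red ** 2)
    # Refine the red noise using the white-noise estimate
    sig_red = math.sqrt(max(sig_binned ** 2 - sig_white ** 2 / n_per_bin, 0.0))
    # Inflation factor relative to pure white noise
    beta = math.sqrt(sig0 ** 2 + n_per_bin * sig_red ** 2) / sig0
    return sig_white, sig_red, beta


# Illustrative numbers in ppm (not taken from the fit output)
white_a, red_a, beta_a = red_noise_beta(800.0, 400.0, 8)    # correlated noise present
white_b, red_b, beta_b = red_noise_beta(800.0, 200.0, 16)   # binned scatter matches white noise

print(red_a, beta_a, red_b, beta_b)
```

When the binned scatter falls as 1/sqrt(N), as in the second case, no red noise is detected and beta equals 1.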

k = k0 # wavelength to start

# --------------------------------------------------------------------------

# Loop over wavelength bins and fit for each one

for bin in range(0, number_of_bins):

# Select wavelength bin

wave1 = wsdata_all[k]

wave2 = wsdata_all[k+wk]

# Indices to select for wavelength bin

bin_wave_index = ((wsdata_all > wave1) & (wsdata_all <= wave2)).nonzero()

# make light curve bin

wave_bin_counts = np.sum(all_spec_1D[k+1:k+wk, :], axis=0) # Sum wavelength pixels k+1 .. k+wk-1 (note: bin_wave_index above also includes pixel k+wk)

wave_bin_counts_err = np.sqrt(wave_bin_counts) # adopt photon noise for errors

# Calculate Limb Darkening

wsdata = wsdata_all[bin_wave_index]*1E4 # Select wavelength bin values (um=> angstroms)

_uLD, c1, c2, c3, c4, _cp1, _cp2, _cp3, _cp4, aLD, bLD = limb_dark_fit(grating, wsdata, M_H, Teff, logg, limb_dark_directory, ld_model)

print('\nc1 = {}'.format(c1))

print('c2 = {}'.format(c2))

print('c3 = {}'.format(c3))

print('c4 = {}'.format(c4))

print('')

# u = [c1,c2,c3,c4] # limb darkening coefficients

# u = [aLD, bLD]

# Make initial model

# Setup LMFIT

x = bjd # X data

y = wave_bin_counts # Y data

err = wave_bin_counts_err # Y Error

# Perform Quick Linear regression on out-of-transit data to obtain accurate starting Detector fit values

if wave1 > 2.7 and wave1 < 3.45:

regressor.fit(XXX[outtransit], y[outtransit]/np.mean(y[outtransit]))

else:

regressor.fit(XX[outtransit], y[outtransit]/np.mean(y[outtransit]))

# create a set of Parameters for LMFIT https://lmfit.github.io/lmfit-py/parameters.html

# class Parameter(name, value=None, vary=True, min=-inf, max=inf, expr=None, brute_step=None, user_data=None)

# Set vary=0 to fix

# Set vary=1 to fit

p = lmfit.Parameters() # object to store L-M fit Parameters # Parameter Name

p.add('cosinc', value=np.cos(inc), vary=0) # inclination, vary cos(inclin)

p.add('rho_star', value=rho_star, vary=0) # stellar density

p.add('a_Rs', value=a_Rs, vary=0) # a/Rstar

p.add('rprs', value=rp, vary=1, min=0, max=1) # planet-to-star radius ratio

p.add('t0', value=t0, vary=0) # Transit T0

p.add('f0', value=np.mean(y[outtransit]), vary=1, min=0) # Baseline Flux

p.add('ecc', value=ecc, vary=0, min=0, max=1) # eccentricity

p.add('omega', value=omega, vary=0) # argument of periastron

# Turn on a linear slope in the water feature to account for presumably changing H2O ice buildup on the window during the cryogenic test

if wave1 > 2.7 and wave1 < 3.45:

p.add('Fslope', value=regressor.coef_[6], vary=1) # Orbital phase

else:

p.add('Fslope', value=0, vary=0) # Orbital phase

p.add('xsh', value=regressor.coef_[0], vary=1) # Detector X-shift detrending

p.add('ysh', value=regressor.coef_[1], vary=1) # Detector Y-shift detrending

p.add('x2sh', value=regressor.coef_[2], vary=1) # Detector X^2-shift detrending

p.add('y2sh', value=regressor.coef_[3], vary=1) # Detector Y^2-shift detrending

p.add('xysh', value=regressor.coef_[4], vary=1) # Detector X*Y detrending

p.add('comm', value=regressor.coef_[5], vary=1) # Common-Mode detrending

# Perform Minimization https://lmfit.github.io/lmfit-py/fitting.html

# create Minimizer

# mini = lmfit.Minimizer(residual, p, nan_policy='omit',fcn_args=(phase,x,y,err)

print('')

print('Fitting Bin', bin, ' Wavelength =', np.mean(wsdata)/1E4, ' Range= [', wave1, ':', wave2, ']')

# solve with Levenberg-Marquardt (the default 'leastsq' method of lmfit.minimize)

result = lmfit.minimize(residual, params=p, args=(phase, x, y, err, c1, c2, c3, c4))

# result = mini.minimize(method='emcee')

print('')

print('Re-Fitting Bin', bin, ' Wavelength =', np.mean(wsdata)/1E4, ' Range= [', wave1, ':', wave2, ']')

# --------------------------------------------------------------------------

print("")

print("redchi", result.redchi)

print("chi2", result.chisqr)

print("nfree", result.nfree)

print("bic", result.bic)

print("aic", result.aic)

print("L-M FIT Variable")

print(lmfit.fit_report(result.params))

text_file = open(save_directory+'JWST_NIRSpec_Prism_fit_light_curve_bin'+str(bin)+'_statistics.txt', "w")

n = text_file.write("\nredchi "+str(result.redchi))

n = text_file.write("\nchi2 "+str(result.chisqr))

n = text_file.write("\nnfree "+str(result.nfree))

n = text_file.write("\nbic "+str(result.bic))

n = text_file.write("\naic "+str(result.aic))

n = text_file.write(lmfit.fit_report(result.params))

# file-output.py

# Update with best-fit parameters

p['rho_star'].value = result.params['rho_star'].value

p['cosinc'].value = result.params['cosinc'].value

p['rprs'].value = result.params['rprs'].value

p['t0'].value = result.params['t0'].value

p['f0'].value = result.params['f0'].value

p['Fslope'].value = result.params['Fslope'].value

p['xsh'].value = result.params['xsh'].value

p['ysh'].value = result.params['ysh'].value

p['x2sh'].value = result.params['x2sh'].value

p['y2sh'].value = result.params['y2sh'].value

p['xysh'].value = result.params['xysh'].value

p['comm'].value = result.params['comm'].value

# Update Fit Spectra arrays

wsd[bin] = np.mean(wsdata)/1E4

werr[bin] = (wsdata.max()-wsdata.min())/2E4

rprs[bin] = result.params['rprs'].value

rerr[bin] = result.params['rprs'].stderr

# Calculate Bestfit Model

final_model = y-result.residual*err

final_model_fine = model_fine(p)

# More Stats

resid = (y-final_model)/p['f0'].value

residppm = 1E6*(y-final_model)/p['f0'].value

residerr = err/p['f0'].value

sigma = np.std((y-final_model)/p['f0'].value)*1E6

print("Residual standard deviation (ppm) : ", 1E6*np.std((y-final_model)/p['f0'].value))

print("Photon noise (ppm) : ", (1/np.sqrt(p['f0'].value))*1E6)

print("Photon noise performance (%) : ", (1/np.sqrt(p['f0'].value))*1E6 / (sigma) * 100)

n = text_file.write("\nResidual standard deviation (ppm) : "+str(1E6*np.std((y-final_model)/p['f0'].value)))

n = text_file.write("\nPhoton noise (ppm) : "+str((1/np.sqrt(p['f0'].value))*1E6))

n = text_file.write("\nPhoton noise performance (%) : "+str((1/np.sqrt(p['f0'].value))*1E6 / (sigma) * 100))

# Measure Rednoise with Binning Technique

sig0 = np.std(resid)

bins = int(number_of_images / binmeasure) # number of bins must be an integer

wsb, wsb_bin_edges, binnumber = stats.binned_statistic(bjd, resid, bins=bins)

sig_binned = np.std(wsb)

sigrednoise = np.sqrt(sig_binned**2-sig0**2/binmeasure)

if np.isnan(sigrednoise):

sigrednoise = 0 # if no rednoise detected, set to zero

sigwhite = np.sqrt(sig0**2-sigrednoise**2)

sigrednoise = np.sqrt(sig_binned**2-sigwhite**2/binmeasure)

if np.isnan(sigrednoise):

sigrednoise = 0 # if no rednoise detected, set to zero

beta[bin] = np.sqrt(sig0**2+binmeasure*sigrednoise**2)/sig0

print("White noise (ppm) : ", 1E6*sigwhite)

print("Red noise (ppm) : ", 1E6*sigrednoise)

print("Transit depth measured error (ppm) : ", 2E6*result.params['rprs'].value*result.params['rprs'].stderr)

n = text_file.write("\nWhite noise (ppm) : "+str(1E6*sigwhite))

n = text_file.write("\nRed noise (ppm) : "+str(1E6*sigrednoise))

n = text_file.write("\nTransit depth measured error (ppm) : "+str(2E6*result.params['rprs'].value*result.params['rprs'].stderr))

text_file.close()

depth[bin] = 1E6*result.params['rprs'].value**2

depth_err[bin] = 2E6*result.params['rprs'].value*result.params['rprs'].stderr

sig_r[bin] = sigrednoise*1E6

sig_w[bin] = sigwhite*1E6

# --------------------------------------------------------------------------

# ---------------------------------------------------------

# Write Fit Spectra to ascii file

ascii_data = Table([wsd, werr, rprs, rerr, depth, depth_err, sig_w, sig_r, beta], names=['Wavelength Center (um)', 'Wavelength half-width (um)', 'Rp/Rs', 'Rp/Rs 1-sigma error', 'Transit Depth (ppm)', 'Transit Depth error', 'Sigma_white (ppm)', 'Sigma_red (ppm)', 'Beta Rednoise Inflation factor'])

ascii.write(ascii_data, save_directory+'JWST_NIRSpec_Prism_fit_transmission_spectra.csv', format='csv', overwrite=True)

# ---------------------------------------------------------

msize = plt.rcParams['lines.markersize'] ** 2. # default marker size

# Plot data models

# plot

plt.rcParams['figure.figsize'] = [10.0, 7.0] # Figure dimensions

msize = plt.rcParams['lines.markersize'] ** 2. # default marker size

fig = plt.figure(constrained_layout=True)

gs = fig.add_gridspec(3, 1, hspace=0.00, wspace=0.00)

ax1 = fig.add_subplot(gs[0:2, :])

ax1.scatter(x, y/p['f0'].value, s=msize*0.75, linewidth=1, zorder=0, alpha=0.5, marker='+', color='blue')

ax1.plot(x, final_model/p['f0'].value, linewidth=1, color='orange', alpha=0.8, zorder=15) # overplot Transit model at data

ax1.xaxis.set_ticklabels([])

plt.ylabel('Relative Flux')

plt.title(r'Time-series Transit Light Curve $\lambda=$['+str(wave1)+':'+str(wave2) + r'] $\mu$m')

ax1.xaxis.set_major_locator(ticker.MultipleLocator(0.01))

ax1.xaxis.set_minor_locator(ticker.MultipleLocator(0.005))

ax1.yaxis.set_major_locator(ticker.MultipleLocator(0.002))

ax1.yaxis.set_minor_locator(ticker.MultipleLocator(0.001))

yplot = y/np.mean(y[outtransit])

plt.ylim(yplot.min()*0.999, yplot.max()*1.001)

plt.xlim(bjd.min()-0.001, bjd.max()+0.001)

fig.add_subplot(ax1)

# Residual

ax2 = fig.add_subplot(gs[2, :])

ax2.scatter(x, residppm, s=msize*0.75, linewidth=1, alpha=0.5, marker='+', color='blue', zorder=0) # plot residuals in ppm

wsb, wsb_bin_edges, binnumber = stats.binned_statistic(bjd, residppm, bins=256)

plt.scatter(wsb_bin_edges[1:], wsb, linewidth=2, alpha=0.75, facecolors='orange', edgecolors='none', marker='o', zorder=25)

plt.xlabel('Barycentric Julian Date (days)')

plt.ylabel('Residual (ppm)')

plt.plot([bjd.min(), bjd.max()], [0, 0], color='black', zorder=10)

plt.plot([bjd.min(), bjd.max()], [sigma, sigma], linestyle='--', color='black', zorder=15)

plt.plot([bjd.min(), bjd.max()], [-sigma, -sigma], linestyle='--', color='black', zorder=20)

ax2.xaxis.set_major_locator(ticker.MultipleLocator(0.01))

ax2.xaxis.set_minor_locator(ticker.MultipleLocator(0.005))

yplot = y/np.mean(y[outtransit])

plt.xlim(bjd.min()-0.001, bjd.max()+0.001)

fig.add_subplot(ax2)

# save

pp = PdfPages(save_directory+'JWST_NIRSpec_Prism_fit_light_curve_bin'+str(bin)+'_lightcurve.pdf')

plt.savefig(pp, format='pdf')

pp.close()

plt.clf()

# plot systematic corrected light curve

b0 = p['a_Rs'].value * np.sqrt((np.sin(phase * 2 * np.pi)) ** 2 + (p['cosinc'].value * np.cos(phase * 2 * np.pi)) ** 2)

intransit = (b0-p['rprs'].value < 1.0E0).nonzero()

light_curve = b0/b0

mulimb0, mulimbf = occultnl(p['rprs'].value, c1, c2, c3, c4, b0[intransit]) # Mandel & Agol

light_curve[intransit] = mulimb0

fig, axs = plt.subplots()

plt.scatter(x, light_curve+resid, s=msize*0.75, linewidth=1, zorder=0, alpha=0.5, marker='+', color='blue')

plt.xlabel('BJD')

plt.ylabel('Relative Flux')

plt.plot(x, light_curve, linewidth=2, color='orange', alpha=0.8, zorder=15) # overplot Transit model at data

pp = PdfPages(save_directory+'JWST_NIRSpec_Prism_fit_light_curve_bin'+str(bin)+'_corrected.pdf')

plt.savefig(pp, format='pdf')

pp.close()

plt.clf()

plt.close('all') # close all figures

# --------------------------------------------------------------------------

k = k + wk # step wavelength index to next bin

print('** Can Now View Output PDFs in ', save_directory)

You are using the 3D limb darkening models.

Current Directories Entered:

./notebookrun2/

./notebookrun2/3DGrid

Filename: mmu_t45g45m00v05.flx

Closest values to your inputs:

Teff : 4500

M_H : 0.0

log(g): 4.5

Limb darkening parameters:

4param 0.93487504 -0.53100790 0.43228942 -0.16657071

3param 2.59284576 -3.50115296 1.49775298

Quad 0.08088662 0.45653998

Linear 0.46807470

c1 = 0.9348750381814881

c2 = -0.5310078997267651

c3 = 0.43228941801541876

c4 = -0.16657071491026204

Fitting Bin 0 Wavelength = 1.5502650866666667 Range= [ 1.3906398 : 1.6900786 ]

rprs: 0.08040000000000003 current chi^2= 13259.13166655233 scatter 0.002395991127564558

rprs: 0.08040000000000003 current chi^2= 13259.13166655233 scatter 0.002395991127564558

rprs: 0.08040000000000003 current chi^2= 13259.13166655233 scatter 0.002395991127564558

rprs: 0.08040270774358477 current chi^2= 13259.38074200222 scatter 0.0023959973363420354

rprs: 0.08040000000000003 current chi^2= 13248.278737808054 scatter 0.0023959681306094903

rprs: 0.08040000000000003 current chi^2= 13259.130280097768 scatter 0.002395991001516553

rprs: 0.08040000000000003 current chi^2= 13259.131429252546 scatter 0.002395991105986051

rprs: 0.08040000000000003 current chi^2= 13259.128587330806 scatter 0.0023959911661302655

rprs: 0.08040000000000003 current chi^2= 13259.130052164794 scatter 0.002395991156807991

rprs: 0.08040000000000003 current chi^2= 13259.128612551267 scatter 0.0023959911746075746

rprs: 0.08040000000000003 current chi^2= 13259.131010275192 scatter 0.002395991067794625

rprs: 0.07871465070060435 current chi^2= 13085.427976810099 scatter 0.002392291458873503

rprs: 0.07871734914035922 current chi^2= 13085.430446645514 scatter 0.002392291573664804

rprs: 0.07871465070060435 current chi^2= 13085.587014370758 scatter 0.0023922675490652486

rprs: 0.07871465070060435 current chi^2= 13085.427963429927 scatter 0.002392291457736874

rprs: 0.07871465070060435 current chi^2= 13085.427976247262 scatter 0.002392291458820945

rprs: 0.07871465070060435 current chi^2= 13085.427966650637 scatter 0.002392291460651128

rprs: 0.07871465070060435 current chi^2= 13085.427969542667 scatter 0.0023922914603166693

rprs: 0.07871465070060435 current chi^2= 13085.427963002876 scatter 0.002392291461733644

rprs: 0.07871465070060435 current chi^2= 13085.42796888552 scatter 0.0023922914582929604

rprs: 0.07869507850297419 current chi^2= 13085.417570023197 scatter 0.0023922893084169137

rprs: 0.07869777683160512 current chi^2= 13085.417701212195 scatter 0.0023922893094966723

rprs: 0.07869507850297419 current chi^2= 13085.645099230947 scatter 0.002392265381629663

rprs: 0.07869507850297419 current chi^2= 13085.417570306718 scatter 0.0023922893085273553

rprs: 0.07869507850297419 current chi^2= 13085.417570023383 scatter 0.002392289308415772

rprs: 0.07869507850297419 current chi^2= 13085.41757009202 scatter 0.0023922893085145626

rprs: 0.07869507850297419 current chi^2= 13085.41757007312 scatter 0.0023922893084357293

rprs: 0.07869507850297419 current chi^2= 13085.417570193436 scatter 0.002392289308529068

rprs: 0.07869507850297419 current chi^2= 13085.41757008867 scatter 0.002392289308566094

rprs: 0.07869514603866212 current chi^2= 13085.417569994974 scatter 0.0023922892879302293

rprs: 0.07869514603866212 current chi^2= 13085.417569994974 scatter 0.0023922892879302293

Re-Fitting Bin 0 Wavelength = 1.5502650866666667 Range= [ 1.3906398 : 1.6900786 ]

redchi 1.598902440126463

chi2 13085.417569994974

nfree 8184

bic 3908.731693909619

aic 3852.6443871313845
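
The statistics above can be reproduced from chi2 and nfree alone. This sketch assumes the definitions lmfit uses for its fit statistics and that the number of varied parameters is 8 (the parameters reported with uncertainties in the fit report for this bin); the variable names are illustrative only.

```python
import math

# Values copied from the bin 0 output above
chi2 = 13085.417569994974
nfree = 8184    # degrees of freedom reported by the fit
nvarys = 8      # assumption: rprs, f0, xsh, ysh, x2sh, y2sh, xysh, comm
ndata = nfree + nvarys

# Reduced chi-square: chi-square per degree of freedom
redchi = chi2 / nfree

# Information criteria built from the chi-square based -2 ln(likelihood)
neg2_log_like = ndata * math.log(chi2 / ndata)
aic = neg2_log_like + 2 * nvarys
bic = neg2_log_like + math.log(ndata) * nvarys

print(f"redchi = {redchi:.6f}, aic = {aic:.3f}, bic = {bic:.3f}")
```

A reduced chi-square of about 1.6 indicates that the residual scatter is somewhat larger than the assumed per-point uncertainties.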

L-M FIT Variable

[[Variables]]

cosinc: 0.05481076 (fixed)

rho_star: 8.320569 (fixed)

a_Rs: 14.54 (fixed)

rprs: 0.07869515 +/- 5.4580e-04 (0.69%) (init = 0.0804)

t0: 2454865 (fixed)

f0: 278433.709 +/- 22.2711416 (0.01%) (init = 278410.6)

ecc: 0 (fixed)

omega: 0 (fixed)

Fslope: 0 (fixed)

xsh: -0.00112032 +/- 9.9569e-05 (8.89%) (init = -0.001091413)

ysh: 3.3600e-05 +/- 9.2623e-05 (275.66%) (init = 0.0002380423)

x2sh: -4.1703e-04 +/- 2.2616e-04 (54.23%) (init = -0.0003157139)

y2sh: -3.3390e-04 +/- 2.2424e-04 (67.16%) (init = -0.0001744718)

xysh: 6.7719e-04 +/- 4.4533e-04 (65.76%) (init = 0.0003395803)

comm: 5.4547e-04 +/- 8.2561e-05 (15.14%) (init = 0.0002697908)

[[Correlations]] (unreported correlations are < 0.100)

C(y2sh, xysh) = -0.9820

C(x2sh, xysh) = -0.9755

C(x2sh, y2sh) = +0.9376

C(rprs, f0) = +0.8090

C(xsh, ysh) = -0.6325

C(xsh, comm) = -0.4878

C(f0, x2sh) = -0.3857

C(f0, y2sh) = -0.3280

C(ysh, comm) = -0.3010

C(f0, xysh) = +0.2739

C(xsh, x2sh) = +0.2427

C(xsh, xysh) = -0.2134

C(rprs, x2sh) = -0.1917

C(xysh, comm) = +0.1795

C(xsh, y2sh) = +0.1757

C(x2sh, comm) = -0.1724

C(rprs, xsh) = -0.1644

C(y2sh, comm) = -0.1486

C(rprs, y2sh) = -0.1369

C(rprs, ysh) = +0.1341

C(f0, xsh) = -0.1185

/opt/hostedtoolcache/Python/3.11.12/x64/lib/python3.11/site-packages/uncertainties/core.py:1024: UserWarning: Using UFloat objects with std_dev==0 may give unexpected results.

warn("Using UFloat objects with std_dev==0 may give unexpected results.")

Residual standard deviation (ppm) : 2392.2892879302294

Photon noise (ppm) : 1895.1303791485568

Photon noise performance (%) : 79.21827801972032

White noise (ppm) : 2387.1294434056217

Red noise (ppm) : 157.34481188723825

Transit depth measured error (ppm) : 85.9031200241312
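
The noise figures above are related by simple arithmetic. The sketch below works under two assumptions consistent with the printed numbers: the photon noise performance is the ratio of photon noise to residual scatter, and the transit depth error follows from first-order error propagation of depth = rprs². It also checks how close the white and red noise terms come to the residual scatter when added in quadrature. How the notebook itself derives the white and red components is not shown in this section.

```python
import math

# Values copied from the bin 0 fit report and noise summary above
rprs, rprs_err = 0.07869515, 5.4580e-04
resid_std_ppm = 2392.2892879302294
photon_ppm = 1895.1303791485568
white_ppm = 2387.1294434056217
red_ppm = 157.34481188723825

# Photon noise as a percentage of the measured residual scatter
photon_perf = 100.0 * photon_ppm / resid_std_ppm

# depth = rprs**2, so sigma_depth ~ 2 * rprs * sigma_rprs (first order)
depth_err_ppm = 2.0 * rprs * rprs_err * 1e6

# White and red noise added in quadrature
total_ppm = math.hypot(white_ppm, red_ppm)

print(f"photon noise performance = {photon_perf:.2f} %")
print(f"transit depth error      = {depth_err_ppm:.2f} ppm")
print(f"quadrature sum           = {total_ppm:.2f} ppm")
```

The small difference between the propagated depth error and the printed value comes from using the rounded rprs uncertainty shown in the fit report.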

** Can Now View Output PDFs in ./notebookrun2/

You are using the 3D limb darkening models.

Current Directories Entered:

./notebookrun2/

./notebookrun2/3DGrid

Filename: mmu_t45g45m00v05.flx

Closest values to your inputs:

Teff : 4500

M_H : 0.0

log(g): 4.5

Limb darkening parameters:

4param 1.12080978 -0.97843649 0.75312747 -0.25673904

3param 2.76671190 -3.96262651 1.73859744

Quad 0.01052381 0.47328338

Linear 0.41191185

c1 = 1.1208097816289275

c2 = -0.9784364859067153

c3 = 0.7531274724186052

c4 = -0.25673904162133504

Fitting Bin 1 Wavelength = 1.84545072 Range= [ 1.6900786 : 1.9783379 ]

rprs: 0.08040000000000003 current chi^2= 14409.258018796532 scatter 0.0015901487499034492

rprs: 0.08040000000000003 current chi^2= 14409.258018796532 scatter 0.0015901487499034492

rprs: 0.08040000000000003 current chi^2= 14409.258018796532 scatter 0.0015901487499034492

rprs: 0.08040270774358477 current chi^2= 14409.640927768778 scatter 0.001590146481393384

rprs: 0.08040000000000003 current chi^2= 14384.26032306856 scatter 0.0015901324693844408

rprs: 0.08040000000000003 current chi^2= 14409.256908299743 scatter 0.0015901486882368267

rprs: 0.08040000000000003 current chi^2= 14409.257916219321 scatter 0.0015901487443378867

rprs: 0.08040000000000003 current chi^2= 14409.25555294551 scatter 0.0015901487404224768

rprs: 0.08040000000000003 current chi^2= 14409.2576202528 scatter 0.0015901487487454792

rprs: 0.08040000000000003 current chi^2= 14409.25456157607 scatter 0.0015901487376063068

rprs: 0.08040000000000003 current chi^2= 14409.256671850297 scatter 0.001590148674606289

rprs: 0.08047646010433446 current chi^2= 14107.324261228376 scatter 0.0015893596671447225

rprs: 0.08047916825772544 current chi^2= 14107.324659469552 scatter 0.0015893596876831995

rprs: 0.08047646010433446 current chi^2= 14107.879803752661 scatter 0.0015893437275776225

rprs: 0.08047646010433446 current chi^2= 14107.324262182954 scatter 0.001589359667150261

rprs: 0.08047646010433446 current chi^2= 14107.324261352853 scatter 0.0015893596670279642

rprs: 0.08047646010433446 current chi^2= 14107.32426131755 scatter 0.001589359667148644

rprs: 0.08047646010433446 current chi^2= 14107.32426127115 scatter 0.001589359667143888

rprs: 0.08047646010433446 current chi^2= 14107.324261251102 scatter 0.0015893596671449827

rprs: 0.08047646010433446 current chi^2= 14107.324262107395 scatter 0.001589359667272411

rprs: 0.08047594215086162 current chi^2= 14107.324257120452 scatter 0.0015893597540903442

rprs: 0.08047594215086162 current chi^2= 14107.324257120452 scatter 0.0015893597540903442

Re-Fitting Bin 1 Wavelength = 1.84545072 Range= [ 1.6900786 : 1.9783379 ]

redchi 1.7237688486217562

chi2 14107.324257120452

nfree 8184

bic 4524.734588062798

aic 4468.647281284564

L-M FIT Variable

[[Variables]]

cosinc: 0.05481076 (fixed)

rho_star: 8.320569 (fixed)

a_Rs: 14.54 (fixed)

rprs: 0.08047594 +/- 3.5164e-04 (0.44%) (init = 0.0804)

t0: 2454865 (fixed)

f0: 679862.650 +/- 36.2424298 (0.01%) (init = 679718.7)

ecc: 0 (fixed)

omega: 0 (fixed)

Fslope: 0 (fixed)

xsh: -0.00119414 +/- 6.6177e-05 (5.54%) (init = -0.001210365)

ysh: -5.0144e-04 +/- 6.1567e-05 (12.28%) (init = -0.0004319937)

x2sh: -1.1190e-04 +/- 1.5033e-04 (134.34%) (init = -8.991835e-05)

y2sh: -6.4120e-05 +/- 1.4905e-04 (232.45%) (init = -1.47937e-05)

xysh: -3.4287e-05 +/- 2.9598e-04 (863.24%) (init = -0.0001337487)

comm: 0.00107817 +/- 5.4864e-05 (5.09%) (init = 0.001005529)

[[Correlations]] (unreported correlations are < 0.100)

C(y2sh, xysh) = -0.9820

C(x2sh, xysh) = -0.9755

C(x2sh, y2sh) = +0.9376

C(rprs, f0) = +0.8102

C(xsh, ysh) = -0.6328

C(xsh, comm) = -0.4875

C(f0, x2sh) = -0.3863

C(f0, y2sh) = -0.3290

C(ysh, comm) = -0.3011

C(f0, xysh) = +0.2747

C(xsh, x2sh) = +0.2431

C(xsh, xysh) = -0.2139

C(rprs, x2sh) = -0.1930

C(xysh, comm) = +0.1791

C(xsh, y2sh) = +0.1762

C(x2sh, comm) = -0.1719

C(rprs, xsh) = -0.1654

C(y2sh, comm) = -0.1482

C(rprs, y2sh) = -0.1388

C(rprs, ysh) = +0.1364

C(f0, xsh) = -0.1196

/opt/hostedtoolcache/Python/3.11.12/x64/lib/python3.11/site-packages/uncertainties/core.py:1024: UserWarning: Using UFloat objects with std_dev==0 may give unexpected results.

warn("Using UFloat objects with std_dev==0 may give unexpected results.")

Residual standard deviation (ppm) : 1589.3597540903443

Photon noise (ppm) : 1212.8006149210241

Photon noise performance (%) : 76.30749500229793

White noise (ppm) : 1588.7050678099595

Red noise (ppm) : 45.70298588985219