# PSF Photometry

Use case: PSF photometry, creating a PSF, deriving a Color-Magnitude Diagram.

Data: NIRCam simulated images of the Large Magellanic Cloud (LMC) Astrometric Calibration Field obtained using MIRAGE and run through the JWST pipeline. The simulations are obtained using a 4-point subpixel dither for three pairs of wide filters: F070W, F115W, and F200W for the SW channel, and F277W, F356W, and F444W for the LW channel. We simulated only 1 NIRCam SW detector (i.e., “NRCB1”). For this example, we use Level-2 images (.cal, calibrated but not rectified) for two SW filters (F115W and F200W) and derive the photometry in each of them. The images for the other filters are also available and can be used to test the notebook and/or different filter combinations.

Tools: photutils.

Cross-instrument: NIRSpec, NIRISS, MIRI.

Documentation: This notebook is part of STScI's larger post-pipeline Data Analysis Tools Ecosystem.

PSF Photometry can be obtained using:

- a single model obtained from STPSF
- a grid of PSF models from STPSF
- a single effective PSF (ePSF)

## Work in Progress

- create a grid of ePSFs and perform the reduction using the ePSF grid
- use the ePSF grid to perturb the STPSF model

The notebook shows:

- how to obtain the PSF model from STPSF (or build an ePSF)
- how to perform PSF photometry on the image
- how to cross-match the catalogs of the different images
- how to derive and apply photometric zeropoints

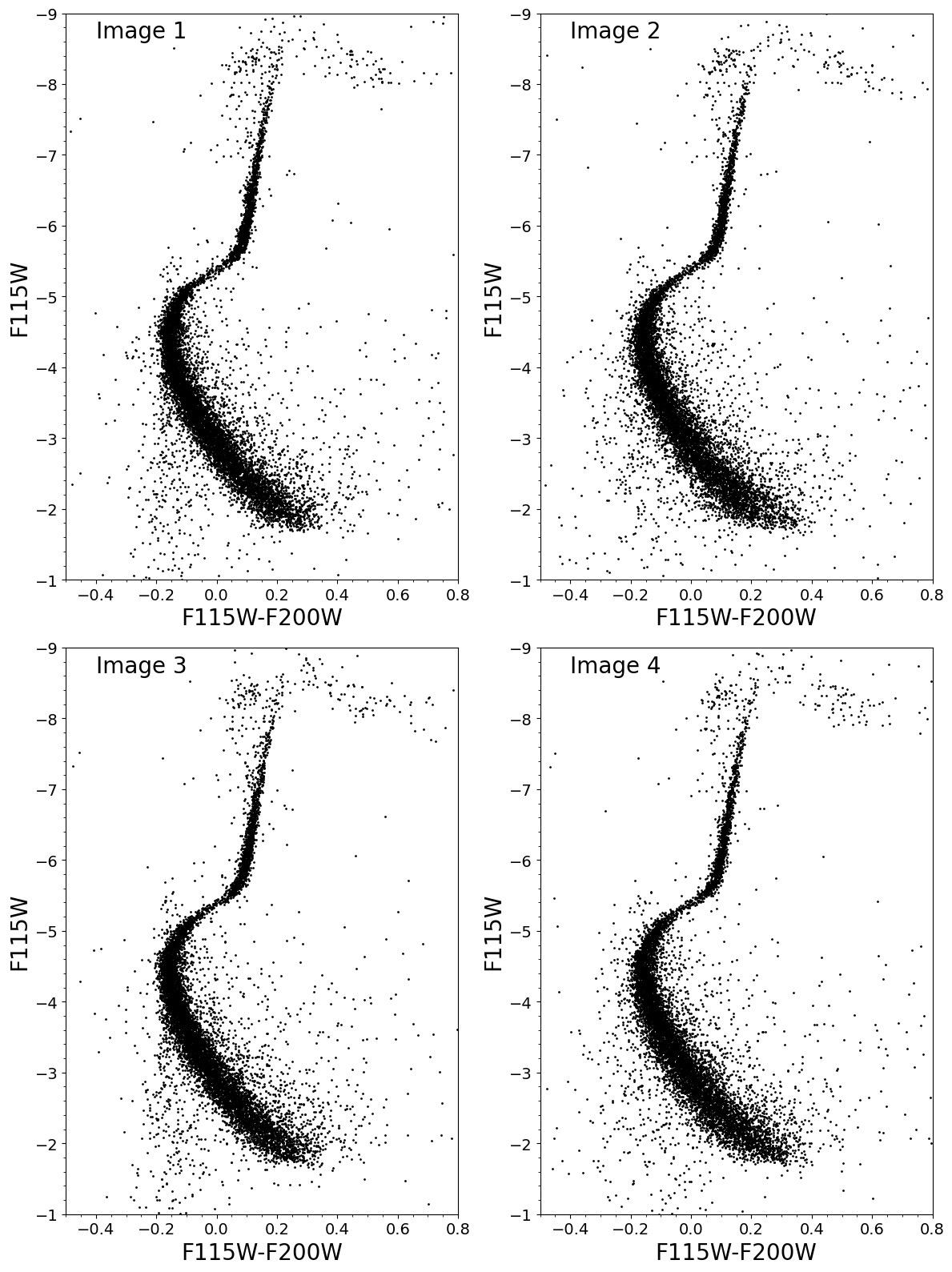

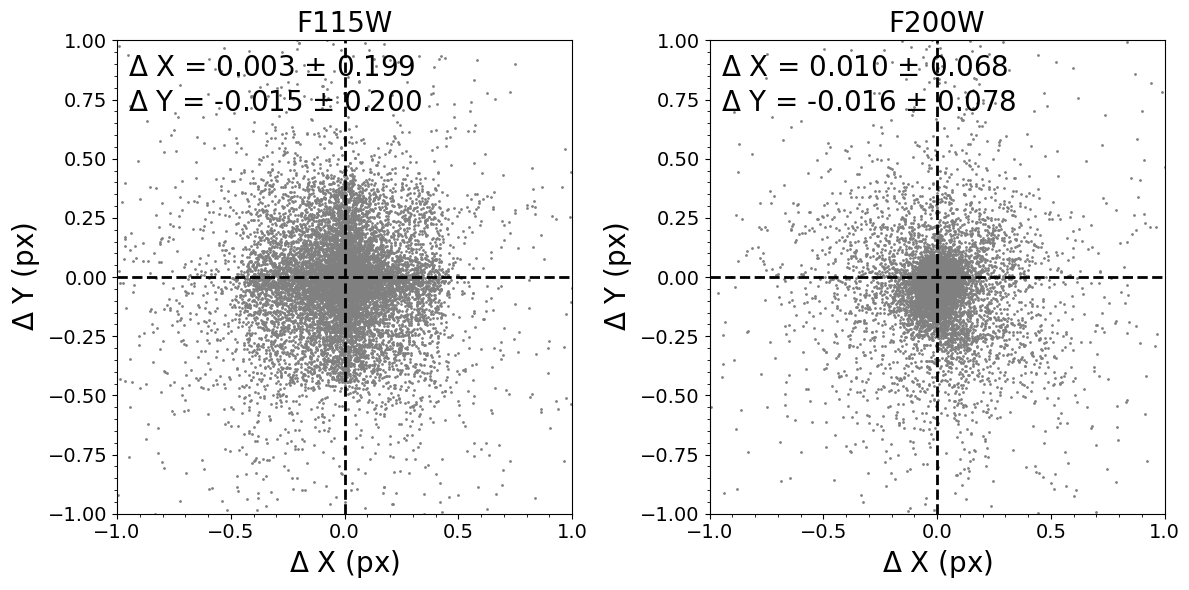

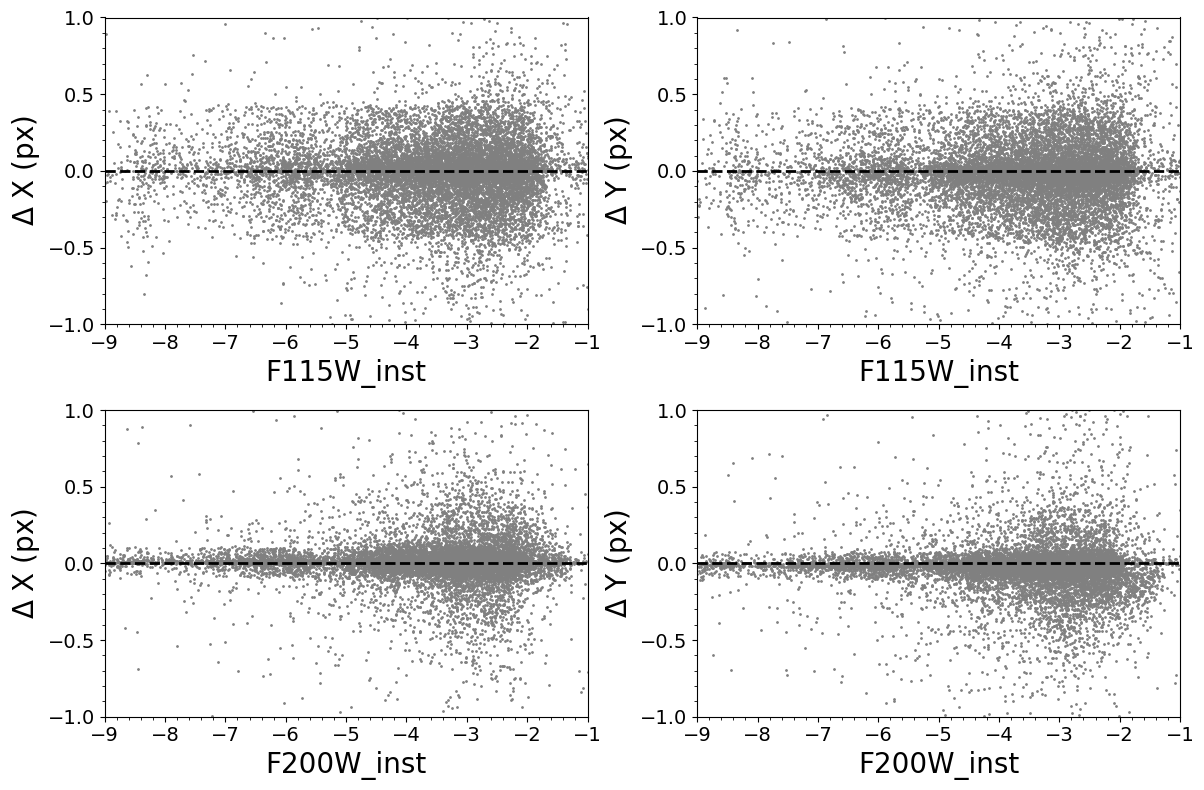

Final plots show:

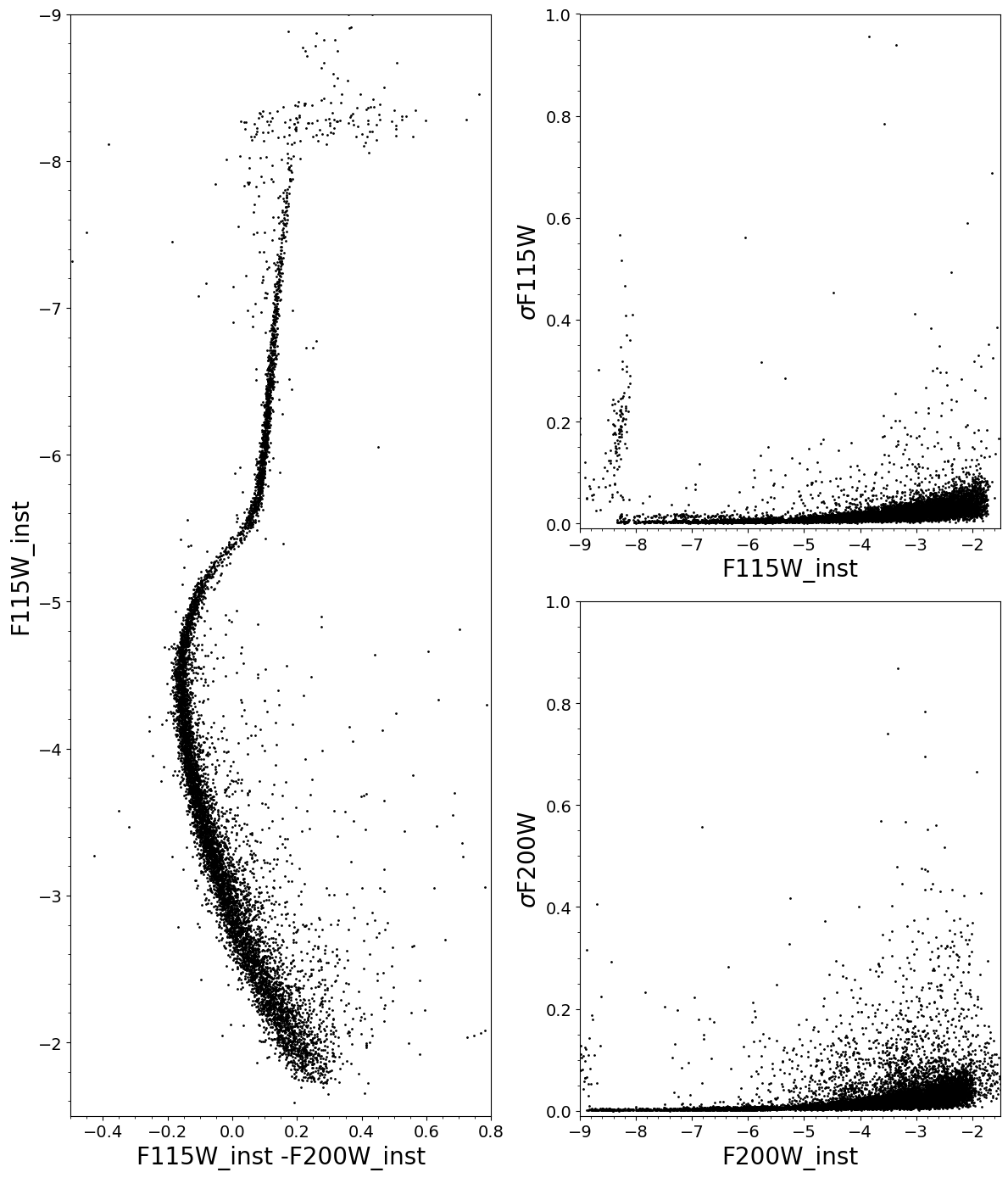

Instrumental Color-Magnitude Diagrams for the 4 images

Instrumental Color-Magnitude Diagrams and errors

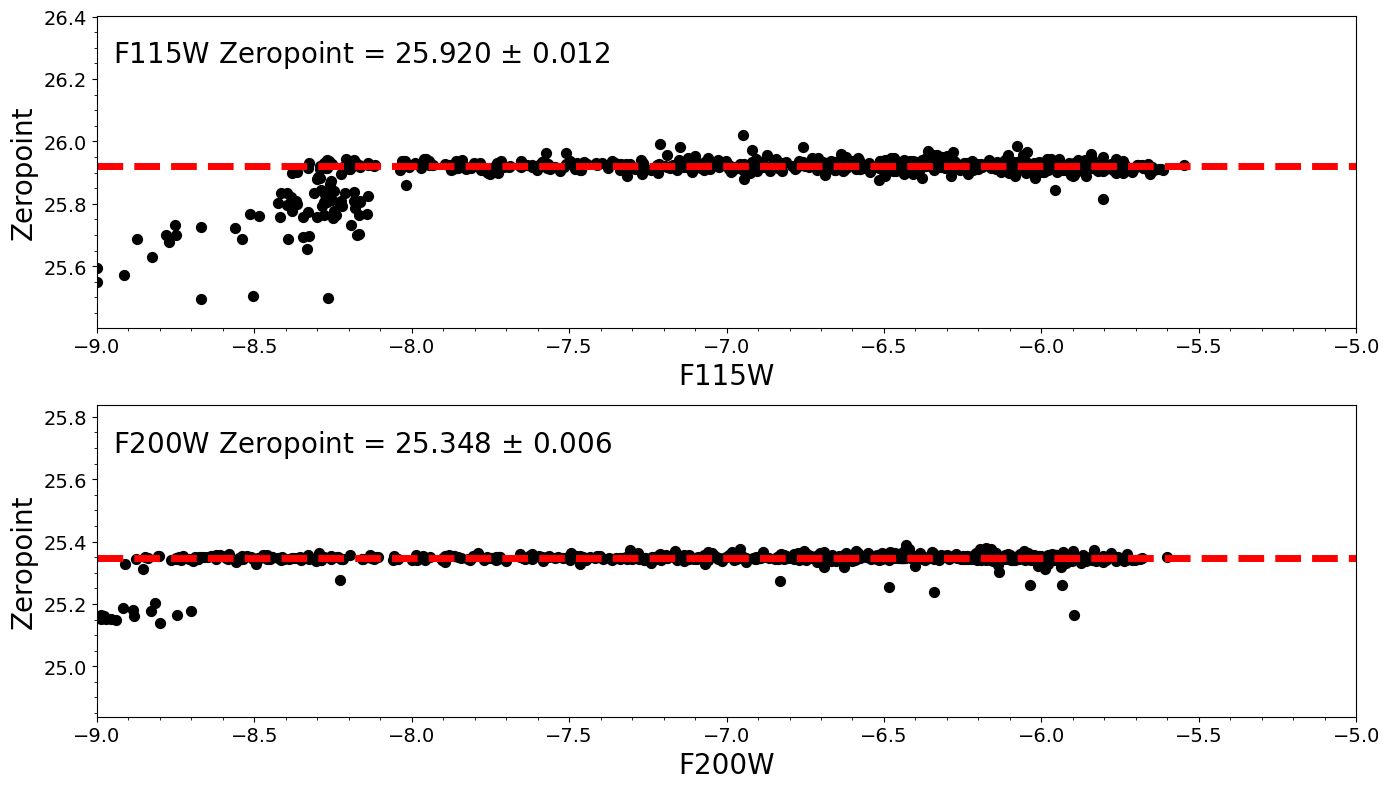

Magnitude Zeropoints

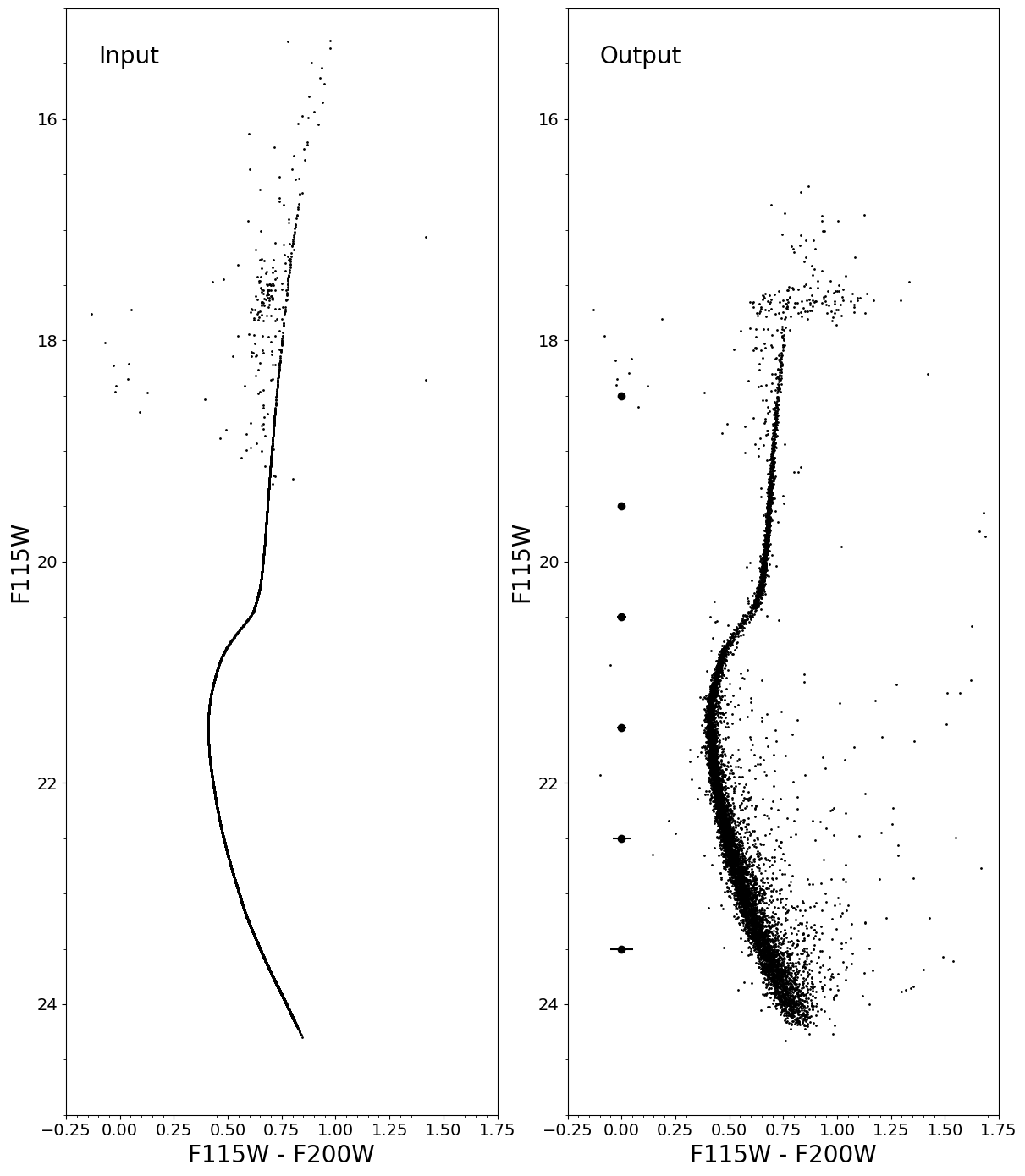

Calibrated Color-Magnitude Diagram (compared with Input Color-Magnitude Diagram)

Comparison between input and output photometry

Note on pysynphot: Data files for pysynphot are distributed separately by the Calibration Reference Data System (CRDS). They are expected to follow a certain directory structure under the root directory, identified by the PYSYN_CDBS environment variable that must be set prior to using this package. In the example below, the root directory is arbitrarily named /my/local/dir/trds/.

```shell
export PYSYN_CDBS=/my/local/dir/trds/
```

See documentation here for the configuration and download of the data files.
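The environment variable can also be set from Python before stsynphot or STPSF are used, which is what the download cell further down does with `os.environ`. The sketch below is a hypothetical helper (`configure_pysyn_cdbs` is not part of any package) that sets `PYSYN_CDBS` and reports which expected subdirectories are missing. Treating `calspec` as required is an assumption, based on the Vega-spectrum path that stsynphot looks up; check the synphot/stsynphot documentation for the complete layout.

```python
import os
from pathlib import Path


def configure_pysyn_cdbs(root, required_subdirs=("calspec",)):
    """Set PYSYN_CDBS to `root` and return the expected subdirectories that are missing.

    Assumption: `required_subdirs` is illustrative only; "calspec" is where the
    Vega spectrum is looked up, but it is not the full reference-data layout.
    """
    root_path = Path(root).expanduser()
    # Keep a trailing separator, as in the `export` example above.
    os.environ["PYSYN_CDBS"] = str(root_path) + os.sep
    # Report every expected subdirectory that does not exist under the root.
    return [name for name in required_subdirs if not (root_path / name).is_dir()]
```

For example, `configure_pysyn_cdbs("/my/local/dir/trds/")` returns `['calspec']` if that directory has not been populated yet.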

## Imports

```python
import glob
import os
import tarfile
import requests
import time
import warnings
from urllib import request
from urllib.parse import urlparse

import numpy as np
import pandas as pd
import stpsf

from astropy import units as u
from astropy.coordinates import SkyCoord, match_coordinates_sky
from astropy.io import fits
from astropy.modeling.fitting import LevMarLSQFitter
from astropy.nddata import NDData
from astropy.stats import sigma_clipped_stats
from astropy.table import QTable, Table
from astropy.utils.exceptions import AstropyUserWarning
from astropy.visualization import simple_norm

from jwst.datamodels import ImageModel

from photutils.aperture import (CircularAnnulus, CircularAperture,
                                aperture_photometry)
from photutils.background import MADStdBackgroundRMS, MMMBackground
from photutils.detection import DAOStarFinder
from photutils.psf import (EPSFBuilder, GriddedPSFModel, IterativePSFPhotometry,
                           SourceGrouper, extract_stars)
```

## Import Plotting Functions

```python
%matplotlib inline
from matplotlib import pyplot as plt
import matplotlib.ticker as ticker

plt.rcParams['image.cmap'] = 'viridis'
plt.rcParams['image.origin'] = 'lower'
plt.rcParams['axes.titlesize'] = plt.rcParams['axes.labelsize'] = 14
plt.rcParams['xtick.labelsize'] = plt.rcParams['ytick.labelsize'] = 14

# If Helvetica is not installed, matplotlib logs "findfont: Font family 'helvetica' not found"
# and falls back to its default font.
font1 = {'family': 'helvetica', 'color': 'black', 'weight': 'normal', 'size': '12'}
font2 = {'family': 'helvetica', 'color': 'black', 'weight': 'normal', 'size': '20'}
```

## Download STPSF and Synphot Data

```python
# Set environment variables
os.environ["STPSF_PATH"] = "./data/stpsf-data"

# STPSF data
stpsf_url = 'https://stsci.box.com/shared/static/kqfolg2bfzqc4mjkgmujo06d3iaymahv.gz'
stpsf_file = './stpsf-data-LATEST.tar.gz'
stpsf_folder = "./data"


def download_file(url, dest_path, timeout=60):
    """Stream a file from an http(s) URL to dest_path."""
    parsed_url = urlparse(url)
    if parsed_url.scheme not in ["http", "https"]:
        raise ValueError(f"Unsupported URL scheme: {parsed_url.scheme}")
    response = requests.get(url, stream=True, timeout=timeout)
    response.raise_for_status()
    with open(dest_path, "wb") as f:
        for chunk in response.iter_content(chunk_size=8192):
            f.write(chunk)


# Gather STPSF files (only if the data folder does not exist yet)
stpsfExist = os.path.exists(stpsf_folder)
if not stpsfExist:
    os.makedirs(stpsf_folder)
    download_file(stpsf_url, stpsf_file)
    with tarfile.open(stpsf_file) as gzf:
        gzf.extractall(stpsf_folder, filter='data')

# Set environment variables
os.environ["PYSYN_CDBS"] = "./grp/redcat/trds/"

# Synphot data
synphot_url = 'http://ssb.stsci.edu/trds/tarfiles/synphot5.tar.gz'
synphot_file = './synphot5.tar.gz'
synphot_folder = './grp'

# Gather synphot files (only if the data folder does not exist yet)
if not os.path.exists(synphot_folder):
    os.makedirs(synphot_folder)
    request.urlretrieve(synphot_url, synphot_file)
    with tarfile.open(synphot_file) as gzf:
        gzf.extractall('./', filter='data')
```

## Load the images and create some useful dictionaries

We load all the images and we create a dictionary that contains all of them, divided by detectors and filters. This is useful to check which detectors and filters are available and to decide if we want to perform the photometry on all of them or only on a subset (for example, only on the SW filters).

We also create a dictionary with some useful parameters for the analysis. The dictionary contains the photometric zeropoints (from MIRAGE configuration files) and the NIRCam point spread function (PSF) FWHM, from the NIRCam Point Spread Function JDox page. The FWHM values are calculated from the analysis of the expected NIRCam PSFs simulated with STPSF.

Note: this dictionary will be updated once zeropoint and FWHM values are available for each detector after commissioning.

Hence, we have two dictionaries:

- a dictionary for the single Level-2 calibrated images
- a dictionary with some other useful parameters
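The cell below builds the image dictionary directly from the FITS headers. As a minimal, self-contained sketch of the same grouping logic, assuming the `(filename, DETECTOR, FILTER)` values have already been read from each primary header, the hypothetical helpers below map the LW detector names to the `NRCA5`/`NRCB5` aliases, split filters into SW and LW with the same `> 24` rule, and give each detector its own filter dictionary (the notebook cell instead shares one filter dictionary across all detectors of a channel).

```python
from collections import defaultdict

# Long-wavelength detector names mapped to the aliases used in dict_images
LW_ALIASES = {"NRCALONG": "NRCA5", "NRCBLONG": "NRCB5"}


def channel_from_filter(filter_name):
    """Return 'SW' or 'LW' with the rule used in this notebook: the two digits after
    'F' give the approximate wavelength in tenths of a micron, and values > 24 are LW."""
    return "LW" if float(filter_name[1:3]) > 24 else "SW"


def group_images(records):
    """Group (filename, detector, filter) records as {detector: {filter: [filenames]}}."""
    grouped = defaultdict(lambda: defaultdict(list))
    for filename, detector, filter_name in records:
        det = LW_ALIASES.get(detector, detector)
        grouped[det][filter_name].append(filename)
    # Convert the nested defaultdicts to plain dicts
    return {det: dict(by_filter) for det, by_filter in grouped.items()}
```

Because each detector gets a separate inner dictionary, this version stays correct if images from several SW detectors are loaded at once.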

```python
dict_images = {'NRCA1': {}, 'NRCA2': {}, 'NRCA3': {}, 'NRCA4': {}, 'NRCA5': {},
               'NRCB1': {}, 'NRCB2': {}, 'NRCB3': {}, 'NRCB4': {}, 'NRCB5': {}}

dict_filter_short = {}
dict_filter_long = {}

ff_short = []
det_short = []
det_long = []
ff_long = []
detlist_short = []
detlist_long = []
filtlist_short = []
filtlist_long = []

images_dir = './'

# Download the Level-2 images only if no calibrated images are present yet
if not glob.glob('./*cal*fits'):
    print("Downloading images")
    boxlink_images_lev2 = 'https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/stellar_photometry/images_level2.tar.gz'
    boxfile_images_lev2 = './images_level2.tar.gz'
    request.urlretrieve(boxlink_images_lev2, boxfile_images_lev2)
    with tarfile.open(boxfile_images_lev2, 'r') as tar:
        tar.extractall(filter='data')

images = sorted(glob.glob(os.path.join(images_dir, "*cal.fits")))

for image in images:
    with fits.open(image) as im:
        f = im[0].header['FILTER']
        d = im[0].header['DETECTOR']

    # Rename the LW detectors to match the keys of dict_images
    if d == 'NRCBLONG':
        d = 'NRCB5'
    elif d == 'NRCALONG':
        d = 'NRCA5'

    # Filters above 2.4 micron (e.g. F277W -> 27) belong to the LW channel
    wv = float(f[1:3])
    if wv > 24:
        ff_long.append(f)
        det_long.append(d)
    else:
        ff_short.append(f)
        det_short.append(d)

    detlist_short = sorted(dict.fromkeys(det_short))
    detlist_long = sorted(dict.fromkeys(det_long))

    for x in ff_short:
        dict_filter_short.setdefault(x, {})
    for x in ff_long:
        dict_filter_long.setdefault(x, {})

    # Note: every SW (LW) detector points to the same filter dictionary, so this
    # bookkeeping assumes a single detector per channel, as in this example.
    for d_s in detlist_short:
        dict_images[d_s] = dict_filter_short
    for d_l in detlist_long:
        dict_images[d_l] = dict_filter_long

    filtlist_short = sorted(dict_filter_short)
    filtlist_long = sorted(dict_filter_long)

    if len(dict_images[d][f]) == 0:
        dict_images[d][f] = {'images': [image]}
    else:
        dict_images[d][f]['images'].append(image)

print("Available Detectors for SW channel:", detlist_short)
print("Available Detectors for LW channel:", detlist_long)
print("Available SW Filters:", filtlist_short)
print("Available LW Filters:", filtlist_long)
```

Downloading images

Available Detectors for SW channel: ['NRCB1']

Available Detectors for LW channel: ['NRCB5']

Available SW Filters: ['F070W', 'F115W', 'F200W']

Available LW Filters: ['F277W', 'F356W', 'F444W']

```python
filters = ['F070W', 'F090W', 'F115W', 'F140M', 'F150W2', 'F150W', 'F162M', 'F164N', 'F182M',
           'F187N', 'F200W', 'F210M', 'F212N', 'F250M', 'F277W', 'F300M', 'F322W2', 'F323N',
           'F335M', 'F356W', 'F360M', 'F405N', 'F410M', 'F430M', 'F444W', 'F460M', 'F466N', 'F470N', 'F480M']

# PSF FWHM in pixels, one value per entry of `filters`
psf_fwhm = [0.987, 1.103, 1.298, 1.553, 1.628, 1.770, 1.801, 1.494, 1.990, 2.060, 2.141, 2.304, 2.341, 1.340,
            1.444, 1.585, 1.547, 1.711, 1.760, 1.830, 1.901, 2.165, 2.179, 2.300, 2.302, 2.459, 2.507, 2.535, 2.574]

zp_modA = [25.7977, 25.9686, 25.8419, 24.8878, 27.0048, 25.6536, 24.6957, 22.3073, 24.8258, 22.1775, 25.3677, 24.3296,
           22.1036, 22.7850, 23.5964, 24.8239, 23.6452, 25.3648, 20.8604, 23.5873, 24.3778, 23.4778, 20.5588,
           23.2749, 22.3584, 23.9731, 21.9502, 20.0428, 19.8869, 21.9002]

zp_modB = [25.7568, 25.9771, 25.8041, 24.8738, 26.9821, 25.6279, 24.6767, 22.2903, 24.8042, 22.1499, 25.3391, 24.2909,
           22.0574, 22.7596, 23.5011, 24.6792, 23.5769, 25.3455, 20.8631, 23.4885, 24.3883, 23.4555, 20.7007,
           23.2763, 22.4677, 24.1562, 22.0422, 20.1430, 20.0173, 22.4086]

# Note: zp_modA and zp_modB contain 30 values each, while `filters` and `psf_fwhm` contain 29.
# The comprehension below pairs values by index, so the last zeropoint of each list is never
# used; check the alignment against the MIRAGE configuration files before relying on it.
dict_utils = {filters[i]: {'psf fwhm': psf_fwhm[i], 'VegaMAG zp modA': zp_modA[i],
                           'VegaMAG zp modB': zp_modB[i]} for i in range(len(filters))}
```

## Select the detectors and/or filters for the analysis

If we are interested only in some filters (and/or some detectors) in the analysis, as in this example, we can select the Level-2 calibrated images from the dictionary for those filters (detectors) and analyze only those images.

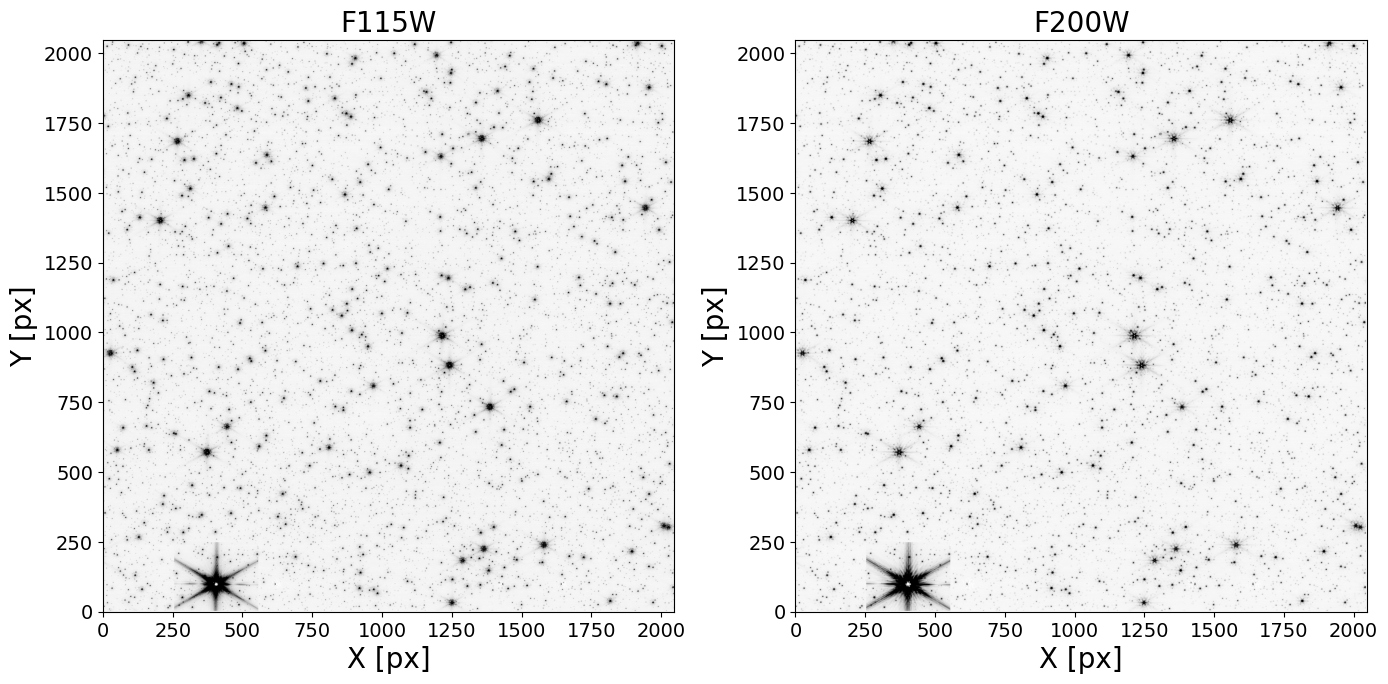

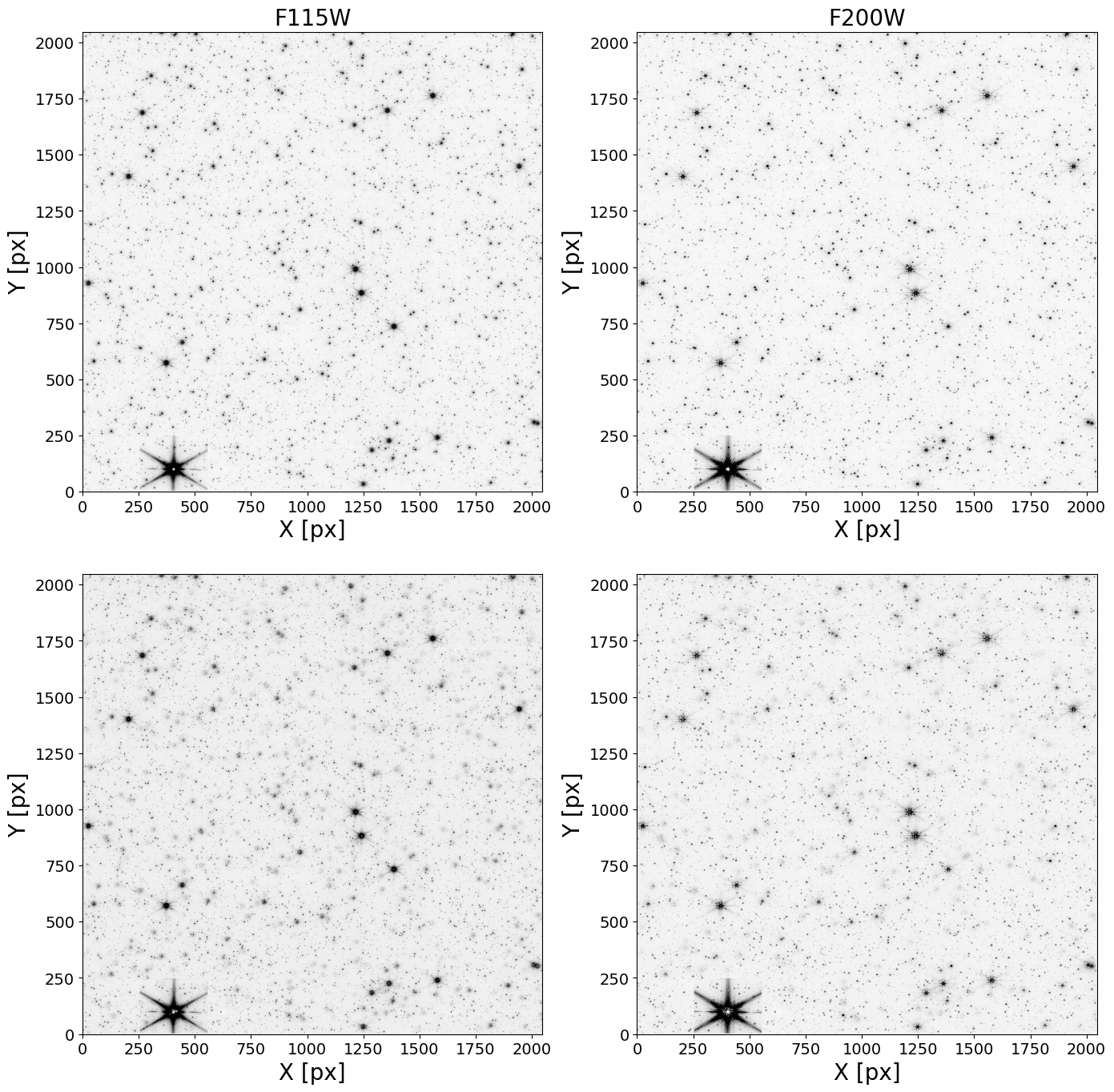

In this particular example, we analyze images for filters F115W and F200W for the detector NRCB1.

```python
dets_short = ['NRCB1']  # detector of interest in this example
filts_short = ['F115W', 'F200W']  # filters of interest in this example
```

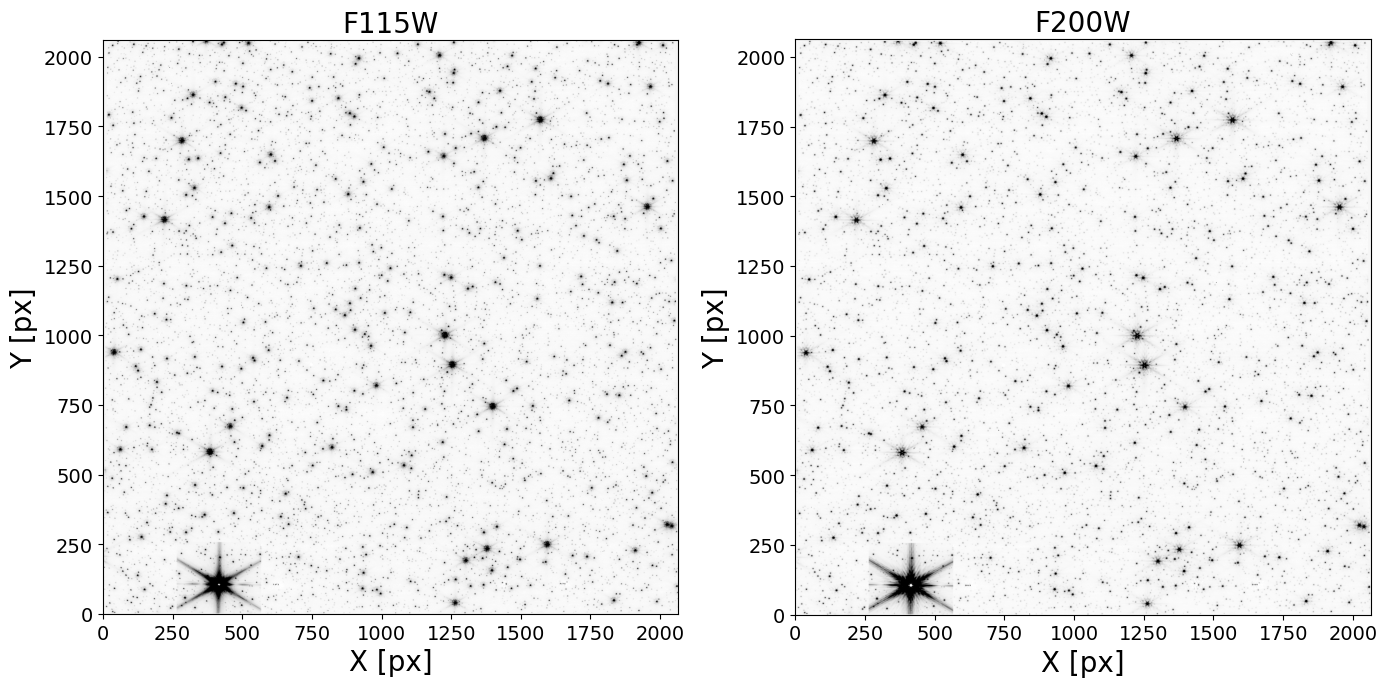

## Display the images

Check that the images do not show artifacts and can be used in the analysis.
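The display cell below uses `simple_norm(data_sb, 'sqrt', percent=99.)`. A rough NumPy equivalent is sketched here, assuming that `percent=99.` keeps the central 99% of the finite pixel values (clipping 0.5% at each end) before a square-root stretch is applied. It only illustrates what the normalization does; it is not a replacement for astropy's implementation.

```python
import numpy as np


def sqrt_stretch(data, percent=99.0):
    """Clip to the central `percent` of finite pixels, rescale to [0, 1], then take sqrt."""
    data = np.asarray(data, dtype=float)
    finite = data[np.isfinite(data)]
    tail = (100.0 - percent) / 2.0
    lo, hi = np.percentile(finite, [tail, 100.0 - tail])
    if hi == lo:
        # Flat image: nothing to stretch
        return np.zeros_like(data)
    scaled = np.clip((data - lo) / (hi - lo), 0.0, 1.0)
    # NaN pixels stay NaN, so matplotlib leaves them blank
    return np.sqrt(scaled)
```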

```python
fig, ax = plt.subplots(ncols=2, figsize=(14, 14))

for det in dets_short:
    for i, filt in enumerate(filts_short):
        image = fits.open(dict_images[det][filt]['images'][0])
        data_sb = image[1].data
        norm = simple_norm(data_sb, 'sqrt', percent=99.)
        ax[i].imshow(data_sb, norm=norm, cmap='Greys')
        ax[i].set_xlabel("X [px]", fontdict=font2)
        ax[i].set_ylabel("Y [px]", fontdict=font2)
        ax[i].set_title(filt, fontdict=font2)

plt.tight_layout()
```


## Create the PSF models

### I. Create the PSF model using STPSF

We create a dictionary that will contain the PSFs created using STPSF for the detectors and filters selected above.

```python
dict_psfs_stpsf = {}

for det in dets_short:
    dict_psfs_stpsf.setdefault(det, {})
    for j, filt in enumerate(filts_short):
        dict_psfs_stpsf[det].setdefault(filt, {})
        dict_psfs_stpsf[det][filt]['psf model grid'] = None
        dict_psfs_stpsf[det][filt]['psf model single'] = None
```

The function below creates a single PSF or a grid of PSFs and allows one to save the PSF as a FITS file. The model PSFs are stored by default in the `dict_psfs_stpsf` dictionary. For the grid of PSFs, users can select the number of PSFs to be created. The PSF can be created detector-sampled or oversampled (the oversampling factor can be changed inside the function).

Note: The default STPSF source spectrum is, if pysynphot is installed, a G2V star spectrum from Castelli & Kurucz (2004). Without pysynphot, the default is a simple flat spectrum such that the same number of photons are detected at each wavelength. The function below does not rely on this default: it passes an explicit G5V spectrum from the Phoenix models (`stpsf.specFromSpectralType('G5V', catalog='phoenix')`).
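With `oversample=4` and `fov_pixels=11`, each oversampled STPSF model has 4 × 4 samples per detector pixel, which should give 44 × 44 samples in total. The sketch below (hypothetical helper `bin_oversampled_psf`) shows the simplest way to bring such an array back to detector sampling, by summing each 4 × 4 block. It assumes the array shape is an exact multiple of the oversampling factor and ignores any detector effects (such as distortion) that STPSF may include in its detector-sampled output.

```python
import numpy as np


def bin_oversampled_psf(psf, oversample):
    """Block-sum an oversampled 2D PSF onto the detector pixel grid."""
    psf = np.asarray(psf, dtype=float)
    ny, nx = psf.shape
    if ny % oversample or nx % oversample:
        raise ValueError("PSF shape must be a multiple of the oversampling factor")
    # Split each axis into (detector pixel, sub-pixel) and sum over the sub-pixels
    blocks = psf.reshape(ny // oversample, oversample, nx // oversample, oversample)
    return blocks.sum(axis=(1, 3))
```

Summing (rather than averaging) preserves the total flux of the model, which is what a PSF normalized to unit sum requires.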

```python
def create_psf_model(det='NRCB1', fov=11, create_grid=False, num=9, save_psf=False, detsampled=False):
    # Note: `filt` is not a parameter; it is read from the global loop variable,
    # so call this function inside a loop over `filts_short` (as done below).
    nrc = stpsf.NIRCam()
    nrc.detector = det
    nrc.filter = filt

    src = stpsf.specFromSpectralType('G5V', catalog='phoenix')

    if detsampled:
        print("Creating a detector sampled PSF")
        fov = 21
    else:
        print("Creating an oversampled PSF")

    print(f"Using a {fov} px fov")

    if create_grid:
        print("")
        print(f"Creating a grid of PSF for filter {filt} and detector {det}")
        print("")

        # STPSF saves lowercase file names (e.g. nircam_nrcb1_f115w_fovp11_samp4_npsf25.fits)
        outname = f'nircam_{det.lower()}_{filt.lower()}_fovp{fov}_samp4_npsf{num}.fits'
        if os.path.exists(outname):
            grid_psf = GriddedPSFModel.read(outname)
        else:
            grid_psf = nrc.psf_grid(num_psfs=num, oversample=4, source=src, all_detectors=False,
                                    fov_pixels=fov, use_detsampled_psf=detsampled,
                                    save=save_psf)

        dict_psfs_stpsf[det][filt]['psf model grid'] = grid_psf

    else:
        print("")
        print(f"Creating a single PSF for filter {filt} and detector {det}")
        print("")

        # A single PSF is a one-element grid, so the saved file ends in npsf1
        outname = f'nircam_{det.lower()}_{filt.lower()}_fovp{fov}_samp4_npsf1.fits'
        if os.path.exists(outname):
            single_psf = GriddedPSFModel.read(outname)
        else:
            single_psf = nrc.psf_grid(num_psfs=1, oversample=4, source=src, all_detectors=False,
                                      fov_pixels=fov, use_detsampled_psf=detsampled,
                                      save=save_psf)

        dict_psfs_stpsf[det][filt]['psf model single'] = single_psf

    return dict_psfs_stpsf
```

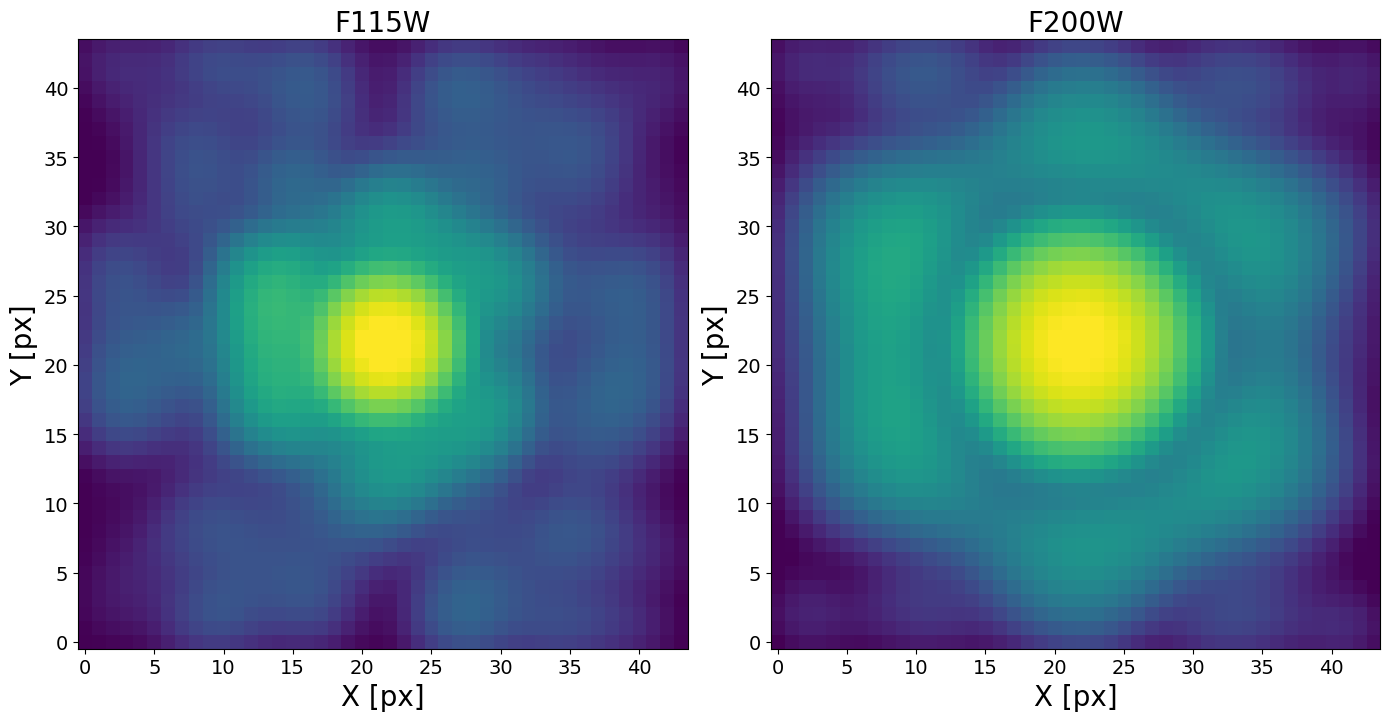

#### Single PSF model

```python
for det in dets_short:
    for filt in filts_short:
        create_psf_model(det=det, fov=11, num=1, create_grid=False, save_psf=True, detsampled=False)
```

2025-05-15 19:19:07,173 - stpipe - WARNING - /opt/hostedtoolcache/Python/3.11.12/x64/lib/python3.11/site-packages/stsynphot/spectrum.py:615: AstropyUserWarning: Failed to load Vega spectrum from ./grp/redcat/trds//calspec/alpha_lyr_stis_011.fits; Functionality involving Vega will be severely limited: FileNotFoundError(2, 'No such file or directory')

warnings.warn(

Creating an oversampled PSF

Using a 11 px fov

Creating a single PSF for filter F115W and detector NRCB1

Running instrument: NIRCam, filter: F115W

Running detector: NRCB1

Position 1/1: (1023, 1023) pixels

Position 1/1 centroid: (np.float64(21.60990978939513), np.float64(21.35084523556168))

Saving file: nircam_nrcb1_f115w_fovp11_samp4_npsf1.fits

Creating an oversampled PSF

Using a 11 px fov

Creating a single PSF for filter F200W and detector NRCB1

Running instrument: NIRCam, filter: F200W

Running detector: NRCB1

Position 1/1: (1023, 1023) pixels

Position 1/1 centroid: (np.float64(21.636708563807037), np.float64(21.310194586557518))

Saving file: nircam_nrcb1_f200w_fovp11_samp4_npsf1.fits

#### Display the single PSF models

```python
fig, ax = plt.subplots(ncols=2, figsize=(14, 14))

for det in dets_short:
    for i, filt in enumerate(filts_short):
        img = dict_psfs_stpsf[det][filt]['psf model single'].data[0]
        norm_epsf = simple_norm(img, 'log', percent=99.)
        ax[i].imshow(img, norm=norm_epsf)
        ax[i].set_xlabel('X [px]', fontdict=font2)
        ax[i].set_ylabel('Y [px]', fontdict=font2)
        ax[i].set_title(filt, fontdict=font2)

plt.tight_layout()
```


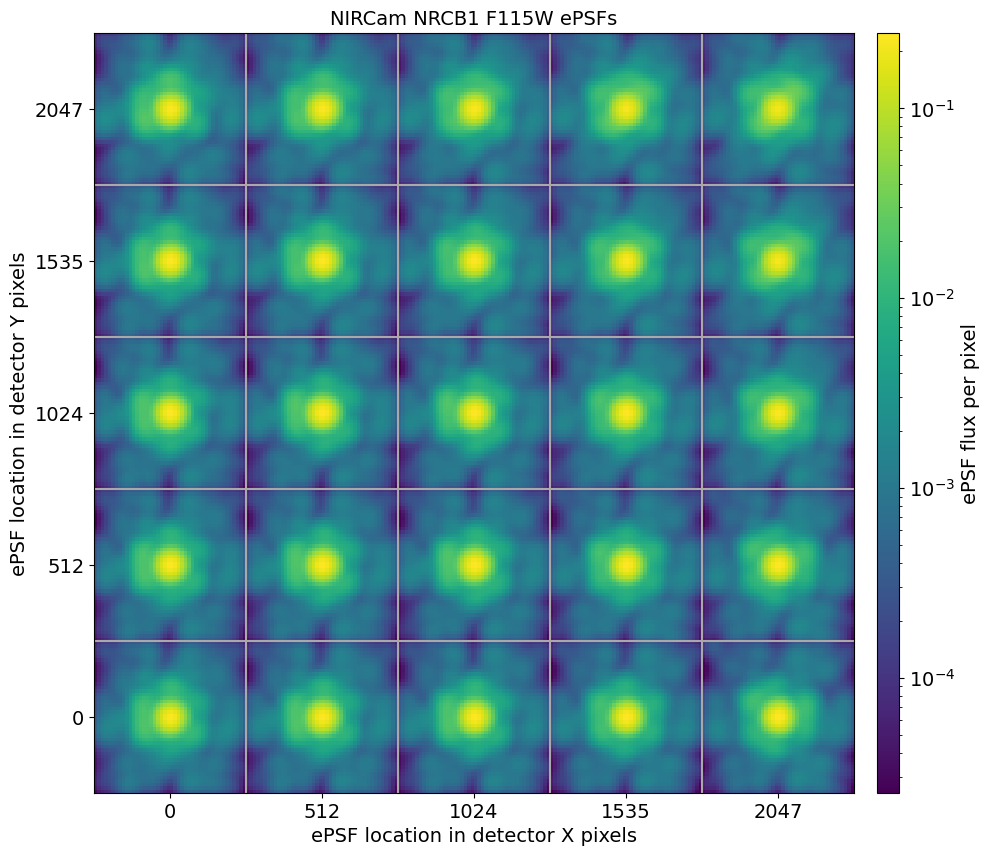

#### PSF grid

```python
for det in dets_short:
    for filt in filts_short:
        create_psf_model(det=det, fov=11, num=25, create_grid=True, save_psf=True, detsampled=False)
```

Creating an oversampled PSF

Using a 11 px fov

Creating a grid of PSF for filter F115W and detector NRCB1

Running instrument: NIRCam, filter: F115W

Running detector: NRCB1

Position 1/25: (0, 0) pixels

Position 1/25 centroid: (np.float64(21.414239727296508), np.float64(21.278125202808823))

Position 2/25: (0, 512) pixels

Position 2/25 centroid: (np.float64(21.5070194497243), np.float64(21.2024365666066))

Position 3/25: (0, 1024) pixels

Position 3/25 centroid: (np.float64(21.58885372862631), np.float64(21.130535340017943))

Position 4/25: (0, 1535) pixels

Position 4/25 centroid: (np.float64(21.70185693347241), np.float64(21.18204895055869))

Position 5/25: (0, 2047) pixels

Position 5/25 centroid: (np.float64(21.803263397923683), np.float64(21.236911974969313))

Position 6/25: (512, 0) pixels

Position 6/25 centroid: (np.float64(21.399673750334703), np.float64(21.302371393412347))

Position 7/25: (512, 512) pixels

Position 7/25 centroid: (np.float64(21.50886727220409), np.float64(21.32610359648041))

Position 8/25: (512, 1024) pixels

Position 8/25 centroid: (np.float64(21.617824797318352), np.float64(21.258377829641923))

Position 9/25: (512, 1535) pixels

Position 9/25 centroid: (np.float64(21.74142310828882), np.float64(21.309397781890244))

Position 10/25: (512, 2047) pixels

Position 10/25 centroid: (np.float64(21.82795697277073), np.float64(21.297228543929137))

Position 11/25: (1024, 0) pixels

Position 11/25 centroid: (np.float64(21.429040873530745), np.float64(21.318357379800315))

Position 12/25: (1024, 512) pixels

Position 12/25 centroid: (np.float64(21.50479081832898), np.float64(21.368720802556986))

Position 13/25: (1024, 1024) pixels

Position 13/25 centroid: (np.float64(21.610045617107634), np.float64(21.350996489683464))

Position 14/25: (1024, 1535) pixels

Position 14/25 centroid: (np.float64(21.74214090660759), np.float64(21.37697770855501))

Position 15/25: (1024, 2047) pixels

Position 15/25 centroid: (np.float64(21.865608907888127), np.float64(21.352734978464394))

Position 16/25: (1535, 0) pixels

Position 16/25 centroid: (np.float64(21.432868293317128), np.float64(21.468381142896543))

Position 17/25: (1535, 512) pixels

Position 17/25 centroid: (np.float64(21.495978210592597), np.float64(21.504452807081467))

Position 18/25: (1535, 1024) pixels

Position 18/25 centroid: (np.float64(21.597316422886312), np.float64(21.479621413514145))

Position 19/25: (1535, 1535) pixels

Position 19/25 centroid: (np.float64(21.70638293711345), np.float64(21.48075133303023))

Position 20/25: (1535, 2047) pixels

Position 20/25 centroid: (np.float64(21.869195680707886), np.float64(21.500939148174236))

Position 21/25: (2047, 0) pixels

Position 21/25 centroid: (np.float64(21.457193439251768), np.float64(21.69686140782905))

Position 22/25: (2047, 512) pixels

Position 22/25 centroid: (np.float64(21.53744071638886), np.float64(21.65280776862352))

Position 23/25: (2047, 1024) pixels

Position 23/25 centroid: (np.float64(21.589573106179028), np.float64(21.58401931192337))

Position 24/25: (2047, 1535) pixels

Position 24/25 centroid: (np.float64(21.72034084572062), np.float64(21.572958385149008))

Position 25/25: (2047, 2047) pixels

Position 25/25 centroid: (np.float64(21.83278743176354), np.float64(21.51145381969895))

Saving file: nircam_nrcb1_f115w_fovp11_samp4_npsf25.fits

Creating an oversampled PSF

Using a 11 px fov

Creating a grid of PSF for filter F200W and detector NRCB1

Running instrument: NIRCam, filter: F200W

Running detector: NRCB1

Position 1/25: (0, 0) pixels

Position 1/25 centroid: (np.float64(21.47656785727052), np.float64(21.193124910773882))

Position 2/25: (0, 512) pixels

Position 2/25 centroid: (np.float64(21.534426597150937), np.float64(21.133717870527924))

Position 3/25: (0, 1024) pixels

Position 3/25 centroid: (np.float64(21.590552240603255), np.float64(21.086646943269514))

Position 4/25: (0, 1535) pixels

Position 4/25 centroid: (np.float64(21.720626353067722), np.float64(21.1437870027739))

Position 5/25: (0, 2047) pixels

Position 5/25 centroid: (np.float64(21.840342057488808), np.float64(21.205165585789203))

Position 6/25: (512, 0) pixels

Position 6/25 centroid: (np.float64(21.45894758436686), np.float64(21.218909790717355))

Position 7/25: (512, 512) pixels

Position 7/25 centroid: (np.float64(21.541527669835176), np.float64(21.25464826533736))

Position 8/25: (512, 1024) pixels

Position 8/25 centroid: (np.float64(21.62758700371923), np.float64(21.213967491532614))

Position 9/25: (512, 1535) pixels

Position 9/25 centroid: (np.float64(21.763529015270514), np.float64(21.27904890116982))

Position 10/25: (512, 2047) pixels

Position 10/25 centroid: (np.float64(21.86390055120178), np.float64(21.289904729874028))

Position 11/25: (1024, 0) pixels

Position 11/25 centroid: (np.float64(21.48312892903334), np.float64(21.24220408389993))

Position 12/25: (1024, 512) pixels

Position 12/25 centroid: (np.float64(21.54132497684362), np.float64(21.30263025913289))

Position 13/25: (1024, 1024) pixels

Position 13/25 centroid: (np.float64(21.636891190962604), np.float64(21.310412406817495))

Position 14/25: (1024, 1535) pixels

Position 14/25 centroid: (np.float64(21.77822678210732), np.float64(21.359623595922052))

Position 15/25: (1024, 2047) pixels

Position 15/25 centroid: (np.float64(21.908644143480565), np.float64(21.364055005892805))

Position 16/25: (1535, 0) pixels

Position 16/25 centroid: (np.float64(21.499896950245198), np.float64(21.38853604532252))

Position 17/25: (1535, 512) pixels

Position 17/25 centroid: (np.float64(21.554353522311178), np.float64(21.43578745988458))

Position 18/25: (1535, 1024) pixels

Position 18/25 centroid: (np.float64(21.657165103091767), np.float64(21.434463068411162))

Position 19/25: (1535, 1535) pixels

Position 19/25 centroid: (np.float64(21.770258618303174), np.float64(21.46372381812464))

Position 20/25: (1535, 2047) pixels

Position 20/25 centroid: (np.float64(21.93092149825916), np.float64(21.505763383105883))

Position 21/25: (2047, 0) pixels

Position 21/25 centroid: (np.float64(21.542857519951543), np.float64(21.58223408985083))

Position 22/25: (2047, 512) pixels

Position 22/25 centroid: (np.float64(21.62272224228701), np.float64(21.559592579876973))

Position 23/25: (2047, 1024) pixels

Position 23/25 centroid: (np.float64(21.676888008849637), np.float64(21.52638261230662))

Position 24/25: (2047, 1535) pixels

Position 24/25 centroid: (np.float64(21.80285061932636), np.float64(21.535307661477546))

Position 25/25: (2047, 2047) pixels

Position 25/25 centroid: (np.float64(21.900911542326913), np.float64(21.49862255103234))

Saving file: nircam_nrcb1_f200w_fovp11_samp4_npsf25.fits

#### Display the PSF grid

For one filter (F115W), we show the grid of PSFs and the difference between each PSF and the mean PSF.

```python
griddedpsfmodel = dict_psfs_stpsf[dets_short[0]][filts_short[0]]['psf model grid']

# Grid of PSFs
fig = griddedpsfmodel.plot_grid(figsize=(10, 10))

# Difference of each PSF from the mean PSF
fig = griddedpsfmodel.plot_grid(figsize=(10, 10), deltas=True, cmap='viridis', vmax_scale=0.3)
```
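The `deltas=True` panel shows how each PSF in the grid departs from the mean PSF. A minimal NumPy sketch of that quantity is below, assuming `griddedpsfmodel.data` holds the PSF stack with shape `(n_psf, ny, nx)`. `max_relative_delta` is a hypothetical summary number (the largest absolute difference divided by the peak of the mean PSF) that the notebook does not compute.

```python
import numpy as np


def psf_deltas(psf_stack):
    """Return each PSF minus the mean PSF of the stack (shape: n_psf, ny, nx)."""
    psf_stack = np.asarray(psf_stack, dtype=float)
    return psf_stack - psf_stack.mean(axis=0)


def max_relative_delta(psf_stack):
    """Largest |PSF - mean PSF| across the stack, relative to the peak of the mean PSF."""
    psf_stack = np.asarray(psf_stack, dtype=float)
    mean_psf = psf_stack.mean(axis=0)
    return np.abs(psf_deltas(psf_stack)).max() / mean_psf.max()
```

For example, `max_relative_delta(griddedpsfmodel.data)` would give a single figure for how much the model PSF varies across the detector.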

### II. Create the PSF model by building an Effective PSF (ePSF)

More information on the photutils Effective PSF can be found here.

Select the stars from the images that we want to use to build the PSF. We use the DAOStarFinder function to find bright stars in the images (setting a high detection threshold). DAOStarFinder detects stars in an image using the DAOFIND (Stetson 1987) algorithm. DAOFIND searches images for local density maxima that have a peak amplitude greater than `threshold` (approximately; the threshold is applied to a convolved image) and have a size and shape similar to the defined 2D Gaussian kernel.

Note: The threshold and the minimum distance to the closest neighbour depend on the user's science case (i.e., number of stars in the field of view, crowding, number of bright sources, minimum number of stars required to build the ePSF, etc.) and must be modified accordingly.

Build the effective PSF (excluding objects whose bounding box exceeds the detector edge) using the EPSFBuilder function.
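`find_stars_epsf` below sets the DAOStarFinder threshold as `th[filt_num] * std + bkg`, where `std` comes from `MADStdBackgroundRMS` and `bkg` from `MMMBackground`. The sketch below reproduces those two statistics in plain NumPy under a simplifying assumption: the photutils classes sigma-clip the data first by default, while these hypothetical helpers use all finite pixels.

```python
import numpy as np


def mad_std(values):
    """Robust standard deviation: 1.4826 times the median absolute deviation."""
    values = np.asarray(values, dtype=float)
    med = np.median(values)
    return 1.4826 * np.median(np.abs(values - med))


def mmm_background(values):
    """DAOPHOT-style mode estimate, 3 * median - 2 * mean (no sigma clipping here)."""
    values = np.asarray(values, dtype=float)
    return 3.0 * np.median(values) - 2.0 * np.mean(values)


def detection_threshold(data, nsigma):
    """Absolute threshold nsigma * rms + background, from the finite pixels only."""
    data = np.asarray(data, dtype=float)
    finite = data[np.isfinite(data)]
    return nsigma * mad_std(finite) + mmm_background(finite)
```

Because the threshold is absolute, `th = [700, 500]` means "700 (500) times the robust background RMS above the background level" for the two filters.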

We create a dictionary that contains the effective PSF for the detectors and filters selected above.

```python
dict_psfs_epsf = {}

for det in dets_short:
    dict_psfs_epsf.setdefault(det, {})
    for j, filt in enumerate(filts_short):
        dict_psfs_epsf[det].setdefault(filt, {})
        dict_psfs_epsf[det][filt]['table psf stars'] = {}
        dict_psfs_epsf[det][filt]['epsf single'] = {}
        dict_psfs_epsf[det][filt]['epsf grid'] = {}
        for i in np.arange(0, len(dict_images[det][filt]['images']), 1):
            dict_psfs_epsf[det][filt]['table psf stars'][i + 1] = None
            dict_psfs_epsf[det][filt]['epsf single'][i + 1] = None
            dict_psfs_epsf[det][filt]['epsf grid'][i + 1] = None
```

Note that the unit of the Level-2 and Level-3 images from the pipeline is MJy/sr (hence a surface brightness). The actual unit of the image can be checked from the header keyword BUNIT. The scalar conversion constant is copied to the header keyword PHOTMJSR, which gives the conversion from DN/s to MJy/steradian. For our analysis we convert back to DN/s by dividing the image by PHOTMJSR.
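A small, defensive version of this conversion (hypothetical helper `to_countrate`) checks `BUNIT` before dividing by `PHOTMJSR`. It accepts an `astropy.io.fits.Header` or any mapping with `.get()`; the expected unit string `'MJy/sr'` matches what the notebook prints for these images.

```python
import numpy as np


def to_countrate(data_sb, header):
    """Convert a Level-2 image from MJy/sr to DN/s using the PHOTMJSR keyword."""
    units = header.get("BUNIT")
    if units != "MJy/sr":
        raise ValueError(f"Expected BUNIT = 'MJy/sr', found {units!r}")
    photmjsr = header.get("PHOTMJSR")
    if photmjsr is None or photmjsr <= 0:
        raise ValueError("PHOTMJSR is missing or not positive")
    # PHOTMJSR converts DN/s to MJy/sr, so dividing by it goes back to DN/s
    return np.asarray(data_sb, dtype=float) / photmjsr
```

In the notebook's terms, `to_countrate(image[1].data, image[1].header)` would be equivalent to `data_sb / imh['PHOTMJSR']`, with the unit check added.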

```python
def find_stars_epsf(img_num, filt_num, det='NRCA1', filt='F070W', dist_sel=False):
    # Uses the global lists `th` (detection threshold) and `min_sep` (minimum separation),
    # indexed by filt_num; they are defined in the next cell.
    bkgrms = MADStdBackgroundRMS()
    mmm_bkg = MMMBackground()

    image = fits.open(dict_images[det][filt]['images'][img_num])
    data_sb = image[1].data
    imh = image[1].header

    print(f"Finding PSF stars on image {img_num + 1} of filter {filt}, detector {det}")

    # Convert from surface brightness (MJy/sr) to count rate (DN/s)
    data = data_sb / imh['PHOTMJSR']
    units = imh['BUNIT']
    print(f"Conversion factor from {units} to DN/s for filter {filt}: {imh['PHOTMJSR']}")

    sigma_psf = dict_utils[filt]['psf fwhm']  # this is the FWHM (in pixels), not a Gaussian sigma
    print(f"FWHM for the filter {filt}: {sigma_psf} px")

    std = bkgrms(data)
    bkg = mmm_bkg(data)

    daofind = DAOStarFinder(threshold=th[filt_num] * std + bkg, fwhm=sigma_psf, roundhi=1.0, roundlo=-1.0,
                            sharplo=0.30, sharphi=1.40)
    psf_stars = daofind(data)
    dict_psfs_epsf[det][filt]['table psf stars'][img_num + 1] = psf_stars

    if dist_sel:
        print("")
        print("Calculating closest neighbour distance")

        d = []
        # Detect fainter stars too, to measure the distance to the closest neighbour
        daofind_tot = DAOStarFinder(threshold=10 * std + bkg, fwhm=sigma_psf, roundhi=1.0, roundlo=-1.0,
                                    sharplo=0.30, sharphi=1.40)
        stars_tot = daofind_tot(data)
        x_tot = stars_tot['xcentroid']
        y_tot = stars_tot['ycentroid']

        for xx, yy in zip(psf_stars['xcentroid'], psf_stars['ycentroid']):
            dist = np.sqrt((x_tot - xx)**2 + (y_tot - yy)**2)
            # The smallest distance (~0) is the star itself, so keep the second smallest
            sep = np.sort(dist)[1]
            d.append(sep)

        psf_stars['min distance'] = d
        mask_dist = (psf_stars['min distance'] > min_sep[filt_num])
        psf_stars = psf_stars[mask_dist]
        dict_psfs_epsf[det][filt]['table psf stars'][img_num + 1] = psf_stars

        print("Minimum distance required:", min_sep[filt_num], "px")
        print("")
        print(f"Number of isolated sources found in the image used to build ePSF for {filt}: {len(psf_stars)}")
        print("-----------------------------------------------------")
        print("")
    else:
        print("")
        print(f"Number of sources used to build ePSF for {filt}: {len(psf_stars)}")
        print("--------------------------------------------")
        print("")
```
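When `dist_sel=True`, the function loops over the PSF stars to find each one's closest neighbour. The same selection can be written with NumPy broadcasting, as in the hypothetical helpers below. Like the original, it assumes every PSF star also appears in the full catalog, so the smallest distance in each row is the star itself. Broadcasting builds an `N_psf × N_all` distance matrix, so for very large catalogs a KD-tree (for example `scipy.spatial.cKDTree`) is likely the better choice.

```python
import numpy as np


def nearest_neighbor_distance(x_sel, y_sel, x_all, y_all):
    """Distance from each selected star to its closest other detection in the full catalog."""
    dx = np.asarray(x_sel, dtype=float)[:, None] - np.asarray(x_all, dtype=float)[None, :]
    dy = np.asarray(y_sel, dtype=float)[:, None] - np.asarray(y_all, dtype=float)[None, :]
    dist = np.hypot(dx, dy)
    # Column 0 after sorting is the star matched with itself (distance ~0)
    return np.sort(dist, axis=1)[:, 1]


def isolation_mask(x_sel, y_sel, x_all, y_all, min_sep):
    """True for selected stars whose closest neighbour is farther than min_sep pixels."""
    return nearest_neighbor_distance(x_sel, y_sel, x_all, y_all) > min_sep
```

Inside `find_stars_epsf`, `isolation_mask(psf_stars['xcentroid'], psf_stars['ycentroid'], x_tot, y_tot, min_sep[filt_num])` would play the role of `mask_dist`.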

```python
tic = time.perf_counter()

th = [700, 500]  # threshold level for the two filters (length must match number of filters analyzed)
min_sep = [10, 10]  # minimum separation acceptable for ePSF stars from closest neighbour

for det in dets_short:
    for j, filt in enumerate(filts_short):
        for i in np.arange(0, len(dict_images[det][filt]['images']), 1):
            find_stars_epsf(i, j, det=det, filt=filt, dist_sel=False)

toc = time.perf_counter()

print("Elapsed Time for finding stars:", toc - tic)
```

Finding PSF stars on image 1 of filter F115W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F115W: 3.821892261505127

FWHM for the filter F115W: 1.298 px

Number of sources used to build ePSF for F115W: 1306

--------------------------------------------

Finding PSF stars on image 2 of filter F115W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F115W: 3.821892261505127

FWHM for the filter F115W: 1.298 px

Number of sources used to build ePSF for F115W: 1324

--------------------------------------------

Finding PSF stars on image 3 of filter F115W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F115W: 3.821892261505127

FWHM for the filter F115W: 1.298 px

Number of sources used to build ePSF for F115W: 1307

--------------------------------------------

Finding PSF stars on image 4 of filter F115W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F115W: 3.821892261505127

FWHM for the filter F115W: 1.298 px

Number of sources used to build ePSF for F115W: 1316

--------------------------------------------

Finding PSF stars on image 1 of filter F200W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F200W: 2.564082860946655

FWHM for the filter F200W: 2.141 px

Number of sources used to build ePSF for F200W: 1276

--------------------------------------------

Finding PSF stars on image 2 of filter F200W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F200W: 2.564082860946655

FWHM for the filter F200W: 2.141 px

Number of sources used to build ePSF for F200W: 1287

--------------------------------------------

Finding PSF stars on image 3 of filter F200W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F200W: 2.564082860946655

FWHM for the filter F200W: 2.141 px

Number of sources used to build ePSF for F200W: 1291

--------------------------------------------

Finding PSF stars on image 4 of filter F200W, detector NRCB1

Conversion factor from MJy/sr to DN/s for filter F200W: 2.564082860946655

FWHM for the filter F200W: 2.141 px

Number of sources used to build ePSF for F200W: 1278

--------------------------------------------

Elapsed Time for finding stars: 11.995874712999978
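The `min_sep` list set above is described as the minimum acceptable separation of an ePSF star from its closest neighbour. The body of `find_stars_epsf` is not shown in this section, so the following is only a minimal sketch of such an isolation cut, assuming plain NumPy arrays of centroid positions in pixels. The helper name `isolated_mask` and the toy positions are hypothetical.

```python
import numpy as np


def isolated_mask(x, y, min_sep):
    """Return a boolean mask that is True for sources whose closest
    neighbour lies farther than min_sep pixels away (hypothetical helper)."""
    xy = np.column_stack([x, y]).astype(float)
    # pairwise separations via broadcasting: shape (N, N)
    diff = xy[:, None, :] - xy[None, :, :]
    dist = np.hypot(diff[..., 0], diff[..., 1])
    # ignore each source's zero distance to itself
    np.fill_diagonal(dist, np.inf)
    return dist.min(axis=1) > min_sep


# toy positions (pixels): the first two sources are only 5 px apart
x = np.array([100.0, 105.0, 300.0, 520.0])
y = np.array([100.0, 100.0, 300.0, 80.0])
print(isolated_mask(x, y, min_sep=10))  # [False False  True  True]
```

The all-pairs distance matrix needs O(N²) memory, which is manageable for the roughly 1300 stars per image found above; for much larger catalogs a k-d tree (for example `scipy.spatial.cKDTree`) scales better.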

II. Build Effective PSF#

def build_epsf(det='NRCA1', filt='F070W'):
    # Note: this function reads the loop variables i (image index) and j (filter index),
    # and the globals sizes, oversample, dict_images and dict_psfs_epsf, from the calling cell.
    mmm_bkg = MMMBackground()

    with fits.open(dict_images[det][filt]['images'][i]) as image:
        data_sb = image[1].data
        imh = image[1].header
        # convert surface brightness (MJy/sr) to count rate (DN/s)
        data = data_sb / imh['PHOTMJSR']

    # keep only stars whose size x size cutout lies entirely inside the image
    hsize = (sizes[j] - 1) / 2
    x = dict_psfs_epsf[det][filt]['table psf stars'][i + 1]['xcentroid']
    y = dict_psfs_epsf[det][filt]['table psf stars'][i + 1]['ycentroid']
    mask = ((x > hsize) & (x < (data.shape[1] - 1 - hsize))
            & (y > hsize) & (y < (data.shape[0] - 1 - hsize)))

    stars_tbl = Table()
    stars_tbl['x'] = x[mask]
    stars_tbl['y'] = y[mask]

    # subtract a global background estimated with the MMM algorithm
    bkg = mmm_bkg(data)
    data_bkgsub = data - bkg

    nddata = NDData(data=data_bkgsub)
    stars = extract_stars(nddata, stars_tbl, size=sizes[j])

    print(f"Creating ePSF for image {i + 1} of filter {filt}, detector {det}")

    epsf_builder = EPSFBuilder(oversampling=oversample, maxiters=3, progress_bar=False)
    epsf, fitted_stars = epsf_builder(stars)

    dict_psfs_epsf[det][filt]['epsf single'][i + 1] = epsf

Note: here we limit the maximum number of iterations (maxiters) to 3 to keep the run time short; in practice, one should use about 10 or more iterations.
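Two array operations at the start of `build_epsf` are easy to check in isolation: dividing by the PHOTMJSR header keyword converts the Level-2 image from MJy/sr to DN/s, and the `hsize` mask drops stars whose `size × size` cutout would extend past the image border (these are the stars that would otherwise trigger the edge warnings silenced in the next cell). The sketch below repeats both steps on toy NumPy arrays. The image values and centroids are made up, and the PHOTMJSR value is assumed to be the F115W conversion factor printed by the Finding stars cell above.

```python
import numpy as np

# Toy inputs (hypothetical): a constant 2048 x 2048 image in MJy/sr and the
# F115W conversion factor printed above, assumed to be the PHOTMJSR value
photmjsr = 3.821892261505127
data_sb = np.full((2048, 2048), 100.0)

# MJy/sr -> DN/s: PHOTMJSR is the surface brightness that gives 1 DN/s
data = data_sb / photmjsr

size = 11                  # cutout size in pixels, as in `sizes`
hsize = (size - 1) / 2     # 5 pixels on each side of the central pixel

# toy centroids: near the left edge, at the centre, near the right edge
x = np.array([3.2, 1024.0, 2044.5])
y = np.array([500.0, 1024.0, 700.0])

# same edge cut as in build_epsf: keep stars whose cutout fits inside the image
mask = ((x > hsize) & (x < data.shape[1] - 1 - hsize)
        & (y > hsize) & (y < data.shape[0] - 1 - hsize))
print(mask)                # [False  True False]
```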

tic = time.perf_counter()

sizes = [11, 11]  # size (pixels) of the cutout extracted around each PSF star; one entry per filter analyzed
oversample = 4  # ePSF oversampling factor

for det in dets_short:
    for j, filt in enumerate(filts_short):
        for i in np.arange(0, len(dict_images[det][filt]['images']), 1):
            with warnings.catch_warnings():
                # ignore warnings about stars close to the image edge
                warnings.simplefilter("ignore", category=AstropyUserWarning)
                build_epsf(det=det, filt=filt)

toc = time.perf_counter()

print("Time to build the Effective PSF:", toc - tic)

Creating ePSF for image 1 of filter F115W, detector NRCB1

Creating ePSF for image 2 of filter F115W, detector NRCB1

Creating ePSF for image 3 of filter F115W, detector NRCB1

Creating ePSF for image 4 of filter F115W, detector NRCB1

Creating ePSF for image 1 of filter F200W, detector NRCB1

Creating ePSF for image 2 of filter F200W, detector NRCB1

Creating ePSF for image 3 of filter F200W, detector NRCB1

Creating ePSF for image 4 of filter F200W, detector NRCB1

Time to build the Effective PSF: 95.17481150700002

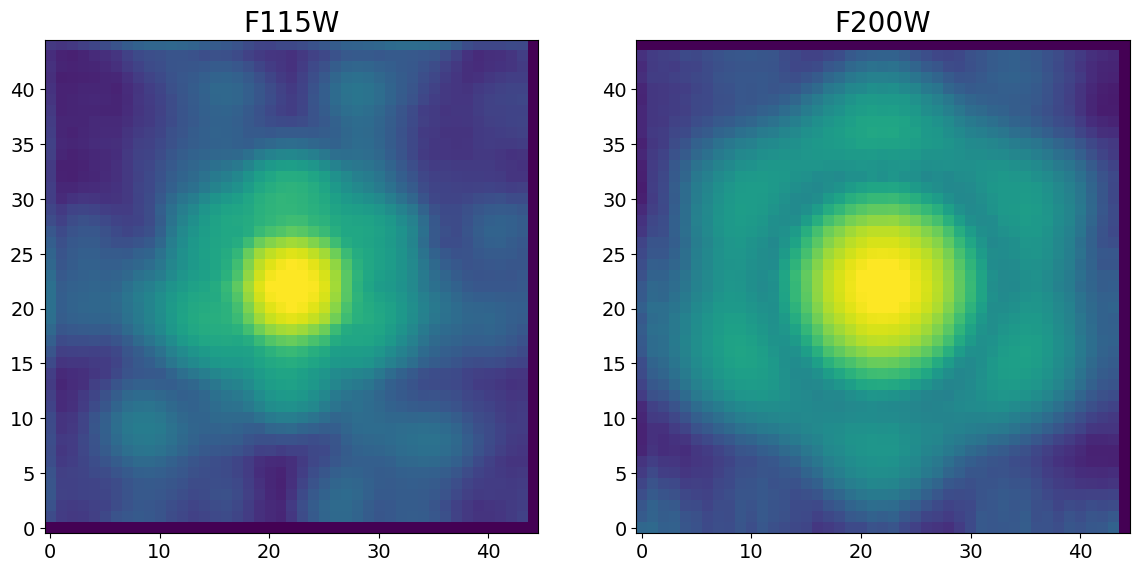

Display the ePSFs#

We display only one ePSF for each filter: the one built from the first image.

fig, ax = plt.subplots(ncols=2, figsize=(14, 14))

for det in dets_short:
    for i, filt in enumerate(filts_short):
        # ePSFs are stored by image number (1-4); show the one from image 1
        img = dict_psfs_epsf[det][filt]['epsf single'][1].data
        norm_epsf = simple_norm(img, 'log', percent=99.)
        ax[i].imshow(img, norm=norm_epsf)
        ax[i].set_title(filt, fontdict=font2)


Work in Progress - Build a grid of effective PSFs#

Two functions:

count PSF stars in the grid

create a gridded ePSF

The first function counts how many good PSF stars fall in each cell of an N × N grid, where N is the grid size. Starting from the grid size provided by the user, it steps down to the minimum 2 × 2 grid; a star is counted only if its cutout lies entirely inside the cell. Depending on how many PSF stars they want in each grid cell, users can choose the appropriate grid size or modify the detection thresholds set when selecting stars for the single ePSF (in the Finding stars cell above).
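The counting logic can be sketched with NumPy alone, assuming the PSF-star centroids are available as arrays `x` and `y` in pixels on a square 2048 × 2048 detector. The helper `count_stars_per_cell` below is hypothetical, not part of the notebook: it follows the same cell layout (cell centres spaced `2 * half_cell` pixels apart, with the x centre varying slowest, as in the printed cell numbering) and the same margin of `(size - 1) / 2` pixels, but returns the counts as an N × N array so that under-populated cells can be found with a single comparison.

```python
import numpy as np


def count_stars_per_cell(x, y, n_grid, image_size=2048, size=15):
    """Count stars in each cell of an n_grid x n_grid grid (hypothetical helper).

    A star is counted only if its size x size cutout lies entirely inside
    its cell. counts[ix, iy] is the cell centred on (centers[ix], centers[iy]),
    so counts.ravel()[n] corresponds to grid cell n + 1 in the printed numbering.
    """
    half_cell = int(image_size / n_grid / 2)   # same role as `points`
    half_size = (size - 1) / 2
    centers = np.arange(half_cell, 2 * half_cell * n_grid, 2 * half_cell)

    counts = np.zeros((n_grid, n_grid), dtype=int)
    for ix, xc in enumerate(centers):
        in_x = (x > xc - half_cell + half_size) & (x < xc + half_cell - half_size)
        for iy, yc in enumerate(centers):
            in_y = (y > yc - half_cell + half_size) & (y < yc + half_cell - half_size)
            counts[ix, iy] = np.count_nonzero(in_x & in_y)
    return centers, counts


# Toy centroids: the last two lie within 7 px of a cell boundary and are not counted
x = np.array([100.0, 600.0, 1500.0, 1030.0, 5.0])
y = np.array([100.0, 100.0, 1500.0, 1030.0, 5.0])
centers, counts = count_stars_per_cell(x, y, n_grid=2)
print(counts)

min_numpsf = 1
print(np.flatnonzero(counts.ravel() < min_numpsf) + 1)  # under-populated cells: [2 3]
```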

The second function creates a grid of ePSFs with EPSFBuilder. It should return a GriddedPSFModel object built from a 3D array of shape M × n × n, i.e. M two-dimensional n × n ePSFs, one per grid cell (M = N × N for an N × N grid). The model's metadata should include a grid_xypos entry giving the detector (x, y) position of each ePSF; the order of the tuples in grid_xypos must match the order of the ePSFs along the first axis of the 3D array.
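As a sketch of the data layout described above (not the final implementation), the hypothetical helper below stacks per-cell ePSF images into an M × n × n array and builds matching metadata. The `grid_xypos` and `oversampling` keys follow what photutils' `GriddedPSFModel` expects in the `meta` of the `NDData` object it is given; the toy Gaussian images and the 2 × 2 grid positions are assumptions for illustration only.

```python
import numpy as np


def stack_epsf_grid(epsf_images, xy_positions, oversampling=4):
    """Stack n x n ePSF images into an (M, n, n) array and build the
    metadata for a gridded PSF model (hypothetical helper)."""
    data = np.stack([np.asarray(img, dtype=float) for img in epsf_images])
    if len(xy_positions) != data.shape[0]:
        raise ValueError("need exactly one (x, y) position per ePSF")
    # grid_xypos[k] is the detector (x, y) position of the ePSF data[k]
    meta = {"grid_xypos": [tuple(pos) for pos in xy_positions],
            "oversampling": oversampling}
    return data, meta


# Toy example: four 45 x 45 Gaussian "ePSFs" for a 2 x 2 grid on a 2048 x 2048 detector
yy, xx = np.mgrid[-22:23, -22:23]
positions = [(512, 512), (512, 1536), (1536, 512), (1536, 1536)]
images = [np.exp(-(xx**2 + yy**2) / (2 * (6.0 + k) ** 2)) for k in range(len(positions))]
data, meta = stack_epsf_grid(images, positions)
print(data.shape)  # (4, 45, 45)

# With photutils installed, the model could then be created with:
# from astropy.nddata import NDData
# from photutils.psf import GriddedPSFModel
# model = GriddedPSFModel(NDData(data, meta=meta))
```

Keeping the positions and images in two parallel lists, and checking their lengths, is what guarantees that the k-th tuple in grid_xypos describes the k-th ePSF in the stack.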

I. Counting PSF stars in each region of the grid#

def count_PSFstars_grid(grid_points=5, size=15, min_numpsf=40):
    # Note: this function reads the loop variables det, filt and i (image index)
    # from the calling cell.

    # the image size and the PSF-star positions do not depend on the grid size, so read them once
    with fits.open(dict_images[det][filt]['images'][i]) as image:
        nrows = image[1].data.shape[0]

    x = dict_psfs_epsf[det][filt]['table psf stars'][i + 1]['xcentroid']
    y = dict_psfs_epsf[det][filt]['table psf stars'][i + 1]['ycentroid']

    # only count stars whose size x size cutout lies entirely inside the cell
    half_size = (size - 1) / 2

    # grid sizes to test, from grid_points x grid_points down to 2 x 2
    num_grid_calc = np.arange(2, grid_points + 1, 1)[::-1]

    for num in num_grid_calc:
        print(f"Calculating the number of PSF stars in a {num} x {num} grid")
        print("")

        # half-width of a grid cell in pixels (assumes a square detector)
        points = int((nrows / num) / 2)
        x_center = np.arange(points, 2 * points * num, 2 * points)
        y_center = np.arange(points, 2 * points * num, 2 * points)
        centers = np.array(np.meshgrid(x_center, y_center)).T.reshape(-1, 2)

        for n, val in enumerate(centers):
            lim1 = val[0] - points + half_size
            lim2 = val[0] + points - half_size
            lim3 = val[1] - points + half_size
            lim4 = val[1] + points - half_size

            n_stars = np.count_nonzero((x > lim1) & (x < lim2) & (y > lim3) & (y < lim4))

            # n (not the image index i) identifies the grid cell
            if n_stars < min_numpsf:
                print(f"Center coordinates of grid cell {n + 1} are ({val[0]}, {val[1]}) --- Not enough PSF stars in the cell (number of PSF stars < {min_numpsf})")
            else:
                print(f"Center coordinates of grid cell {n + 1} are ({val[0]}, {val[1]}) --- Number of PSF stars: {n_stars}")

        print("")

for det in dets_short:
    for j, filt in enumerate(filts_short):
        for i in np.arange(0, len(dict_images[det][filt]['images']), 1):
            print(f"Analyzing image {i + 1} of filter {filt}, detector {det}")
            print("")
            count_PSFstars_grid(grid_points=5, size=15, min_numpsf=40)

Analyzing image 1 of filter F115W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 72

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 46

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 51

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 48

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 51

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 55

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 51

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 45

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 40

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 57

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 47

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 50

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 55

Center Coordinates of grid cell 14 are (1020, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 15 are (1020, 1836) --- Number of PSF stars: 43

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 50

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 44

Center Coordinates of grid cell 18 are (1428, 1020) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 47

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 50

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 45

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 50

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 54

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 44

Center Coordinate of grid cell 25 are (1836, 1836) --- Number of PSF stars: 40

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 122

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 73

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 70

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 90

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 84

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 75

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 84

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 81

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 77

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 87

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 59

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 75

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 75

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 64

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 76

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 67

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 186

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 129

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 149

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 132

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 151

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 132

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 132

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 118

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 126

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 359

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 331

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 307

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 286

Analyzing image 2 of filter F115W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 74

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 49

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 50

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 45

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 48

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 59

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 53

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 43

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 62

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 50

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 49

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 55

Center Coordinate of grid cell 14 are (1020, 1428) --- Number of PSF stars: 42

Center Coordinate of grid cell 15 are (1020, 1836) --- Number of PSF stars: 42

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 55

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 43

Center Coordinates of grid cell 18 are (1428, 1020) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 47

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 50

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 41

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 47

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 59

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 44

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 138

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 79

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 75

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 82

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 84

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 73

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 84

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 86

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 80

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 82

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 61

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 69

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 72

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 62

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 70

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 73

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 201

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 134

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 150

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 139

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 143

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 133

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 126

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 123

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 125

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 378

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 333

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 303

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 280

Analyzing image 3 of filter F115W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 73

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 50

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 47

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 45

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 46

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 57

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 48

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 47

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 58

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 46

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 52

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 56

Center Coordinate of grid cell 14 are (1020, 1428) --- Number of PSF stars: 44

Center Coordinates of grid cell 15 are (1020, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 48

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 41

Center Coordinates of grid cell 18 are (1428, 1020) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 51

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 53

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 43

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 49

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 52

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 46

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 128

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 77

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 69

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 81

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 81

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 73

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 87

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 79

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 75

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 82

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 65

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 74

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 71

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 65

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 75

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 69

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 191

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 127

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 147

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 135

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 140

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 133

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 131

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 120

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 131

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 366

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 320

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 297

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 292

Analyzing image 4 of filter F115W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 66

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 46

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 47

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 48

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 51

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 59

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 52

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 47

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 44

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 63

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 47

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 50

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 56

Center Coordinate of grid cell 14 are (1020, 1428) --- Number of PSF stars: 41

Center Coordinate of grid cell 15 are (1020, 1836) --- Number of PSF stars: 41

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 54

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 45

Center Coordinate of grid cell 18 are (1428, 1020) --- Number of PSF stars: 40

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 50

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 50

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 42

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 51

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 55

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 42

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 121

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 76

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 69

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 90

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 86

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 76

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 84

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 83

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 79

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 89

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 63

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 71

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 72

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 67

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 71

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 70

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 188

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 131

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 155

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 138

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 145

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 132

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 134

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 121

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 124

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 366

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 333

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 312

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 281

Analyzing image 1 of filter F200W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 81

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 49

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 45

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 46

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 65

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 46

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 43

Center Coordinates of grid cell 9 are (612, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 57

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 43

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 47

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 55

Center Coordinates of grid cell 14 are (1020, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinates of grid cell 15 are (1020, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 46

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 41

Center Coordinate of grid cell 18 are (1428, 1020) --- Number of PSF stars: 41

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 57

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 43

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 44

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 49

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 42

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 149

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 79

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 65

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 81

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 75

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 68

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 80

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 77

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 71

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 89

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 57

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 71

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 72

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 56

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 70

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 69

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 211

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 121

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 142

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 127

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 145

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 123

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 123

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 107

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 128

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 376

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 308

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 291

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 277

Analyzing image 2 of filter F200W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 78

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 48

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 45

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 40

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 47

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 77

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 45

Center Coordinates of grid cell 8 are (612, 1020) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 43

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 60

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 47

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 48

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 56

Center Coordinates of grid cell 14 are (1020, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinates of grid cell 15 are (1020, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 49

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 42

Center Coordinate of grid cell 18 are (1428, 1020) --- Number of PSF stars: 42

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 47

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 51

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 40

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 47

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 53

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 41

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)
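The cell centers printed above follow a regular pattern. The sketch below reproduces them under three assumptions:

* the detector image is 2048 × 2048 pixels;
* each center coordinate is an odd multiple of the integer half-cell width `2048 // (2 * n)`;
* cells are listed with the second coordinate varying fastest, as in the log.

`grid_centers` is a hypothetical helper written for illustration. It is not the notebook's own function.

```python
from itertools import product

DETECTOR_SIZE = 2048  # assumed size of the NRCB1 image in pixels


def grid_centers(n, size=DETECTOR_SIZE):
    """Return the (first, second) center coordinates of an n x n grid.

    Cells are ordered with the second coordinate varying fastest,
    matching the order of the printed log.
    """
    half = size // (2 * n)  # integer half-width of one cell
    axis = [half * (2 * i + 1) for i in range(n)]
    return list(product(axis, axis))


print(grid_centers(2))  # → [(512, 512), (512, 1536), (1536, 512), (1536, 1536)]
```

With `n = 5` the half-width is 204, which gives the axis values 204, 612, 1020, 1428 and 1836. With `n = 3` it is 341, which gives 341, 1023 and 1705. Both match the centers listed in the log.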

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 155

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 77

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 63

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 80

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 80

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 69

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 81

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 80

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 77

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 91

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 57

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 67

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 68

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 58

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 66

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 67

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 216

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 119

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 142

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 132

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 147

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 124

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 124

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 110

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 121

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 385

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 309

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 301

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 263
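The per-cell counts above amount to binning the PSF-star positions on an n × n grid and flagging cells below 40 stars. Per the log, a cell with exactly 40 stars is accepted.

The sketch below illustrates that idea with NumPy. It makes these assumptions:

* the cells are non-overlapping with exact width `2048 / n`;
* the positions are given in the same (first, second) order as the printed centers;
* `count_stars_per_cell` and `flag_sparse_cells` are hypothetical helpers.

The notebook's own selection likely differs. For example, the 4 × 4 counts for image 2 sum to 1236 stars, while the 2 × 2 counts sum to 1258. So treat this as an illustration only.

```python
import numpy as np

MIN_PSF_STARS = 40  # a cell with exactly 40 stars is accepted in the log


def count_stars_per_cell(c0, c1, n, size=2048):
    """Count sources per cell of an n x n grid (hypothetical helper).

    c0, c1: first and second pixel coordinates of the PSF stars, in the
    same order as the printed cell centers. Cells are numbered 1..n*n
    with the second coordinate varying fastest, as in the log.
    """
    c0 = np.asarray(c0, dtype=float)
    c1 = np.asarray(c1, dtype=float)
    # Map each coordinate to a cell index; clip so edge pixels stay in range.
    i0 = np.clip((c0 * n / size).astype(int), 0, n - 1)
    i1 = np.clip((c1 * n / size).astype(int), 0, n - 1)
    counts = np.zeros((n, n), dtype=int)
    np.add.at(counts, (i0, i1), 1)  # unbuffered add handles repeated indices
    return counts.ravel()  # row-major: second index varies fastest


def flag_sparse_cells(counts, threshold=MIN_PSF_STARS):
    """Return the 1-based numbers of cells with fewer than `threshold` stars."""
    return [i + 1 for i, c in enumerate(counts) if c < threshold]


# Usage with synthetic positions (not the notebook's catalog).
rng = np.random.default_rng(0)
c0, c1 = rng.uniform(0, 2048, (2, 2000))
counts = count_stars_per_cell(c0, c1, n=5)
print(counts.sum(), flag_sparse_cells(counts))
```

`np.add.at` is used instead of `counts[i0, i1] += 1`. The plain indexed assignment would count a cell only once when several stars fall into it.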

Analyzing image 3 of filter F200W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 76

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 52

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 41

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 45

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 46

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 69

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 43

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 44

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 45

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 55

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 46

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 51

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 54

Center Coordinates of grid cell 14 are (1020, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinates of grid cell 15 are (1020, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 46

Center Coordinates of grid cell 17 are (1428, 612) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 18 are (1428, 1020) --- Number of PSF stars: 42

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 55

Center Coordinate of grid cell 21 are (1836, 204) --- Number of PSF stars: 43

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 45

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 51

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 43

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 156

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 76

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 66

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 79

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 76

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 69

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 82

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 74

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 71

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 88

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 57

Center Coordinate of grid cell 12 are (1280, 1792) --- Number of PSF stars: 70

Center Coordinate of grid cell 13 are (1792, 256) --- Number of PSF stars: 70

Center Coordinate of grid cell 14 are (1792, 768) --- Number of PSF stars: 61

Center Coordinate of grid cell 15 are (1792, 1280) --- Number of PSF stars: 69

Center Coordinate of grid cell 16 are (1792, 1792) --- Number of PSF stars: 71

Calculating the number of PSF stars in a 3 x 3 grid

Center Coordinate of grid cell 1 are (341, 341) --- Number of PSF stars: 218

Center Coordinate of grid cell 2 are (341, 1023) --- Number of PSF stars: 115

Center Coordinate of grid cell 3 are (341, 1705) --- Number of PSF stars: 145

Center Coordinate of grid cell 4 are (1023, 341) --- Number of PSF stars: 131

Center Coordinate of grid cell 5 are (1023, 1023) --- Number of PSF stars: 146

Center Coordinate of grid cell 6 are (1023, 1705) --- Number of PSF stars: 123

Center Coordinate of grid cell 7 are (1705, 341) --- Number of PSF stars: 124

Center Coordinate of grid cell 8 are (1705, 1023) --- Number of PSF stars: 113

Center Coordinate of grid cell 9 are (1705, 1705) --- Number of PSF stars: 127

Calculating the number of PSF stars in a 2 x 2 grid

Center Coordinate of grid cell 1 are (512, 512) --- Number of PSF stars: 383

Center Coordinate of grid cell 2 are (512, 1536) --- Number of PSF stars: 305

Center Coordinate of grid cell 3 are (1536, 512) --- Number of PSF stars: 294

Center Coordinate of grid cell 4 are (1536, 1536) --- Number of PSF stars: 275
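In images 2 and 3, a few cells of the 5 × 5 grid fall below 40 PSF stars, while every cell of the 4 × 4, 3 × 3 and 2 × 2 grids passes.

One possible way to turn these counts into a grid choice is sketched below, using the image 3 numbers printed above. The selection rule and the helper names are assumptions for illustration, not necessarily what the notebook does. The rule keeps the finest grid whose cells all reach the threshold.

```python
MIN_PSF_STARS = 40

# Per-cell counts copied from the image 3 log above. None marks cells reported
# as "Not enough PSF stars"; their exact counts are not printed.
image3_counts = {
    5: [76, 52, 41, 45, 46, 69, 43, 44, 45, 55, 46, 51, 54, None, None,
        46, None, 42, 46, 55, 43, 45, 51, 43, None],
    4: [156, 76, 66, 79, 76, 69, 82, 74, 71, 88, 57, 70, 70, 61, 69, 71],
    3: [218, 115, 145, 131, 146, 123, 124, 113, 127],
    2: [383, 305, 294, 275],
}


def cell_ok(count, threshold=MIN_PSF_STARS):
    """A cell passes if its count is known and at least `threshold`."""
    return count is not None and count >= threshold


def finest_valid_grid(counts_by_n, threshold=MIN_PSF_STARS):
    """Largest n whose n x n cells all pass (hypothetical selection rule)."""
    valid = [n for n, counts in counts_by_n.items()
             if all(cell_ok(c, threshold) for c in counts)]
    return max(valid) if valid else None


print(finest_valid_grid(image3_counts))  # → 4
```

For image 3 the 5 × 5 grid fails in cells 14, 15, 17 and 25. The 4 × 4 grid passes with a minimum of 57 stars (cell 11), so this rule would select 4 × 4.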

Analyzing image 4 of filter F200W, detector NRCB1

Calculating the number of PSF stars in a 5 x 5 grid

Center Coordinate of grid cell 1 are (204, 204) --- Number of PSF stars: 73

Center Coordinate of grid cell 2 are (204, 612) --- Number of PSF stars: 47

Center Coordinate of grid cell 3 are (204, 1020) --- Number of PSF stars: 42

Center Coordinate of grid cell 4 are (204, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 5 are (204, 1836) --- Number of PSF stars: 49

Center Coordinate of grid cell 6 are (612, 204) --- Number of PSF stars: 73

Center Coordinate of grid cell 7 are (612, 612) --- Number of PSF stars: 45

Center Coordinate of grid cell 8 are (612, 1020) --- Number of PSF stars: 42

Center Coordinate of grid cell 9 are (612, 1428) --- Number of PSF stars: 41

Center Coordinate of grid cell 10 are (612, 1836) --- Number of PSF stars: 57

Center Coordinate of grid cell 11 are (1020, 204) --- Number of PSF stars: 45

Center Coordinate of grid cell 12 are (1020, 612) --- Number of PSF stars: 45

Center Coordinate of grid cell 13 are (1020, 1020) --- Number of PSF stars: 54

Center Coordinates of grid cell 14 are (1020, 1428) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinates of grid cell 15 are (1020, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 16 are (1428, 204) --- Number of PSF stars: 52

Center Coordinate of grid cell 17 are (1428, 612) --- Number of PSF stars: 41

Center Coordinate of grid cell 18 are (1428, 1020) --- Number of PSF stars: 45

Center Coordinate of grid cell 19 are (1428, 1428) --- Number of PSF stars: 46

Center Coordinate of grid cell 20 are (1428, 1836) --- Number of PSF stars: 51

Center Coordinates of grid cell 21 are (1836, 204) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Center Coordinate of grid cell 22 are (1836, 612) --- Number of PSF stars: 49

Center Coordinate of grid cell 23 are (1836, 1020) --- Number of PSF stars: 55

Center Coordinate of grid cell 24 are (1836, 1428) --- Number of PSF stars: 42

Center Coordinates of grid cell 25 are (1836, 1836) --- Not enough PSF stars in the cell (number of PSF stars < 40)

Calculating the number of PSF stars in a 4 x 4 grid

Center Coordinate of grid cell 1 are (256, 256) --- Number of PSF stars: 143

Center Coordinate of grid cell 2 are (256, 768) --- Number of PSF stars: 75

Center Coordinate of grid cell 3 are (256, 1280) --- Number of PSF stars: 64

Center Coordinate of grid cell 4 are (256, 1792) --- Number of PSF stars: 85

Center Coordinate of grid cell 5 are (768, 256) --- Number of PSF stars: 78

Center Coordinate of grid cell 6 are (768, 768) --- Number of PSF stars: 69

Center Coordinate of grid cell 7 are (768, 1280) --- Number of PSF stars: 80

Center Coordinate of grid cell 8 are (768, 1792) --- Number of PSF stars: 75

Center Coordinate of grid cell 9 are (1280, 256) --- Number of PSF stars: 76

Center Coordinate of grid cell 10 are (1280, 768) --- Number of PSF stars: 88

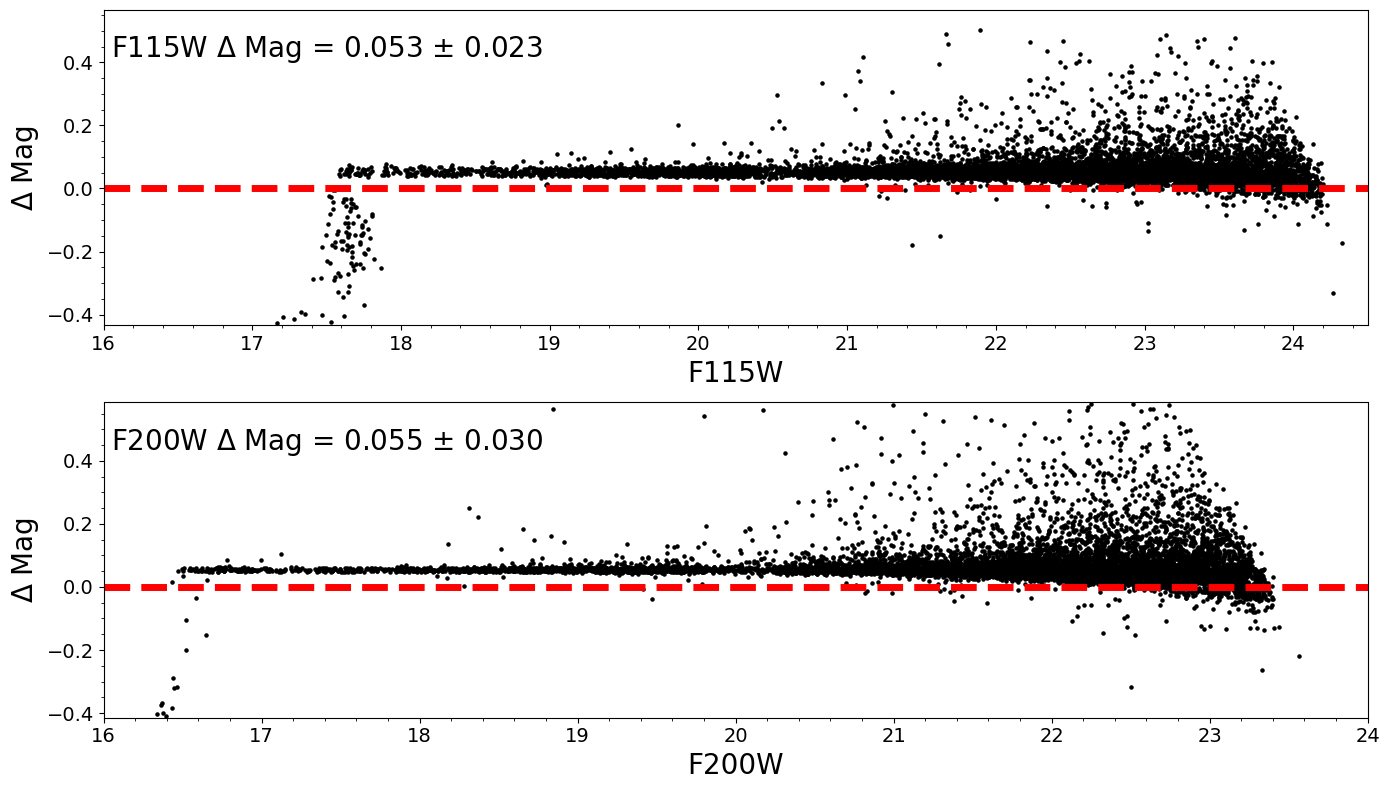

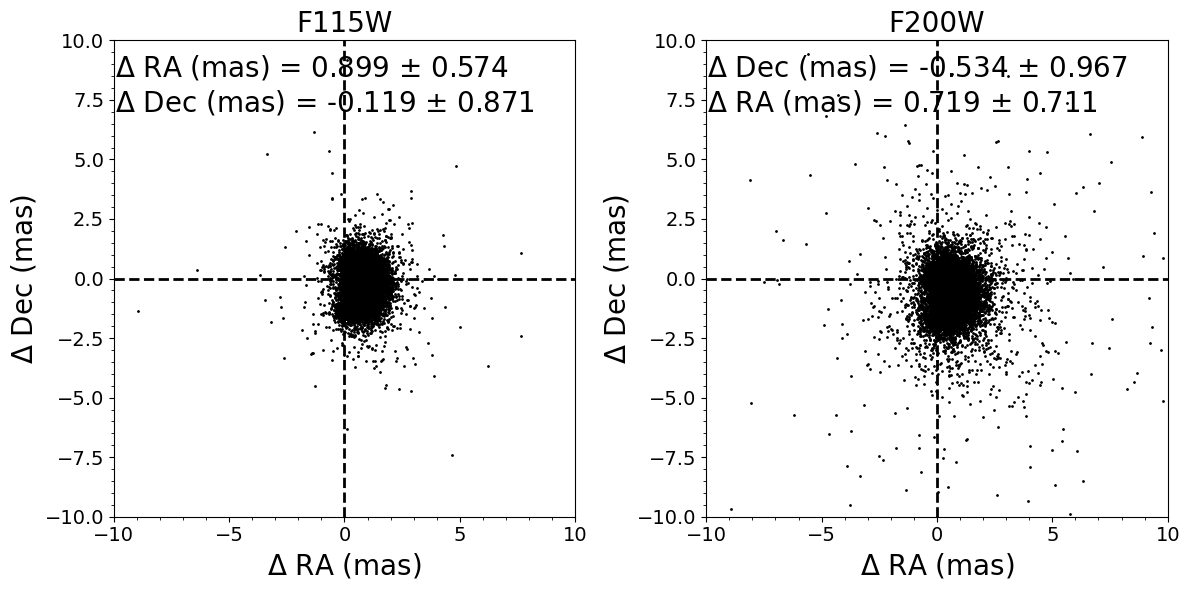

Center Coordinate of grid cell 11 are (1280, 1280) --- Number of PSF stars: 58