This notebook is now part of the JWST-pipeline-notebooks repository and will be removed from this repository by November 30, 2024. Please access the notebook at its new location.

Advanced: NIRISS Imaging Tutorial Notebook#

Table of Contents#

Introduction

Examining uncalibrated data products

Stage 1 Processing

Stage 2 Processing

Stage 3 Processing

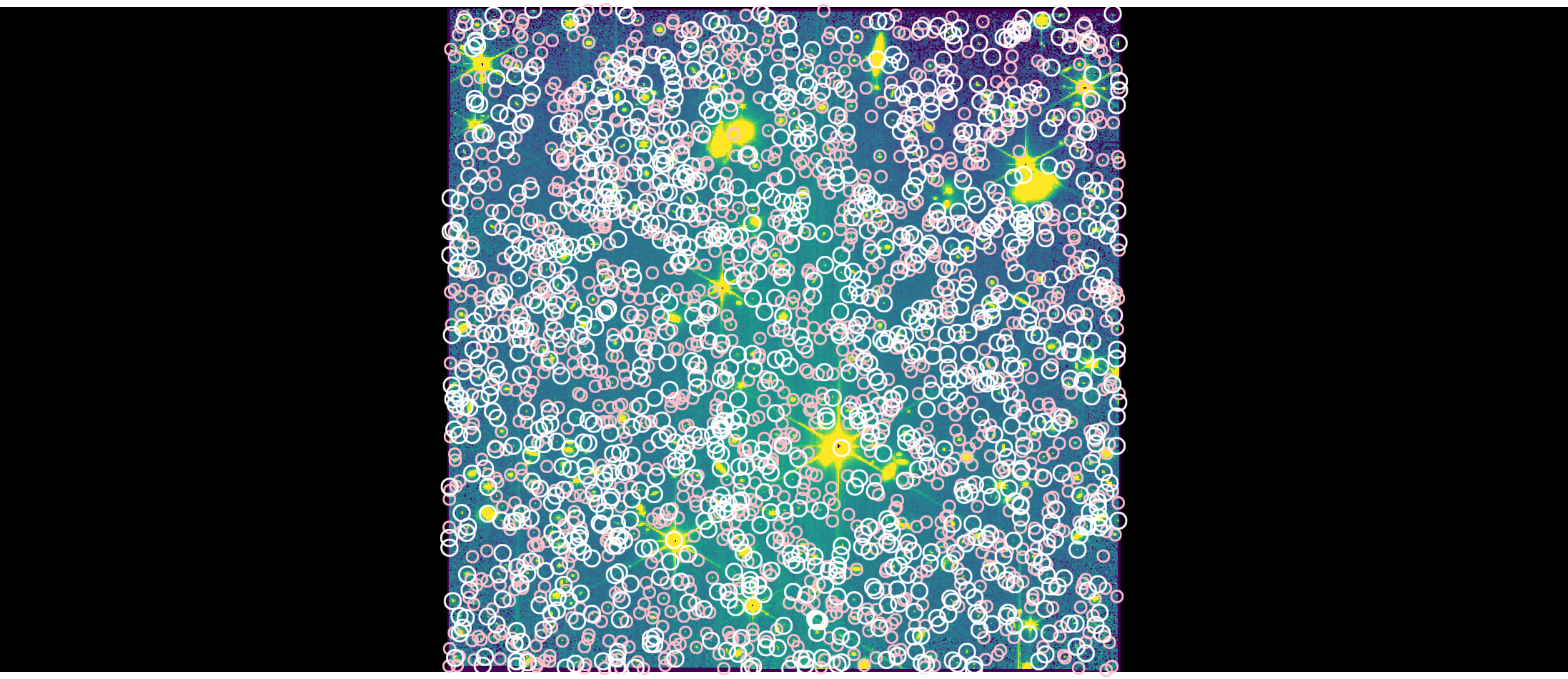

Visualize Detected Sources

Date published: January 24, 2024

1. Introduction#

In this notebook, we will process a NIRISS imaging dataset through the JWST calibration pipeline. The example dataset is from Program ID 1475 (PI: Boyer, CoI: Volk) which is a sky flat calibration program. NIRCam is used as the primary instrument with NIRISS as a coordinated parallel instrument. The NIRISS imaging dataset uses a 17-step dither pattern.

For illustrative purposes, we focus on data taken through the NIRISS F150W filter and start with uncalibrated data products. The files are named

jw01475006001_02201_000nn_nis_uncal.fits, where nn refers to the dither step number which ranges from 01 - 17. See the “File Naming Schemes” documentation to learn more about the file naming convention.

In this notebook, we assume all uncalibrated fits files are saved in a directory named 1475_f150w.

Install pipeline and dependencies#

To make sure that the pipeline version is compatabile with the steps discussed below and the required dependencies and packages are installed, you can create a fresh conda environment and install the provided requirements.txt file:

conda create -n niriss_imaging_pipeline python=3.11

conda activate niriss_imaging_pipeline

pip install -r requirements.txt

Imports#

import os

import glob

import zipfile

import numpy as np

import urllib.request

from IPython.display import Image

# For visualizing images

import jdaviz

from jdaviz import Imviz

# Astropy routines for visualizing detected sources:

from astropy.table import Table

from astropy.coordinates import SkyCoord

# Configure CRDS

os.environ["CRDS_PATH"] = 'crds_cache'

os.environ["CRDS_SERVER_URL"] = "https://jwst-crds.stsci.edu"

# for JWST calibration pipeline

import jwst

from jwst import datamodels

from jwst.pipeline import Detector1Pipeline, Image2Pipeline, Image3Pipeline

from jwst.associations import asn_from_list

from jwst.associations.lib.rules_level3_base import DMS_Level3_Base

# To confirm which version of the pipeline you're running:

print(f"jwst pipeline version: {jwst.__version__}")

print(f"jdaviz version: {jdaviz.__version__}")

jwst pipeline version: 1.16.1

jdaviz version: 4.0.0

Download uncalibrated data products#

# APT program ID number:

pid = '01475'

# Set up directory to download uncalibrated data files:

data_dir = '1475_f150w/'

# Create directory if it does not exist

if not os.path.isdir(data_dir):

os.mkdir(data_dir)

# Download uncalibrated data from Box into current directory:

boxlink = 'https://data.science.stsci.edu/redirect/JWST/jwst-data_analysis_tools/niriss_imaging/1475_f150w.zip'

boxfile = os.path.join(data_dir, '1475_f150w.zip')

urllib.request.urlretrieve(boxlink, boxfile)

zf = zipfile.ZipFile(boxfile, 'r')

zf.extractall()

2. Examining uncalibrated data products#

uncal_files = sorted(glob.glob(os.path.join(data_dir, '*_uncal.fits')))

Look at the first file to determine exposure parameters and practice using JWST datamodels#

# print file name

print(uncal_files[0])

# Open file as JWST datamodel

examine = datamodels.open(uncal_files[0])

# Print out exposure info

print("Instrument: " + examine.meta.instrument.name)

print("Filter: " + examine.meta.instrument.filter)

print("Pupil: " + examine.meta.instrument.pupil)

print("Number of integrations: {}".format(examine.meta.exposure.nints))

print("Number of groups: {}".format(examine.meta.exposure.ngroups))

print("Readout pattern: " + examine.meta.exposure.readpatt)

print("Dither position number: {}".format(examine.meta.dither.position_number))

print("\n")

1475_f150w/jw01475006001_02201_00001_nis_uncal.fits

Instrument: NIRISS

Filter: CLEAR

Pupil: F150W

Number of integrations: 1

Number of groups: 16

Readout pattern: NIS

Dither position number: 1

From the above, we confirm that the data file is for the NIRISS instrument using the F150W filter in the Pupil Wheel crossed with the CLEAR filter in the Filter Wheel. This observation uses the NIS readout pattern, 16 groups per integration, and 1 integration per exposure. This data file is the 1st dither position in this exposure sequence. For more information about how JWST exposures are defined by up-the-ramp sampling, see the Understanding Exposure Times JDox article.

This metadata will be the same for all exposures in this observation other than the dither position number.

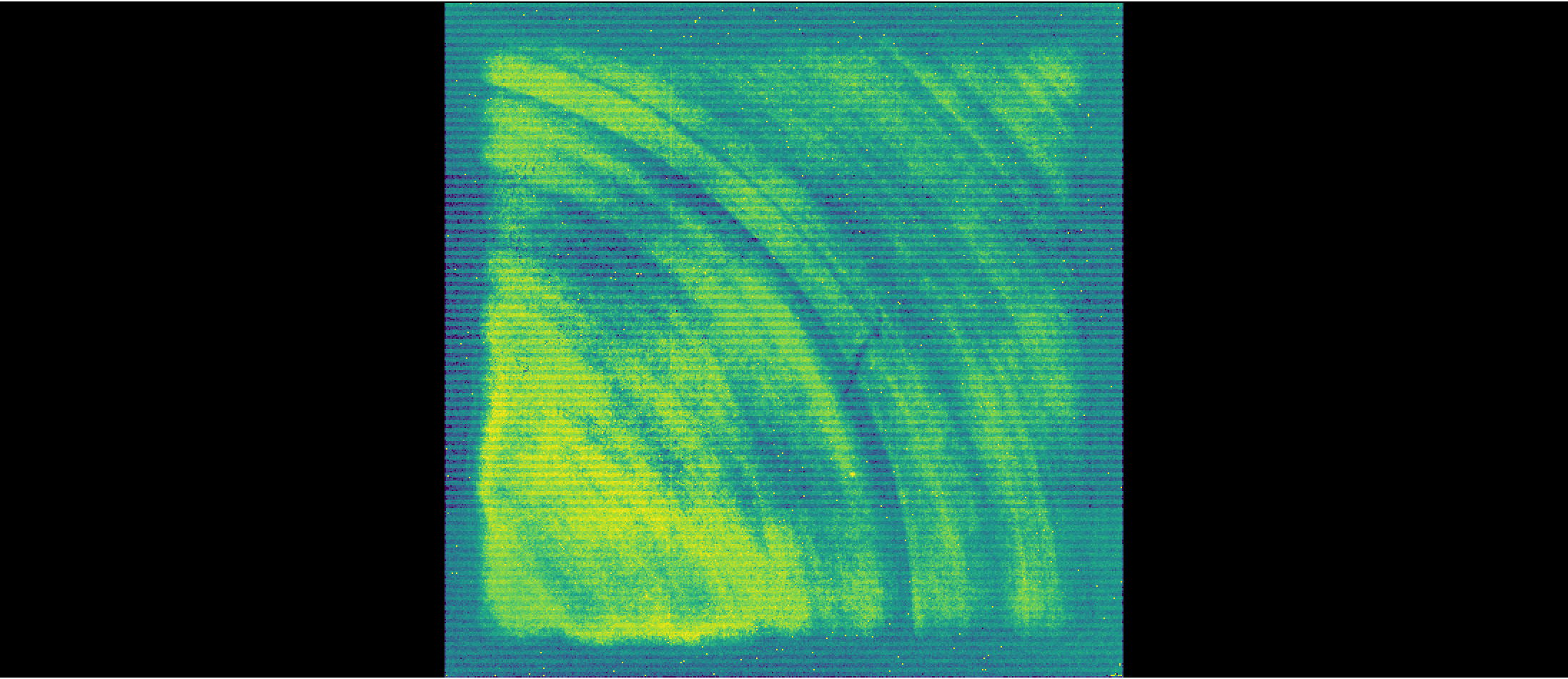

Display uncalibrated image#

We can visualize an uncalibrated dataset that will show detector artifacts that will be removed when calibrating the data through the DETECTOR1 stage of the pipeline. Uncalibrated data files thus are 4D: nintegrations x ngroups x nrows x ncols. Here, we are visualizing the full detector (i.e., all columns and rows) and the 1st group.

We are using the Imviz tool within the jdaviz package to visualize the NIRISS image.

# Create an Imviz instance and set up default viewer

imviz_uncal = Imviz()

viewer_uncal = imviz_uncal.default_viewer

# Read in the science array for our visualization dataset:

uncal_science = examine.data

# Load the dataset into Imviz

imviz_uncal.load_data(uncal_science[0, 0, :, :])

# Visualize the dataset:

imviz_uncal.show()

Adjust settings for the viewer:

plotopt = imviz_uncal.plugins['Plot Options']

plotopt.stretch_function = 'sqrt'

plotopt.image_colormap = 'Viridis'

plotopt.stretch_preset = '99.5%'

plotopt.zoom_radius = 1024

The viewer looks like this:

viewer_uncal.save('./uncal_science.png')

Image('./uncal_science.png')

3. Stage 1 Processing #

Run the datasets through the Detector1 stage of the pipeline to apply detector level calibrations and create a countrate data product where slopes are fitted to the integration ramps. These *_rate.fits products are 2D (nrows x ncols), averaged over all integrations. 3D countrate data products (*_rateints.fits) are also created (nintegrations x nrows x ncols) which have the fitted ramp slopes for each integration.

The pipeline documentation discusses how to run the pipeline in the Python Interface, including how to configure pipeline steps and override reference files. By returning the results of the pipeline to a variable, the pipeline returns a datamodel. Note that the pipeline.call() method is preferred over the pipeline.run() method.

By default, all steps in the Detector1 stage of the pipeline are run for NIRISS except: the ipc correction step and the gain_scale step. Note that while the persistence step is set to run by default, this step does not automatically correct the science data for persistence. The persistence step creates a *_trapsfilled.fits file which is a model that records the number of traps filled at each pixel at the end of an exposure. This file would be used as an input to the persistence step, via the input_trapsfilled argument, to correct a science exposure for persistence. Since persistence is not well calibrated for NIRISS, we do not perform a persistence correction and thus turn off this step to speed up calibration and to not create files that will not be used in the subsequent analysis. This step can be turned off when running the pipeline in Python by doing:

rate_result = Detector1Pipeline.call(uncal,

steps={'persistence': {'skip': True}})

The charge_migration step is particularly important for NIRISS images to mitigate apparent flux loss in resampled images due to the spilling of charge from a central pixel into its neighboring pixels (see Goudfrooij et al. 2023 for details). Charge migration occurs when the accumulated charge in a central pixel exceeds a certain signal limit, which is ~25,000 ADU. This step is turned on by default for NIRISS imaging, Wide Field Slitless Spectroscopy (WFSS), and Aperture Masking Interferometry (AMI) modes when using CRDS contexts of jwst_1159.pmap or later. Different signal limits for each filter are provided by the pars-chargemigrationstep parameter files. Users can specify a different signal limit by running this step with the signal_threshold flag and entering another signal limit in units of ADU.

As of CRDS context jwst_1155.pmap and later, the jump step of the DETECTOR1 stage of the pipeline will remove residuals associated with snowballs for NIRISS imaging, WFSS, and AMI modes. The default parameters for this correction, where expand_large_events set to True turns on the snowball halo removal algorithm, are specified in the pars-jumpstep parameter reference files. Users may wish to alter parameters to optimize removal of snowball residuals. Available parameters are discussed in the Detection and Flagging of Showers and Snowballs in JWST Technical Report (Regan 2023).

# Define directory to save output from detector1

det1_dir = 'detector1/'

# Create directory if it does not exist

if not os.path.isdir(det1_dir):

os.mkdir(det1_dir)

# Run Detector1 stage of pipeline, specifying:

# output directory to save *_rate.fits files

# save_results flag set to True so the rate files are saved

# skipping the persistence step

for uncal in uncal_files:

rate_result = Detector1Pipeline.call(uncal,

output_dir=det1_dir,

save_results=True,

steps={'persistence': {'skip': True}})

2024-12-10 20:13:44,678 - CRDS - INFO - Calibration SW Found: jwst 1.16.1 (/opt/hostedtoolcache/Python/3.11.10/x64/lib/python3.11/site-packages/jwst-1.16.1.dist-info)

2024-12-10 20:13:45,072 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_system_datalvl_0002.rmap 694 bytes (1 / 200 files) (0 / 717.9 K bytes)

2024-12-10 20:13:45,242 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_system_calver_0044.rmap 5.0 K bytes (2 / 200 files) (694 / 717.9 K bytes)

2024-12-10 20:13:45,369 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_system_0043.imap 385 bytes (3 / 200 files) (5.7 K / 717.9 K bytes)

2024-12-10 20:13:45,528 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_wavelengthrange_0024.rmap 1.4 K bytes (4 / 200 files) (6.1 K / 717.9 K bytes)

2024-12-10 20:13:45,724 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_wavecorr_0005.rmap 884 bytes (5 / 200 files) (7.5 K / 717.9 K bytes)

2024-12-10 20:13:45,877 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_superbias_0074.rmap 33.8 K bytes (6 / 200 files) (8.3 K / 717.9 K bytes)

2024-12-10 20:13:46,014 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_sflat_0026.rmap 20.6 K bytes (7 / 200 files) (42.1 K / 717.9 K bytes)

2024-12-10 20:13:46,150 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_saturation_0018.rmap 2.0 K bytes (8 / 200 files) (62.7 K / 717.9 K bytes)

2024-12-10 20:13:46,302 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_refpix_0015.rmap 1.6 K bytes (9 / 200 files) (64.7 K / 717.9 K bytes)

2024-12-10 20:13:46,504 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_readnoise_0025.rmap 2.6 K bytes (10 / 200 files) (66.3 K / 717.9 K bytes)

2024-12-10 20:13:46,642 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_photom_0013.rmap 958 bytes (11 / 200 files) (68.9 K / 717.9 K bytes)

2024-12-10 20:13:46,806 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pathloss_0007.rmap 1.1 K bytes (12 / 200 files) (69.8 K / 717.9 K bytes)

2024-12-10 20:13:46,922 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-whitelightstep_0001.rmap 777 bytes (13 / 200 files) (70.9 K / 717.9 K bytes)

2024-12-10 20:13:47,065 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-spec2pipeline_0013.rmap 2.1 K bytes (14 / 200 files) (71.7 K / 717.9 K bytes)

2024-12-10 20:13:47,252 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-resamplespecstep_0002.rmap 709 bytes (15 / 200 files) (73.8 K / 717.9 K bytes)

2024-12-10 20:13:47,367 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-outlierdetectionstep_0003.rmap 1.1 K bytes (16 / 200 files) (74.6 K / 717.9 K bytes)

2024-12-10 20:13:47,481 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-jumpstep_0005.rmap 810 bytes (17 / 200 files) (75.7 K / 717.9 K bytes)

2024-12-10 20:13:47,623 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-image2pipeline_0008.rmap 1.0 K bytes (18 / 200 files) (76.5 K / 717.9 K bytes)

2024-12-10 20:13:47,736 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-detector1pipeline_0003.rmap 1.1 K bytes (19 / 200 files) (77.5 K / 717.9 K bytes)

2024-12-10 20:13:47,851 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-darkpipeline_0003.rmap 872 bytes (20 / 200 files) (78.6 K / 717.9 K bytes)

2024-12-10 20:13:47,959 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_pars-darkcurrentstep_0001.rmap 622 bytes (21 / 200 files) (79.4 K / 717.9 K bytes)

2024-12-10 20:13:48,076 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_ote_0030.rmap 1.3 K bytes (22 / 200 files) (80.1 K / 717.9 K bytes)

2024-12-10 20:13:48,190 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_msaoper_0014.rmap 1.4 K bytes (23 / 200 files) (81.3 K / 717.9 K bytes)

2024-12-10 20:13:48,325 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_msa_0027.rmap 1.3 K bytes (24 / 200 files) (82.7 K / 717.9 K bytes)

2024-12-10 20:13:48,442 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_mask_0039.rmap 2.7 K bytes (25 / 200 files) (84.0 K / 717.9 K bytes)

2024-12-10 20:13:48,555 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_linearity_0017.rmap 1.6 K bytes (26 / 200 files) (86.7 K / 717.9 K bytes)

2024-12-10 20:13:48,669 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_ipc_0006.rmap 876 bytes (27 / 200 files) (88.2 K / 717.9 K bytes)

2024-12-10 20:13:48,783 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_ifuslicer_0017.rmap 1.5 K bytes (28 / 200 files) (89.1 K / 717.9 K bytes)

2024-12-10 20:13:48,893 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_ifupost_0019.rmap 1.5 K bytes (29 / 200 files) (90.7 K / 717.9 K bytes)

2024-12-10 20:13:49,008 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_ifufore_0017.rmap 1.5 K bytes (30 / 200 files) (92.2 K / 717.9 K bytes)

2024-12-10 20:13:49,125 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_gain_0023.rmap 1.8 K bytes (31 / 200 files) (93.7 K / 717.9 K bytes)

2024-12-10 20:13:49,244 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_fpa_0028.rmap 1.3 K bytes (32 / 200 files) (95.4 K / 717.9 K bytes)

2024-12-10 20:13:49,353 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_fore_0026.rmap 5.0 K bytes (33 / 200 files) (96.7 K / 717.9 K bytes)

2024-12-10 20:13:49,465 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_flat_0015.rmap 3.8 K bytes (34 / 200 files) (101.6 K / 717.9 K bytes)

2024-12-10 20:13:49,580 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_fflat_0026.rmap 7.2 K bytes (35 / 200 files) (105.5 K / 717.9 K bytes)

2024-12-10 20:13:49,688 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_extract1d_0018.rmap 2.3 K bytes (36 / 200 files) (112.7 K / 717.9 K bytes)

2024-12-10 20:13:49,799 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_disperser_0028.rmap 5.7 K bytes (37 / 200 files) (115.0 K / 717.9 K bytes)

2024-12-10 20:13:49,918 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_dflat_0007.rmap 1.1 K bytes (38 / 200 files) (120.7 K / 717.9 K bytes)

2024-12-10 20:13:50,029 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_dark_0069.rmap 32.6 K bytes (39 / 200 files) (121.8 K / 717.9 K bytes)

2024-12-10 20:13:50,198 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_cubepar_0015.rmap 966 bytes (40 / 200 files) (154.4 K / 717.9 K bytes)

2024-12-10 20:13:50,347 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_collimator_0026.rmap 1.3 K bytes (41 / 200 files) (155.3 K / 717.9 K bytes)

2024-12-10 20:13:50,481 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_camera_0026.rmap 1.3 K bytes (42 / 200 files) (156.7 K / 717.9 K bytes)

2024-12-10 20:13:50,661 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_barshadow_0007.rmap 1.8 K bytes (43 / 200 files) (158.0 K / 717.9 K bytes)

2024-12-10 20:13:50,824 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_area_0018.rmap 6.3 K bytes (44 / 200 files) (159.8 K / 717.9 K bytes)

2024-12-10 20:13:50,949 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_apcorr_0009.rmap 5.6 K bytes (45 / 200 files) (166.0 K / 717.9 K bytes)

2024-12-10 20:13:51,095 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nirspec_0383.imap 5.6 K bytes (46 / 200 files) (171.6 K / 717.9 K bytes)

2024-12-10 20:13:51,216 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_wfssbkg_0007.rmap 3.1 K bytes (47 / 200 files) (177.2 K / 717.9 K bytes)

2024-12-10 20:13:51,327 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_wavemap_0008.rmap 2.2 K bytes (48 / 200 files) (180.3 K / 717.9 K bytes)

2024-12-10 20:13:51,440 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_wavelengthrange_0006.rmap 862 bytes (49 / 200 files) (182.6 K / 717.9 K bytes)

2024-12-10 20:13:51,554 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_trappars_0004.rmap 753 bytes (50 / 200 files) (183.4 K / 717.9 K bytes)

2024-12-10 20:13:51,664 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_trapdensity_0005.rmap 705 bytes (51 / 200 files) (184.2 K / 717.9 K bytes)

2024-12-10 20:13:51,773 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_throughput_0005.rmap 1.3 K bytes (52 / 200 files) (184.9 K / 717.9 K bytes)

2024-12-10 20:13:51,893 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_superbias_0028.rmap 6.5 K bytes (53 / 200 files) (186.1 K / 717.9 K bytes)

2024-12-10 20:13:52,065 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_specwcs_0014.rmap 3.1 K bytes (54 / 200 files) (192.6 K / 717.9 K bytes)

2024-12-10 20:13:52,175 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_spectrace_0008.rmap 2.3 K bytes (55 / 200 files) (195.8 K / 717.9 K bytes)

2024-12-10 20:13:52,333 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_specprofile_0008.rmap 2.4 K bytes (56 / 200 files) (198.1 K / 717.9 K bytes)

2024-12-10 20:13:52,501 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_speckernel_0006.rmap 1.0 K bytes (57 / 200 files) (200.4 K / 717.9 K bytes)

2024-12-10 20:13:52,692 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_saturation_0015.rmap 829 bytes (58 / 200 files) (201.5 K / 717.9 K bytes)

2024-12-10 20:13:52,814 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_readnoise_0011.rmap 987 bytes (59 / 200 files) (202.3 K / 717.9 K bytes)

2024-12-10 20:13:52,937 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_photom_0035.rmap 1.3 K bytes (60 / 200 files) (203.3 K / 717.9 K bytes)

2024-12-10 20:13:53,114 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_persat_0007.rmap 674 bytes (61 / 200 files) (204.5 K / 717.9 K bytes)

2024-12-10 20:13:53,281 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pathloss_0003.rmap 758 bytes (62 / 200 files) (205.2 K / 717.9 K bytes)

2024-12-10 20:13:53,396 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pastasoss_0004.rmap 818 bytes (63 / 200 files) (206.0 K / 717.9 K bytes)

2024-12-10 20:13:53,519 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-undersamplecorrectionstep_0001.rmap 904 bytes (64 / 200 files) (206.8 K / 717.9 K bytes)

2024-12-10 20:13:53,639 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-tweakregstep_0012.rmap 3.1 K bytes (65 / 200 files) (207.7 K / 717.9 K bytes)

2024-12-10 20:13:53,753 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-spec2pipeline_0008.rmap 984 bytes (66 / 200 files) (210.8 K / 717.9 K bytes)

2024-12-10 20:13:53,866 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-sourcecatalogstep_0002.rmap 2.3 K bytes (67 / 200 files) (211.8 K / 717.9 K bytes)

2024-12-10 20:13:54,005 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-resamplestep_0002.rmap 687 bytes (68 / 200 files) (214.1 K / 717.9 K bytes)

2024-12-10 20:13:54,153 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-outlierdetectionstep_0004.rmap 2.7 K bytes (69 / 200 files) (214.8 K / 717.9 K bytes)

2024-12-10 20:13:54,324 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-jumpstep_0007.rmap 6.4 K bytes (70 / 200 files) (217.5 K / 717.9 K bytes)

2024-12-10 20:13:54,671 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-image2pipeline_0005.rmap 1.0 K bytes (71 / 200 files) (223.8 K / 717.9 K bytes)

2024-12-10 20:13:55,018 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-detector1pipeline_0002.rmap 1.0 K bytes (72 / 200 files) (224.9 K / 717.9 K bytes)

2024-12-10 20:13:55,270 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-darkpipeline_0002.rmap 868 bytes (73 / 200 files) (225.9 K / 717.9 K bytes)

2024-12-10 20:13:55,547 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-darkcurrentstep_0001.rmap 591 bytes (74 / 200 files) (226.8 K / 717.9 K bytes)

2024-12-10 20:13:55,739 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_pars-chargemigrationstep_0004.rmap 5.7 K bytes (75 / 200 files) (227.4 K / 717.9 K bytes)

2024-12-10 20:13:55,865 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_nrm_0002.rmap 663 bytes (76 / 200 files) (233.0 K / 717.9 K bytes)

2024-12-10 20:13:56,281 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_mask_0020.rmap 859 bytes (77 / 200 files) (233.7 K / 717.9 K bytes)

2024-12-10 20:13:56,639 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_linearity_0022.rmap 961 bytes (78 / 200 files) (234.5 K / 717.9 K bytes)

2024-12-10 20:13:56,854 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_ipc_0007.rmap 651 bytes (79 / 200 files) (235.5 K / 717.9 K bytes)

2024-12-10 20:13:57,201 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_gain_0011.rmap 797 bytes (80 / 200 files) (236.2 K / 717.9 K bytes)

2024-12-10 20:13:57,336 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_flat_0023.rmap 5.9 K bytes (81 / 200 files) (236.9 K / 717.9 K bytes)

2024-12-10 20:13:57,477 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_filteroffset_0010.rmap 853 bytes (82 / 200 files) (242.8 K / 717.9 K bytes)

2024-12-10 20:13:57,614 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_extract1d_0007.rmap 905 bytes (83 / 200 files) (243.7 K / 717.9 K bytes)

2024-12-10 20:13:57,765 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_drizpars_0004.rmap 519 bytes (84 / 200 files) (244.6 K / 717.9 K bytes)

2024-12-10 20:13:57,978 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_distortion_0025.rmap 3.4 K bytes (85 / 200 files) (245.1 K / 717.9 K bytes)

2024-12-10 20:13:58,212 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_dark_0031.rmap 6.8 K bytes (86 / 200 files) (248.5 K / 717.9 K bytes)

2024-12-10 20:13:58,333 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_area_0014.rmap 2.7 K bytes (87 / 200 files) (255.3 K / 717.9 K bytes)

2024-12-10 20:13:58,483 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_apcorr_0010.rmap 4.3 K bytes (88 / 200 files) (258.0 K / 717.9 K bytes)

2024-12-10 20:13:58,706 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_abvegaoffset_0004.rmap 1.4 K bytes (89 / 200 files) (262.3 K / 717.9 K bytes)

2024-12-10 20:13:58,945 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_niriss_0261.imap 5.7 K bytes (90 / 200 files) (263.6 K / 717.9 K bytes)

2024-12-10 20:13:59,126 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_wfssbkg_0004.rmap 7.2 K bytes (91 / 200 files) (269.3 K / 717.9 K bytes)

2024-12-10 20:13:59,295 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_wavelengthrange_0010.rmap 996 bytes (92 / 200 files) (276.5 K / 717.9 K bytes)

2024-12-10 20:13:59,421 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_tsophot_0003.rmap 896 bytes (93 / 200 files) (277.5 K / 717.9 K bytes)

2024-12-10 20:13:59,529 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_trappars_0003.rmap 1.6 K bytes (94 / 200 files) (278.4 K / 717.9 K bytes)

2024-12-10 20:13:59,678 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_trapdensity_0003.rmap 1.6 K bytes (95 / 200 files) (280.0 K / 717.9 K bytes)

2024-12-10 20:13:59,796 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_superbias_0018.rmap 16.2 K bytes (96 / 200 files) (281.7 K / 717.9 K bytes)

2024-12-10 20:14:00,013 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_specwcs_0022.rmap 7.1 K bytes (97 / 200 files) (297.8 K / 717.9 K bytes)

2024-12-10 20:14:00,161 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_saturation_0010.rmap 2.2 K bytes (98 / 200 files) (305.0 K / 717.9 K bytes)

2024-12-10 20:14:00,273 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_readnoise_0025.rmap 23.2 K bytes (99 / 200 files) (307.1 K / 717.9 K bytes)

2024-12-10 20:14:00,440 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_psfmask_0008.rmap 28.4 K bytes (100 / 200 files) (330.3 K / 717.9 K bytes)

2024-12-10 20:14:00,596 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_photom_0028.rmap 3.4 K bytes (101 / 200 files) (358.7 K / 717.9 K bytes)

2024-12-10 20:14:00,706 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_persat_0005.rmap 1.6 K bytes (102 / 200 files) (362.0 K / 717.9 K bytes)

2024-12-10 20:14:00,819 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-whitelightstep_0003.rmap 1.5 K bytes (103 / 200 files) (363.6 K / 717.9 K bytes)

2024-12-10 20:14:01,003 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-tweakregstep_0003.rmap 4.5 K bytes (104 / 200 files) (365.1 K / 717.9 K bytes)

2024-12-10 20:14:01,282 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-spec2pipeline_0008.rmap 984 bytes (105 / 200 files) (369.5 K / 717.9 K bytes)

2024-12-10 20:14:01,559 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-sourcecatalogstep_0002.rmap 4.6 K bytes (106 / 200 files) (370.5 K / 717.9 K bytes)

2024-12-10 20:14:01,769 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-resamplestep_0002.rmap 687 bytes (107 / 200 files) (375.2 K / 717.9 K bytes)

2024-12-10 20:14:01,958 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-outlierdetectionstep_0003.rmap 940 bytes (108 / 200 files) (375.8 K / 717.9 K bytes)

2024-12-10 20:14:02,159 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-jumpstep_0005.rmap 806 bytes (109 / 200 files) (376.8 K / 717.9 K bytes)

2024-12-10 20:14:02,293 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-image2pipeline_0003.rmap 1.0 K bytes (110 / 200 files) (377.6 K / 717.9 K bytes)

2024-12-10 20:14:02,533 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-detector1pipeline_0003.rmap 1.0 K bytes (111 / 200 files) (378.6 K / 717.9 K bytes)

2024-12-10 20:14:02,781 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-darkpipeline_0002.rmap 868 bytes (112 / 200 files) (379.6 K / 717.9 K bytes)

2024-12-10 20:14:02,977 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_pars-darkcurrentstep_0001.rmap 618 bytes (113 / 200 files) (380.5 K / 717.9 K bytes)

2024-12-10 20:14:03,106 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_mask_0011.rmap 3.5 K bytes (114 / 200 files) (381.1 K / 717.9 K bytes)

2024-12-10 20:14:03,225 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_linearity_0011.rmap 2.4 K bytes (115 / 200 files) (384.6 K / 717.9 K bytes)

2024-12-10 20:14:03,339 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_ipc_0003.rmap 2.0 K bytes (116 / 200 files) (387.0 K / 717.9 K bytes)

2024-12-10 20:14:03,446 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_gain_0016.rmap 2.1 K bytes (117 / 200 files) (389.0 K / 717.9 K bytes)

2024-12-10 20:14:03,569 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_flat_0027.rmap 51.7 K bytes (118 / 200 files) (391.1 K / 717.9 K bytes)

2024-12-10 20:14:03,736 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_filteroffset_0004.rmap 1.4 K bytes (119 / 200 files) (442.8 K / 717.9 K bytes)

2024-12-10 20:14:03,885 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_extract1d_0004.rmap 842 bytes (120 / 200 files) (444.2 K / 717.9 K bytes)

2024-12-10 20:14:04,079 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_drizpars_0001.rmap 519 bytes (121 / 200 files) (445.1 K / 717.9 K bytes)

2024-12-10 20:14:04,194 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_distortion_0033.rmap 53.4 K bytes (122 / 200 files) (445.6 K / 717.9 K bytes)

2024-12-10 20:14:04,359 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_dark_0045.rmap 26.3 K bytes (123 / 200 files) (499.0 K / 717.9 K bytes)

2024-12-10 20:14:04,506 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_area_0012.rmap 33.5 K bytes (124 / 200 files) (525.3 K / 717.9 K bytes)

2024-12-10 20:14:04,655 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_apcorr_0008.rmap 4.3 K bytes (125 / 200 files) (558.8 K / 717.9 K bytes)

2024-12-10 20:14:04,783 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_abvegaoffset_0003.rmap 1.3 K bytes (126 / 200 files) (563.1 K / 717.9 K bytes)

2024-12-10 20:14:04,947 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_nircam_0297.imap 5.5 K bytes (127 / 200 files) (564.4 K / 717.9 K bytes)

2024-12-10 20:14:05,108 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_wavelengthrange_0027.rmap 929 bytes (128 / 200 files) (569.8 K / 717.9 K bytes)

2024-12-10 20:14:05,218 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_tsophot_0004.rmap 882 bytes (129 / 200 files) (570.8 K / 717.9 K bytes)

2024-12-10 20:14:05,325 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_straymask_0009.rmap 987 bytes (130 / 200 files) (571.6 K / 717.9 K bytes)

2024-12-10 20:14:05,463 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_specwcs_0042.rmap 5.8 K bytes (131 / 200 files) (572.6 K / 717.9 K bytes)

2024-12-10 20:14:05,630 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_saturation_0015.rmap 1.2 K bytes (132 / 200 files) (578.4 K / 717.9 K bytes)

2024-12-10 20:14:05,738 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_rscd_0008.rmap 1.0 K bytes (133 / 200 files) (579.6 K / 717.9 K bytes)

2024-12-10 20:14:05,891 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_resol_0006.rmap 790 bytes (134 / 200 files) (580.6 K / 717.9 K bytes)

2024-12-10 20:14:06,012 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_reset_0026.rmap 3.9 K bytes (135 / 200 files) (581.4 K / 717.9 K bytes)

2024-12-10 20:14:06,124 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_regions_0033.rmap 5.2 K bytes (136 / 200 files) (585.3 K / 717.9 K bytes)

2024-12-10 20:14:06,245 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_readnoise_0023.rmap 1.6 K bytes (137 / 200 files) (590.5 K / 717.9 K bytes)

2024-12-10 20:14:06,384 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_psfmask_0009.rmap 2.1 K bytes (138 / 200 files) (592.1 K / 717.9 K bytes)

2024-12-10 20:14:06,524 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_photom_0056.rmap 3.7 K bytes (139 / 200 files) (594.2 K / 717.9 K bytes)

2024-12-10 20:14:06,652 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pathloss_0005.rmap 866 bytes (140 / 200 files) (598.0 K / 717.9 K bytes)

2024-12-10 20:14:06,768 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-whitelightstep_0003.rmap 912 bytes (141 / 200 files) (598.9 K / 717.9 K bytes)

2024-12-10 20:14:06,882 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-tweakregstep_0003.rmap 1.8 K bytes (142 / 200 files) (599.8 K / 717.9 K bytes)

2024-12-10 20:14:07,005 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-spec3pipeline_0008.rmap 816 bytes (143 / 200 files) (601.6 K / 717.9 K bytes)

2024-12-10 20:14:07,112 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-spec2pipeline_0012.rmap 1.3 K bytes (144 / 200 files) (602.4 K / 717.9 K bytes)

2024-12-10 20:14:07,225 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-sourcecatalogstep_0003.rmap 1.9 K bytes (145 / 200 files) (603.7 K / 717.9 K bytes)

2024-12-10 20:14:07,340 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-resamplestep_0002.rmap 677 bytes (146 / 200 files) (605.6 K / 717.9 K bytes)

2024-12-10 20:14:07,454 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-resamplespecstep_0002.rmap 706 bytes (147 / 200 files) (606.3 K / 717.9 K bytes)

2024-12-10 20:14:07,562 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-outlierdetectionstep_0017.rmap 3.4 K bytes (148 / 200 files) (607.0 K / 717.9 K bytes)

2024-12-10 20:14:07,671 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-jumpstep_0009.rmap 1.4 K bytes (149 / 200 files) (610.4 K / 717.9 K bytes)

2024-12-10 20:14:07,779 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-image2pipeline_0007.rmap 983 bytes (150 / 200 files) (611.8 K / 717.9 K bytes)

2024-12-10 20:14:07,898 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-extract1dstep_0002.rmap 728 bytes (151 / 200 files) (612.8 K / 717.9 K bytes)

2024-12-10 20:14:08,008 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-emicorrstep_0002.rmap 796 bytes (152 / 200 files) (613.6 K / 717.9 K bytes)

2024-12-10 20:14:08,120 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-detector1pipeline_0009.rmap 1.4 K bytes (153 / 200 files) (614.4 K / 717.9 K bytes)

2024-12-10 20:14:08,241 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-darkpipeline_0002.rmap 860 bytes (154 / 200 files) (615.8 K / 717.9 K bytes)

2024-12-10 20:14:08,349 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_pars-darkcurrentstep_0002.rmap 683 bytes (155 / 200 files) (616.6 K / 717.9 K bytes)

2024-12-10 20:14:08,462 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_mrsxartcorr_0002.rmap 2.2 K bytes (156 / 200 files) (617.3 K / 717.9 K bytes)

2024-12-10 20:14:08,579 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_mrsptcorr_0005.rmap 2.0 K bytes (157 / 200 files) (619.5 K / 717.9 K bytes)

2024-12-10 20:14:08,685 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_mask_0023.rmap 3.5 K bytes (158 / 200 files) (621.4 K / 717.9 K bytes)

2024-12-10 20:14:08,789 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_linearity_0018.rmap 2.8 K bytes (159 / 200 files) (624.9 K / 717.9 K bytes)

2024-12-10 20:14:08,905 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_ipc_0008.rmap 700 bytes (160 / 200 files) (627.7 K / 717.9 K bytes)

2024-12-10 20:14:09,020 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_gain_0013.rmap 3.9 K bytes (161 / 200 files) (628.4 K / 717.9 K bytes)

2024-12-10 20:14:09,127 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_fringefreq_0003.rmap 1.4 K bytes (162 / 200 files) (632.4 K / 717.9 K bytes)

2024-12-10 20:14:09,242 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_fringe_0019.rmap 3.9 K bytes (163 / 200 files) (633.8 K / 717.9 K bytes)

2024-12-10 20:14:09,350 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_flat_0065.rmap 15.5 K bytes (164 / 200 files) (637.7 K / 717.9 K bytes)

2024-12-10 20:14:09,481 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_filteroffset_0025.rmap 2.5 K bytes (165 / 200 files) (653.2 K / 717.9 K bytes)

2024-12-10 20:14:09,585 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_extract1d_0020.rmap 1.4 K bytes (166 / 200 files) (655.7 K / 717.9 K bytes)

2024-12-10 20:14:09,698 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_emicorr_0003.rmap 663 bytes (167 / 200 files) (657.1 K / 717.9 K bytes)

2024-12-10 20:14:09,804 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_drizpars_0002.rmap 511 bytes (168 / 200 files) (657.7 K / 717.9 K bytes)

2024-12-10 20:14:09,917 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_distortion_0040.rmap 4.9 K bytes (169 / 200 files) (658.2 K / 717.9 K bytes)

2024-12-10 20:14:10,030 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_dark_0036.rmap 4.4 K bytes (170 / 200 files) (663.1 K / 717.9 K bytes)

2024-12-10 20:14:10,136 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_cubepar_0017.rmap 800 bytes (171 / 200 files) (667.5 K / 717.9 K bytes)

2024-12-10 20:14:10,246 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_area_0015.rmap 866 bytes (172 / 200 files) (668.3 K / 717.9 K bytes)

2024-12-10 20:14:10,352 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_apcorr_0019.rmap 5.0 K bytes (173 / 200 files) (669.2 K / 717.9 K bytes)

2024-12-10 20:14:10,474 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_abvegaoffset_0002.rmap 1.3 K bytes (174 / 200 files) (674.1 K / 717.9 K bytes)

2024-12-10 20:14:10,583 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_miri_0416.imap 5.7 K bytes (175 / 200 files) (675.4 K / 717.9 K bytes)

2024-12-10 20:14:10,699 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_trappars_0004.rmap 903 bytes (176 / 200 files) (681.1 K / 717.9 K bytes)

2024-12-10 20:14:10,808 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_trapdensity_0006.rmap 930 bytes (177 / 200 files) (682.0 K / 717.9 K bytes)

2024-12-10 20:14:10,931 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_superbias_0017.rmap 3.8 K bytes (178 / 200 files) (682.9 K / 717.9 K bytes)

2024-12-10 20:14:11,043 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_saturation_0009.rmap 779 bytes (179 / 200 files) (686.7 K / 717.9 K bytes)

2024-12-10 20:14:11,151 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_readnoise_0011.rmap 1.3 K bytes (180 / 200 files) (687.5 K / 717.9 K bytes)

2024-12-10 20:14:11,256 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_photom_0014.rmap 1.1 K bytes (181 / 200 files) (688.8 K / 717.9 K bytes)

2024-12-10 20:14:11,363 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_persat_0006.rmap 884 bytes (182 / 200 files) (689.9 K / 717.9 K bytes)

2024-12-10 20:14:11,467 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-tweakregstep_0002.rmap 850 bytes (183 / 200 files) (690.8 K / 717.9 K bytes)

2024-12-10 20:14:11,572 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-sourcecatalogstep_0001.rmap 636 bytes (184 / 200 files) (691.6 K / 717.9 K bytes)

2024-12-10 20:14:11,701 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-outlierdetectionstep_0001.rmap 654 bytes (185 / 200 files) (692.2 K / 717.9 K bytes)

2024-12-10 20:14:11,809 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-image2pipeline_0005.rmap 974 bytes (186 / 200 files) (692.9 K / 717.9 K bytes)

2024-12-10 20:14:11,916 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-detector1pipeline_0002.rmap 1.0 K bytes (187 / 200 files) (693.9 K / 717.9 K bytes)

2024-12-10 20:14:12,026 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_pars-darkpipeline_0002.rmap 856 bytes (188 / 200 files) (694.9 K / 717.9 K bytes)

2024-12-10 20:14:12,135 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_mask_0023.rmap 1.1 K bytes (189 / 200 files) (695.8 K / 717.9 K bytes)

2024-12-10 20:14:12,244 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_linearity_0015.rmap 925 bytes (190 / 200 files) (696.8 K / 717.9 K bytes)

2024-12-10 20:14:12,349 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_ipc_0003.rmap 614 bytes (191 / 200 files) (697.7 K / 717.9 K bytes)

2024-12-10 20:14:12,456 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_gain_0010.rmap 890 bytes (192 / 200 files) (698.4 K / 717.9 K bytes)

2024-12-10 20:14:12,569 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_flat_0009.rmap 1.1 K bytes (193 / 200 files) (699.2 K / 717.9 K bytes)

2024-12-10 20:14:12,681 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_distortion_0011.rmap 1.2 K bytes (194 / 200 files) (700.4 K / 717.9 K bytes)

2024-12-10 20:14:12,789 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_dark_0017.rmap 4.3 K bytes (195 / 200 files) (701.6 K / 717.9 K bytes)

2024-12-10 20:14:12,900 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_area_0010.rmap 1.2 K bytes (196 / 200 files) (705.9 K / 717.9 K bytes)

2024-12-10 20:14:13,017 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_apcorr_0004.rmap 4.0 K bytes (197 / 200 files) (707.1 K / 717.9 K bytes)

2024-12-10 20:14:13,124 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_abvegaoffset_0002.rmap 1.3 K bytes (198 / 200 files) (711.0 K / 717.9 K bytes)

2024-12-10 20:14:13,234 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_fgs_0117.imap 5.0 K bytes (199 / 200 files) (712.3 K / 717.9 K bytes)

2024-12-10 20:14:13,342 - CRDS - INFO - Fetching crds_cache/mappings/jwst/jwst_1303.pmap 580 bytes (200 / 200 files) (717.3 K / 717.9 K bytes)

2024-12-10 20:14:13,597 - CRDS - ERROR - Error determining best reference for 'pars-darkcurrentstep' = No match found.

2024-12-10 20:14:13,601 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0018.asdf 1.1 K bytes (1 / 1 files) (0 / 1.1 K bytes)

2024-12-10 20:14:13,714 - stpipe - INFO - PARS-CHARGEMIGRATIONSTEP parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0018.asdf

2024-12-10 20:14:13,725 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_pars-jumpstep_0087.asdf 1.6 K bytes (1 / 1 files) (0 / 1.6 K bytes)

2024-12-10 20:14:13,871 - stpipe - INFO - PARS-JUMPSTEP parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-jumpstep_0087.asdf

2024-12-10 20:14:13,881 - CRDS - ERROR - Error determining best reference for 'pars-cleanflickernoisestep' = Unknown reference type 'pars-cleanflickernoisestep'

2024-12-10 20:14:13,884 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_pars-detector1pipeline_0001.asdf 1.1 K bytes (1 / 1 files) (0 / 1.1 K bytes)

2024-12-10 20:14:13,997 - stpipe - INFO - PARS-DETECTOR1PIPELINE parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-detector1pipeline_0001.asdf

2024-12-10 20:14:14,015 - stpipe.Detector1Pipeline - INFO - Detector1Pipeline instance created.

2024-12-10 20:14:14,016 - stpipe.Detector1Pipeline.group_scale - INFO - GroupScaleStep instance created.

2024-12-10 20:14:14,017 - stpipe.Detector1Pipeline.dq_init - INFO - DQInitStep instance created.

2024-12-10 20:14:14,018 - stpipe.Detector1Pipeline.emicorr - INFO - EmiCorrStep instance created.

2024-12-10 20:14:14,019 - stpipe.Detector1Pipeline.saturation - INFO - SaturationStep instance created.

2024-12-10 20:14:14,020 - stpipe.Detector1Pipeline.ipc - INFO - IPCStep instance created.

2024-12-10 20:14:14,021 - stpipe.Detector1Pipeline.superbias - INFO - SuperBiasStep instance created.

2024-12-10 20:14:14,022 - stpipe.Detector1Pipeline.refpix - INFO - RefPixStep instance created.

2024-12-10 20:14:14,023 - stpipe.Detector1Pipeline.rscd - INFO - RscdStep instance created.

2024-12-10 20:14:14,024 - stpipe.Detector1Pipeline.firstframe - INFO - FirstFrameStep instance created.

2024-12-10 20:14:14,025 - stpipe.Detector1Pipeline.lastframe - INFO - LastFrameStep instance created.

2024-12-10 20:14:14,026 - stpipe.Detector1Pipeline.linearity - INFO - LinearityStep instance created.

2024-12-10 20:14:14,027 - stpipe.Detector1Pipeline.dark_current - INFO - DarkCurrentStep instance created.

2024-12-10 20:14:14,028 - stpipe.Detector1Pipeline.reset - INFO - ResetStep instance created.

2024-12-10 20:14:14,030 - stpipe.Detector1Pipeline.persistence - INFO - PersistenceStep instance created.

2024-12-10 20:14:14,031 - stpipe.Detector1Pipeline.charge_migration - INFO - ChargeMigrationStep instance created.

2024-12-10 20:14:14,033 - stpipe.Detector1Pipeline.jump - INFO - JumpStep instance created.

2024-12-10 20:14:14,034 - stpipe.Detector1Pipeline.clean_flicker_noise - INFO - CleanFlickerNoiseStep instance created.

2024-12-10 20:14:14,035 - stpipe.Detector1Pipeline.ramp_fit - INFO - RampFitStep instance created.

2024-12-10 20:14:14,037 - stpipe.Detector1Pipeline.gain_scale - INFO - GainScaleStep instance created.

2024-12-10 20:14:14,200 - stpipe.Detector1Pipeline - INFO - Step Detector1Pipeline running with args ('1475_f150w/jw01475006001_02201_00001_nis_uncal.fits',).

2024-12-10 20:14:14,222 - stpipe.Detector1Pipeline - INFO - Step Detector1Pipeline parameters are:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: detector1/

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: True

skip: False

suffix: None

search_output_file: True

input_dir: ''

save_calibrated_ramp: False

steps:

group_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dq_init:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

emicorr:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

save_intermediate_results: False

user_supplied_reffile: None

nints_to_phase: None

nbins: None

scale_reference: True

onthefly_corr_freq: None

use_n_cycles: 3

saturation:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

n_pix_grow_sat: 1

use_readpatt: True

ipc:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

superbias:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

refpix:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

odd_even_columns: True

use_side_ref_pixels: True

side_smoothing_length: 11

side_gain: 1.0

odd_even_rows: True

ovr_corr_mitigation_ftr: 3.0

preserve_irs2_refpix: False

irs2_mean_subtraction: False

rscd:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

type: baseline

firstframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

lastframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

linearity:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_current:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_output: None

average_dark_current: None

reset:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

persistence:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

input_trapsfilled: ''

flag_pers_cutoff: 40.0

save_persistence: False

save_trapsfilled: True

modify_input: False

charge_migration:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

signal_threshold: 21864.0

jump:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

rejection_threshold: 8.0

three_group_rejection_threshold: 6.0

four_group_rejection_threshold: 5.0

maximum_cores: '1'

flag_4_neighbors: False

max_jump_to_flag_neighbors: 200.0

min_jump_to_flag_neighbors: 10.0

after_jump_flag_dn1: 1000

after_jump_flag_time1: 90

after_jump_flag_dn2: 0

after_jump_flag_time2: 0

expand_large_events: True

min_sat_area: 5

min_jump_area: 15.0

expand_factor: 1.75

use_ellipses: False

sat_required_snowball: True

min_sat_radius_extend: 5.0

sat_expand: 0

edge_size: 20

mask_snowball_core_next_int: True

snowball_time_masked_next_int: 4000

find_showers: False

extend_snr_threshold: 1.2

extend_min_area: 90

extend_inner_radius: 1.0

extend_outer_radius: 2.6

extend_ellipse_expand_ratio: 1.1

time_masked_after_shower: 15.0

min_diffs_single_pass: 10

max_extended_radius: 100

minimum_groups: 3

minimum_sigclip_groups: 100

only_use_ints: True

clean_flicker_noise:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

fit_method: median

fit_by_channel: False

background_method: median

background_box_size: None

mask_science_regions: False

n_sigma: 2.0

fit_histogram: False

single_mask: True

user_mask: None

save_mask: False

save_background: False

save_noise: False

ramp_fit:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

algorithm: OLS_C

int_name: ''

save_opt: False

opt_name: ''

suppress_one_group: True

maximum_cores: '1'

gain_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

2024-12-10 20:14:14,381 - stpipe.Detector1Pipeline - INFO - Prefetching reference files for dataset: 'jw01475006001_02201_00001_nis_uncal.fits' reftypes = ['dark', 'gain', 'linearity', 'mask', 'readnoise', 'refpix', 'reset', 'rscd', 'saturation', 'superbias']

2024-12-10 20:14:14,384 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_dark_0169.fits 1.0 G bytes (1 / 7 files) (0 / 1.4 G bytes)

2024-12-10 20:14:29,570 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_gain_0006.fits 16.8 M bytes (2 / 7 files) (1.0 G / 1.4 G bytes)

2024-12-10 20:14:30,066 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_linearity_0017.fits 205.5 M bytes (3 / 7 files) (1.0 G / 1.4 G bytes)

2024-12-10 20:14:33,583 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_mask_0017.fits 16.8 M bytes (4 / 7 files) (1.2 G / 1.4 G bytes)

2024-12-10 20:14:34,192 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_readnoise_0005.fits 16.8 M bytes (5 / 7 files) (1.3 G / 1.4 G bytes)

2024-12-10 20:14:34,760 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_saturation_0015.fits 33.6 M bytes (6 / 7 files) (1.3 G / 1.4 G bytes)

2024-12-10 20:14:36,403 - CRDS - INFO - Fetching crds_cache/references/jwst/niriss/jwst_niriss_superbias_0183.fits 50.4 M bytes (7 / 7 files) (1.3 G / 1.4 G bytes)

2024-12-10 20:14:41,036 - stpipe.Detector1Pipeline - INFO - Prefetch for DARK reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_dark_0169.fits'.

2024-12-10 20:14:41,037 - stpipe.Detector1Pipeline - INFO - Prefetch for GAIN reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_gain_0006.fits'.

2024-12-10 20:14:41,038 - stpipe.Detector1Pipeline - INFO - Prefetch for LINEARITY reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_linearity_0017.fits'.

2024-12-10 20:14:41,038 - stpipe.Detector1Pipeline - INFO - Prefetch for MASK reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_mask_0017.fits'.

2024-12-10 20:14:41,039 - stpipe.Detector1Pipeline - INFO - Prefetch for READNOISE reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_readnoise_0005.fits'.

2024-12-10 20:14:41,040 - stpipe.Detector1Pipeline - INFO - Prefetch for REFPIX reference file is 'N/A'.

2024-12-10 20:14:41,040 - stpipe.Detector1Pipeline - INFO - Prefetch for RESET reference file is 'N/A'.

2024-12-10 20:14:41,041 - stpipe.Detector1Pipeline - INFO - Prefetch for RSCD reference file is 'N/A'.

2024-12-10 20:14:41,041 - stpipe.Detector1Pipeline - INFO - Prefetch for SATURATION reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_saturation_0015.fits'.

2024-12-10 20:14:41,042 - stpipe.Detector1Pipeline - INFO - Prefetch for SUPERBIAS reference file is 'crds_cache/references/jwst/niriss/jwst_niriss_superbias_0183.fits'.

2024-12-10 20:14:41,043 - stpipe.Detector1Pipeline - INFO - Starting calwebb_detector1 ...

2024-12-10 20:14:41,422 - stpipe.Detector1Pipeline.group_scale - INFO - Step group_scale running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:41,432 - stpipe.Detector1Pipeline.group_scale - INFO - NFRAMES and FRMDIVSR are equal; correction not needed

2024-12-10 20:14:41,433 - stpipe.Detector1Pipeline.group_scale - INFO - Step will be skipped

2024-12-10 20:14:41,434 - stpipe.Detector1Pipeline.group_scale - INFO - Step group_scale done

2024-12-10 20:14:41,574 - stpipe.Detector1Pipeline.dq_init - INFO - Step dq_init running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:41,592 - stpipe.Detector1Pipeline.dq_init - INFO - Using MASK reference file crds_cache/references/jwst/niriss/jwst_niriss_mask_0017.fits

2024-12-10 20:14:41,852 - CRDS - INFO - Calibration SW Found: jwst 1.16.1 (/opt/hostedtoolcache/Python/3.11.10/x64/lib/python3.11/site-packages/jwst-1.16.1.dist-info)

2024-12-10 20:14:41,974 - stpipe.Detector1Pipeline.dq_init - INFO - Step dq_init done

2024-12-10 20:14:42,138 - stpipe.Detector1Pipeline.saturation - INFO - Step saturation running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:42,157 - stpipe.Detector1Pipeline.saturation - INFO - Using SATURATION reference file crds_cache/references/jwst/niriss/jwst_niriss_saturation_0015.fits

2024-12-10 20:14:42,187 - stpipe.Detector1Pipeline.saturation - WARNING - Keyword LOWILLUM does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:42,188 - stpipe.Detector1Pipeline.saturation - WARNING - Keyword LOWRESP does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:42,193 - stpipe.Detector1Pipeline.saturation - WARNING - Keyword UNCERTAIN does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:42,281 - stpipe.Detector1Pipeline.saturation - INFO - Using read_pattern with nframes 4

2024-12-10 20:14:45,790 - stpipe.Detector1Pipeline.saturation - INFO - Detected 4239 saturated pixels

2024-12-10 20:14:45,821 - stpipe.Detector1Pipeline.saturation - INFO - Detected 1 A/D floor pixels

2024-12-10 20:14:45,827 - stpipe.Detector1Pipeline.saturation - INFO - Step saturation done

2024-12-10 20:14:45,982 - stpipe.Detector1Pipeline.ipc - INFO - Step ipc running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:45,983 - stpipe.Detector1Pipeline.ipc - INFO - Step skipped.

2024-12-10 20:14:46,119 - stpipe.Detector1Pipeline.superbias - INFO - Step superbias running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:46,138 - stpipe.Detector1Pipeline.superbias - INFO - Using SUPERBIAS reference file crds_cache/references/jwst/niriss/jwst_niriss_superbias_0183.fits

2024-12-10 20:14:46,409 - stpipe.Detector1Pipeline.superbias - INFO - Step superbias done

2024-12-10 20:14:46,563 - stpipe.Detector1Pipeline.refpix - INFO - Step refpix running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:46,651 - stpipe.Detector1Pipeline.refpix - INFO - NIR full frame data

2024-12-10 20:14:46,652 - stpipe.Detector1Pipeline.refpix - INFO - The following parameters are valid for this mode:

2024-12-10 20:14:46,653 - stpipe.Detector1Pipeline.refpix - INFO - use_side_ref_pixels = True

2024-12-10 20:14:46,653 - stpipe.Detector1Pipeline.refpix - INFO - odd_even_columns = True

2024-12-10 20:14:46,653 - stpipe.Detector1Pipeline.refpix - INFO - side_smoothing_length = 11

2024-12-10 20:14:46,654 - stpipe.Detector1Pipeline.refpix - INFO - side_gain = 1.0

2024-12-10 20:14:46,654 - stpipe.Detector1Pipeline.refpix - INFO - The following parameter is not applicable and is ignored:

2024-12-10 20:14:46,655 - stpipe.Detector1Pipeline.refpix - INFO - odd_even_rows = False

2024-12-10 20:14:51,093 - stpipe.Detector1Pipeline.refpix - INFO - Step refpix done

2024-12-10 20:14:51,244 - stpipe.Detector1Pipeline.linearity - INFO - Step linearity running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:51,263 - stpipe.Detector1Pipeline.linearity - INFO - Using Linearity reference file crds_cache/references/jwst/niriss/jwst_niriss_linearity_0017.fits

2024-12-10 20:14:51,326 - stpipe.Detector1Pipeline.linearity - WARNING - Keyword LOWILLUM does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:51,327 - stpipe.Detector1Pipeline.linearity - WARNING - Keyword LOWRESP does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:51,331 - stpipe.Detector1Pipeline.linearity - WARNING - Keyword UNCERTAIN does not correspond to an existing DQ mnemonic, so will be ignored

2024-12-10 20:14:51,896 - stpipe.Detector1Pipeline.linearity - INFO - Step linearity done

2024-12-10 20:14:52,047 - stpipe.Detector1Pipeline.persistence - INFO - Step persistence running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:52,048 - stpipe.Detector1Pipeline.persistence - INFO - Step skipped.

2024-12-10 20:14:52,197 - stpipe.Detector1Pipeline.dark_current - INFO - Step dark_current running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:52,216 - stpipe.Detector1Pipeline.dark_current - INFO - Using DARK reference file crds_cache/references/jwst/niriss/jwst_niriss_dark_0169.fits

2024-12-10 20:14:52,534 - stpipe.Detector1Pipeline.dark_current - INFO - Science data nints=1, ngroups=16, nframes=4, groupgap=0

2024-12-10 20:14:52,535 - stpipe.Detector1Pipeline.dark_current - INFO - Dark data nints=1, ngroups=30, nframes=4, groupgap=0

2024-12-10 20:14:52,727 - stpipe.Detector1Pipeline.dark_current - INFO - Step dark_current done

2024-12-10 20:14:52,894 - stpipe.Detector1Pipeline.charge_migration - INFO - Step charge_migration running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:52,984 - stpipe.Detector1Pipeline.charge_migration - INFO - Using signal_threshold: 21864.00

2024-12-10 20:14:53,734 - stpipe.Detector1Pipeline.charge_migration - INFO - Step charge_migration done

2024-12-10 20:14:53,885 - stpipe.Detector1Pipeline.jump - INFO - Step jump running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:14:53,980 - stpipe.Detector1Pipeline.jump - INFO - CR rejection threshold = 8 sigma

2024-12-10 20:14:53,981 - stpipe.Detector1Pipeline.jump - INFO - Maximum cores to use = 1

2024-12-10 20:14:53,992 - stpipe.Detector1Pipeline.jump - INFO - Using GAIN reference file: crds_cache/references/jwst/niriss/jwst_niriss_gain_0006.fits

2024-12-10 20:14:54,016 - stpipe.Detector1Pipeline.jump - INFO - Using READNOISE reference file: crds_cache/references/jwst/niriss/jwst_niriss_readnoise_0005.fits

2024-12-10 20:14:54,221 - stpipe.Detector1Pipeline.jump - INFO - Executing two-point difference method

2024-12-10 20:15:04,536 - stpipe.Detector1Pipeline.jump - INFO - Flagging Snowballs

2024-12-10 20:15:05,924 - stpipe.Detector1Pipeline.jump - INFO - Total snowballs = 34

2024-12-10 20:15:05,925 - stpipe.Detector1Pipeline.jump - INFO - Total elapsed time = 11.7034 sec

2024-12-10 20:15:06,017 - stpipe.Detector1Pipeline.jump - INFO - The execution time in seconds: 12.122203

2024-12-10 20:15:06,020 - stpipe.Detector1Pipeline.jump - INFO - Step jump done

2024-12-10 20:15:06,182 - stpipe.Detector1Pipeline.clean_flicker_noise - INFO - Step clean_flicker_noise running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:15:06,182 - stpipe.Detector1Pipeline.clean_flicker_noise - INFO - Step skipped.

2024-12-10 20:15:06,324 - stpipe.Detector1Pipeline.ramp_fit - INFO - Step ramp_fit running with args (<RampModel(1, 16, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:15:06,431 - stpipe.Detector1Pipeline.ramp_fit - INFO - Using READNOISE reference file: crds_cache/references/jwst/niriss/jwst_niriss_readnoise_0005.fits

2024-12-10 20:15:06,432 - stpipe.Detector1Pipeline.ramp_fit - INFO - Using GAIN reference file: crds_cache/references/jwst/niriss/jwst_niriss_gain_0006.fits

2024-12-10 20:15:06,460 - stpipe.Detector1Pipeline.ramp_fit - INFO - Using algorithm = OLS_C

2024-12-10 20:15:06,460 - stpipe.Detector1Pipeline.ramp_fit - INFO - Using weighting = optimal

2024-12-10 20:15:07,034 - stpipe.Detector1Pipeline.ramp_fit - INFO - Number of multiprocessing slices: 1

2024-12-10 20:15:12,649 - stpipe.Detector1Pipeline.ramp_fit - INFO - Ramp Fitting C Time: 5.611459493637085

2024-12-10 20:15:12,708 - stpipe.Detector1Pipeline.ramp_fit - INFO - Step ramp_fit done

2024-12-10 20:15:12,879 - stpipe.Detector1Pipeline.gain_scale - INFO - Step gain_scale running with args (<ImageModel(2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:15:12,907 - stpipe.Detector1Pipeline.gain_scale - INFO - GAINFACT not found in gain reference file

2024-12-10 20:15:12,908 - stpipe.Detector1Pipeline.gain_scale - INFO - Step will be skipped

2024-12-10 20:15:12,910 - stpipe.Detector1Pipeline.gain_scale - INFO - Step gain_scale done

2024-12-10 20:15:13,078 - stpipe.Detector1Pipeline.gain_scale - INFO - Step gain_scale running with args (<CubeModel(1, 2048, 2048) from jw01475006001_02201_00001_nis_uncal.fits>,).

2024-12-10 20:15:13,108 - stpipe.Detector1Pipeline.gain_scale - INFO - GAINFACT not found in gain reference file

2024-12-10 20:15:13,109 - stpipe.Detector1Pipeline.gain_scale - INFO - Step will be skipped

2024-12-10 20:15:13,111 - stpipe.Detector1Pipeline.gain_scale - INFO - Step gain_scale done

2024-12-10 20:15:13,265 - stpipe.Detector1Pipeline - INFO - Saved model in detector1/jw01475006001_02201_00001_nis_rateints.fits

2024-12-10 20:15:13,266 - stpipe.Detector1Pipeline - INFO - ... ending calwebb_detector1

2024-12-10 20:15:13,267 - stpipe.Detector1Pipeline - INFO - Results used CRDS context: jwst_1303.pmap

2024-12-10 20:15:13,411 - stpipe.Detector1Pipeline - INFO - Saved model in detector1/jw01475006001_02201_00001_nis_rate.fits

2024-12-10 20:15:13,411 - stpipe.Detector1Pipeline - INFO - Step Detector1Pipeline done

2024-12-10 20:15:13,412 - stpipe - INFO - Results used jwst version: 1.16.1

2024-12-10 20:15:13,578 - CRDS - ERROR - Error determining best reference for 'pars-darkcurrentstep' = No match found.

2024-12-10 20:15:13,581 - stpipe - INFO - PARS-CHARGEMIGRATIONSTEP parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-chargemigrationstep_0018.asdf

2024-12-10 20:15:13,590 - stpipe - INFO - PARS-JUMPSTEP parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-jumpstep_0087.asdf

2024-12-10 20:15:13,600 - CRDS - ERROR - Error determining best reference for 'pars-cleanflickernoisestep' = Unknown reference type 'pars-cleanflickernoisestep'

2024-12-10 20:15:13,603 - stpipe - INFO - PARS-DETECTOR1PIPELINE parameters found: crds_cache/references/jwst/niriss/jwst_niriss_pars-detector1pipeline_0001.asdf

2024-12-10 20:15:13,620 - stpipe.Detector1Pipeline - INFO - Detector1Pipeline instance created.

2024-12-10 20:15:13,622 - stpipe.Detector1Pipeline.group_scale - INFO - GroupScaleStep instance created.

2024-12-10 20:15:13,622 - stpipe.Detector1Pipeline.dq_init - INFO - DQInitStep instance created.

2024-12-10 20:15:13,624 - stpipe.Detector1Pipeline.emicorr - INFO - EmiCorrStep instance created.

2024-12-10 20:15:13,625 - stpipe.Detector1Pipeline.saturation - INFO - SaturationStep instance created.

2024-12-10 20:15:13,626 - stpipe.Detector1Pipeline.ipc - INFO - IPCStep instance created.

2024-12-10 20:15:13,627 - stpipe.Detector1Pipeline.superbias - INFO - SuperBiasStep instance created.

2024-12-10 20:15:13,628 - stpipe.Detector1Pipeline.refpix - INFO - RefPixStep instance created.

2024-12-10 20:15:13,629 - stpipe.Detector1Pipeline.rscd - INFO - RscdStep instance created.

2024-12-10 20:15:13,630 - stpipe.Detector1Pipeline.firstframe - INFO - FirstFrameStep instance created.

2024-12-10 20:15:13,631 - stpipe.Detector1Pipeline.lastframe - INFO - LastFrameStep instance created.

2024-12-10 20:15:13,631 - stpipe.Detector1Pipeline.linearity - INFO - LinearityStep instance created.

2024-12-10 20:15:13,633 - stpipe.Detector1Pipeline.dark_current - INFO - DarkCurrentStep instance created.

2024-12-10 20:15:13,634 - stpipe.Detector1Pipeline.reset - INFO - ResetStep instance created.

2024-12-10 20:15:13,635 - stpipe.Detector1Pipeline.persistence - INFO - PersistenceStep instance created.

2024-12-10 20:15:13,636 - stpipe.Detector1Pipeline.charge_migration - INFO - ChargeMigrationStep instance created.

2024-12-10 20:15:13,637 - stpipe.Detector1Pipeline.jump - INFO - JumpStep instance created.

2024-12-10 20:15:13,639 - stpipe.Detector1Pipeline.clean_flicker_noise - INFO - CleanFlickerNoiseStep instance created.

2024-12-10 20:15:13,640 - stpipe.Detector1Pipeline.ramp_fit - INFO - RampFitStep instance created.

2024-12-10 20:15:13,641 - stpipe.Detector1Pipeline.gain_scale - INFO - GainScaleStep instance created.

2024-12-10 20:15:13,798 - stpipe.Detector1Pipeline - INFO - Step Detector1Pipeline running with args ('1475_f150w/jw01475006001_02201_00002_nis_uncal.fits',).

2024-12-10 20:15:13,820 - stpipe.Detector1Pipeline - INFO - Step Detector1Pipeline parameters are:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: detector1/

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: True

skip: False

suffix: None

search_output_file: True

input_dir: ''

save_calibrated_ramp: False

steps:

group_scale:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dq_init:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

emicorr:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

save_intermediate_results: False

user_supplied_reffile: None

nints_to_phase: None

nbins: None

scale_reference: True

onthefly_corr_freq: None

use_n_cycles: 3

saturation:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

n_pix_grow_sat: 1

use_readpatt: True

ipc:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

superbias:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

refpix:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

odd_even_columns: True

use_side_ref_pixels: True

side_smoothing_length: 11

side_gain: 1.0

odd_even_rows: True

ovr_corr_mitigation_ftr: 3.0

preserve_irs2_refpix: False

irs2_mean_subtraction: False

rscd:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

type: baseline

firstframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

lastframe:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

linearity:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_current:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

dark_output: None

average_dark_current: None

reset:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

persistence:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: True

suffix: None

search_output_file: True

input_dir: ''

input_trapsfilled: ''

flag_pers_cutoff: 40.0

save_persistence: False

save_trapsfilled: True

modify_input: False

charge_migration:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

signal_threshold: 21864.0

jump:

pre_hooks: []

post_hooks: []

output_file: None

output_dir: None

output_ext: .fits

output_use_model: False

output_use_index: True

save_results: False

skip: False

suffix: None

search_output_file: True

input_dir: ''

rejection_threshold: 8.0

three_group_rejection_threshold: 6.0

four_group_rejection_threshold: 5.0

maximum_cores: '1'

flag_4_neighbors: False

max_jump_to_flag_neighbors: 200.0

min_jump_to_flag_neighbors: 10.0

after_jump_flag_dn1: 1000

after_jump_flag_time1: 90

after_jump_flag_dn2: 0

after_jump_flag_time2: 0

expand_large_events: True

min_sat_area: 5

min_jump_area: 15.0

expand_factor: 1.75

use_ellipses: False

sat_required_snowball: True

min_sat_radius_extend: 5.0

sat_expand: 0

edge_size: 20

mask_snowball_core_next_int: True

snowball_time_masked_next_int: 4000

find_showers: False

extend_snr_threshold: 1.2

extend_min_area: 90

extend_inner_radius: 1.0

extend_outer_radius: 2.6

extend_ellipse_expand_ratio: 1.1

time_masked_after_shower: 15.0

min_diffs_single_pass: 10

max_extended_radius: 100

minimum_groups: 3

minimum_sigclip_groups: 100

only_use_ints: True

clean_flicker_noise:

pre_hooks: []